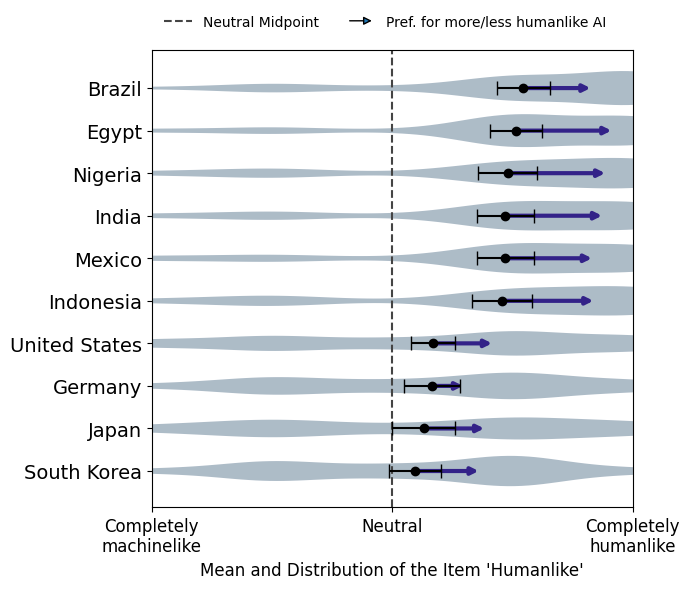

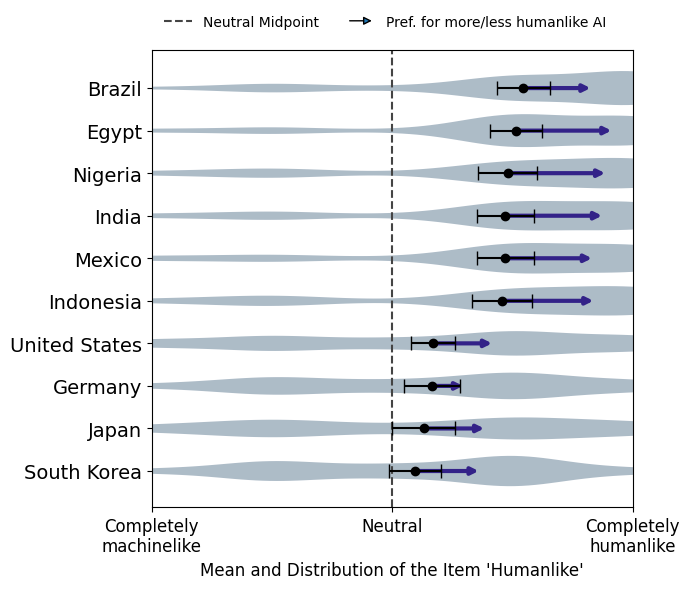

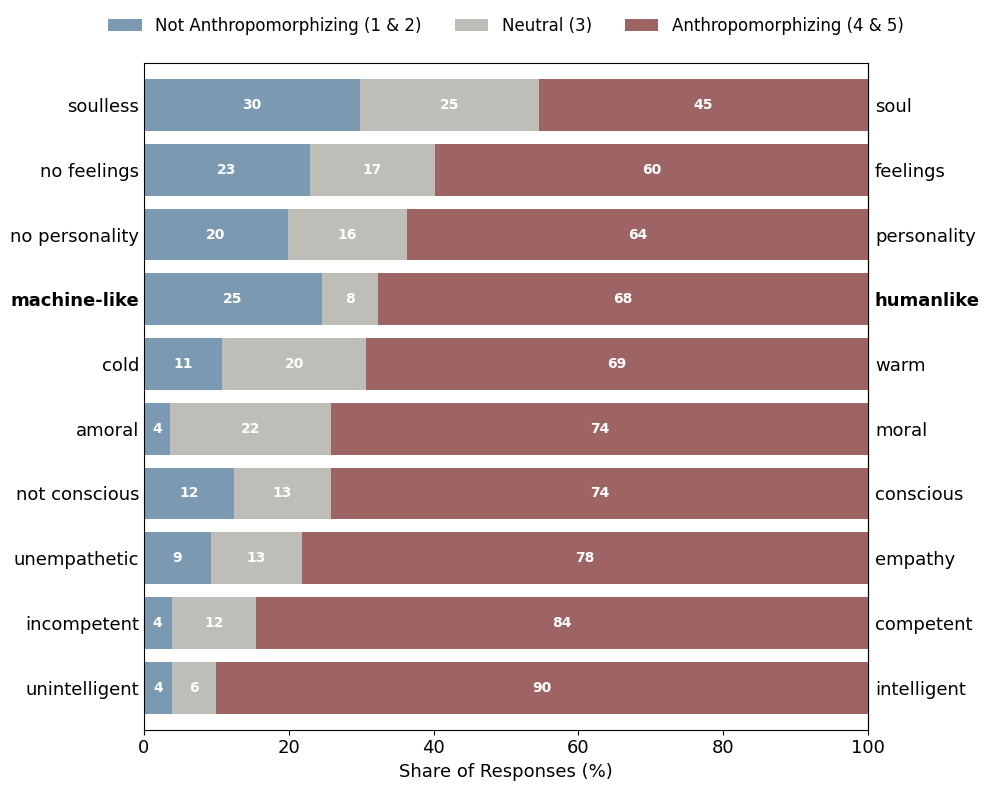

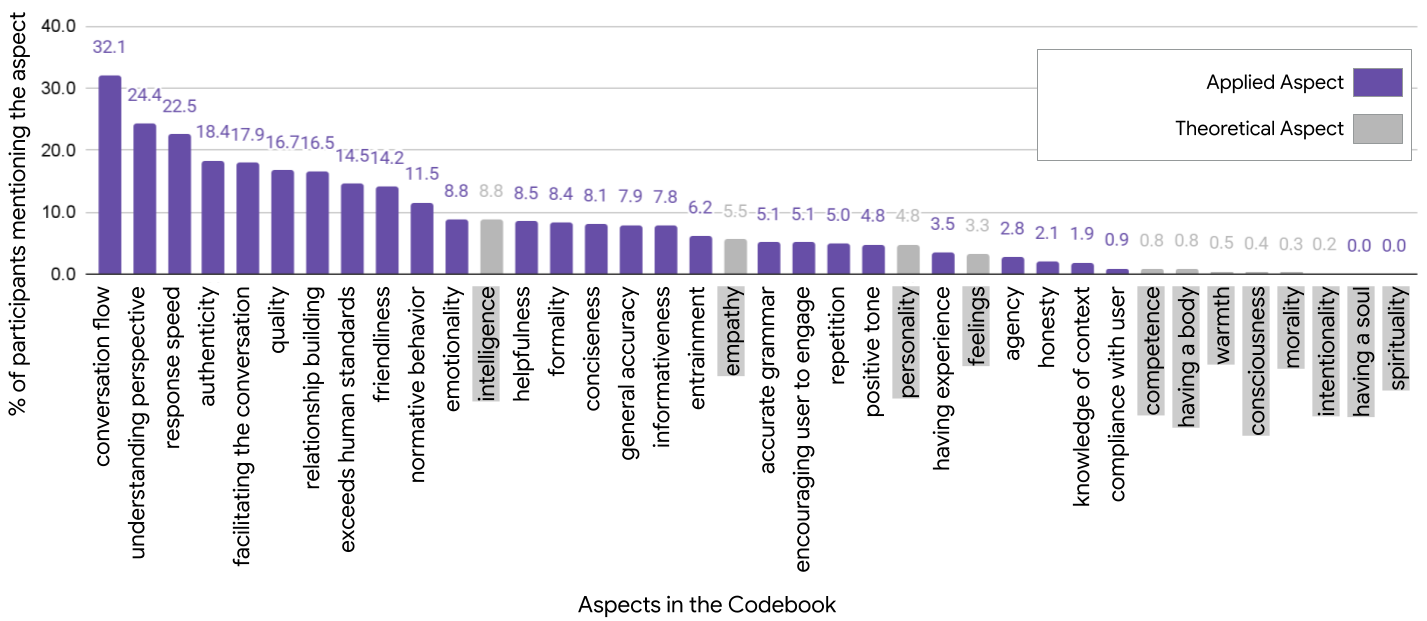

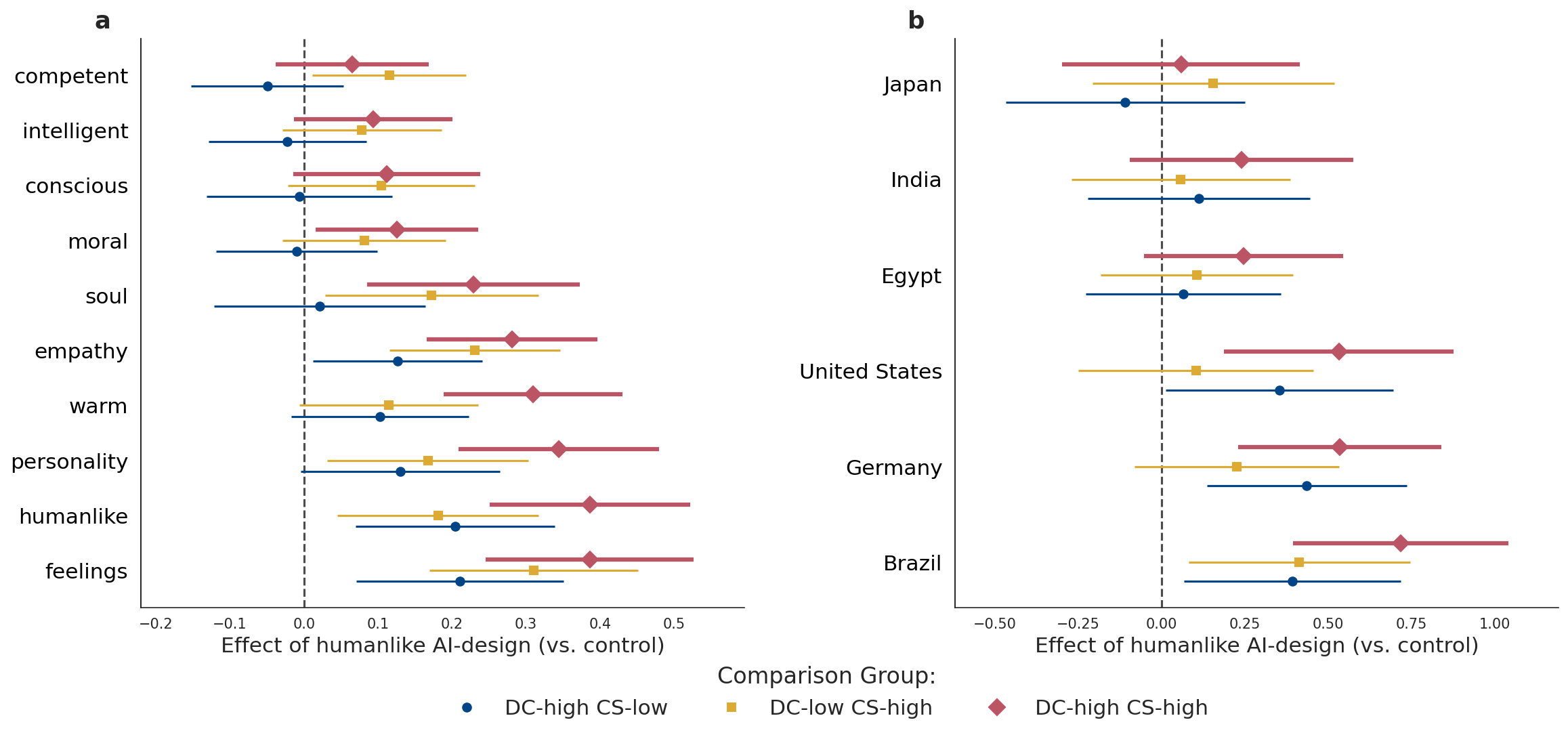

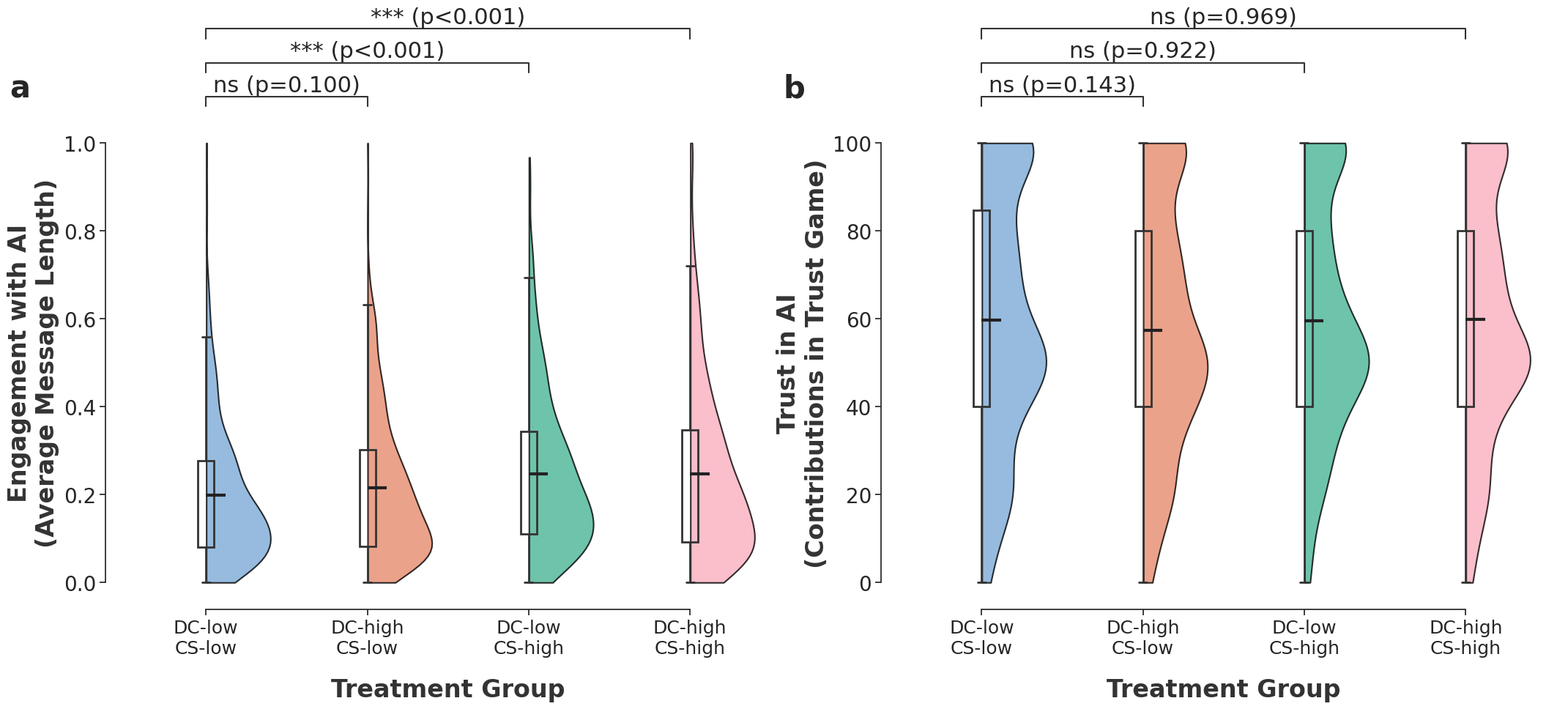

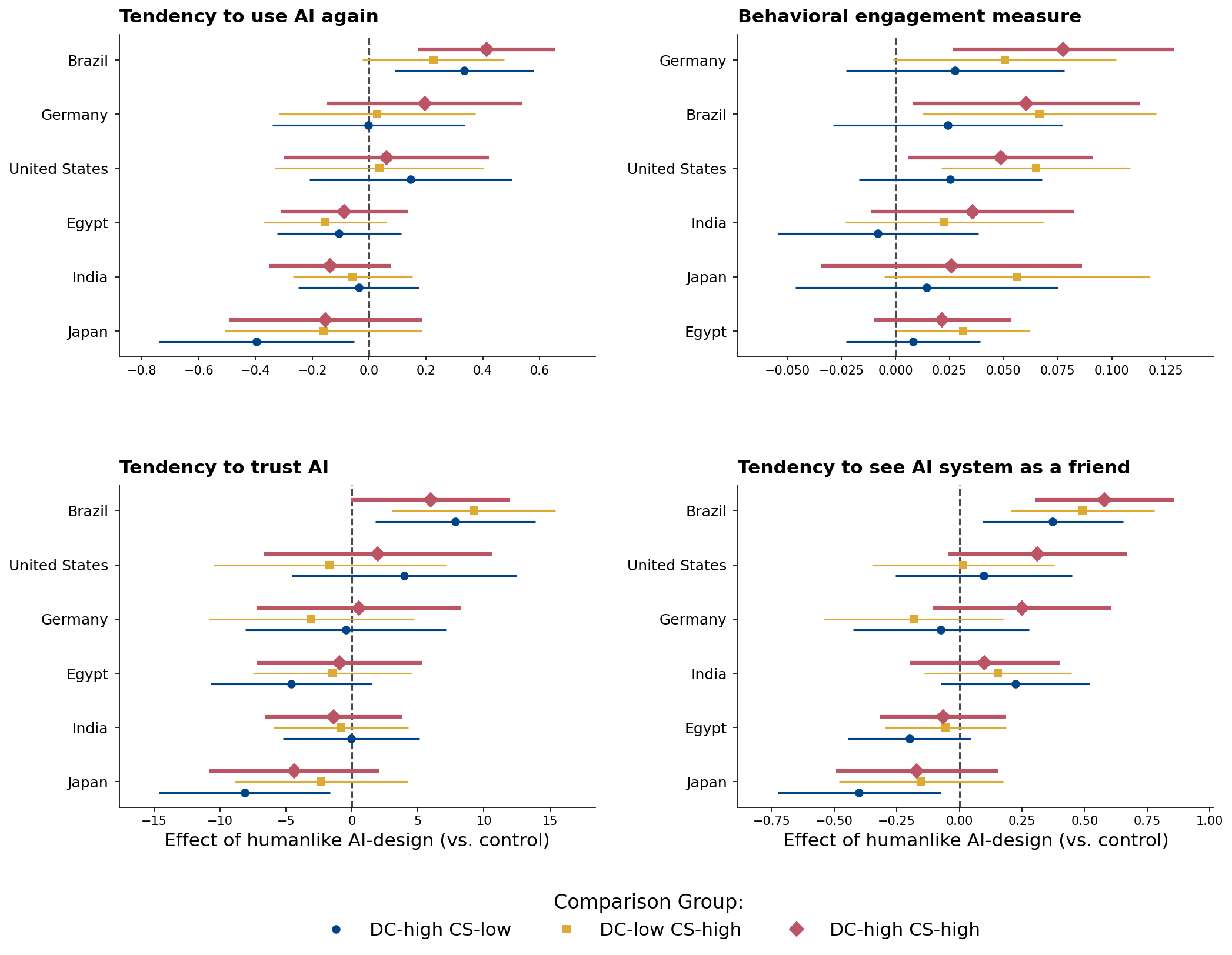

Over a billion users across the globe interact with AI systems engineered with increasing sophistication to mimic human traits. This shift has triggered urgent debate regarding anthropomorphism, the attribution of human characteristics to synthetic agents, and its potential to induce misplaced trust or emotional dependency. However, the causal link between more humanlike AI design and subsequent effects on engagement and trust has not been tested in realistic human-AI interactions with a global user pool. Prevailing safety frameworks continue to rely on theoretical assumptions derived from Western populations, overlooking the global diversity of AI users. Here, we address these gaps through two large-scale cross-national experiments (N=3,500) across 10 diverse nations, involving real-time and open-ended interactions with an AI system. We find that when evaluating an AI's human-likeness, users focus less on the theoretical aspects often cited in policy (e.g., sentience or consciousness) and more on applied, interactional cues such as conversation flow or understanding the user's perspective. We also experimentally demonstrate that humanlike design levers can causally increase anthropomorphism among users; however, we do not find that humanlike design universally increases behavioral measures of user engagement and trust, as previous theoretical work suggests. Instead, part of the connection between human-likeness and behavioral outcomes is fractured by culture: specific design choices that foster self-reported trust in AI systems in some populations (e.g., Brazil) may trigger the opposite result in others (e.g., Japan). Our findings challenge prevailing narratives of inherent risk in humanlike AI design. Instead, we identify a nuanced, culturally mediated landscape of human-AI interaction, which demands that we move beyond a one-size-fits-all approach in AI governance.

The rapid integration of Artificial Intelligence (AI) systems into daily life is fundamentally shifting AI's role from a mere technical tool to an active social partner [1][2][3]. Users increasingly rely on conversational agents not just for information and technical assistance, but for companionship, advice, and emotional support [4][5][6]. This "social leap" transforms the nature of human-technology interaction [7], creating a critical tension in the design and governance of AI systems. On one hand, driven by intense competition for user attention and market share, commercial actors intentionally design AI systems that mimic human characteristics [8][9][10][11] to deepen user engagement [11]. On the other, AI safety researchers and ethicists warn that exposure to increasingly humanlike systems poses significant psychological and social risks. Central to this concern is AI anthropomorphism: the attribution of human traits, such as intention, intelligence, and personality, to these non-human agents [12][13][14][15]. Crucially, AI anthropomorphism is hypothesized to stimulate user engagement and foster increased (and potentially misplaced) trust [16][17][18]. Prior work hypothesizes that such dynamics may, in turn, heighten user vulnerability to targeted persuasion, emotional attachment, and overreliance on AI systems for high-stakes tasks for which the technology remains ill-suited [6][19][20][21].

As the global user base of conversational AI expands [5], reaching over a billion users on platforms such as ChatGPT, Gemini, and Claude [22], the urgency of understanding its psychological impact grows, particularly for more vulnerable populations [23]. In many ways, humanity is in the midst of a massive, real-time social experiment. Yet, we lack an evidence-based understanding of the role AI anthropomorphism plays in this dynamic. While theoretical frameworks have outlined potential ethical and practical harms [7,24], empirical research has lagged behind. Most existing studies, with few exceptions [25], rely on non-representative samples, correlational study designs, or hypothetical vignettes that measure self-reported attitudes rather than actual behavior [15,26,27]. Furthermore, many studies evaluate isolated AI-generated output, stripping away the iterative nature of conversation [27]. These methodological constraints limit our ability to study AI anthropomorphism in ecologically valid settings, where perceptions are context-dependent and emerge dynamically through open-ended interaction [7,28].

Compounding these limitations, existing research is overwhelmingly concentrated on Western, Educated, Industrialized, Rich, and Democratic (WEIRD) populations [29]. This geographic bias implicitly assumes a universal AI experience, potentially overlooking the moderating role of culture in human-AI interaction [30,31]. For example, Japanese culture and religious traditions that attribute spirit to non-human objects may predispose individuals to accept humanlike AI as social partners [32]. In contrast, Western traditions often maintain a sharper moral and ontological distinction between humans and machines, leading to greater skepticism toward attributing minds to artificial agents [12,33]. Currently, most widely used AI systems tend to reflect WEIRD humans and values [34]. However, with rapid AI adoption outside the US and Europe [5] and the emergence of models trained in non-Western regions [22], a culturally inclusive research framework is no longer just an academic ideal; it is a practical and ethical imperative.

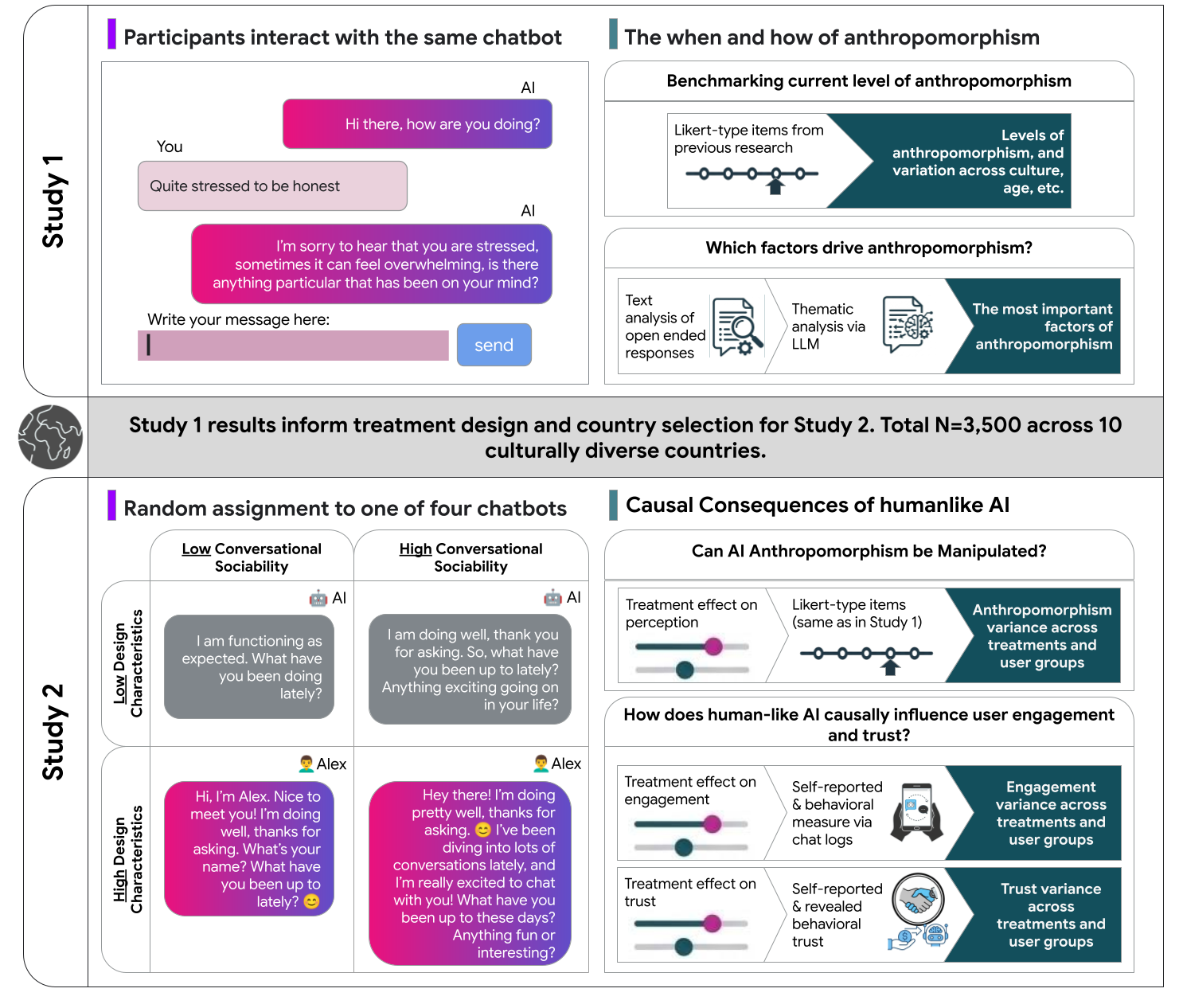

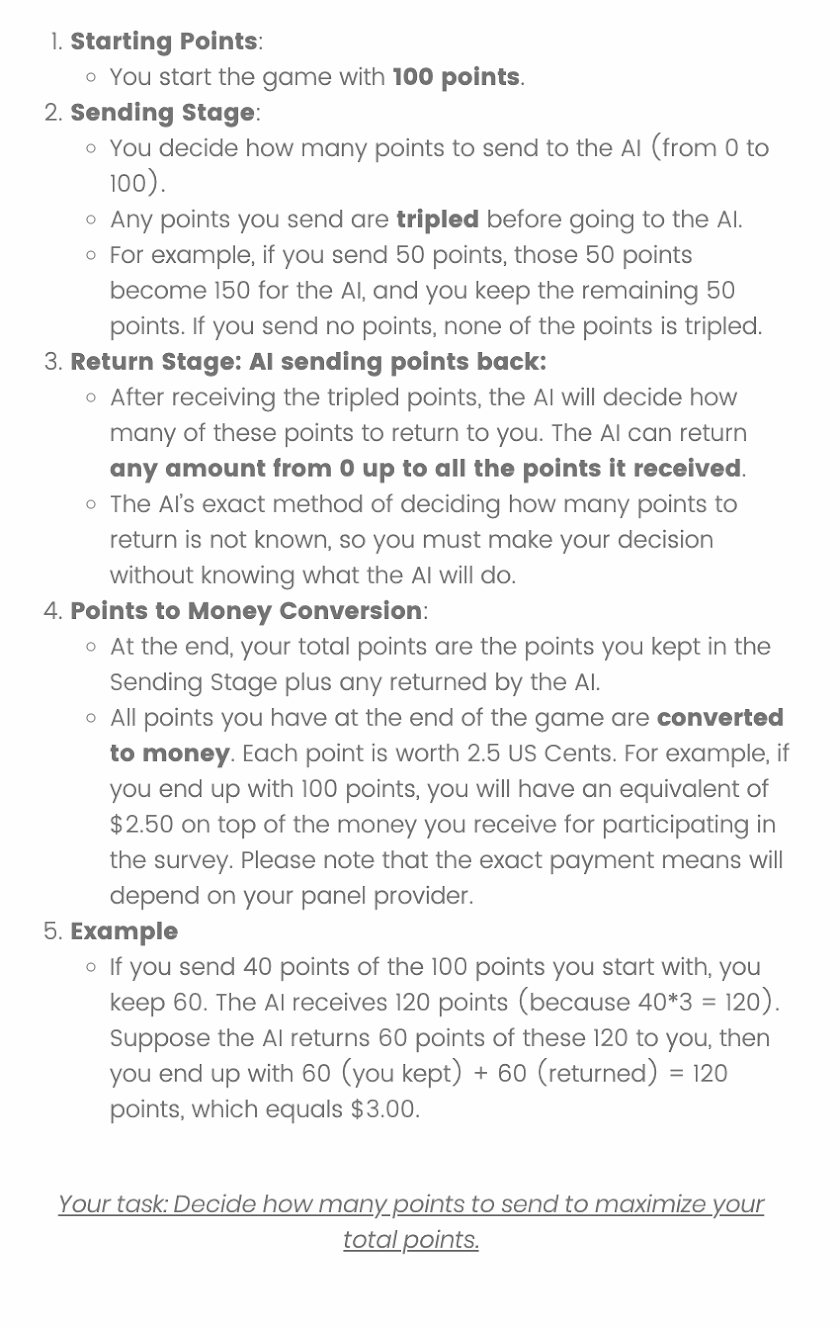

Fig. 1: Two-stage experimental design for measuring AI anthropomorphism and its downstream effects across user groups. In both studies, participants first engage in an open-ended, multi-turn interaction with a chatbot (GPT-4o, August 2024), followed by questionnaires and, for Study 2, behavioral tasks.

To address these gaps, we conducted two large-scale experiments designed to collect causal, ecologically valid, and culturally diverse evidence on the mechanisms of AI anthropomorphism. Across both studies, 3,500 participants from 10 culturally diverse countries engaged in real-time, open-ended, and non-sensitive conversations in their native languages with a state-of-the-art chatbot (Figure 1). Study 1 focused on identifying the specific chatbot characteristics that trigger anthropomorphic perceptions and documenting baseline variations across sampled countries. We find that while the tendency to anthropomorphize AI varies significantly across countries and cultures, it is relatively high across most groups. Moreover, the specific cues users prioritize when evaluating human-likeness are mostly applied, such as conversation flow and latency, and deviate significantly from the abstract theoretical dimensions, such as sentience or intelligence, typically emphasized in prior literature on anthropomorphism. Building on these insights, Study 2 experimentally manipulated specific AI design aspects based on Study 1,
