📝 Original Info

Title: AncientBench: Towards Comprehensive Evaluation on Excavated and Transmitted Chinese Corpora
ArXiv ID: 2512.17756
Date: 2025-12-19
Authors: Zhihan Zhou, Daqian Shi, Rui Song, Lida Shi, Xiaolei Diao, Hao Xu

📝 Abstract

Comprehension of ancient texts plays an important role in archaeology and in understanding Chinese history and civilization. The rapid development of large language models calls for benchmarks that can evaluate their comprehension of ancient characters. Existing Chinese benchmarks mostly target modern Chinese and transmitted documents in ancient Chinese, leaving excavated documents in ancient Chinese uncovered. To meet this need, we propose AncientBench, which aims to evaluate the comprehension of ancient characters, especially in the scenario of excavated documents. AncientBench is divided into four dimensions, corresponding to the four competencies of ancient character comprehension: glyph comprehension, pronunciation comprehension, meaning comprehension, and contextual comprehension. The benchmark also contains ten tasks, including radical, phonetic radical, homophone, cloze, translation, and more, providing a comprehensive framework for evaluation. We convened archaeological researchers to conduct experimental evaluations, proposed an ancient-text model as a baseline, and conducted extensive experiments on the current best-performing large language models. The experimental results reveal the great potential of large language models in ancient-text scenarios as well as their remaining gap with humans. Our research aims to promote the development and application of large language models in the fields of archaeology and ancient Chinese language.


📄 Full Content

AncientBench: Towards Comprehensive Evaluation on Excavated and Transmitted Chinese Corpora

Zhihan Zhou1,2, Daqian Shi2,3, Rui Song1,2, Lida Shi2,4, Xiaolei Diao*1,2,5, Hao Xu*1,2

1College of Computer Science and Technology, Jilin University

2Key Laboratory of Ancient Chinese Script, Culture Relics and Artificial Intelligence, Jilin University

3Digital Environment Research Institute, Queen Mary University of London

4School of Artificial Intelligence, Jilin University

5Department of Information Engineering and Computer Science, University of Trento

{zhzhou25, shild21}@mails.jlu.edu.cn, d.shi@qmul.ac.uk, {songrui, xuhao}@jlu.edu.cn, xiaolei.diao@unitn.it


Introduction

As one of the most widely spoken languages in the world, Chinese has received extensive attention in natural language processing (NLP) in recent years. As an important part of the Chinese language, ancient Chinese carries extremely rich historical and cultural information, and its study is of great significance for tracing Chinese history, protecting cultural heritage, and developing historical linguistics. However, traditional research methods for ancient Chinese depend heavily on the researcher's memory and linguistic intuition; they are often inefficient and struggle to process large-scale corpora systematically, which limits the breadth and depth of research.

Figure 1: Comparison of excavated documents with transmitted documents. (a) Bamboo Book of Chu (excavated). (b) Silk Books (excavated). (c) Book of Poetry (transmitted).

*Corresponding authors

Copyright © 2026, Association for the Advancement of Artificial Intelligence (www.aaai.org). All rights reserved.

With the rapid development of artificial intelligence technology, especially large language models (LLMs), ancient Chinese research is gradually moving towards a new data-driven, model-supported paradigm. LLMs have demonstrated excellent generalization ability in natural language understanding and generation, and have shown great potential in tasks such as text analysis and language structure modeling. To evaluate the capabilities of these models systematically, researchers have constructed a series of standardized evaluation benchmarks, such as MMLU (Hendrycks et al. 2021a), BIG-bench (Srivastava et al. 2022), and HELM (Bommasani, Liang, and Lee 2023), which have become important tools for measuring the general language intelligence of models. This technological trend offers new opportunities for ancient Chinese processing, especially for understanding difficult ancient texts.

arXiv:2512.17756v1 [cs.CL] 19 Dec 2025

In the Chinese context, a series of benchmarks tailored to the linguistic characteristics and application requirements of Chinese have emerged to evaluate Chinese LLMs, such as CLUE (Xu et al. 2020a), CMMLU (Li et al. 2024), and MMCU (Zeng 2023). Most of these benchmarks cover a wide range of Chinese-language capabilities, such as language comprehension, logical reasoning, and instruction following, and have promoted the evaluation and iterative optimization of Chinese language models. In recent years, some datasets related to ancient Chinese have also appeared, e.g., ACLUE (Zhang and Li 2023) and WYWEB (Zhou et al. 2023). However, tasks related to ancient Chinese are scarce in such benchmarks, are mostly limited to syntax parsing, and lack unified evaluation criteria, making

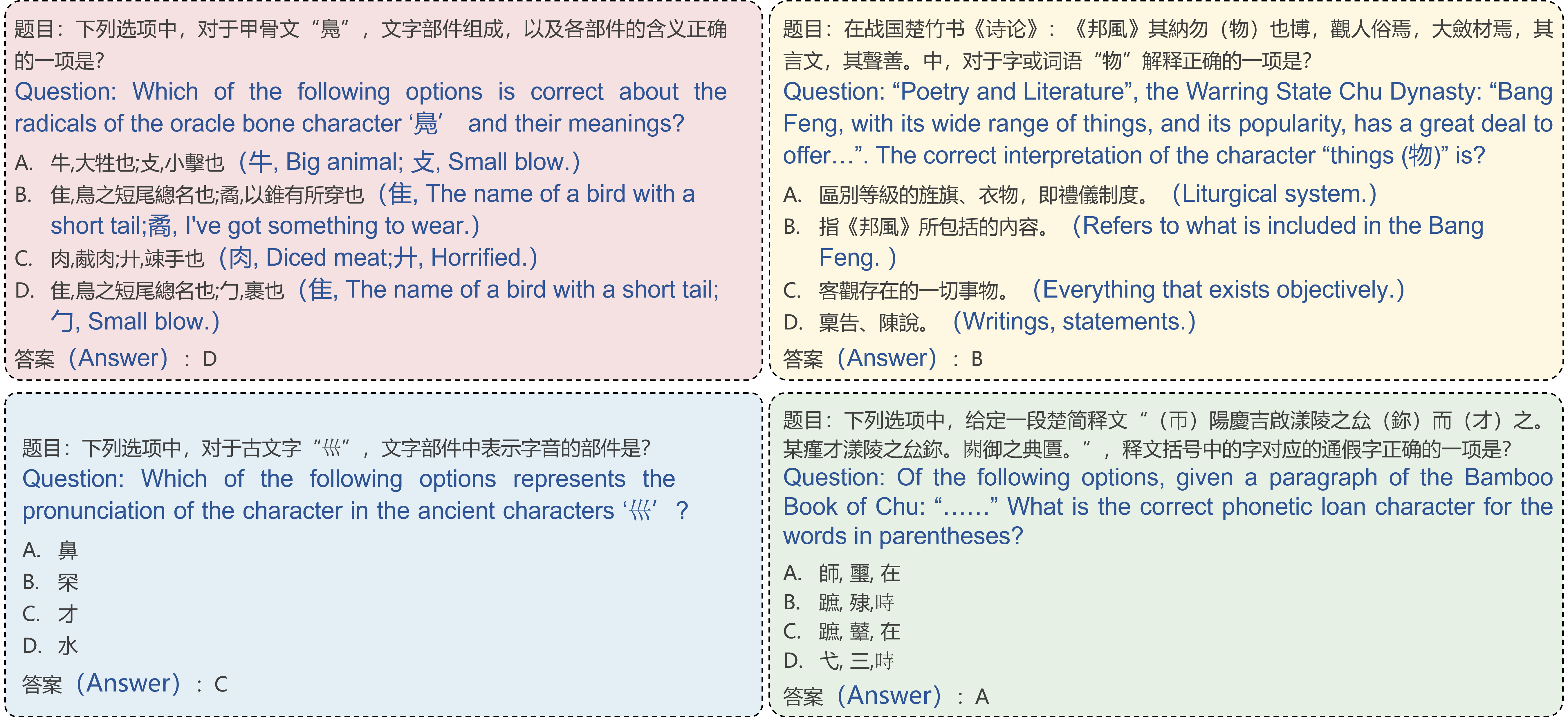


Reference This content is AI-processed based on open access ArXiv data.