Prescriptive Process Monitoring (PresPM) recommends interventions during business processes to optimize key performance indicators (KPIs). In realistic settings, interventions are rarely isolated: organizations need to align sequences of interventions to jointly steer the outcome of a case. Existing PresPM approaches fall short in this respect. Many focus on a single intervention decision, while others treat multiple interventions independently, ignoring how they interact over time. Methods that do address these dependencies rely on either simulation or data augmentation to approximate the process and train a Reinforcement Learning (RL) agent, which can create a reality gap and introduce bias. We introduce SCOPE, a PresPM approach that learns aligned sequential intervention recommendations. SCOPE employs backward induction to estimate the effect of each candidate intervention action, propagating its impact from the final decision point back to the first. By leveraging causal learners, our method can use observational data directly, unlike methods that require constructing process approximations for reinforcement learning. Experiments on both an existing synthetic dataset and a new semi-synthetic dataset show that SCOPE consistently outperforms state-of-the-art PresPM techniques in optimizing the KPI. The novel semi-synthetic setup, based on a real-life event log, is provided as a reusable benchmark for future work on sequential PresPM.

PresPM uses machine learning to provide case-specific recommendations at different decision points during the execution of business processes. These recommendations concern interventions, such as managerial escalations or customer communications, that aim to improve KPIs, for example, throughput time or cost efficiency. PresPM holds the potential to move organizations from merely describing and predicting process behavior towards actively steering process executions [16]. In this paper, we use the terms intervention for any controllable action taken on an ongoing case, and intervention recommendation for the action suggested by a PresPM method at a decision point.

In many processes, intervention decisions are not isolated. Typically, a process contains multiple, interdependent decision points that jointly determine the outcome of a case. The effect of an earlier intervention depends on which interventions will be applied later in the same case. Optimizing intervention decisions one by one is therefore insufficient, because actions that look beneficial locally can undermine overall KPI performance. For example, in a marketing process aimed at maximizing revenue, the decision to offer a client a discount may depend on an earlier choice to send a promotional email and on the client’s response to that email. Together, this sequence of decisions shapes the revenue. Optimizing each decision independently, without considering future decisions, might suggest that sending a promotional email is only moderately valuable for some clients, even though the email could be highly effective when followed by a discount. Methods for PresPM thus need to reason over sequences of decisions and their combined effect on the final KPI.
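The marketing example can be made concrete with a small numerical sketch. All revenue figures and the historical discount rate below are hypothetical values chosen purely for illustration; they do not come from any dataset used in this paper. The sketch compares the apparent value of the email when later decisions follow logged behavior with its value when the later discount decision is chosen optimally.

```python
# Hypothetical expected revenue per client, indexed by
# (send_email, offer_discount). Illustrative numbers only.
EXPECTED_REVENUE = {
    (0, 0): 100.0,
    (0, 1): 95.0,
    (1, 0): 102.0,
    (1, 1): 130.0,
}

# Assumed share of historical cases in which a discount was offered.
P_DISCOUNT_LOGGED = 0.1


def value_under_logged_behavior(send_email):
    """Value of the email decision if the later discount decision
    simply follows the (assumed) historical frequency."""
    p = P_DISCOUNT_LOGGED
    return ((1 - p) * EXPECTED_REVENUE[(send_email, 0)]
            + p * EXPECTED_REVENUE[(send_email, 1)])


def value_under_aligned_decisions(send_email):
    """Value of the email decision if the later discount decision
    is chosen to maximize revenue."""
    return max(EXPECTED_REVENUE[(send_email, d)] for d in (0, 1))


# Apparent gain from emailing when the future is ignored.
myopic_gain = value_under_logged_behavior(1) - value_under_logged_behavior(0)
# Gain from emailing when the discount decision is aligned with it.
sequential_gain = (value_under_aligned_decisions(1)
                   - value_under_aligned_decisions(0))

print(f"gain ignoring future decisions: {myopic_gain:.1f}")
print(f"gain with aligned decisions:    {sequential_gain:.1f}")
```

Under these assumed numbers, the email appears to add roughly 5 units of revenue when the discount decision is left to historical behavior, but 30 units when it is followed by the best discount decision.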

Most existing PresPM approaches do not yet offer such sequential support. First, many methods address only a single intervention scenario, even when multiple opportunities to intervene exist [3,4,9,23,24,25,26]. These approaches may improve performance for a single intervention scenario, but they do not coordinate multiple decisions over the full case. Second, some approaches handle sequential decisions but optimize each decision point in isolation. They focus on the immediate effect of the next intervention, for instance, on the remaining processing time, without explicitly aligning interventions across decision points. This can still lead to suboptimal end-to-end outcomes with respect to the final KPI [18]. Third, other methods for sequential intervention recommendations rely on process approximations, such as Markov decision processes (MDPs) or data augmentation [1,5], to train an RL agent. The resulting policies inherit any misspecification in these approximations, which may lead to biased or underperforming recommendations in practice.

To address these limitations, this paper makes two key contributions:

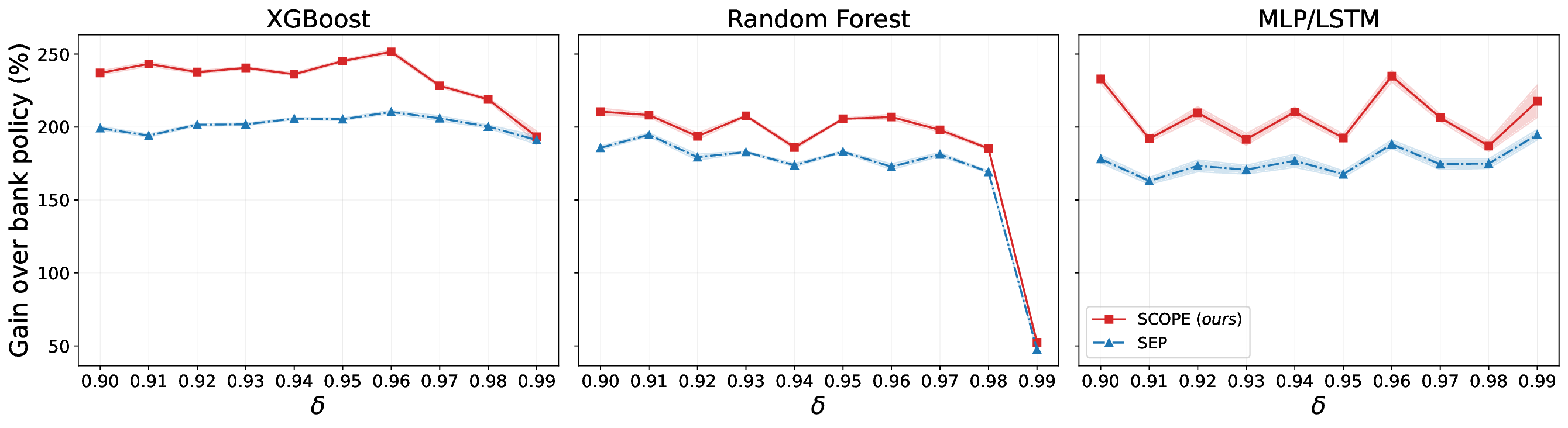

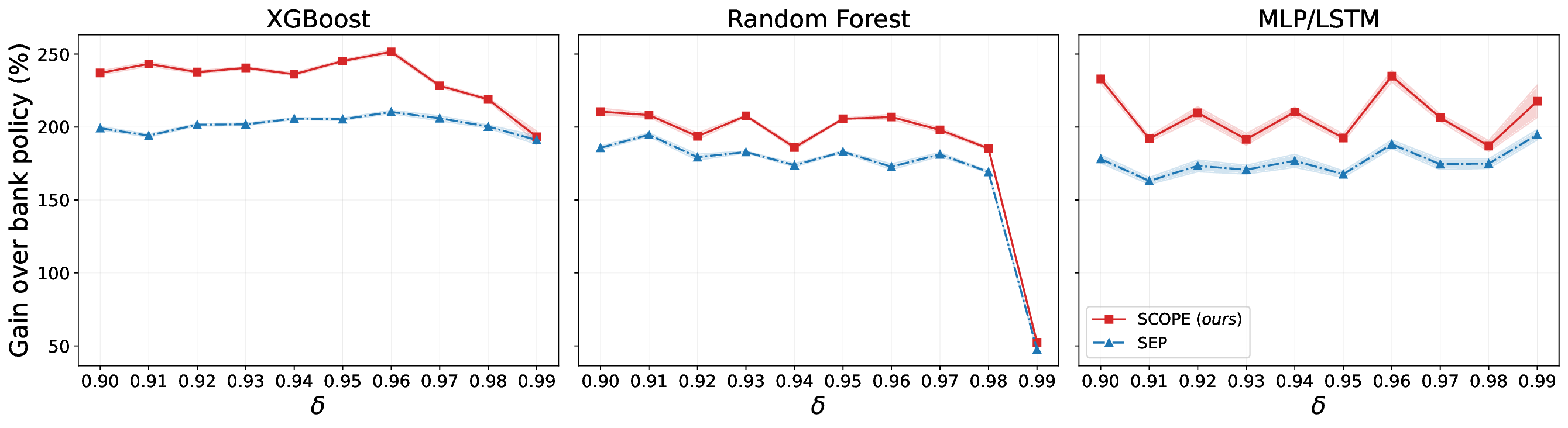

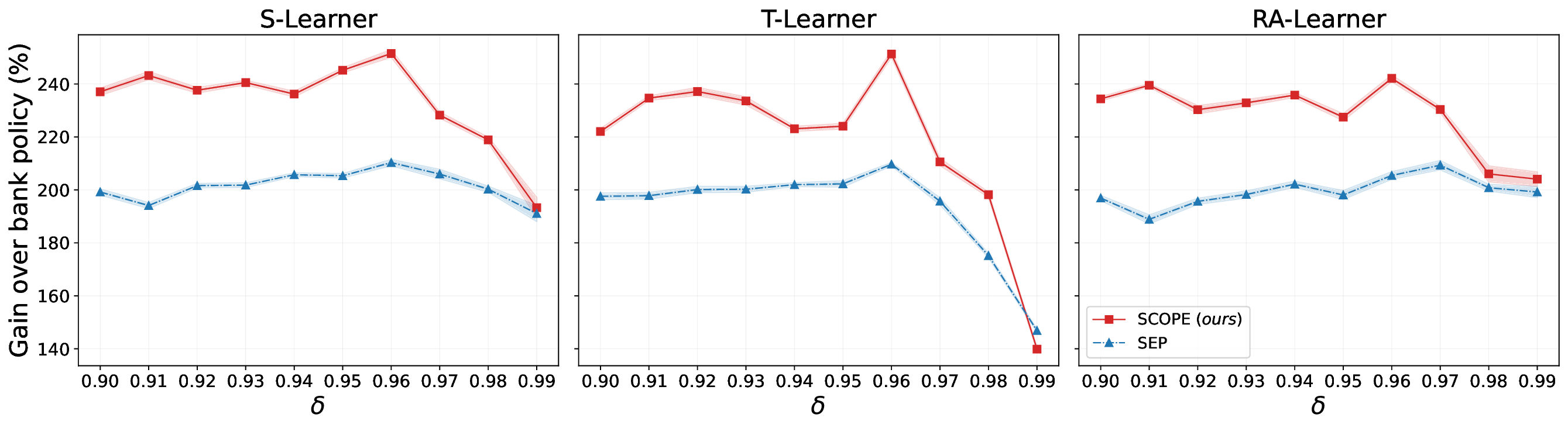

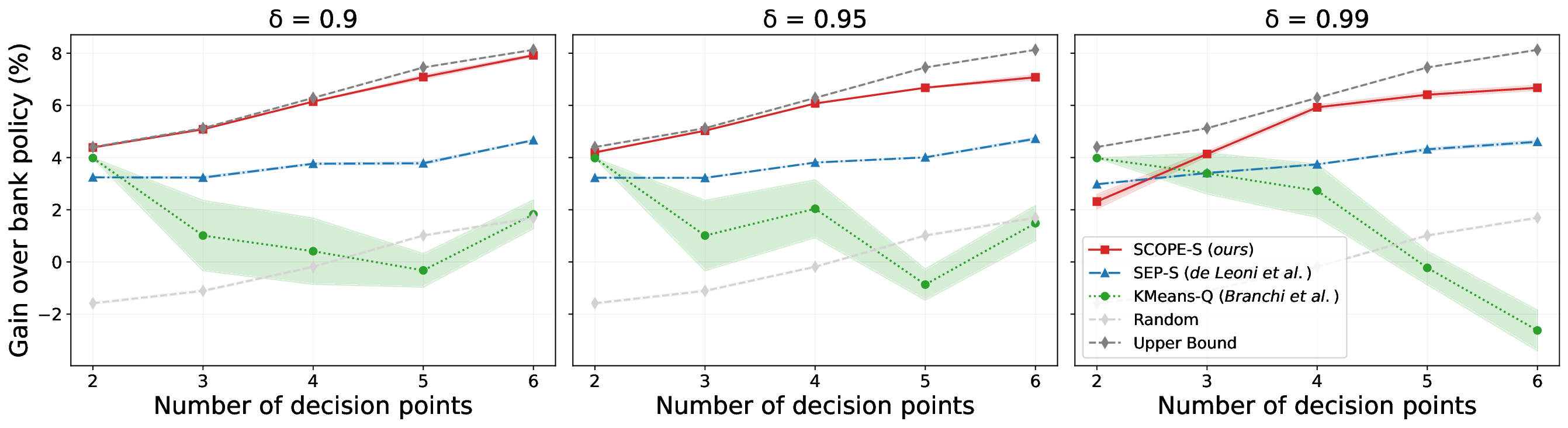

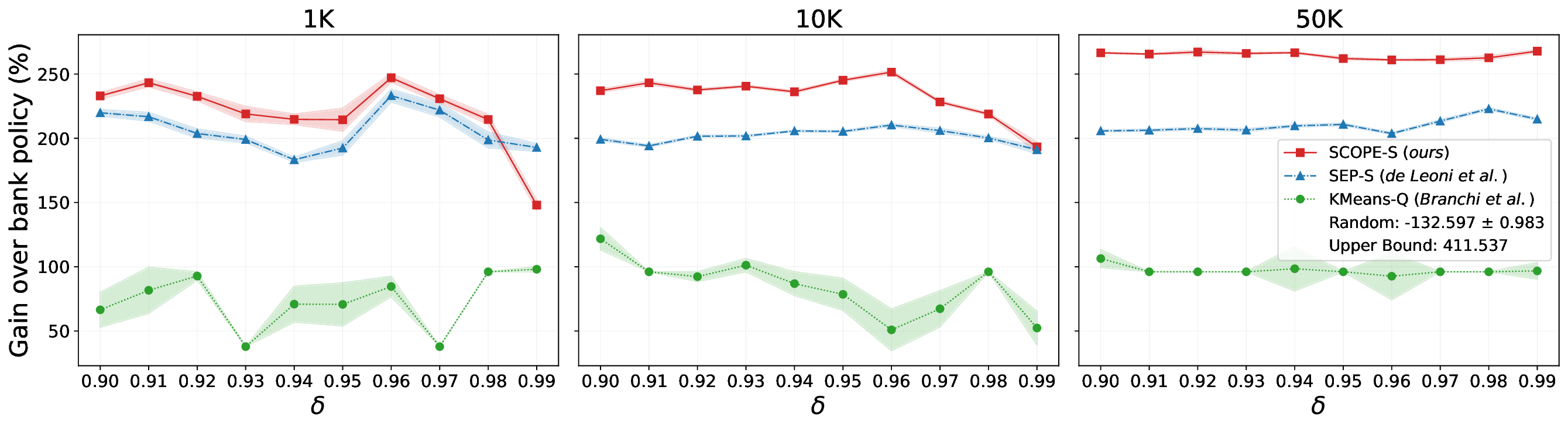

We propose SCOPE, a PresPM approach that combines causal learners with backward induction to learn causally grounded sequential intervention policies that are aligned across multiple decision points. For each decision point, a causal model estimates the effect of alternative interventions on the target KPI, given the observed process execution history. Backward induction then propagates the impact of later intervention decisions back to earlier ones by starting from the final decision point and recursively deriving the recommended action and its expected outcome at each preceding decision point. Operating directly on observational event logs, SCOPE can identify actions that were rarely chosen historically but are likely to improve the target KPI, without requiring process-specific simulators or log augmentation.
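The general idea of combining an outcome model with backward induction can be sketched as follows. This is a minimal illustration under strong assumptions and not the SCOPE implementation: the causal learner is replaced by a tabular stand-in in the spirit of an S-learner (one table that takes the action as an input alongside the history), the toy log is invented, and all function names are hypothetical. It further assumes that the logged cases are free of unobserved confounding and that every relevant history and action combination appears in the log.

```python
from collections import defaultdict


def history_at(steps, t):
    """History visible at decision point t: all earlier
    (state, action) pairs plus the current state."""
    return tuple(steps[:t]) + (steps[t][0],)


def fit_tabular_q(histories, actions, targets):
    """Tabular stand-in for a causal learner: mean target
    for each observed (history, action) combination."""
    sums, counts = defaultdict(float), defaultdict(int)
    for h, a, y in zip(histories, actions, targets):
        sums[(h, a)] += y
        counts[(h, a)] += 1
    return {key: sums[key] / counts[key] for key in sums}


def best_value(q, history, action_space):
    """Estimated outcome of the best action observed for this history."""
    return max(q[(history, a)] for a in action_space if (history, a) in q)


def recommend(q, history, action_space):
    """Action with the highest estimated outcome for this history."""
    known = [a for a in action_space if (history, a) in q]
    return max(known, key=lambda a: q[(history, a)])


def backward_induction(cases, action_space):
    """Fit one table per decision point, from last to first.

    cases: list of (steps, outcome), where steps is a list of
    (state, action) tuples of equal length across cases.
    """
    n_steps = len(cases[0][0])
    targets = [outcome for _, outcome in cases]
    q_tables = [None] * n_steps
    for t in reversed(range(n_steps)):
        histories = [history_at(steps, t) for steps, _ in cases]
        actions = [steps[t][1] for steps, _ in cases]
        q_tables[t] = fit_tabular_q(histories, actions, targets)
        # Pseudo-outcome for the preceding decision point: the value
        # obtained when the best action is taken from point t onward.
        targets = [best_value(q_tables[t], h, action_space)
                   for h in histories]
    return q_tables


# Invented toy log: decision 0 = send email, decision 1 = offer discount.
ACTIONS = (0, 1)
LOG = [
    ([("client", 0), ("no_contact", 0)], 100.0),
    ([("client", 0), ("no_contact", 1)], 95.0),
    ([("client", 1), ("opened", 0)], 102.0),
    ([("client", 1), ("opened", 1)], 130.0),
    ([("client", 1), ("ignored", 0)], 100.0),
    ([("client", 1), ("ignored", 1)], 90.0),
]

q_tables = backward_induction(LOG, ACTIONS)
first_action = recommend(q_tables[0], ("client",), ACTIONS)
print("recommended first action:", first_action)
```

On this toy log, the first decision point is scored using the best achievable outcome at the second decision point rather than the logged one, so sending the email is valued at 115 against 100 for not sending it. A real application would replace the lookup table with a learner that generalizes across histories.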

We provide an empirical evaluation on existing synthetic data and a new semi-synthetic setup based on a real-life event log. Our code and the novel semi-synthetic benchmark are made publicly available in our GitHub repository as a reusable resource for sequential PresPM. The results demonstrate that SCOPE consistently outperforms state-of-the-art PresPM techniques and highlight the importance of combining causal learning with backward induction for KPI optimization.

The remainder of this paper is structured as follows. Section 2 provides background and related work. Section 3 outlines the methodology. Section 4 presents the experiments and discussion. Finally, Section 5 concludes the paper.

Current PresPM approaches are generally based on two streams of research. The first is Causal Inference (CI), which aims to estimate the effects of potential interventions from observational data and use these estimates to guide decisions. This is achieved through causal learners, which are model setups designed to predict outcomes or causal effects from observational data. For example, an S-learner fits a single model that predicts the outcome using the intervention action as an input in addition to other features. PresPM approaches typically adapt causal learners to the proc
