Trustworthy Artificial Intelligence (TAI) is gaining traction due to regulations and functional benefits. While Functional TAI (FTAI) focuses on how to implement trustworthy systems, Normative TAI (NTAI) focuses on the regulations that need to be enforced. However, gaps between FTAI and NTAI remain, making it difficult to assess the trustworthiness of AI systems. We argue that a bridge is needed, specifically a conceptual language that can map between FTAI and NTAI. Such a semantic language can give developers a framework for assessing the trustworthiness of AI systems. It can also help stakeholders translate norms and regulations into concrete implementation steps for their systems. In this position paper, we describe the current state of the art and identify the gap between FTAI and NTAI. We discuss starting points for developing a semantic language and its envisioned effects. Finally, we provide key considerations and discuss future actions towards the assessment of TAI.

Trustworthy Artificial Intelligence (TAI) is increasingly recognised as essential for both the development and the deployment of AI systems, as it builds trust and confidence among stakeholders and end users. Various approaches to making AI more trustworthy have emerged, each reflecting a different perspective. The functional perspective focuses on creating AI systems with sufficient technical reliability and safety [1]. From a normative and legal point of view, TAI involves adherence to rules, standards, and compliance frameworks such as ISO/IEC 42001, ISO/IEC 23894 and the EU AI Act [2,3,4]. From a social perspective, trustworthiness emphasises the broader societal impact of AI, addressing issues such as power, ethics, privacy, inclusivity, and the perceived benevolence of the systems [5,6,7,8].

While many approaches towards TAI have been proposed, there is a clear lack of overlap and integration between functional and normative approaches. As the AI Act focuses on norms for high-risk systems, many existing initiatives provide risk assessment frameworks to check compliance [9,10,11,12].

For example, the AI Risk Ontology (AIRO) [13] has been proposed to determine which systems are high-risk and to document related risk information. Although useful, these risk-based approaches focus on application categories of AI systems and remain largely disconnected from system design choices and concrete implementation steps.

Experience has shown that it can be difficult for developers to build systems that comply with regulations such as the General Data Protection Regulation (GDPR) [14]. Research indicates that this is partly because developers have trouble relating normative requirements to technical implementations [15,16]. It was therefore recommended that the GDPR be accompanied by techniques for implementing each of its principles. We expect regulations on TAI, such as the AI Act, to face similar problems, as they do not provide accompanying techniques and guidelines for developers.

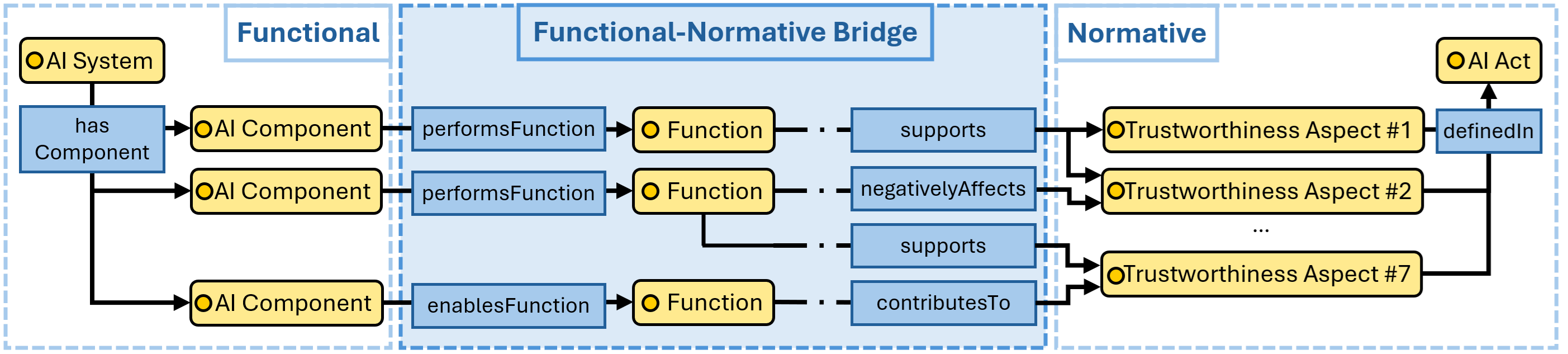

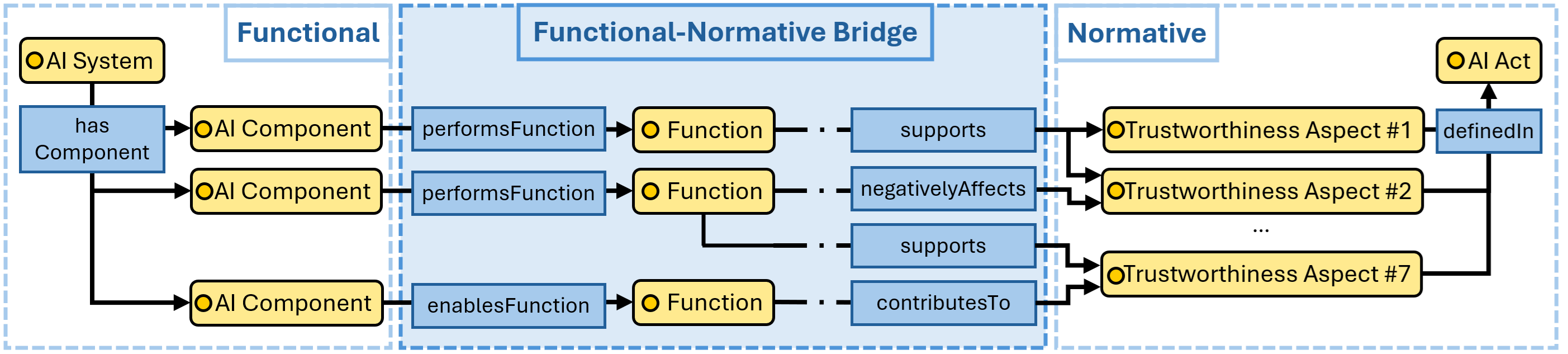

To bridge the gap between norms and regulations on one side and functional requirements on the other, we see the need for a mapping that 1) supports technical implementations in compliance with regulations and 2) translates legal standards into system requirements for developers. Without such a mapping, there is a risk that abstract, high-level trustworthiness principles remain disconnected from technical decisions during AI development, and that functional assessment of AI systems for TAI compliance proves elusive.

Therefore, we propose that a standardised semantic framework be developed that relates functional properties of AI systems to key trustworthiness requirements. Because we believe the envisioned semantic framework should build on existing frameworks, we explore related work that can serve as a starting point, covering both normative frameworks and ways to systematically explore system designs. We then provide an initial design and conclude with key considerations and directions for future work.

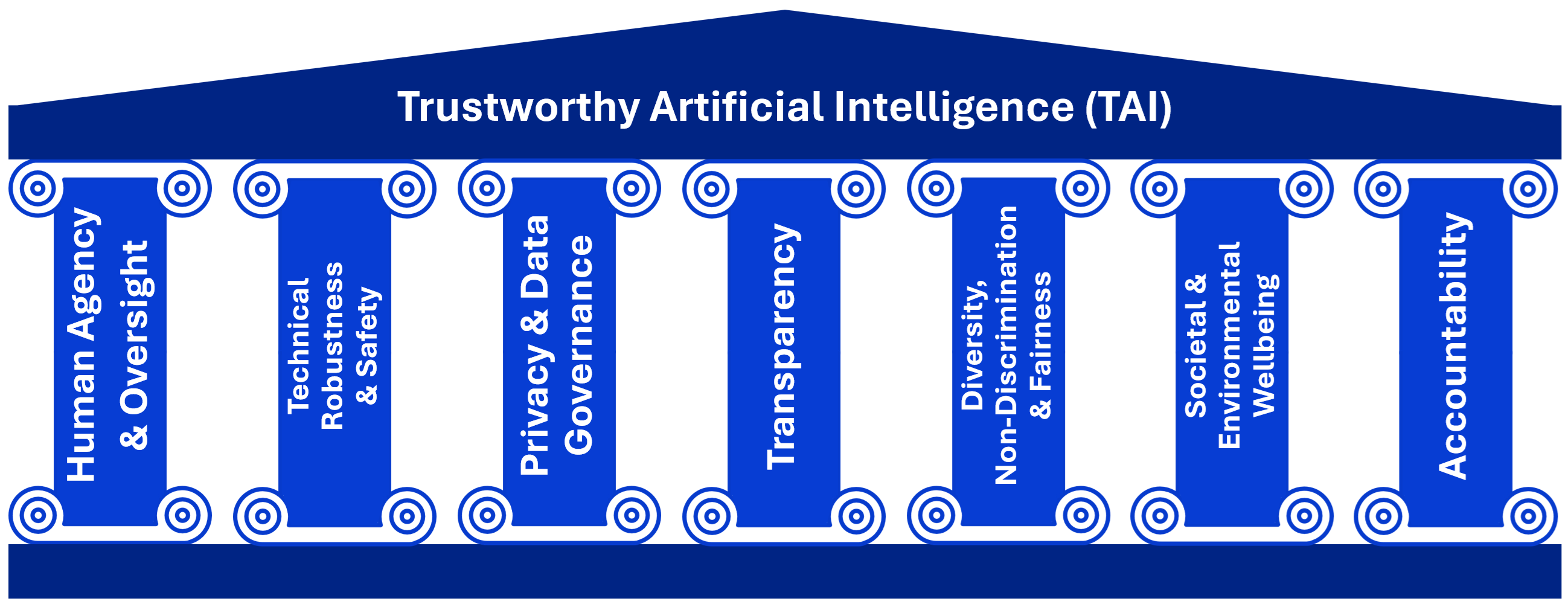

We now describe key starting points for a semantic framework that bridges the gap between functional and normative AI requirements. We first describe existing normative frameworks, then point to functional description frameworks, and finally introduce concepts that we argue should be included in the bridging language.

An important legal framework to consider is the AI Act, proposed by the European Commission, which formally entered into force in August 2024 [2,19]. The AI Act aims to promote the adoption of TAI systems through a risk-based approach with rules for AI developers and deployers, and it specifies which systems are considered high-risk AI systems. The EU High-Level Expert Group on AI (HLEG) has defined seven key principles, or pillars, for Trustworthy AI [17], shown in Figure 1, which have been introduced in the AI Act [18]. AI systems are expected to adhere to these trustworthiness principles and to be evaluated against them continuously throughout their life cycle. These principles are a solid starting point, as they provide definitions and goals towards TAI.
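To make the intended bridge more concrete, the following is a minimal sketch, not the authors' design, of how a mapping between functional properties and the seven HLEG principles might be represented and queried in both directions: from a system design to the principles it touches, and from a principle to candidate implementation choices. The requirement names follow the HLEG Ethics Guidelines; the functional property names (such as `per_prediction_explanations`) and the mapping itself are hypothetical and purely illustrative.

```python
from enum import Enum


class Requirement(Enum):
    """The seven key requirements (pillars) for Trustworthy AI from the EU HLEG."""
    HUMAN_AGENCY_AND_OVERSIGHT = "Human agency and oversight"
    TECHNICAL_ROBUSTNESS_AND_SAFETY = "Technical robustness and safety"
    PRIVACY_AND_DATA_GOVERNANCE = "Privacy and data governance"
    TRANSPARENCY = "Transparency"
    DIVERSITY_NON_DISCRIMINATION_FAIRNESS = "Diversity, non-discrimination and fairness"
    SOCIETAL_AND_ENVIRONMENTAL_WELLBEING = "Societal and environmental well-being"
    ACCOUNTABILITY = "Accountability"


# HYPOTHETICAL mapping: functional property -> requirements it may support.
# Property names are illustrative and not taken from any standard or regulation.
SUPPORTS: dict[str, set[Requirement]] = {
    "human_in_the_loop_override": {Requirement.HUMAN_AGENCY_AND_OVERSIGHT},
    "adversarial_robustness_testing": {Requirement.TECHNICAL_ROBUSTNESS_AND_SAFETY},
    "training_data_provenance_log": {
        Requirement.PRIVACY_AND_DATA_GOVERNANCE,
        Requirement.TRANSPARENCY,
        Requirement.ACCOUNTABILITY,
    },
    "per_prediction_explanations": {Requirement.TRANSPARENCY},
    "group_fairness_metrics": {Requirement.DIVERSITY_NON_DISCRIMINATION_FAIRNESS},
    "energy_consumption_report": {Requirement.SOCIETAL_AND_ENVIRONMENTAL_WELLBEING},
    "audit_trail": {Requirement.ACCOUNTABILITY},
}


def requirements_supported(properties: list[str]) -> set[Requirement]:
    """Functional -> normative: which requirements does a system design touch?"""
    covered: set[Requirement] = set()
    for prop in properties:
        covered |= SUPPORTS.get(prop, set())  # unknown properties contribute nothing
    return covered


def candidate_properties(requirement: Requirement) -> list[str]:
    """Normative -> functional: which properties could help address a requirement?"""
    return sorted(p for p, reqs in SUPPORTS.items() if requirement in reqs)


def uncovered(properties: list[str]) -> set[Requirement]:
    """Requirements that no functional property of the design addresses."""
    return set(Requirement) - requirements_supported(properties)


if __name__ == "__main__":
    design = ["per_prediction_explanations", "audit_trail"]
    print(sorted(r.value for r in requirements_supported(design)))
    print(candidate_properties(Requirement.TRANSPARENCY))
    print(sorted(r.value for r in uncovered(design)))
```

Here a design with only per-prediction explanations and an audit trail touches Transparency and Accountability, leaving the other five principles unaddressed. A plain dictionary keeps the sketch short; a standardised framework would more plausibly use an ontology language with explicit, unambiguous definitions, and coverage in this sense says nothing about whether a requirement is actually satisfied.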

Many parties have created guidelines and assessment tools for trustworthy AI. The EU HLEG has created the Assessment List for Trustworthy AI (ALTAI) for self-assessment [12]; the OECD has introduced guidelines [20] with five values-based principles and recommendations to guide policymakers and AI actors; and NIST presents the AI Risk Management Framework (AI RMF) [21]. Several tools have been developed specifically to support evaluation against the AI Act [9,10,11,12]. These tools can serve as inspiration, though they focus primarily on risk identification and remain high-level.

Various semantic approaches have attempted to formalise Trustworthy AI [13,22,23], yet they lack explicit, unambiguous definitions of terminology such as Transparency. ALTAI provides a glossary for many terms related to Trustworthy AI, but its definitions lack rigorous semantics and still leave room for interpretation. The aforementioned AIRO does capture te
