Large Language Models (LLMs) often struggle with complex multi-step planning tasks, showing high rates of constraint violations and inconsistent solutions. Existing strategies such as Chain-of-Thought and ReAct rely on implicit state tracking and lack an explicit problem representation. Inspired by classical AI planning, we propose Model-First Reasoning (MFR), a two-phase paradigm in which the LLM first constructs an explicit model of the problem, defining entities, state variables, actions, and constraints, before generating a solution plan. Across multiple planning domains, including medical scheduling, route planning, resource allocation, logic puzzles, and procedural synthesis, MFR reduces constraint violations and improves solution quality compared to Chain-of-Thought and ReAct. Ablation studies show that the explicit modeling phase is critical for these gains. Our results suggest that many LLM planning failures stem from representational deficiencies rather than reasoning limitations, highlighting explicit modeling as a key component for robust and interpretable AI agents. All prompts, evaluation procedures, and task datasets are documented to facilitate reproducibility.

Large Language Models (LLMs) have demonstrated impressive capabilities in natural language understanding, reasoning, and decision-making, enabling their use as autonomous agents for planning, problem solving, and interaction with complex environments. Prompting strategies such as Chain-of-Thought (CoT) [6] and ReAct [7] have significantly improved multi-step reasoning by encouraging explicit intermediate reasoning steps or interleaving reasoning with actions. Despite these advances, LLM-based agents continue to exhibit high rates of constraint violations, inconsistent plans, and brittle behavior in complex, long-horizon tasks.

These failures are especially pronounced in domains where correctness depends on maintaining a coherent internal state over many steps, respecting multiple interacting constraints, and avoiding implicit assumptions. Examples include medical scheduling, resource allocation, procedural execution, and other safety- or correctness-critical planning problems. While current approaches primarily focus on improving the reasoning process itself, we argue that this perspective overlooks a more fundamental limitation: reasoning is often performed without an explicit representation of the problem being reasoned about.

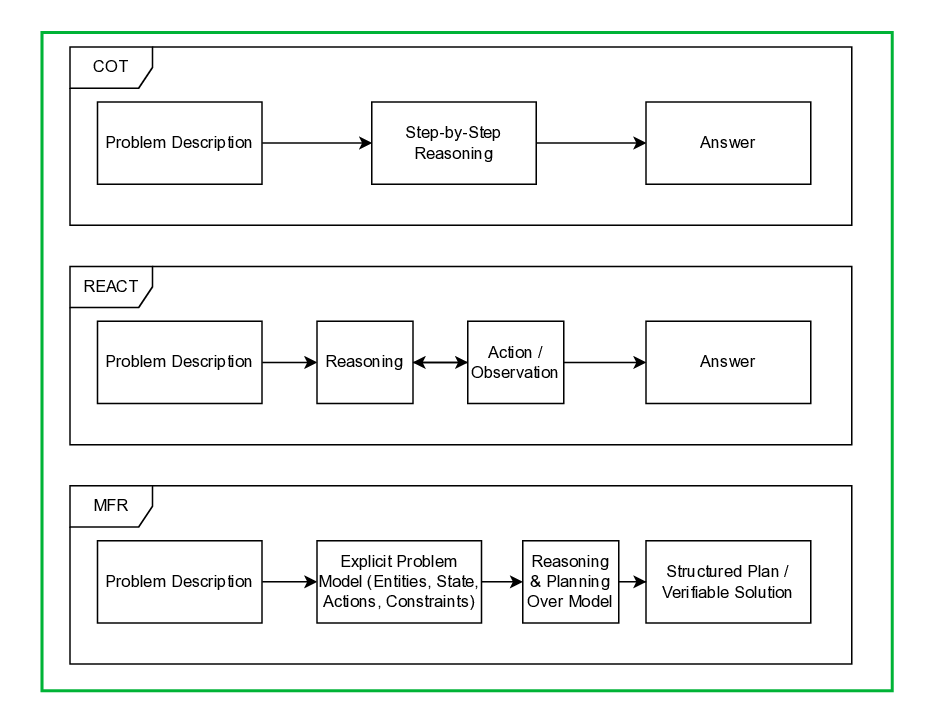

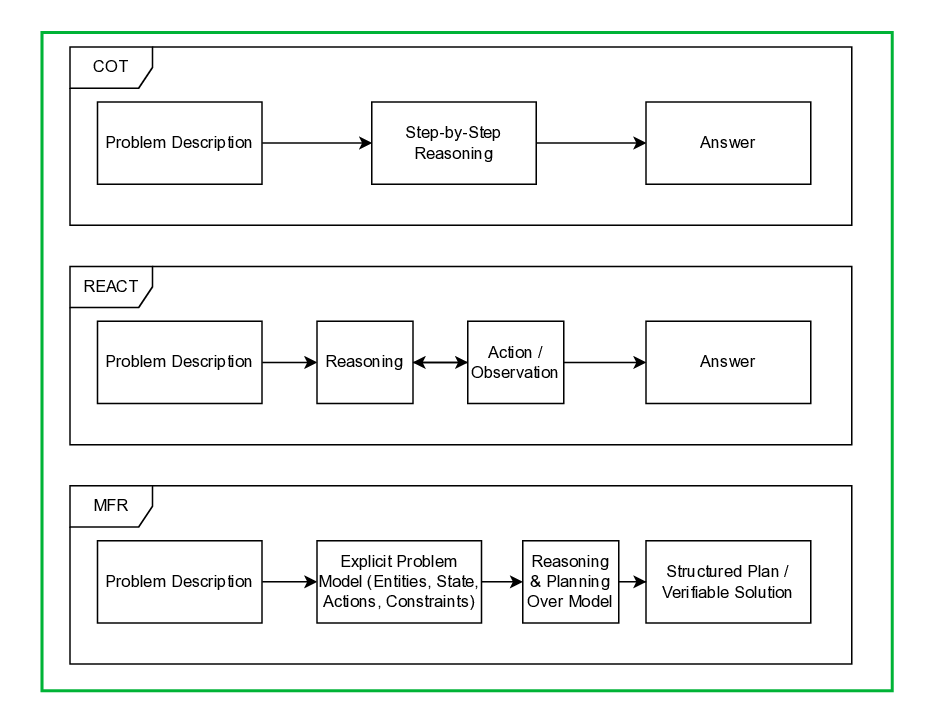

Chain-of-Thought prompting improves reasoning accuracy by encouraging LLMs to generate step-by-step explanations prior to producing an answer. However, CoT does not require the model to explicitly define the entities, state variables, or constraints that govern valid solutions. As a result, state is tracked implicitly within the model’s latent representations and natural language outputs, making it prone to drift, omission, and contradiction as reasoning length increases.

ReAct-style agents extend CoT by interleaving reasoning with actions and observations, enabling interaction with external tools and environments. While this improves adaptability, state tracking remains informal and distributed across free-form text. Observations are often assumed rather than derived, and constraints are rarely enforced globally. Consequently, reasoning can appear locally coherent while becoming globally inconsistent over longer horizons.

These approaches implicitly assume that improved reasoning procedures alone are sufficient for reliable planning. In practice, they rely on the model to infer and maintain a consistent internal representation of the problem without ever being required to make that representation explicit or verifiable.

In contrast, human reasoning in science, engineering, and everyday problem-solving is fundamentally model-based. Scientific inquiry begins by defining relevant entities, variables, and governing laws before drawing inferences. Engineers construct explicit models to analyze system behavior prior to optimization. In cognitive science, human reasoning is widely understood to operate over internal mental models that structure inference and prediction.

Errors in reasoning frequently arise not from faulty inference rules, but from incomplete or incorrect models. When a critical variable or constraint is omitted, even logically valid reasoning can lead to incorrect conclusions. From this perspective, reliable reasoning presupposes an explicit representation of what exists, how it can change, and what must remain invariant.
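The point about omitted constraints can be made concrete with a toy check. The sketch below is a minimal Python illustration under our own assumptions: the scheduling domain, the `Appointment` tuple, and the constraint functions are hypothetical and are not taken from the paper's tasks. The validator applies every constraint it is given correctly, yet it accepts a double-booked schedule once the doctor-availability constraint is dropped from the model.

```python
from typing import Callable

# Hypothetical toy domain: (patient, doctor, start_hour, end_hour), intervals half-open.
Appointment = tuple[str, str, int, int]
Constraint = Callable[[list[Appointment]], bool]


def overlaps(a: Appointment, b: Appointment) -> bool:
    """True if the half-open intervals [start, end) of two appointments intersect."""
    return a[2] < b[3] and b[2] < a[3]


def no_doctor_double_booking(plan: list[Appointment]) -> bool:
    """A doctor cannot attend two overlapping appointments."""
    return not any(a[1] == b[1] and overlaps(a, b)
                   for i, a in enumerate(plan) for b in plan[i + 1:])


def no_patient_double_booking(plan: list[Appointment]) -> bool:
    """A patient cannot attend two overlapping appointments."""
    return not any(a[0] == b[0] and overlaps(a, b)
                   for i, a in enumerate(plan) for b in plan[i + 1:])


def is_valid(plan: list[Appointment], constraints: list[Constraint]) -> bool:
    # The check is sound for the constraints it receives; it knows nothing else.
    return all(check(plan) for check in constraints)


plan = [("alice", "dr_lee", 9, 10), ("bob", "dr_lee", 9, 10)]  # dr_lee double-booked

full_model = [no_doctor_double_booking, no_patient_double_booking]
incomplete_model = [no_patient_double_booking]  # doctor constraint omitted

print(is_valid(plan, full_model))        # False
print(is_valid(plan, incomplete_model))  # True
```

The faulty verdict comes from the incomplete constraint list, not from `is_valid` itself, which is exactly the kind of representational gap described above.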

Classical AI planning systems formalize this principle through explicit domain models, such as those written in the Planning Domain Definition Language (PDDL), in which entities, actions, preconditions, effects, and constraints are defined before planning begins. Reasoning is then performed over this fixed, verifiable structure. LLM-based agents, however, typically collapse modeling and reasoning into a single generative process, leaving the underlying structure implicit and unstable.
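To illustrate what reasoning over a fixed, verifiable structure means, the following sketch mirrors the precondition, add-effect, and delete-effect semantics of STRIPS-style planning (which the basic STRIPS fragment of PDDL expresses declaratively) in Python rather than in PDDL syntax. The clinic domain, the action names, and the `validate` helper are hypothetical. A plan is accepted only if every action's preconditions hold in the simulated state and the goal holds at the end.

```python
from dataclasses import dataclass

# Facts are plain strings; a state is the set of facts currently true.
State = frozenset[str]


@dataclass(frozen=True)
class Action:
    name: str
    preconditions: frozenset[str]  # facts that must hold before the action
    add_effects: frozenset[str]    # facts the action makes true
    del_effects: frozenset[str]    # facts the action makes false

    def applicable(self, state: State) -> bool:
        return self.preconditions <= state

    def apply(self, state: State) -> State:
        return (state - self.del_effects) | self.add_effects


def validate(plan: list[Action], init: State, goal: frozenset[str]) -> tuple[bool, str]:
    """Simulate the plan step by step; reject it at the first unmet precondition."""
    state = init
    for step, action in enumerate(plan):
        if not action.applicable(state):
            missing = sorted(action.preconditions - state)
            return False, f"step {step} ({action.name}): missing {missing}"
        state = action.apply(state)
    if goal <= state:
        return True, "goal reached"
    return False, f"goal not reached: missing {sorted(goal - state)}"


# Hypothetical clinic domain: lab results must exist before the consultation.
draw_blood = Action("draw_blood", frozenset({"patient_checked_in"}),
                    frozenset({"sample_taken"}), frozenset())
run_lab = Action("run_lab", frozenset({"sample_taken"}),
                 frozenset({"results_ready"}), frozenset({"sample_taken"}))
consult = Action("consult", frozenset({"results_ready"}),
                 frozenset({"consult_done"}), frozenset())

init = frozenset({"patient_checked_in"})
goal = frozenset({"consult_done"})

print(validate([draw_blood, run_lab, consult], init, goal))  # (True, 'goal reached')
print(validate([draw_blood, consult], init, goal))
# (False, "step 1 (consult): missing ['results_ready']")
```

Because the domain model is explicit, an invalid plan is rejected with a specific, checkable reason rather than merely looking implausible.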

Viewed through this lens, hallucination is not merely the generation of false statements. Rather, it is a symptom of reasoning performed without a clearly defined model of the problem space.

Motivated by these observations, we propose Model-First Reasoning (MFR), a paradigm that explicitly separates problem representation from reasoning in LLM-based agents. In MFR, the model is first instructed to construct an explicit problem model before generating any solution or plan. This model includes:

• Relevant entities

• State variables

• Actions with preconditions and effects

• Constraints that define valid solutions

Only after this modeling phase is complete does the LLM proceed to the reasoning or planning phase, generating solutions that operate strictly within the defined model. This separation introduces a representational scaffold that constrains subsequent reasoning, reducing reliance on implicit latent state tracking and limiting the introduction of unstated assumptions.

Importantly, Model-First Reasoning does not require architectural changes, external symbolic solvers, or additional training. It is implemented purely through prompting, making it immediately applicable to existing LLMs and agent frameworks.
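Since MFR is described as prompting-only, the two phases can be sketched as two chained calls to any text-in/text-out model. The prompt wording, the `LLM` callable type, and the choice of two separate calls (rather than one prompt containing both phases) are our assumptions for illustration; these are not the prompts documented by the authors. A stub model stands in for a real LLM so the example runs offline.

```python
from typing import Callable

# Hypothetical signature for any text-in/text-out LLM client.
LLM = Callable[[str], str]

# Illustrative wording only; not the prompts used in the paper.
MODEL_PROMPT = (
    "Before solving, build an explicit model of the problem below.\n"
    "List: (1) entities, (2) state variables, (3) actions with preconditions "
    "and effects, (4) constraints that every valid solution must satisfy.\n"
    "Do not propose a solution yet.\n\nProblem:\n{problem}"
)

PLAN_PROMPT = (
    "Using ONLY the model below, produce a step-by-step plan. For every step, "
    "state which preconditions hold and how the state variables change. "
    "Do not introduce entities, actions, or assumptions absent from the model.\n\n"
    "Model:\n{model}\n\nProblem:\n{problem}"
)


def model_first_reasoning(problem: str, llm: LLM) -> tuple[str, str]:
    """Phase 1 writes the problem model; phase 2 plans against that model."""
    model = llm(MODEL_PROMPT.format(problem=problem))
    plan = llm(PLAN_PROMPT.format(model=model, problem=problem))
    return model, plan


# Offline stub: records each prompt and returns a fixed reply per phase.
calls: list[str] = []


def fake_llm(prompt: str) -> str:
    calls.append(prompt)
    return "MODEL" if len(calls) == 1 else "PLAN"


model, plan = model_first_reasoning("Schedule three patients with one doctor.", fake_llm)
print(model, plan)          # MODEL PLAN
print("MODEL" in calls[1])  # True: the phase-2 prompt embeds the phase-1 model
```

Keeping the phases in separate calls makes the intermediate model an inspectable artifact that could be reviewed or validated before any plan is generated.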
