Various weather modelling problems (e.g., weather forecasting and optimizing turbine placement) require ample access to high-resolution, highly accurate wind data. Acquiring such data, however, remains a challenging and expensive endeavour. Traditional reconstruction approaches are typically either cost-effective or accurate, but not both. Deep learning methods, including diffusion models, have been proposed to resolve this trade-off by leveraging advances in natural image super-resolution. Wind data, however, differ from natural images: wind super-resolvers often use upwards of 10 input channels, significantly more than the 3 RGB channels of natural images. To better leverage a large number of conditioning variables in diffusion models, we present a generalization of classifier-free guidance (CFG) to multiple conditioning inputs. Our novel composite classifier-free guidance (CCFG) can be dropped into any pre-trained diffusion model trained with standard CFG dropout. We demonstrate that CCFG produces higher-fidelity outputs than CFG on wind super-resolution tasks. We also present WindDM, a diffusion model trained for industrial-scale wind dynamics reconstruction that leverages CCFG. WindDM achieves state-of-the-art reconstruction quality among deep learning models and costs up to $1000\times$ less than classical methods.

As of 2025, the repercussions of climate change and global warming are becoming increasingly pronounced. To avoid a worsening climate catastrophe, transitioning to renewable energy is now a pressing priority. Wind turbines are a promising source, accounting for 25.5% of global renewable energy capacity [13]. Wind farms, however, have a high upfront cost, and their locations must be carefully selected. Turbines misplaced by even a few hundred meters could, in the long run, result in several megawatts of missed energy capture. Accurately and efficiently predicting granular wind pattern data is therefore important for ensuring optimal turbine energy production.

Commonly, numerical weather prediction (NWP) models produce granular wind pattern data by super-resolving more readily available coarse wind data. For instance, global-scale data (0.25° ≈ 30 km spatial resolution) are used as grounding data to acquire mesoscale data (1-3 km resolution). Classically, NWP models belong to one of two categories: dynamical models or statistical models. Dynamical models serve as the gold standard, directly resolving the fluid dynamics to produce highly accurate predictions, albeit at a high cost. Statistical models, on the other hand, implicitly learn wind dynamics, yielding less accurate predictions but at an extremely low cost. Recent advances in computer vision motivate the application of deep learning models as a new, third option [20]. Such deep learning models typically use the predictions of a dynamical model as the ground-truth targets during training, and strike a compelling trade-off between accuracy and cost.
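As a rough check on the resolutions quoted above, the sketch below converts the spacing of a regular latitude-longitude grid into kilometres and computes the per-axis upsampling factor to a mesoscale target. It assumes a spherical Earth of radius 6371 km (an approximation, not a value taken from this paper), and the function names are hypothetical.

```python
import math

EARTH_RADIUS_KM = 6371.0  # assumption: mean radius of a spherical Earth


def grid_spacing_km(spacing_deg, lat_deg=0.0):
    """Approximate (north-south, east-west) size in km of a lat-lon grid cell."""
    km_per_deg = math.pi * EARTH_RADIUS_KM / 180.0  # arc length of one degree (~111.2 km)
    north_south = spacing_deg * km_per_deg
    # Meridians converge toward the poles, so east-west spacing scales with cos(latitude).
    east_west = north_south * math.cos(math.radians(lat_deg))
    return north_south, east_west


def upsampling_factor(coarse_km, fine_km):
    """Per-axis super-resolution factor between two grid spacings."""
    return coarse_km / fine_km


if __name__ == "__main__":
    ns, ew = grid_spacing_km(0.25, lat_deg=45.0)
    print(f"0.25 deg at 45N: {ns:.1f} km N-S, {ew:.1f} km E-W")
    for fine in (1.0, 3.0):
        print(f"factor to {fine:.0f} km: {upsampling_factor(ns, fine):.1f}x per axis")
```

Under this approximation, a 0.25° cell is about 27.8 km north-south, so reaching 1-3 km means upsampling by roughly 9x to 28x along each axis.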

Deep learning has yielded remarkable success in natural image super-resolution [37]. However, super-resolving wind dynamics differs significantly from super-resolving natural images. Firstly, unlike in image super-resolution, the low-res and high-res wind data distributions are fundamentally different. Low-res natural images are often obtained by coarsening high-res images, whereas for wind data the low-res inputs and high-res targets are produced by different models that simulate the physics differently. Secondly, the desiderata for image and wind super-resolution differ. Downstream applications of high-resolution wind data focus primarily on aggregate, distribution-level results. For instance, a common downstream product is the annual mean map, obtained by averaging an entire year's worth of model predictions. Thirdly, natural image super-resolution typically produces a high-res RGB image from a low-res RGB image, and thus uses only 3 input variables. NWP models, on the other hand, leverage many additional conditioning inputs to guide the super-resolution process. Dynamical models often use hundreds of variables (e.g., the WRF model uses 293 variables [27]), and deep neural models commonly use tens of variables (e.g., the GAN-based Sup3r model uses 16 variables [33]). Intuitively, accurately modeling wind dynamics fundamentally requires more variables than image super-resolution uses: the air through which wind flows is a fluid, governed by the Navier-Stokes equations, which depend on temperature, pressure, and density. Existing literature on conditional image generation with diffusion models, however, typically focuses on the single-conditioning-variable case (e.g., a class label or prompt) [29]. This motivates the central question of our work in the context of wind dynamics: how can we better leverage multiple conditioning modalities in diffusion probabilistic models?
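To make the distribution-level desideratum concrete, the sketch below computes an annual mean map from a stack of predictions, plus a per-pixel comparison of temporal quantiles. It uses synthetic data and assumes predictions are stored as a NumPy array of shape (time, lat, lon); the helper names are hypothetical and this is not the paper's evaluation code. The usage example shuffles the target's timestamps: nearly every per-timestamp value is then wrong, yet the annual mean map and per-pixel quantiles match the target (up to floating-point rounding), which is why such aggregate metrics reward matching the distribution rather than individual samples.

```python
import numpy as np


def annual_mean_map(stack):
    """Average a (time, lat, lon) stack of predictions over the time axis."""
    return stack.mean(axis=0)


def mean_bias_map(pred, target):
    """Per-pixel difference between the annual mean maps of two stacks."""
    return annual_mean_map(pred) - annual_mean_map(target)


def quantile_gap(pred, target, qs=(0.05, 0.5, 0.95)):
    """Largest absolute per-pixel difference between temporal quantiles of two stacks."""
    q_pred = np.quantile(pred, qs, axis=0)
    q_target = np.quantile(target, qs, axis=0)
    return np.abs(q_pred - q_target).max()


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Synthetic, right-skewed "wind speed" values: one per day on a 4x5 grid.
    target = rng.gamma(shape=2.0, scale=3.0, size=(365, 4, 5))
    # Same values at the wrong times: shuffle the time axis.
    pred = target[rng.permutation(target.shape[0])]
    print("per-timestamp MAE:", np.abs(pred - target).mean())
    print("max |annual mean bias|:", np.abs(mean_bias_map(pred, target)).max())
    print("max quantile gap:", quantile_gap(pred, target))
```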

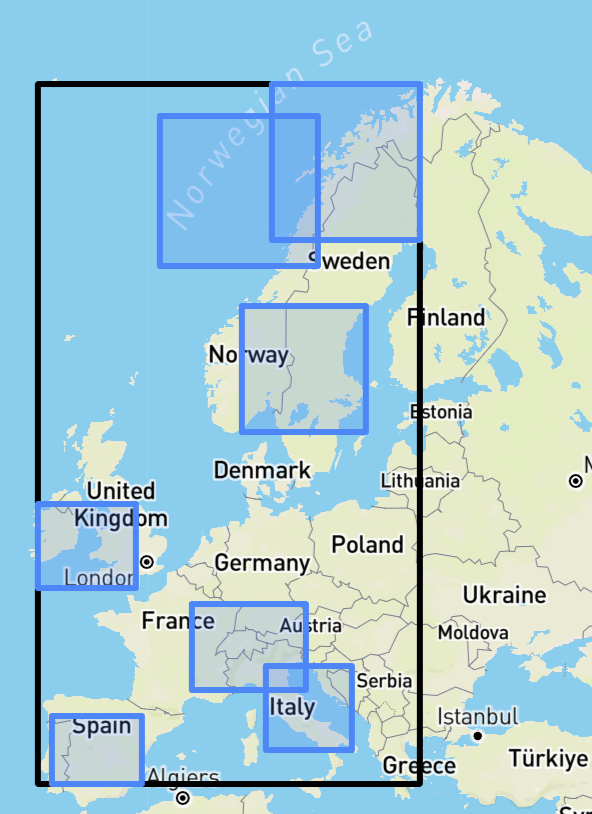

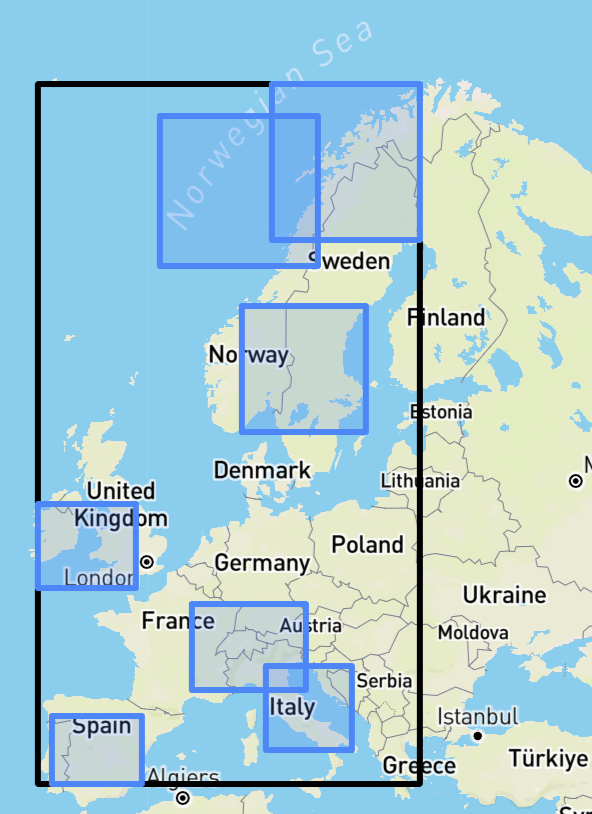

In this paper, we present WindDM: a diffusion model for efficient and accurate global-to-mesoscale wind data super-resolution. WindDM is trained on a new dataset of 265,390 timestamps of paired low-res and high-res wind data, with 8 input variables and 2 target variables. We also present composite classifier-free guidance (CCFG), a novel generalization of classifier-free guidance (CFG) to multiple conditioning variables. CCFG is a simple inference algorithm that can be applied to any pre-trained diffusion model that supports standard CFG. We demonstrate that inference with CCFG yields improved sample quality, and that the output distribution better matches the target distribution from a dynamical NWP model. CCFG introduces a new budget parameter, allowing practitioners to trade higher compute usage for improved sample quality. Finally, we demonstrate that WindDM produces high-resolution predictions comparable to leading dynamical models and surpassing state-of-the-art deep learning and statistical models.
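For readers unfamiliar with CFG, the sketch below shows the standard classifier-free guidance rule, which extrapolates from the unconditional noise prediction toward the conditional one with weight `w`, alongside a hypothetical multi-condition variant that sums one guidance term per conditioning subset, in the style of Composable Diffusion (Liu et al., 2022). This variant is not CCFG, whose formula is not given in this section; it only illustrates how guidance over several conditioning inputs can be composed and why each extra subset costs one more network evaluation, loosely analogous to a compute budget. `toy_denoiser` is a hypothetical linear stand-in for a trained network over 8 conditioning channels, and evaluating partial masks assumes the network accepts partially dropped conditioning, which standard all-or-nothing CFG dropout does not guarantee.

```python
import numpy as np


def cfg(eps_uncond, eps_cond, w):
    """Standard CFG: move from the unconditional toward the conditional prediction."""
    return eps_uncond + w * (eps_cond - eps_uncond)


def composed_guidance(denoiser, x, cond, masks, weights):
    """Hypothetical compositional guidance (NOT the paper's CCFG).

    cond:  (n_vars, H, W) conditioning channels.
    masks: boolean (n_vars,) arrays selecting which channels each term keeps.
    Returns the guided prediction and the number of denoiser calls used.
    """
    n_vars = cond.shape[0]
    eps_uncond = denoiser(x, cond, np.zeros(n_vars, dtype=bool))  # all channels dropped
    guided = eps_uncond.copy()
    for mask, w in zip(masks, weights):
        # One extra forward pass per conditioning subset.
        guided = guided + w * (denoiser(x, cond, mask) - eps_uncond)
    return guided, 1 + len(masks)


def toy_denoiser(x, cond, mask):
    """Hypothetical stand-in for a trained network: linear in the kept channels."""
    return 0.1 * x + (cond * mask[:, None, None]).sum(axis=0)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.normal(size=(4, 4))
    cond = rng.normal(size=(8, 4, 4))
    full = np.ones(8, dtype=bool)
    singles = [np.eye(8, dtype=bool)[i] for i in range(8)]
    g_full, calls_full = composed_guidance(toy_denoiser, x, cond, [full], [3.0])
    g_single, calls_single = composed_guidance(toy_denoiser, x, cond, singles, [3.0] * 8)
    print("calls:", calls_full, calls_single)
    print("same result:", np.allclose(g_full, g_single))
```

With a single all-channels mask, the composed rule reduces to standard CFG. Because the toy denoiser is linear, summing the eight single-channel terms reproduces the same result; with a real, nonlinear network the two generally differ, which is where the choice of subsets and weights matters.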

Diffusion Models. Diffusion models [11,32] have recently become the dominant image generative models for many applications, often surpassing other models such as Generative Adversarial Networks (GANs) in both image quality and image diversity. Diffusion models have also been successfully applied to non-natural image domains, such as remote sensing [18] and medical imaging [17], and non-image domains, such as au
