Time series forecasting predicts future values from past data. In real-world settings, some anomalous events have lasting effects and influence the forecast, while others are short-lived and should be ignored. Standard forecasting models fail to make this distinction, often either overreacting to noise or missing persistent shifts. We propose Co-TSFA (Contrastive Time Series Forecasting with Anomalies), a regularization framework that learns when to ignore anomalies and when to respond. Co-TSFA generates input-only and input-output augmentations to model forecast-irrelevant and forecast-relevant anomalies, and introduces a latent-output alignment loss that ties representation changes to forecast changes. This encourages invariance to irrelevant perturbations while preserving sensitivity to meaningful distributional shifts. Experiments on the Traffic and Electricity benchmarks, as well as on a real-world cash-demand dataset, demonstrate that Co-TSFA improves performance under anomalous conditions while maintaining accuracy on normal data. An anonymized GitHub repository with the implementation of Co-TSFA is provided and will be made public upon acceptance.

Anomalous Sequence 1

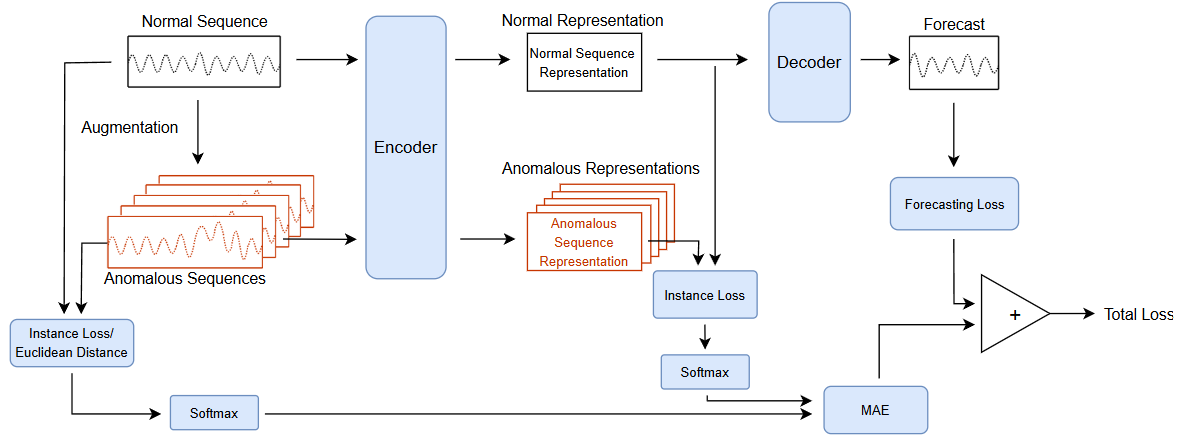

Normal Sequence Anomalous Sequence 2 Sequence 1 shows an input-only anomaly that should not affect the forecast, whereas Sequence 2 shows an input anomaly that persists into the output (forecast-relevant).

Time-series forecasting underpins many critical applications, including weather prediction Nie et al. (2023), financial market modeling Gao et al. (2024), and cash-demand forecasting for ATM replenishment Venkatesh et al. (2014). While time series often follow regular patterns such as seasonality and trend, these patterns are frequently disrupted by anomalous events. Some anomalies cause short-term fluctuations, such as a brief surge in energy usage during a cold night, whereas others lead to persistent changes, such as the long-lasting demand shifts during the COVID-19 pandemic. These disruptions challenge forecasting models that are trained only on normal conditions.

A particularly important setting is when anomalies occur at test time, where three scenarios may arise: (i) input-only anomalies, where corrupted history should be ignored so predictions remain unaffected (Figure 1, anomalous sequence 1); (ii) anomalies that start in the input window and persist into the prediction window, where forecasts should adapt to reflect the anomaly’s downstream effect (Figure 1, anomalous sequence 2); and (iii) normal conditions, where no anomaly is present and forecasts should follow the nominal trajectory. Because many anomalies are short-lived and systems can quickly return to normal, forecasting models must consistently handle all three scenarios within a single framework.
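The three scenarios can be made concrete on a synthetic series. The sketch below is illustrative only: the sinusoidal signal, the window sizes, the univariate setting, and the helper names (`make_series`, `input_only_anomaly`, `persistent_anomaly`) are assumptions introduced here, not the augmentation procedure used by Co-TSFA.

```python
import math
from typing import List, Tuple


def make_series(length: int, period: int = 24) -> List[float]:
    """Clean seasonal signal that plays the role of the nominal trajectory."""
    return [math.sin(2 * math.pi * t / period) for t in range(length)]


def split_window(series: List[float], input_len: int, horizon: int) -> Tuple[List[float], List[float]]:
    """Split a series into an input window and the prediction window that follows it."""
    return series[:input_len], series[input_len:input_len + horizon]


def input_only_anomaly(series: List[float], input_len: int, start: int,
                       width: int, magnitude: float) -> List[float]:
    """Scenario (i): a short spike confined to the input window; the target is untouched."""
    assert start + width <= input_len, "spike must end inside the input window"
    out = list(series)
    for t in range(start, start + width):
        out[t] += magnitude
    return out


def persistent_anomaly(series: List[float], start: int, magnitude: float) -> List[float]:
    """Scenario (ii): a level shift from `start` to the end, so it crosses into the horizon."""
    return [v + magnitude if t >= start else v for t, v in enumerate(series)]


T, H = 48, 24  # hypothetical input length and horizon
clean = make_series(T + H)

x_norm, y_norm = split_window(clean, T, H)  # scenario (iii): normal
x_io, y_io = split_window(
    input_only_anomaly(clean, T, start=30, width=3, magnitude=2.0), T, H)  # scenario (i)
x_p, y_p = split_window(persistent_anomaly(clean, start=40, magnitude=2.0), T, H)  # scenario (ii)
```

In scenario (i) the target equals the normal target even though the input differs, so an ideal forecaster would return the same prediction. In scenario (ii) the target itself is shifted, so the forecast should move with it.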

• We formalize the problem of Forecasting under Anomalous Conditions by distinguishing between forecast-relevant and forecast-irrelevant anomalies and highlighting the need for representation-level guidance in this setting.
• We propose Co-TSFA, a contrastive regularization framework that enforces latent-output alignment under augmented scenarios, encouraging the model to respond proportionally to forecast-relevant shifts while remaining invariant to irrelevant perturbations.
• We conduct extensive experiments on multiple benchmark datasets, demonstrating that Co-TSFA consistently improves forecasting accuracy under anomalous conditions without sacrificing performance on nominal data, outperforming existing robust and adaptive baselines.

Time-Series Forecasting under Clean Conditions. Classical forecasting models such as ARIMA Box & Jenkins (1970) rely on fixed parametric and linearity assumptions, which limits their ability to capture nonlinear dynamics. Deep learning models based on RNNs, LSTMs, and GRUs relaxed these assumptions by modeling nonlinear dependencies through recurrence. The introduction of Transformers Vaswani et al. (2017) further advanced the field by enabling efficient modeling of long-range dependencies. Recent state-of-the-art models include Informer Zhou et al. (2021), FEDformer Zhou et al. (2022), iTransformer Liu et al., Autoformer Wu et al. (2021), and TimeXer Wang et al. (2025).

Together, these lines of research highlight the importance of robust representations, but they do not explicitly address the joint challenge of handling normal conditions, input-only anomalies, and input-output anomalies at test time. Our work directly tackles this gap by training models to ignore irrelevant disturbances while adapting to anomalies that truly affect future outcomes.

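We consider multivariate time-series forecasting. Each input sequence is $x \in \mathbb{R}^{T \times C}$, where $T$ is the input window length and $C$ is the number of channels. The goal is to predict a future sequence $y \in \mathbb{R}^{H \times C}$ over horizon $H$. The training set is

$$\mathcal{D} = \{(x_i, y_i)\}_{i=1}^{N},$$

where $N$ is the number of training pairs. A forecasting model comprises an encoder $g_\phi : \mathbb{R}^{T \times C} \to \mathbb{R}^{T' \times D}$ that extracts temporal representations and a forecasting head $h_\psi : \mathbb{R}^{T' \times D} \to \mathbb{R}^{H \times C}$ that maps representations to future values. The full predictor is

$$f_\theta = h_\psi \circ g_\phi, \qquad \theta = \{\phi, \psi\},$$

where $T'$ is the latent sequence length and $D$ is the representation dimension. The base forecasting objective minimizes a generic discrepancy $\ell(\cdot, \cdot)$ between the prediction $\hat{y} = f_\theta(x)$ and the target $y$:

$$\mathcal{L}_{\text{forecast}}(\theta) = \frac{1}{N} \sum_{i=1}^{N} \ell\big(f_\theta(x_i),\, y_i\big),$$

where $\ell$ is task-specific (e.g., MAE, MSE).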
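The encoder-head composition and the base objective can be sketched in a few lines of plain Python. The pooling encoder and the repeat-last-value head below are toy stand-ins chosen for readability, not the architectures used in the paper; `toy_encoder`, `toy_head`, and `empirical_risk` are hypothetical names introduced for this illustration.

```python
from typing import Callable, List, Sequence, Tuple

Matrix = List[List[float]]  # rows are time steps, columns are channels or features


def toy_encoder(x: Matrix, patch: int = 2) -> Matrix:
    """Stand-in for the encoder g: mean-pool non-overlapping patches (T' = T // patch, D = C)."""
    n_channels = len(x[0])
    latent = []
    for start in range(0, len(x) - patch + 1, patch):
        block = x[start:start + patch]
        latent.append([sum(row[c] for row in block) / patch for c in range(n_channels)])
    return latent


def toy_head(z: Matrix, horizon: int = 2) -> Matrix:
    """Stand-in for the head h: repeat the last latent row for each step of the horizon."""
    return [list(z[-1]) for _ in range(horizon)]


def predictor(x: Matrix) -> Matrix:
    """Full predictor: the head applied to the encoder output."""
    return toy_head(toy_encoder(x))


def mse(pred: Matrix, target: Matrix) -> float:
    """Mean squared error over all horizon steps and channels."""
    n = len(pred) * len(pred[0])
    return sum((p - t) ** 2 for pr, tr in zip(pred, target) for p, t in zip(pr, tr)) / n


def mae(pred: Matrix, target: Matrix) -> float:
    """Mean absolute error over all horizon steps and channels."""
    n = len(pred) * len(pred[0])
    return sum(abs(p - t) for pr, tr in zip(pred, target) for p, t in zip(pr, tr)) / n


def empirical_risk(f: Callable[[Matrix], Matrix],
                   dataset: Sequence[Tuple[Matrix, Matrix]],
                   loss: Callable[[Matrix, Matrix], float]) -> float:
    """Average discrepancy between predictions and targets over the training pairs."""
    return sum(loss(f(x), y) for x, y in dataset) / len(dataset)


# Two hypothetical training pairs with T = 4, H = 2, C = 1.
dataset = [
    ([[1.0], [1.0], [3.0], [3.0]], [[3.0], [3.0]]),
    ([[0.0], [2.0], [4.0], [6.0]], [[6.0], [8.0]]),
]
risk_mse = empirical_risk(predictor, dataset, mse)
risk_mae = empirical_risk(predictor, dataset, mae)
```

Keeping the encoder output separate from the head makes the latent representation available to any regularizer that acts on it, which is the property a latent-output alignment term relies on.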

The test set may contain both normal and anomalous samples, where anomalies manifest as irregular patterns or distributional shifts in the input. Such anomalies can be short-lived, confined to the input window, or persistent, in which case their effects propagate into the prediction horizon (Fig. 1). Our goal is to develop a model that maintains performance on normal sequences while remaining robust and adaptive under anomalous test-time conditions.

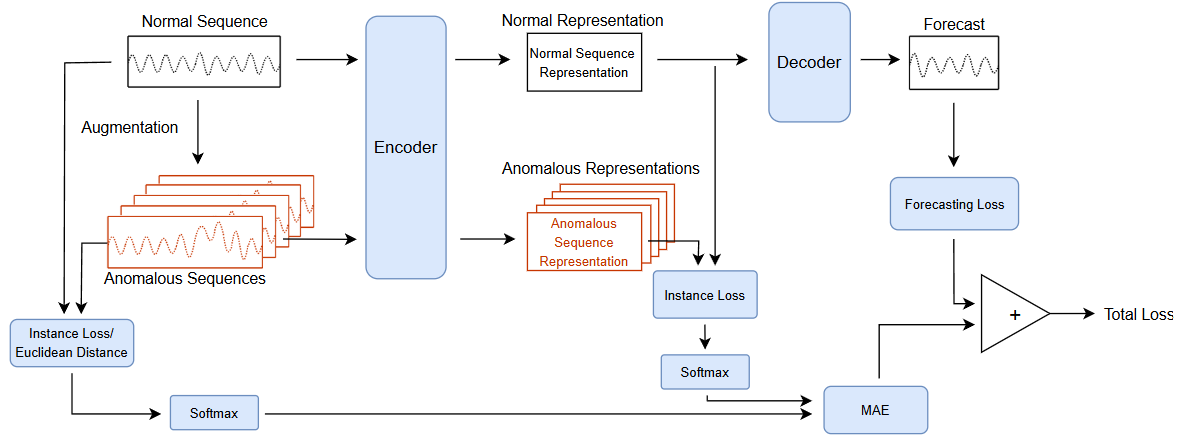

To improve forecasting robustness under test-time anomalies, we propose Co-TSFA, a contrastive regularization framework that explicitly aligns latent representations with outputs. The key assumption is that forecast-relevant shifts in the input should induce corresponding changes in the latent space, while forecast-invariant perturbations should leave the latent representation unaffected.
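The key assumption can be illustrated with a simple penalty that compares how far the latent representation moves with how far the target moves under an augmentation. This is a hypothetical form written only to make the idea tangible; it is not the Co-TSFA loss, whose exact definition is not given in this excerpt. The function name `alignment_penalty`, the use of flattened vectors, and the unscaled L2 distances are all assumptions.

```python
import math
from typing import List


def l2_distance(a: List[float], b: List[float]) -> float:
    """Euclidean distance between two flattened vectors of equal length."""
    return math.sqrt(sum((u - v) ** 2 for u, v in zip(a, b)))


def alignment_penalty(z: List[float], z_aug: List[float],
                      y: List[float], y_aug: List[float]) -> float:
    """Hypothetical penalty: squared gap between the latent change and the output change.

    z, z_aug: flattened latent representations of the original and augmented inputs.
    y, y_aug: flattened targets of the original and augmented samples.
    """
    return (l2_distance(z, z_aug) - l2_distance(y, y_aug)) ** 2


z = [0.0, 0.0]
y = [1.0, 1.0]

# Input-only augmentation: the target is unchanged, so any latent movement is penalized.
penalty_input_only = alignment_penalty(z, [3.0, 4.0], y, y)

# Input-output augmentation: the target moves, and a matching latent movement is not penalized.
penalty_matched = alignment_penalty(z, [3.0, 4.0], y, [1.0, 6.0])

# Input-output augmentation with a latent that fails to react: penalized again.
penalty_unresponsive = alignment_penalty(z, z, y, [1.0, 6.0])
```

Under this toy form, invariance to input-only perturbations and sensitivity to persistent shifts come from the same term. A practical version would need to reconcile the different scales of latent and output spaces, which this sketch ignores.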

Co-T
