📝 Original Info Title: CourtPressGER: A German Court Decision to Press Release Summarization DatasetArXiv ID: 2512.09434Date: 2025-12-10Authors: Sebastian Nagl, Mohamed Elganayni, Melanie Pospisil, Matthias Grabmair📝 Abstract Official court press releases from Germany's highest courts present and explain judicial rulings to the public, as well as to expert audiences. Prior NLP efforts emphasize technical headnotes, ignoring citizen-oriented communication needs. We introduce CourtPressGER, a 6.4k dataset of triples: rulings, human-drafted press releases, and synthetic prompts for LLMs to generate comparable releases. This benchmark trains and evaluates LLMs in generating accurate, readable summaries from long judicial texts. We benchmark small and large LLMs using reference-based metrics, factual-consistency checks, LLM-as-judge, and expert ranking. Large LLMs produce high-quality drafts with minimal hierarchical performance loss; smaller models require hierarchical setups for long judgments. Initial benchmarks show varying model performance, with human-drafted releases ranking highest.

💡 Deep Analysis

📄 Full Content CourtPressGER: A German Court Decision to Press Release

Summarization Dataset

Sebastian Nagl1,*, Mohamed Elganayni1, Melanie Pospisil1 and Matthias Grabmair1

1Technical University of Munich (TUM)

Abstract

Official court press releases from Germany’s highest courts present and explain judicial rulings to the public,

as well as to expert audiences. Prior NLP efforts emphasize technical headnotes, ignoring citizen-oriented

communication needs. We introduce CourtPressGER, a 6.4k dataset of triples: rulings, human-drafted press

releases, and synthetic prompts for LLMs to generate comparable releases. This benchmark trains and evaluates

LLMs in generating accurate, readable summaries from long judicial texts. We benchmark small and large

LLMs using reference-based metrics, factual-consistency checks, LLM-as-judge, and expert ranking. Large LLMs

produce high-quality drafts with minimal hierarchical performance loss; smaller models require hierarchical

setups for long judgments. Initial benchmarks show varying model performance, with human-drafted releases

ranking highest.

Keywords

Legal Layman Communication, Legal NLP, Text Summarization, Press Release Generation, German Court

Proceedings

1. Introduction

High-level German courts make decisions accessible through press releases that summarize essential

aspects and implications in understandable form. Releases authored by judges contain legal authority

and lay-friendly narrative, serving as an interface between judiciary and public. This represents targeted

legal summarization, where gold data is typically sparse. LLM progress suggests high-quality automatic

drafts are within reach, yet robust evaluations of legal decision summaries remain difficult, especially

in non-English languages. CourtPressGER advances German legal summarization by:

1. Collecting a large aligned corpus of German decisions and press releases (6.4k pairs),

2. deriving decision-specific summarization prompts,

3. benchmarking open and commercial LLMs, and

4. analyzing performance through automatic and expert assessment

This work contributes a dataset and evaluation framework for German legal summarization research,

with initial benchmarks establishing baseline performance.

2. Related Work

Legal-text summarization has progressed from early sentence-ranking heuristics borrowed from news

[1, 2] to domain-adapted encoder–decoder transformers such as Legal-BART and Legal-PEGASUS

[3, 4, 5]. Recent surveys report steady ROUGE gains but emphasize three persistent challenges—extreme

document length, jurisdiction-specific jargon, and the absence of factual-consistency metrics [6, 7].

Researchers address the length issue with hierarchical encoders and chunk-merge strategies for book-

length opinions [8] and Indian Supreme Court cases [9]. Yet expert evaluations reveal that higher ROUGE

scores do not necessarily align with legal usefulness [10], underscoring the need for multi-faceted

assessment.

Preprint - This contribution was accepted at JURIX AI4A2J Workshop 2025

*Corresponding author: sebastian.nagl@tum.de. The dataset and evaluation framework is available via GitHub.

© 2025 Copyright for this paper by its authors. Use permitted under Creative Commons License Attribution 4.0 International (CC BY 4.0).

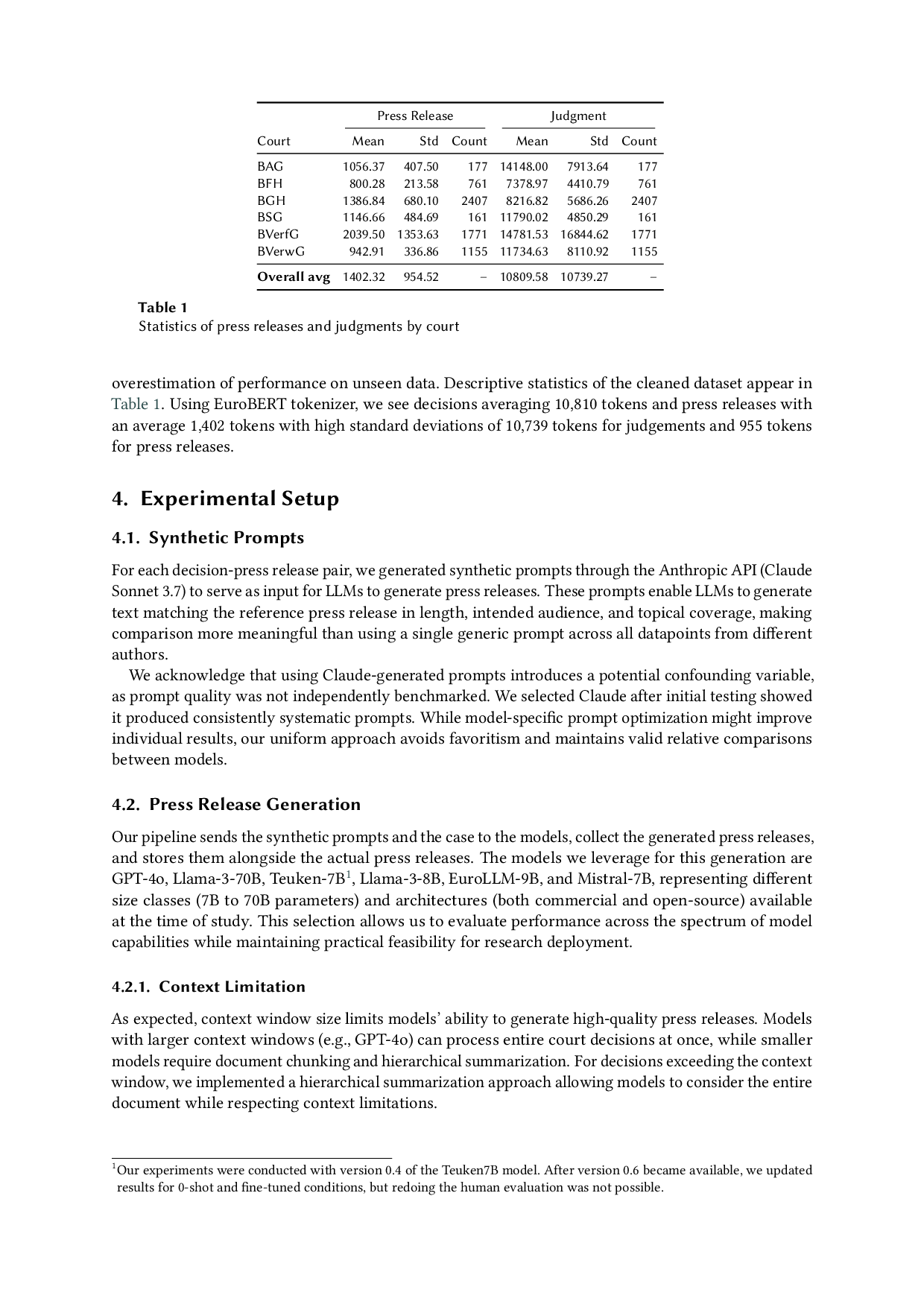

Datasets.

Larger legal summarization corpora typically pair expert-targeted summaries with their

underlying document. A distinction can be made between: first, summarizing legislation, such as

BillSum (U.S. bills; 11) and EUR-Lex-Sum (EU legislation; 5) and, second, case/judgment summarization,

such as the US-English Multi-LexSum (U.S. civil-rights cases; 12, including e.g., complaints and motions)

and Portuguese BrazilianBR (STF rulings; 13). For German, LegalSum covers ∼100k rulings with legal-

holding-focused Leitsätze [14] from the German legal context, and Rolshoven et al. [15] provides 57k

Regesten from Swiss courts. Both target legal practitioners and their summaries consist of concise,

technical, often extractive headnotes. To date, no corpus of significant size aligns German decisions

with non-headnote-based summaries written for mixed audiences, including journalists and the general

public.

Outside Germany, few resources focus on citizen-oriented summaries, e.g., TL;DR software license

synopses [16], Canadian lay summaries [17], and argument-aware rewriting [18]. The German ALeKS

project seeks to automate headnote generation.

Extractive approaches to summarization have been mostly succeeded by abstractive summarization

using transformers [19, 20, 21, 22] and faithfulness-enhancing rerankers [13]. Cross-jurisdiction transfer

of smaller models primarily trained in one jurisdiction poses a challenge Santosh et al. [23]. Large

commercial models are marketed as capable of summarizing judgments well, which we evaluate through

expert analysis.

Evaluation.

In addition to classic NLP metrics, we see newer factual metrics like QAGS (Question

Answering for evaluating Generated Summaries) (Wang et al. [24])

📸 Image Gallery

Reference This content is AI-processed based on open access ArXiv data.