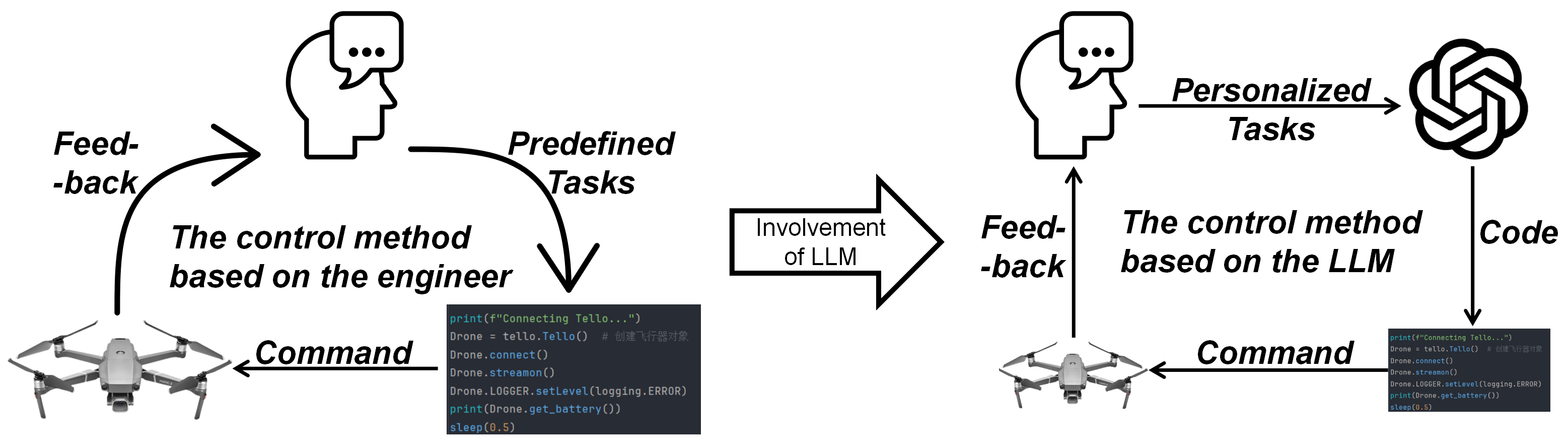

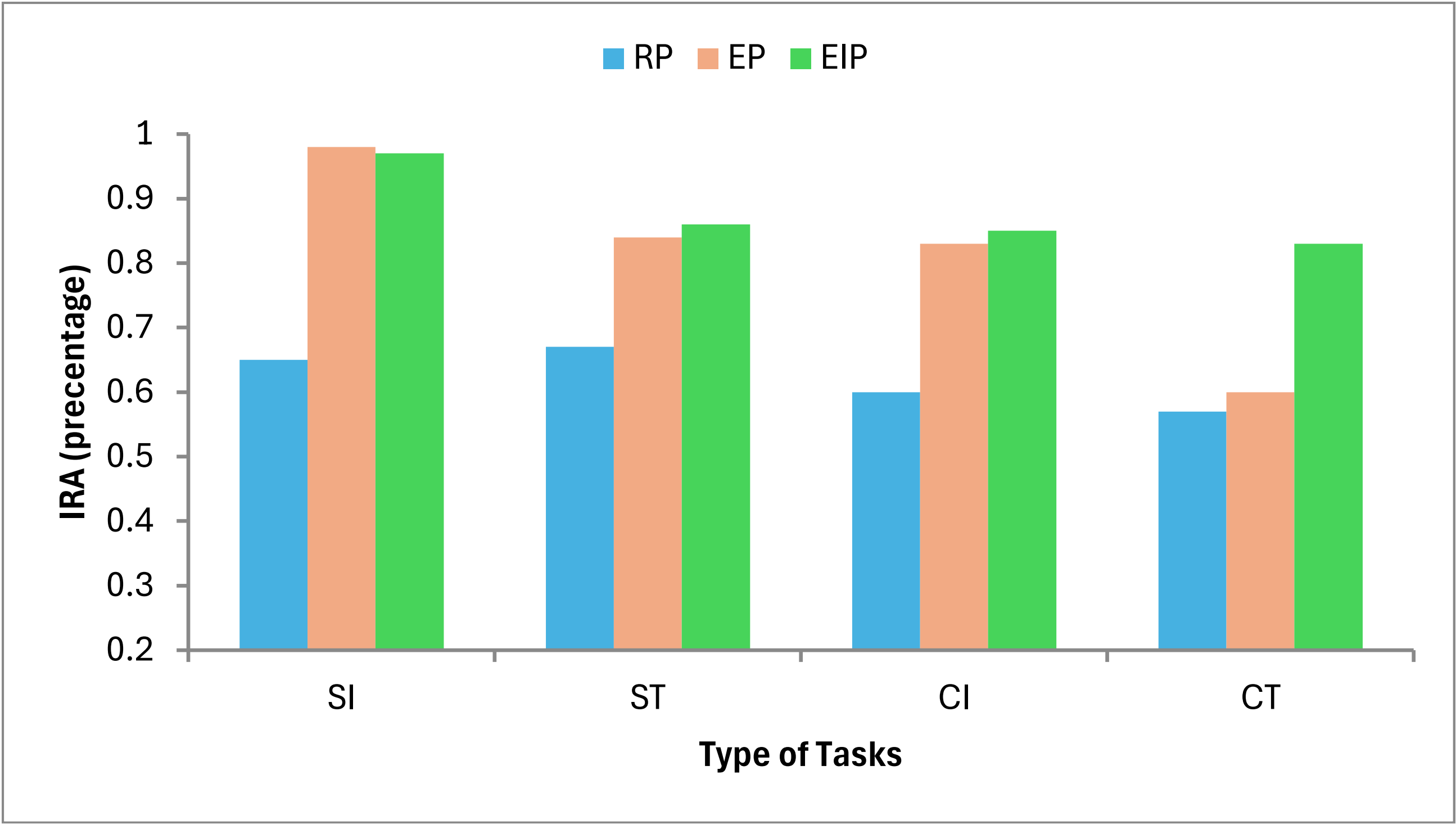

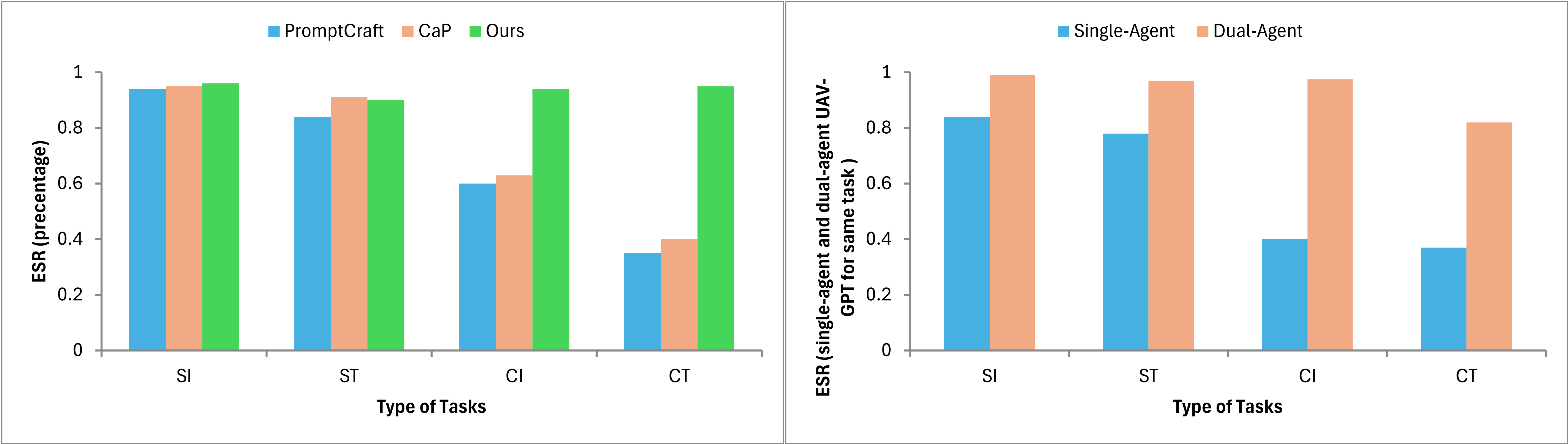

The future of UAV interaction systems is evolving from engineer-driven to user-driven, aiming to replace traditional predefined Human-UAV Interaction (HUI) designs. This shift focuses on enabling more personalized task planning and design, thereby achieving a higher-quality interaction experience and greater flexibility, which benefits many fields such as agriculture, aerial photography, logistics, and environmental monitoring. However, because users and UAVs lack a common language, such interactions are often difficult to achieve. Large Language Models (LLMs), which can understand both natural language and robot (UAV) behaviors, open up the possibility of personalized HUI. Several LLM-based HUI frameworks have recently been proposed, but they commonly struggle with tasks that mix planning and execution, which limits their adaptability in complex scenarios. In this paper, we propose a novel dual-agent HUI framework. The framework constructs two independent LLM agents, a task planning agent and an execution agent, and applies different prompt engineering to each, so that the understanding, planning, and execution of tasks are handled separately. To verify the effectiveness and performance of the framework, we built a task database covering four typical UAV application scenarios and quantified the performance of the HUI framework using three independent metrics. We also compared the performance of different LLMs in controlling the UAVs. Our user study results demonstrate that the framework improves the smoothness of HUI and the flexibility of task execution in the task scenarios we set up, effectively meeting users' personalized needs.

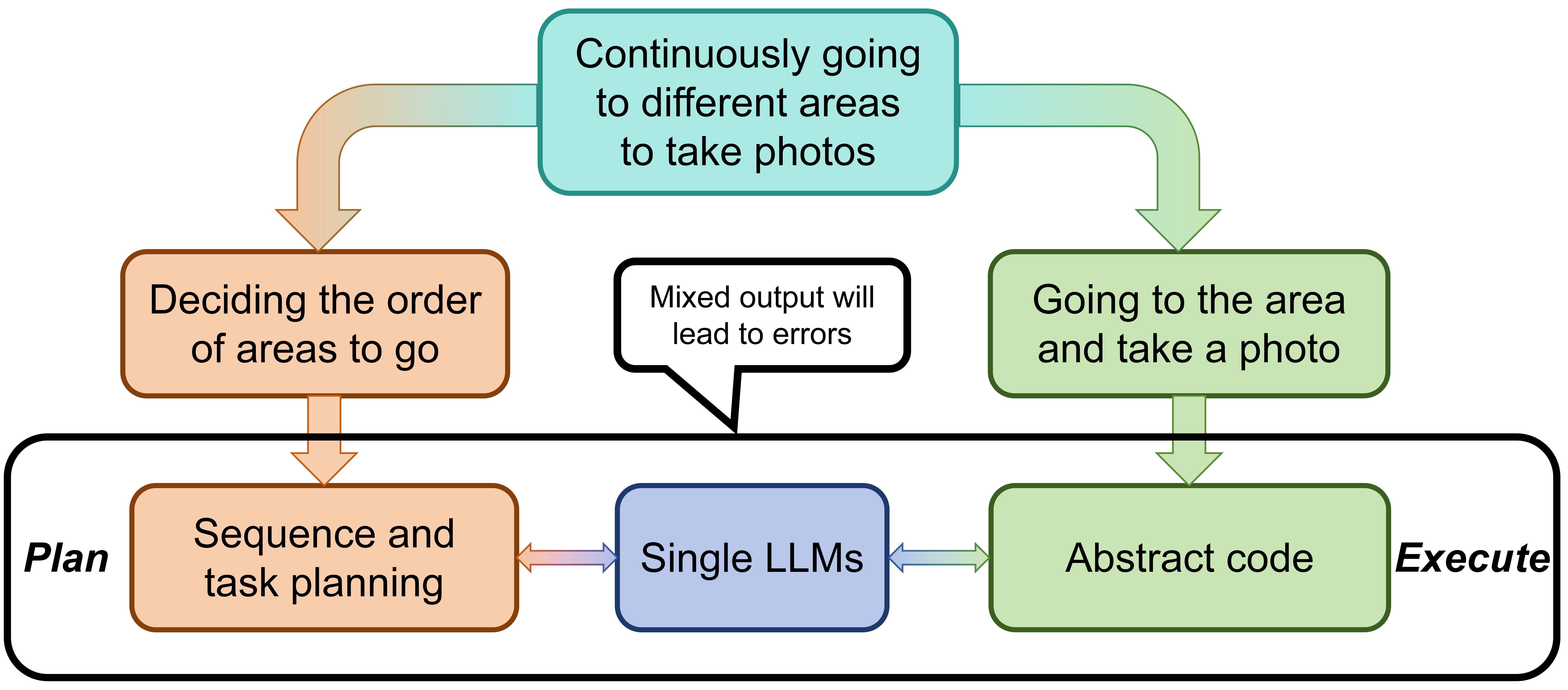

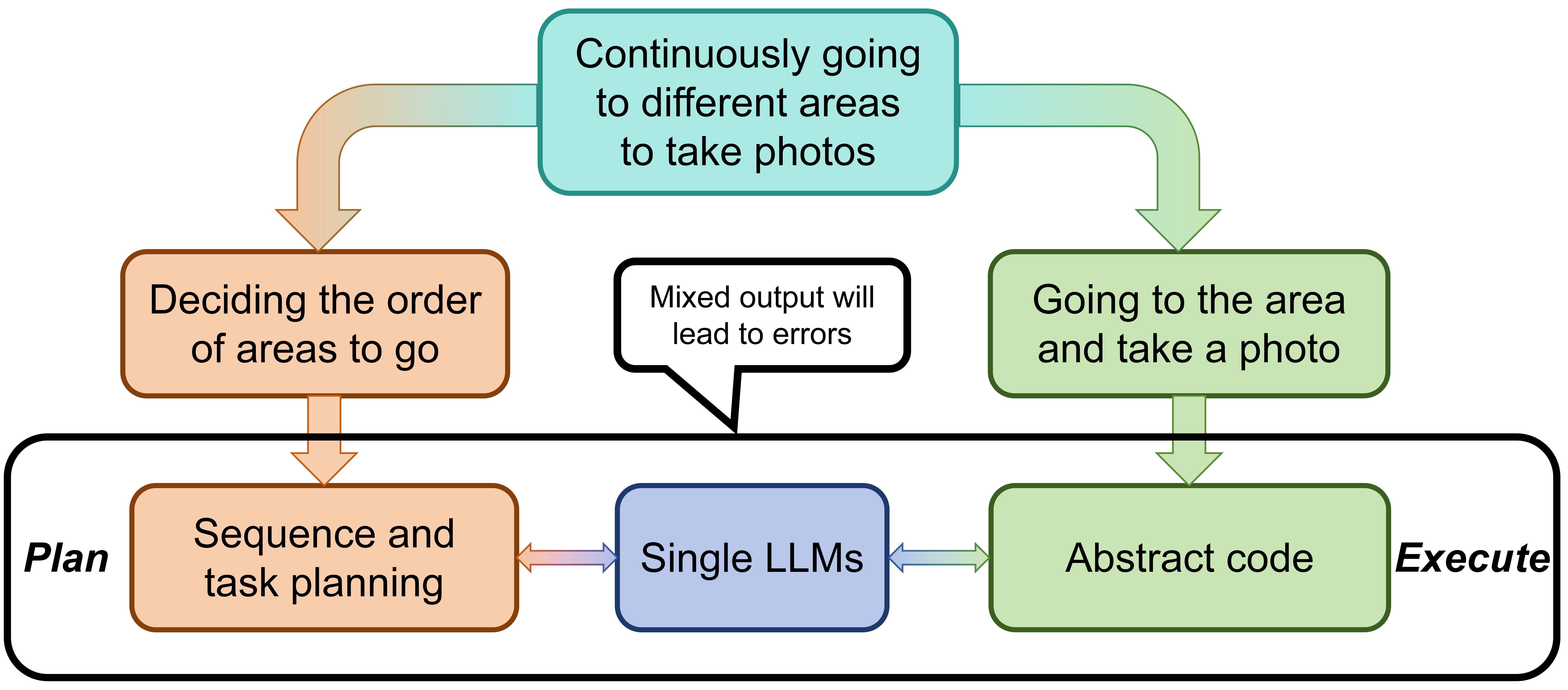

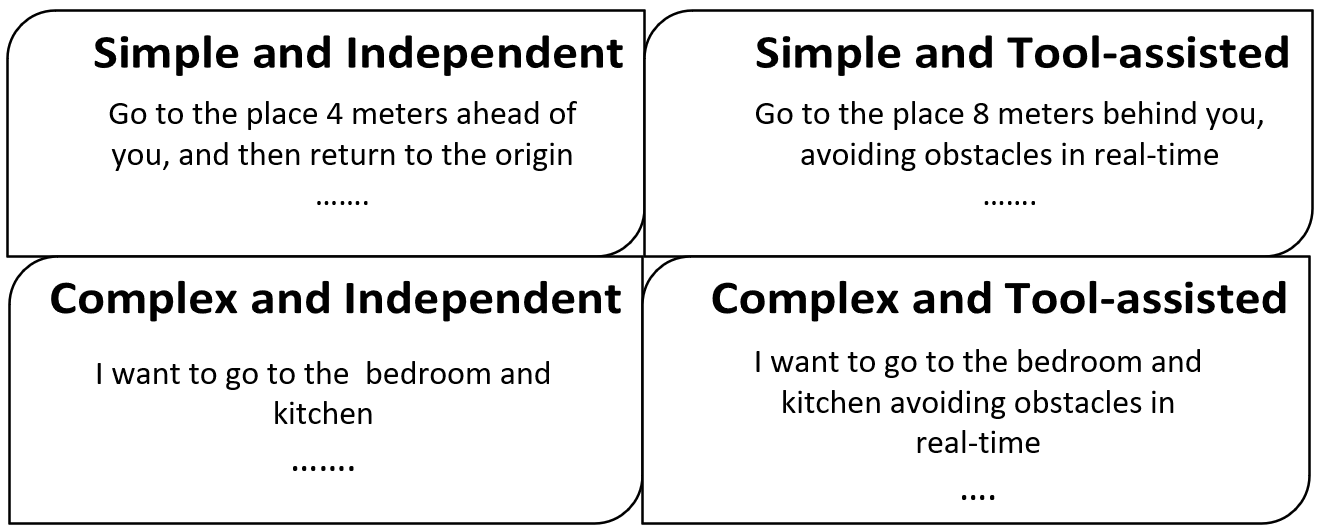

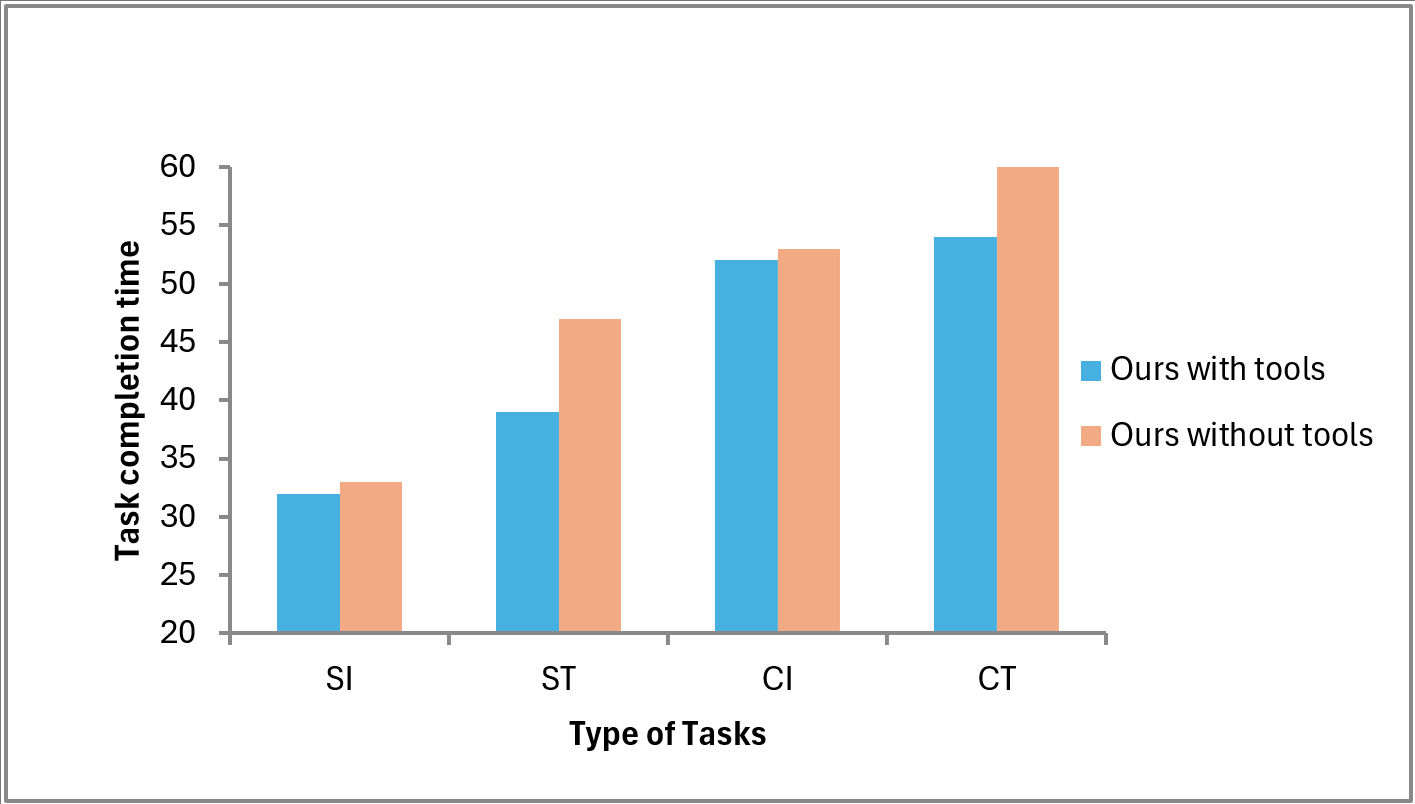

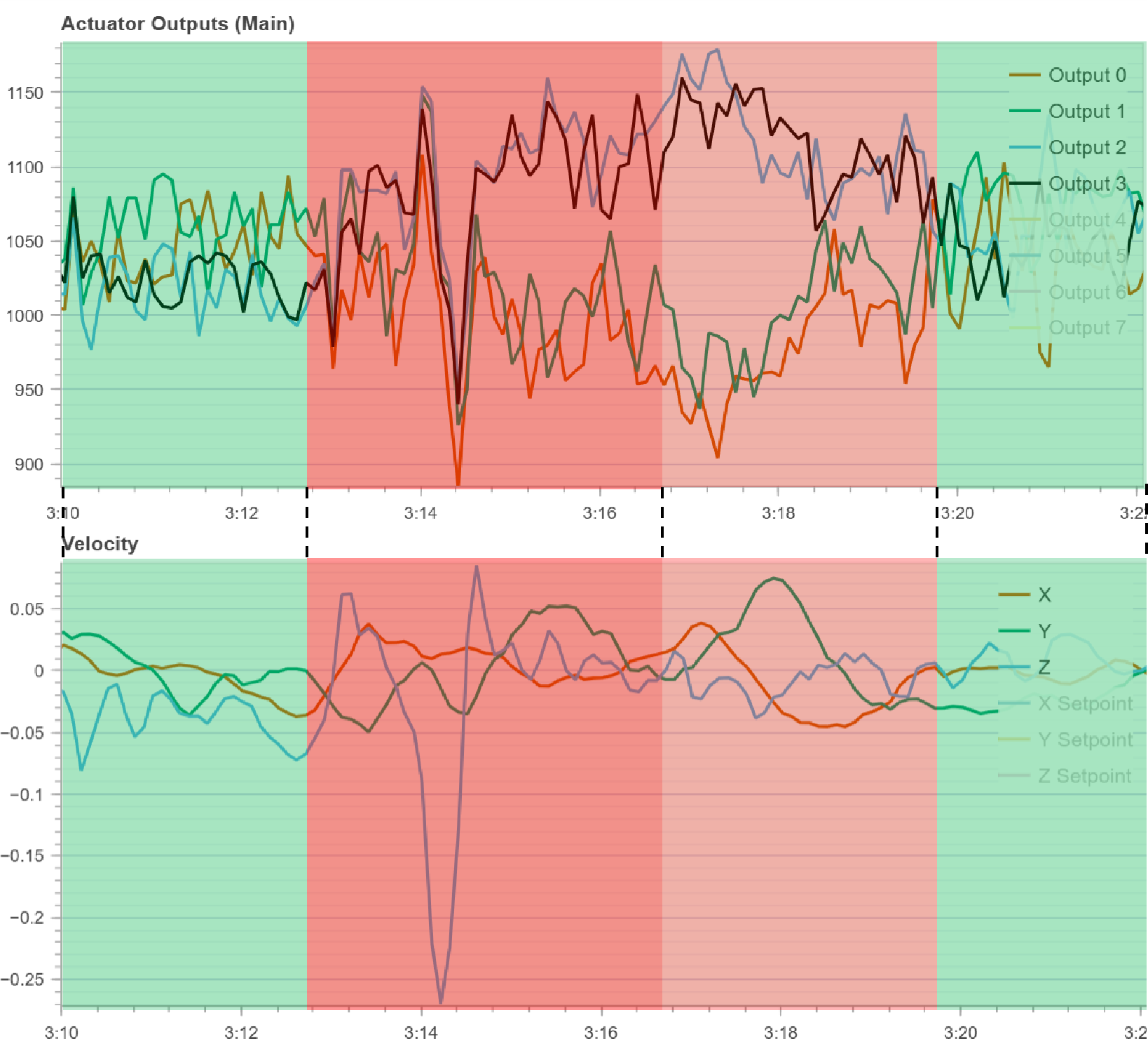

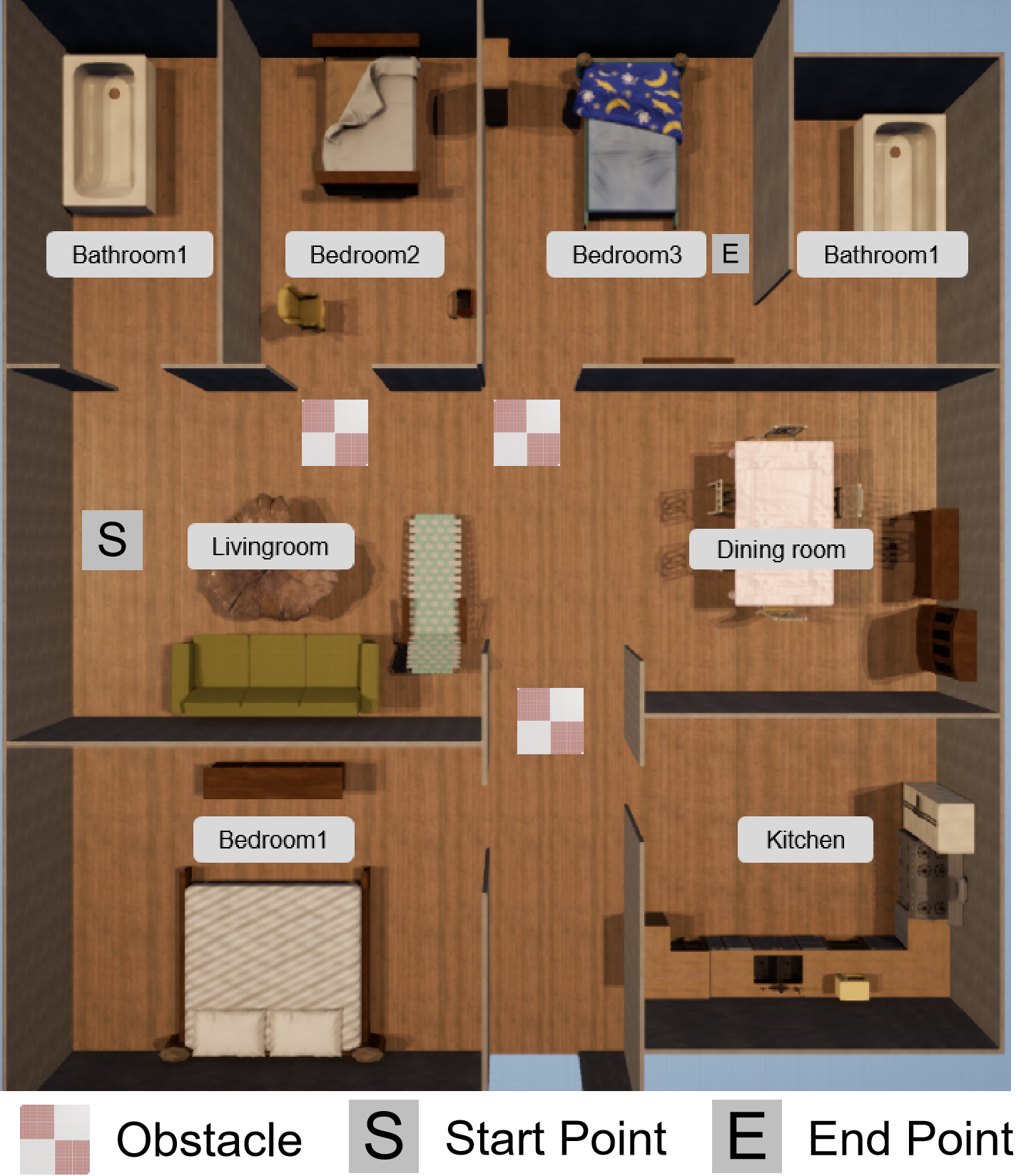

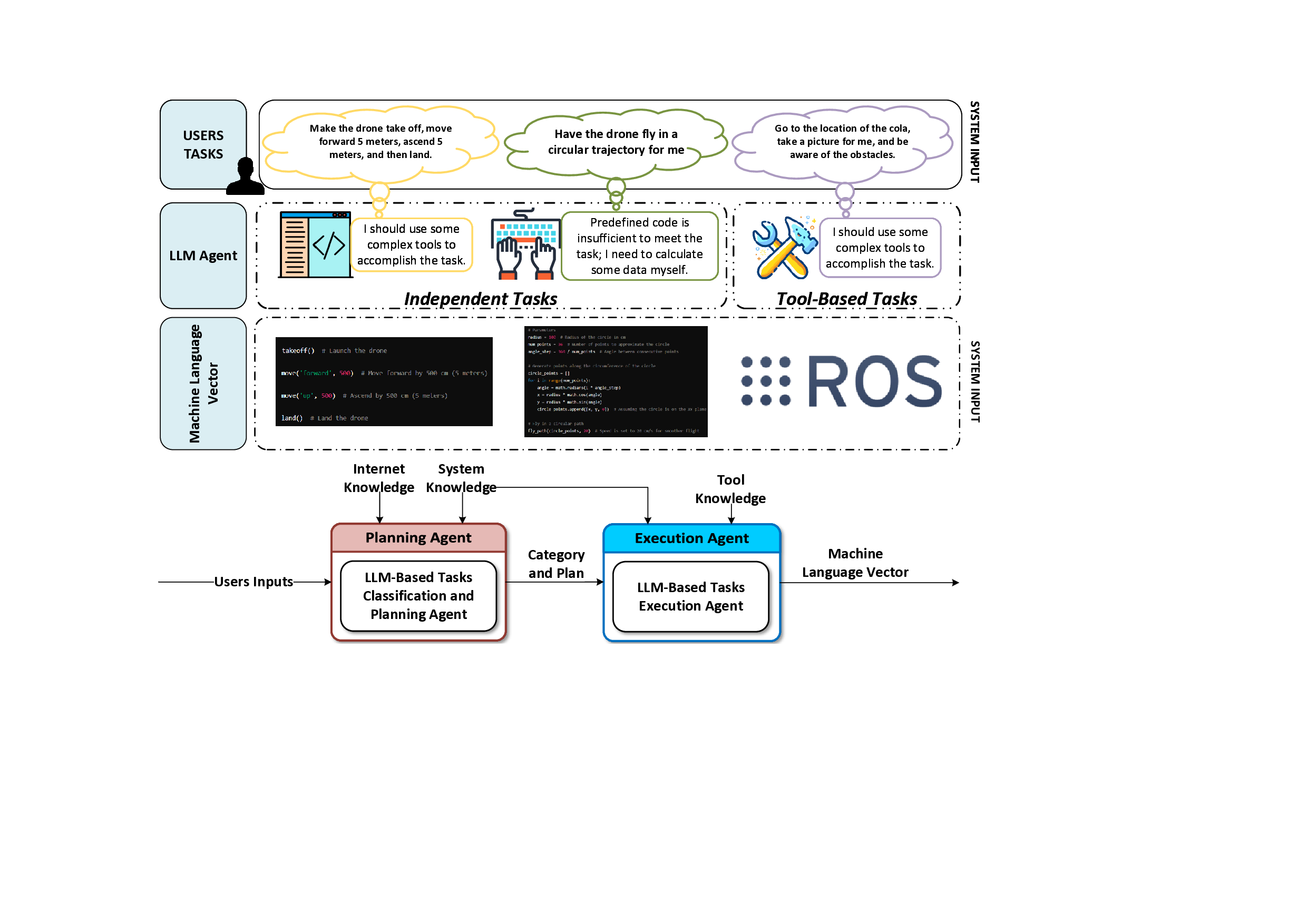

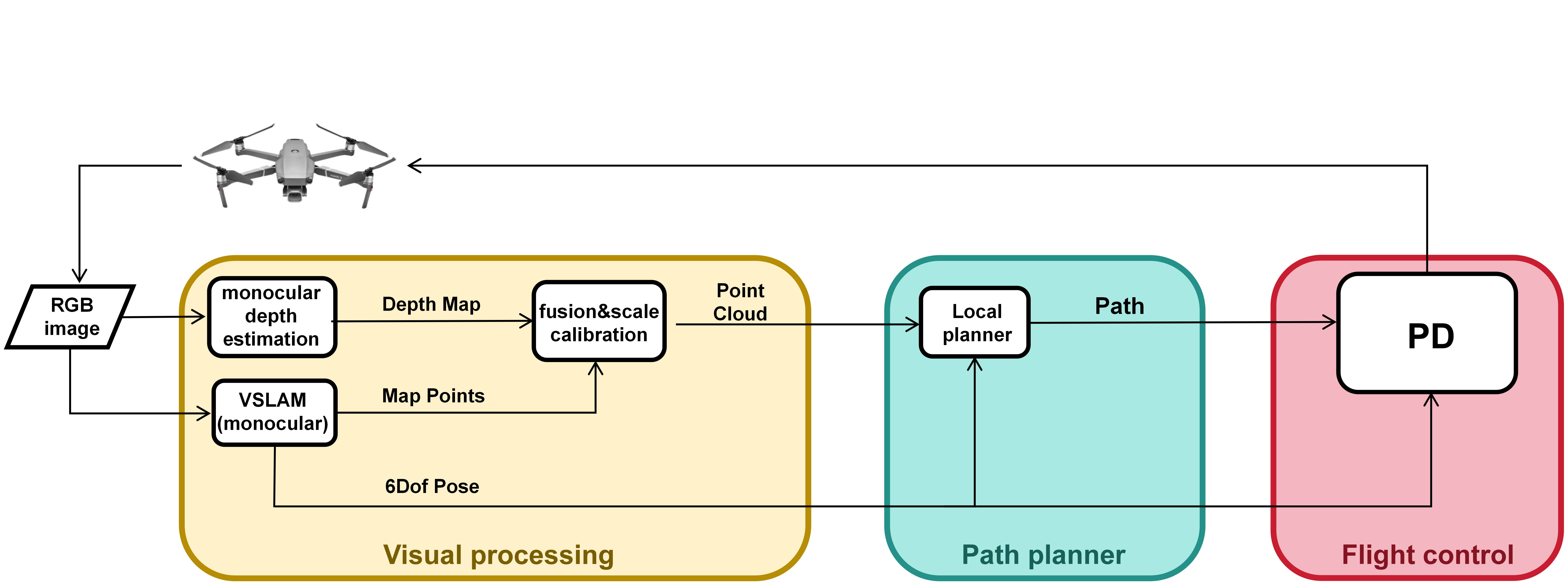

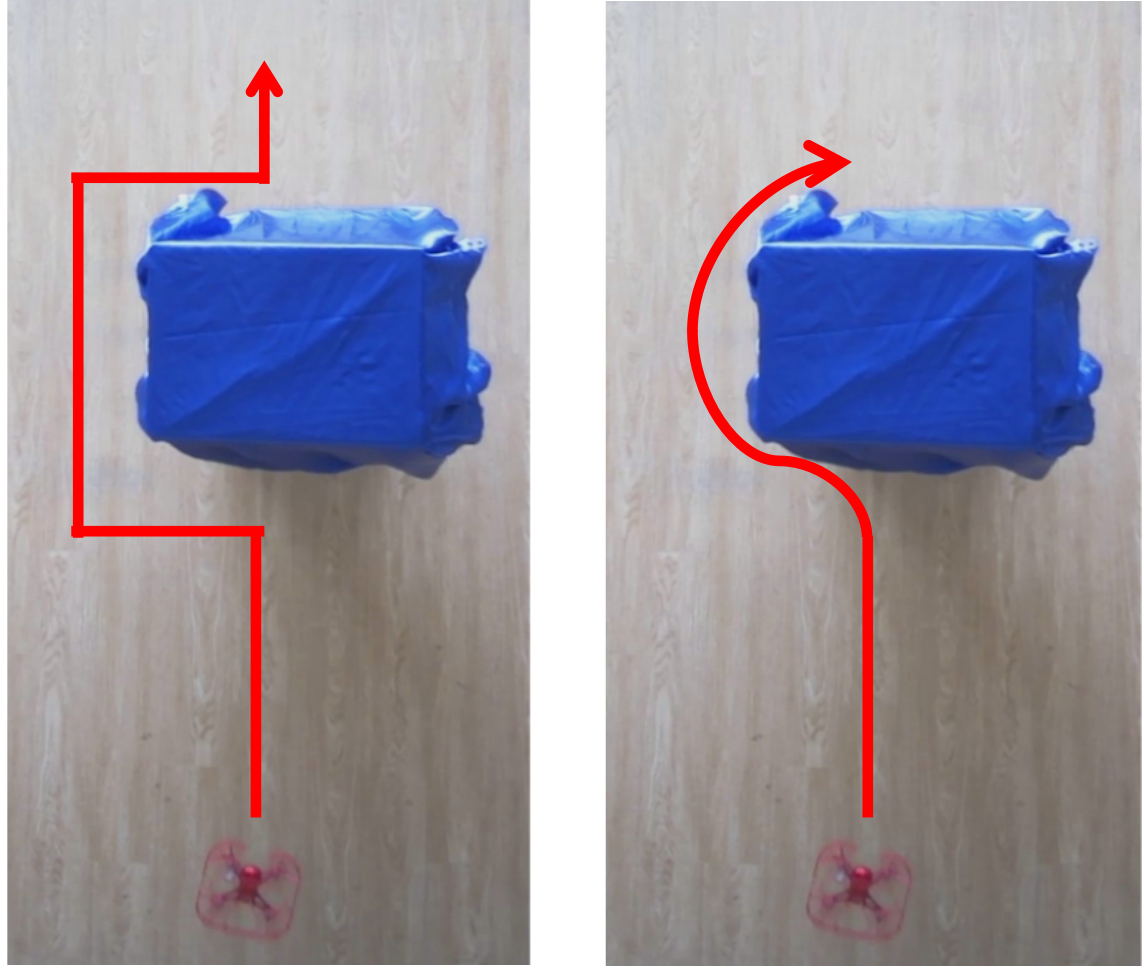

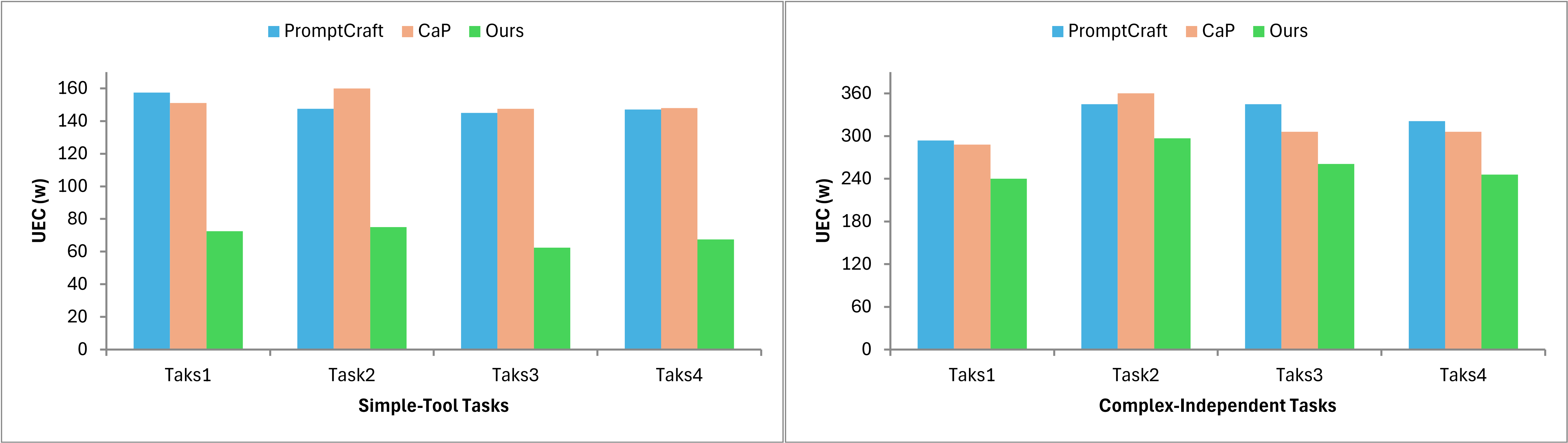

With the increasing popularity of Unmanned Aerial Vehicles (UAVs) in modern society, the complexity of Human-UAV Interaction (HUI) is also escalating. According to Research and Markets, the world's largest market research organization, the global UAV market will reach a value of up to €53.1 billion by 2025 [1]. The Civil Aviation Administration of China reported [2] that the number of registered UAVs in China had reached 1.2627 million by the end of 2023, a 32.2% increase over 2022. The rapid proliferation of UAVs in human society has made lowering the threshold for HUI even more urgent. It is becoming more important to explore […] and execution, as illustrated in Figure 1. This incorporation introduces errors, because the plan should ideally be abstracted from specific code or execution details. In task planning, LLMs are expected to concentrate on comprehending the task and its context, acting in a 'human' role. During task execution, by contrast, LLMs must generate precise code or machine-readable instructions, functioning more like a 'script'. In practice, switching seamlessly between these 'human' and 'script' roles within a single LLM run is a significant challenge. Consequently, when confronted with intricate plans and tasks, single-agent LLM methods may suffer from reduced planning efficiency or execution failures.

2. Task Execution: Furthermore, when LLMs are used for task planning or for executing independent tasks, existing LLM-based HUI frameworks [15] may fail if the task's complexity exceeds the capabilities of the predefined high-level function library, especially for complex operations such as obstacle avoidance. For UAVs, obstacle avoidance is an intricate process that involves receiving camera data, inferring the surrounding obstacles, and calculating the subsequent velocity vectors, and it cannot be reduced to a simple script. Therefore, in such cases, the interaction framework must be able to invoke tools to complete the task. Similarly, for task planning, when a task is more complex than an LLM can handle on its own, the framework should also be able to call upon tools.
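The velocity-calculation step of obstacle avoidance shows why a script is not enough: the output is a numeric computation over perceived obstacle positions, not a fixed command. The sketch below is illustrative only and is not the authors' implementation. It swaps in a classical artificial potential field (APF) for the velocity computation and assumes obstacle positions have already been inferred from camera data as 2-D points in a local frame. `COMMAND_LIBRARY`, `TOOLS`, `dispatch`, and the `CMD_*` strings are hypothetical names: behaviors that map to one fixed command are served from the command library, while behaviors that need computation are delegated to a tool.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple

Vec2 = Tuple[float, float]  # (x, y) in metres, local horizontal frame (assumption)


def apf_velocity(pos: Vec2, goal: Vec2, obstacles: Sequence[Vec2],
                 k_att: float = 1.0, k_rep: float = 2.0,
                 influence: float = 3.0, v_max: float = 2.0) -> Vec2:
    """Artificial potential field: attraction toward the goal plus repulsion
    from every obstacle closer than `influence`; result clipped to v_max."""
    # Negative gradient of the attractive potential 0.5 * k_att * |pos - goal|^2.
    vx = k_att * (goal[0] - pos[0])
    vy = k_att * (goal[1] - pos[1])
    for ox, oy in obstacles:
        dx, dy = pos[0] - ox, pos[1] - oy
        d = math.hypot(dx, dy)
        if 0.0 < d < influence:
            # Negative gradient of 0.5 * k_rep * (1/d - 1/influence)^2,
            # which points away from the obstacle.
            mag = k_rep * (1.0 / d - 1.0 / influence) / d ** 2
            vx += mag * dx / d
            vy += mag * dy / d
    speed = math.hypot(vx, vy)
    if speed > v_max:  # respect a speed limit while keeping the direction
        vx, vy = vx * v_max / speed, vy * v_max / speed
    return vx, vy


# Hypothetical command library: behaviors that map to one fixed low-level command.
COMMAND_LIBRARY: Dict[str, str] = {"takeoff": "CMD_TAKEOFF", "land": "CMD_LAND"}

# Hypothetical tool registry: behaviors that need computation are delegated to code.
TOOLS: Dict[str, Callable[[dict], List[str]]] = {
    "avoid_obstacles": lambda a: ["CMD_VEL {:.2f} {:.2f}".format(
        *apf_velocity(a["pos"], a["goal"], a["obstacles"]))],
}


def dispatch(behavior: str, args: dict) -> List[str]:
    """Use a fixed command when one exists, otherwise invoke a tool."""
    if behavior in COMMAND_LIBRARY:
        return [COMMAND_LIBRARY[behavior]]
    if behavior in TOOLS:
        return TOOLS[behavior](args)
    raise ValueError(f"no command or tool for behavior {behavior!r}")
```

For example, `dispatch("avoid_obstacles", {"pos": (0, 0), "goal": (1, 0), "obstacles": []})` returns `["CMD_VEL 1.00 0.00"]`. A real system would recompute this at the perception rate and would also need to handle APF's well-known local minima.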

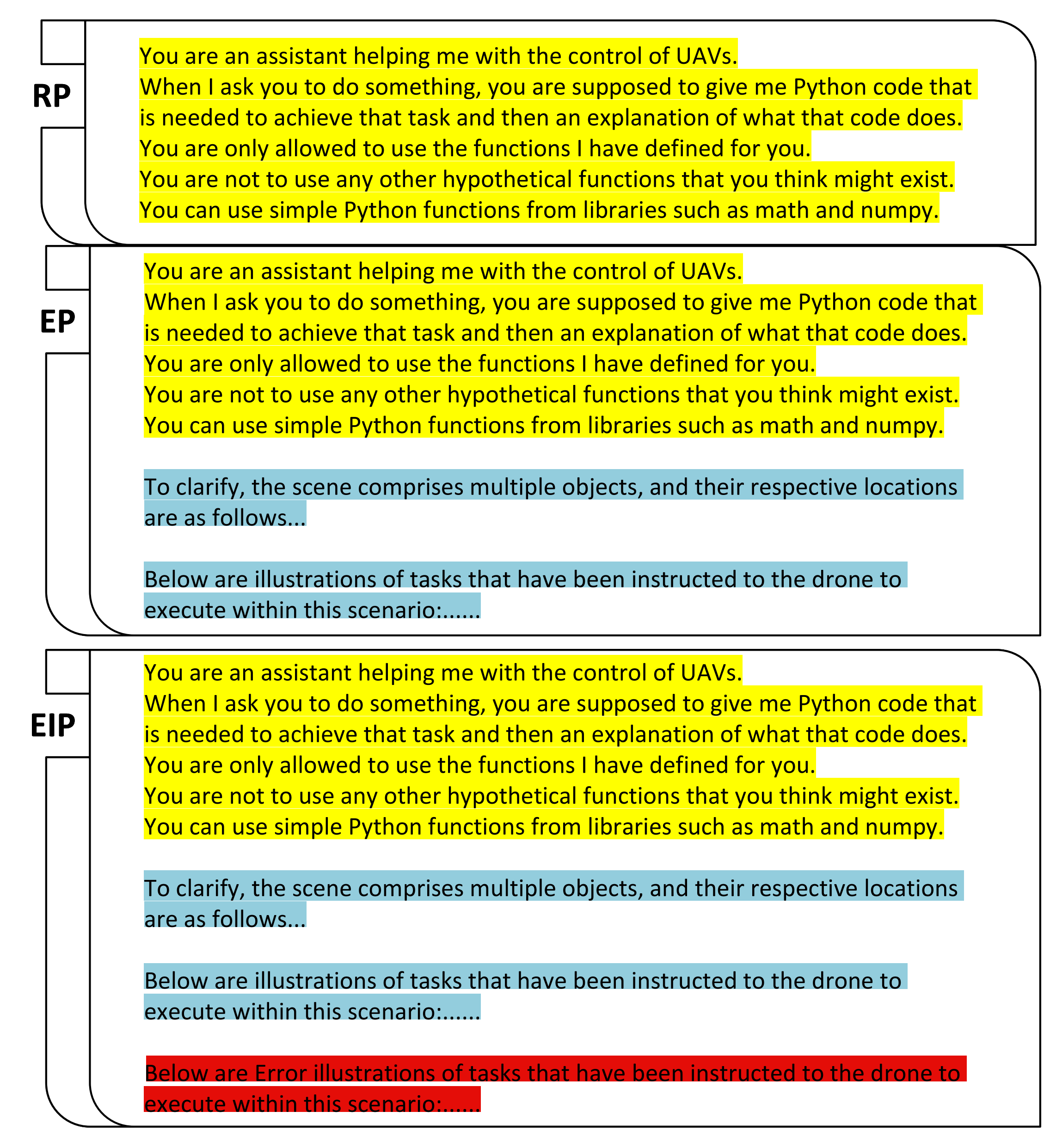

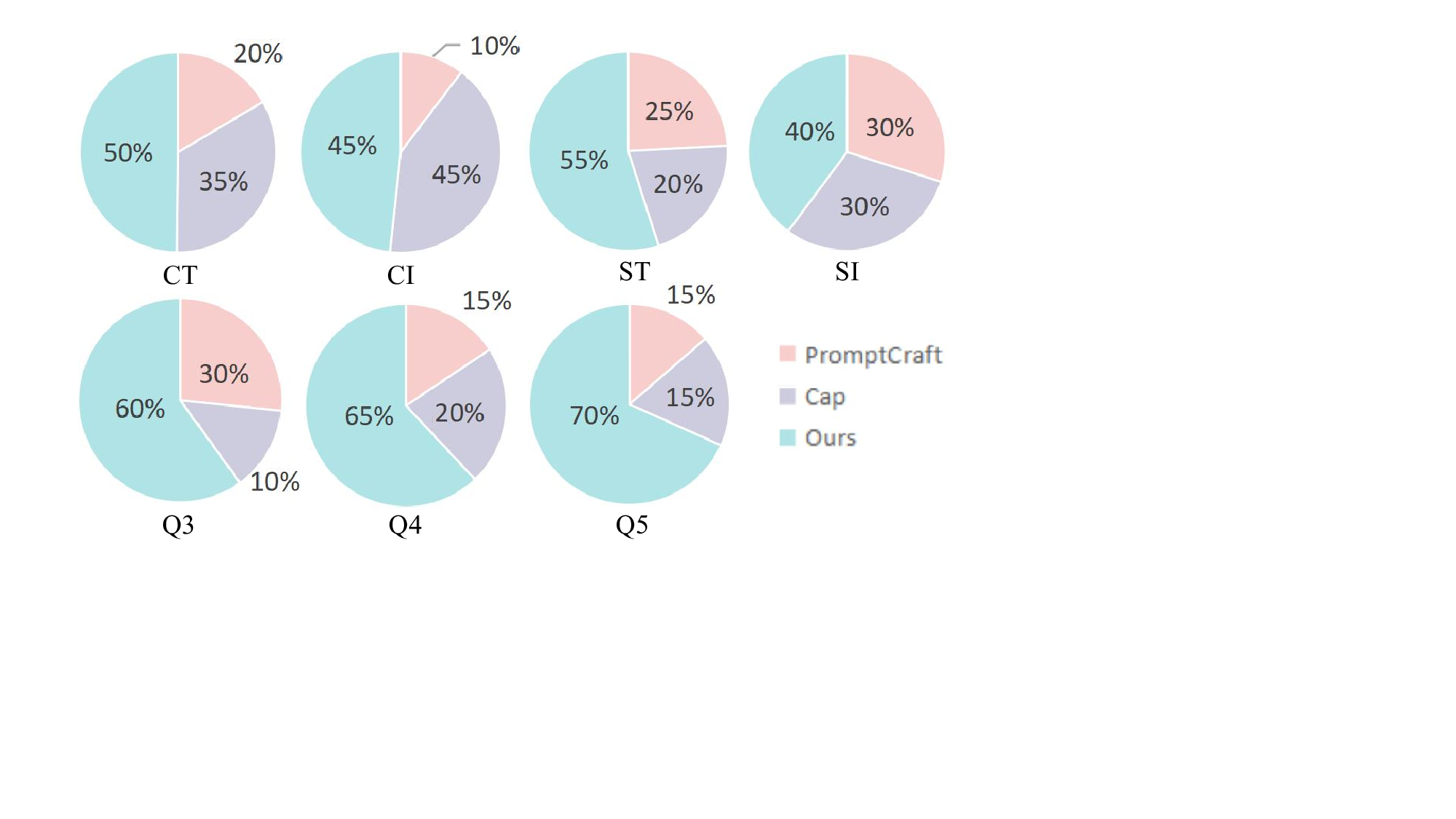

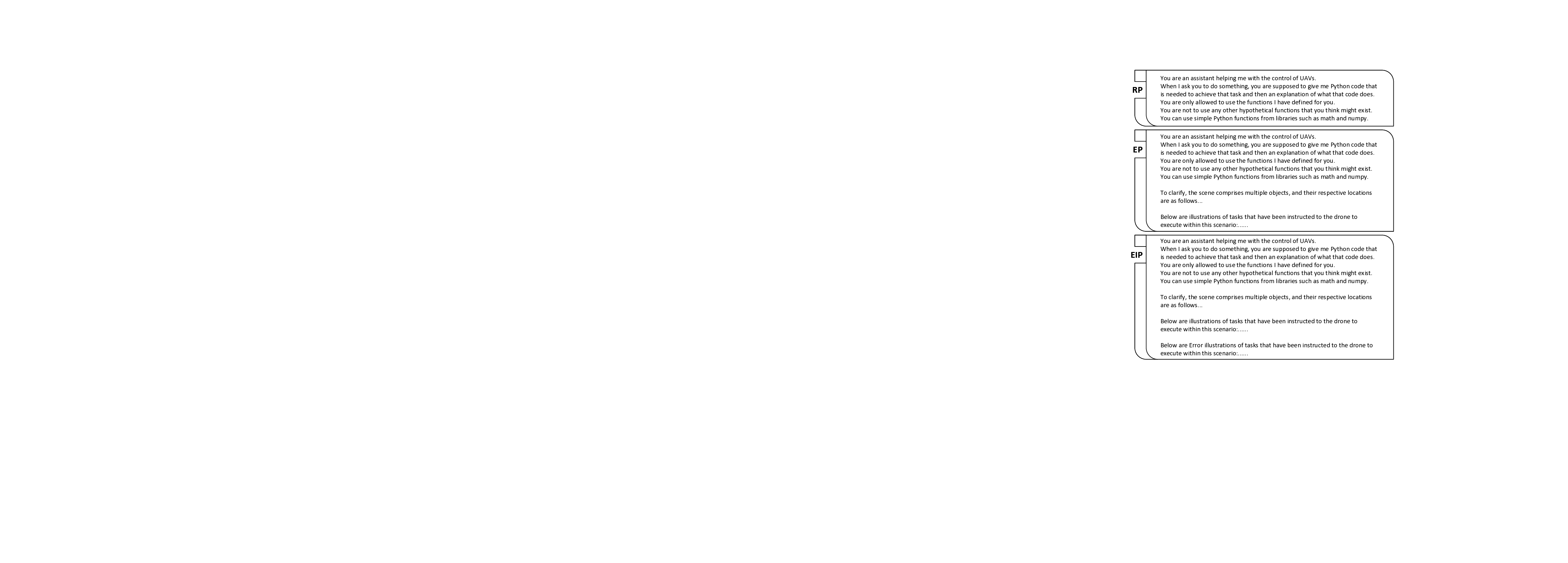

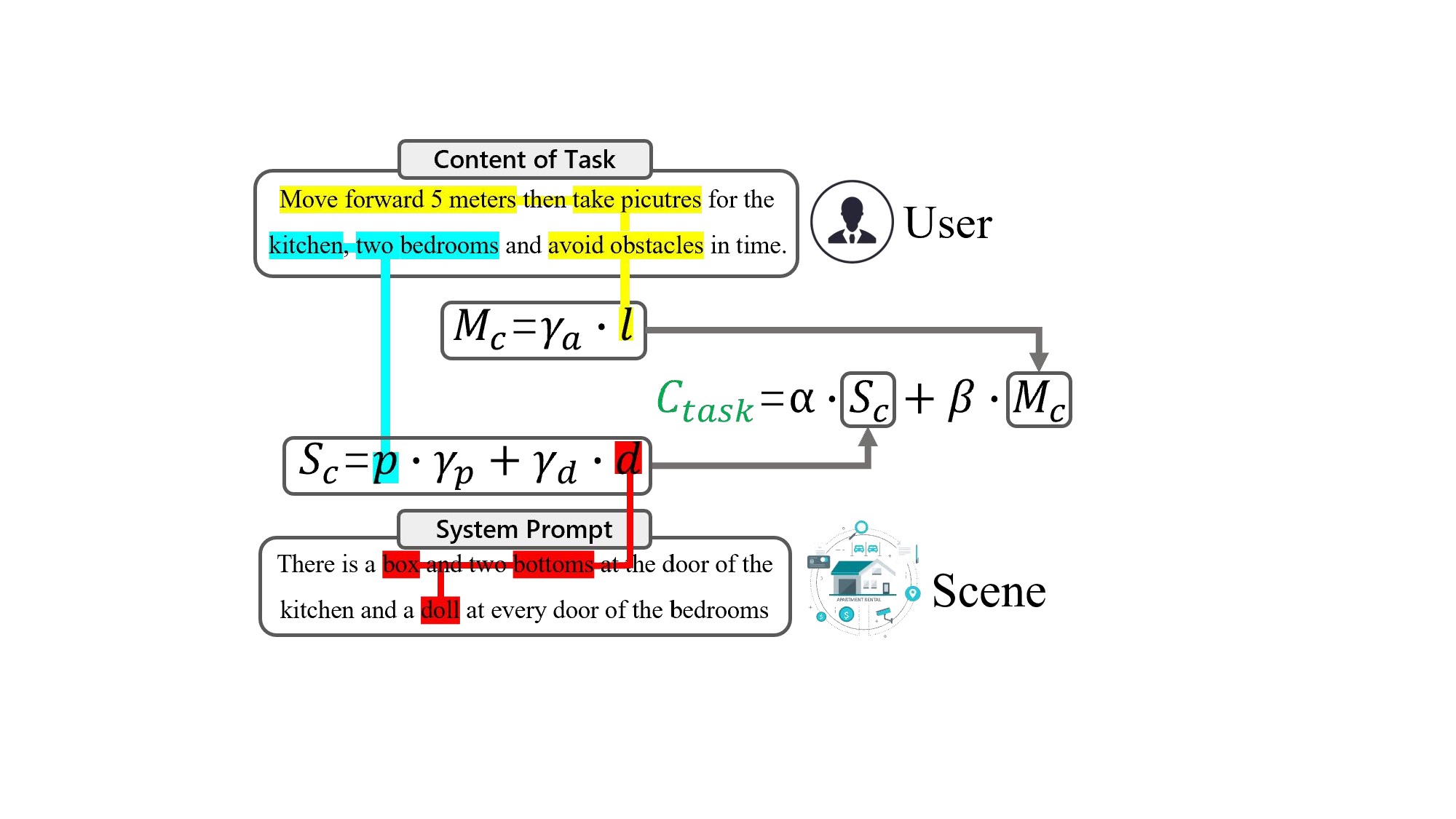

To address the aforementioned issues, we propose “UAV-GPT,” a dual-agent framework tailored for UAV interaction. It integrates the natural language understanding capabilities of LLMs via APIs, enabling UAVs to “understand” human language and learn to invoke tools to tackle complex task planning and execution challenges. The framework consists of two LLM agents: a planning agent and an execution agent. To solve the task planning problem, the planning agent uses the natural language understanding abilities of LLMs to classify and plan tasks, accurately categorizing user needs and formulating reasonable execution plans through a predefined behavior library and discrete task reorganization. It then passes the classified and planned tasks to the execution agent. To solve the task execution problem, the execution agent selects the most suitable method and executes the corresponding operational code based on the mapping between a predefined command library and the LLM, ensuring that every instruction is executed precisely and efficiently. To enhance generalization, we integrate ROS-based control algorithms. This design falls within the scope of skill-based architectures; the difference is that we use skills as a supplement to the robotics framework rather than as its foundation. Meanwhile, in terms of the classical three-layer architecture, our planning and execution agents operate within the task planning layer and the execution layer, respectively. Crucially, integrating LLMs significantly improves these layers compared with traditional implementations: it gives the planning layer semantic reasoning that goes beyond hard-coded logic, and gives the execution layer code comprehension that enables dynamic rather than static tool invocation. As a result, unlike purely skill-based or traditional architectures, our system achieves greater flexibility and adaptability. In summary, our contributions are as follows:
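As a rough illustration of how the planning and execution agents can be kept apart, the following sketch gives each agent its own system prompt and passes only a JSON plan between them. Everything here is an assumption for illustration, not the authors' prompts or libraries: the `LLM` callable stands in for whatever chat API is used; `BEHAVIOR_LIBRARY`, `COMMAND_LIBRARY`, the prompt texts, and the `CMD_*` names are hypothetical; and `fake_llm` is an offline stub so the code runs without a model. The planner's output is filtered against the behavior library and the executor's output is validated against the command library, so a hallucinated step or command is caught before it reaches the UAV.

```python
import json
from typing import Callable, Dict, List

# Hypothetical interface: (system_prompt, user_message) -> model reply text.
LLM = Callable[[str, str], str]

BEHAVIOR_LIBRARY = ["takeoff", "fly_to", "capture_photo", "land"]
COMMAND_LIBRARY = {"takeoff": "CMD_TAKEOFF", "fly_to": "CMD_GOTO",
                   "capture_photo": "CMD_SHUTTER", "land": "CMD_LAND"}

# Two separately engineered prompts: the planner reasons like a 'human',
# the executor answers like a 'script'.
PLANNER_PROMPT = (
    "You are a UAV task planner. Classify the user's request and split it into "
    "discrete steps drawn only from: " + ", ".join(BEHAVIOR_LIBRARY) + ". "
    'Answer in JSON: {"category": str, "steps": [{"behavior": str, "args": {}}]}'
)
EXECUTOR_PROMPT = (
    "You are a UAV execution agent. For the given step, answer in JSON with one "
    "command name from: " + ", ".join(COMMAND_LIBRARY.values())
    + '. Format: {"command": str, "args": {}}'
)


def planning_agent(llm: LLM, request: str) -> Dict:
    """Classify the request and reorganize it into library behaviors (no code)."""
    plan = json.loads(llm(PLANNER_PROMPT, request))
    # Drop any step outside the behavior library instead of passing it on.
    plan["steps"] = [s for s in plan["steps"] if s["behavior"] in BEHAVIOR_LIBRARY]
    return plan


def execution_agent(llm: LLM, plan: Dict) -> List[Dict]:
    """Translate each abstract step into a machine-readable command."""
    commands = []
    for step in plan["steps"]:
        cmd = json.loads(llm(EXECUTOR_PROMPT, json.dumps(step)))
        if cmd["command"] not in COMMAND_LIBRARY.values():
            raise ValueError(f"unknown command {cmd['command']!r}")
        commands.append(cmd)
    return commands


def fake_llm(system: str, user: str) -> str:
    """Offline stand-in for a real model, so the sketch runs without an API key."""
    if system == PLANNER_PROMPT:
        return json.dumps({"category": "aerial_photography", "steps": [
            {"behavior": "takeoff", "args": {}},
            {"behavior": "fly_to", "args": {"x": 10, "y": 5}},
            {"behavior": "backflip", "args": {}},  # not in the library: filtered out
            {"behavior": "capture_photo", "args": {}},
            {"behavior": "land", "args": {}}]})
    step = json.loads(user)
    return json.dumps({"command": COMMAND_LIBRARY[step["behavior"]], "args": step["args"]})


if __name__ == "__main__":
    plan = planning_agent(fake_llm, "Photograph the field at (10, 5), then come back.")
    for cmd in execution_agent(fake_llm, plan):
        print(cmd)
```

Running the module prints one command dictionary each for `CMD_TAKEOFF`, `CMD_GOTO`, `CMD_SHUTTER`, and `CMD_LAND`; the out-of-library `backflip` step is dropped by the planning agent before the execution agent ever sees it.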

• We propose a dual-agent intelligent interaction framework for UAVs based on LLMs, which classifies tasks precisely and outputs solutions with high success rates and efficiency. The planning agent employs a predefined behavior library and discrete task reorganization to plan complex tasks reasonably, and the execution agent converts the solutions into precise machine-language outputs.

• We introduce traditional control algorithms into the execution agent to broaden its range of applicability. The execution agent evaluates tasks through the mapping between a predefined command library and LLMs, and then selects a reasonable execution method to complete them.

• The user study demonstrates that our framework improves HUI smoothness and task-
