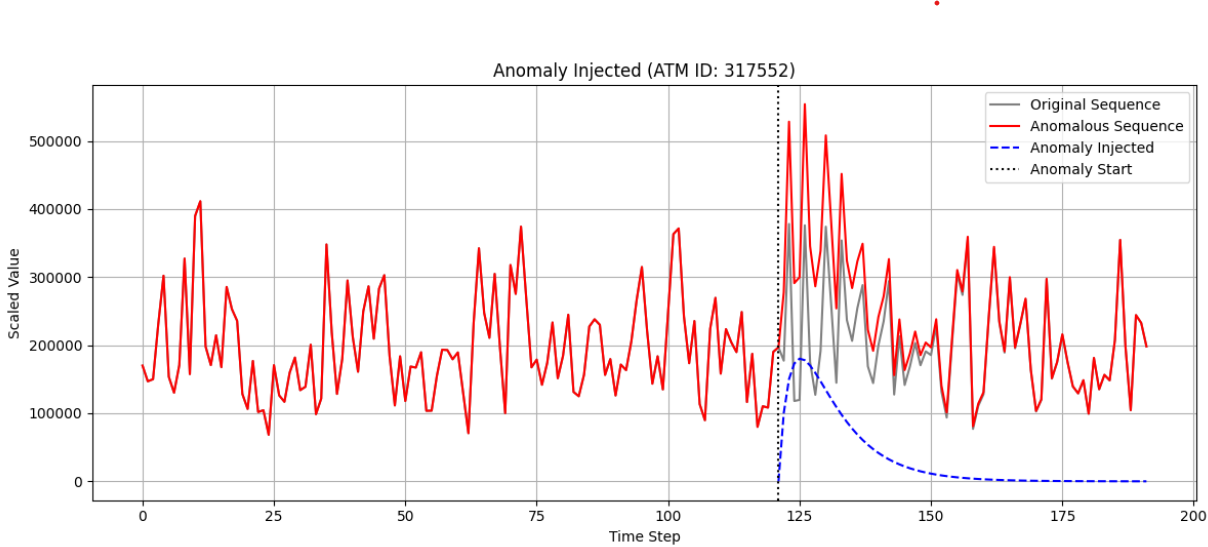

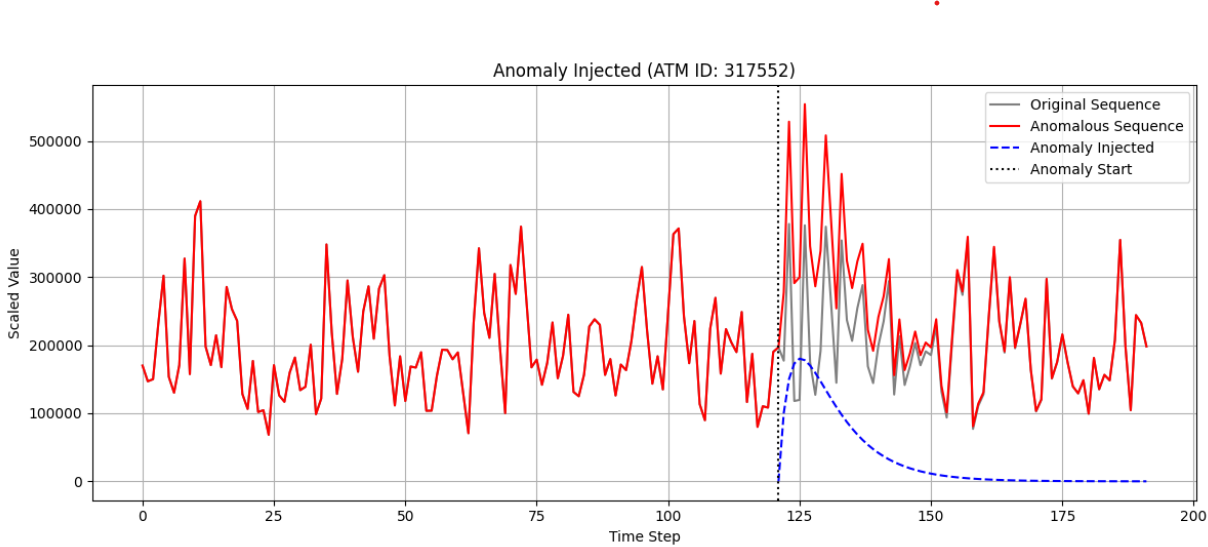

Reliable forecasting of multivariate time series under anomalous conditions is crucial in applications such as ATM cash logistics, where sudden demand shifts can disrupt operations. Modern deep forecasters achieve high accuracy on normal data but often fail when distribution shifts occur. We propose Weighted Contrastive Adaptation (WECA), a weighted contrastive objective that aligns normal and anomaly-augmented representations, preserving anomaly-relevant information while maintaining consistency under benign variations. Evaluations on a nationwide ATM transaction dataset with domain-informed anomaly injection show that WECA improves SMAPE on anomaly-affected data by 6.1 percentage points compared to a normally trained baseline, with negligible degradation on normal data. These results indicate that WECA enhances forecasting reliability under anomalies without sacrificing performance during regular operations.

Accurate forecasting of ATM cash withdrawals is critical for cash logistics and service continuity [1,2]. Modern ATM networks produce multivariate time series with strong seasonal patterns and occasional abrupt shifts due to events such as crises, outages, or local disruptions. These anomalies induce distribution shifts that can degrade forecaster performance, leading to shortages or inefficiencies.

State-of-the-art deep forecasters (e.g., Transformers, frequency-domain models) [3,4,5,6] perform well on normal data but struggle to maintain reliable performance when the data distribution shifts due to rare or anomalous events. Contrastive learning [7,8] can improve representation generalization by aligning similar inputs, but strict alignment of normal and anomaly-augmented samples may suppress anomaly signals needed for accurate forecasts in anomalous situations. A method is required that balances stability with sensitivity to anomalous changes, meaning it should forecast accurately during normal periods and still perform well when the data suddenly changes due to anomalies.

This work is partially supported by Vinnova, Sweden.

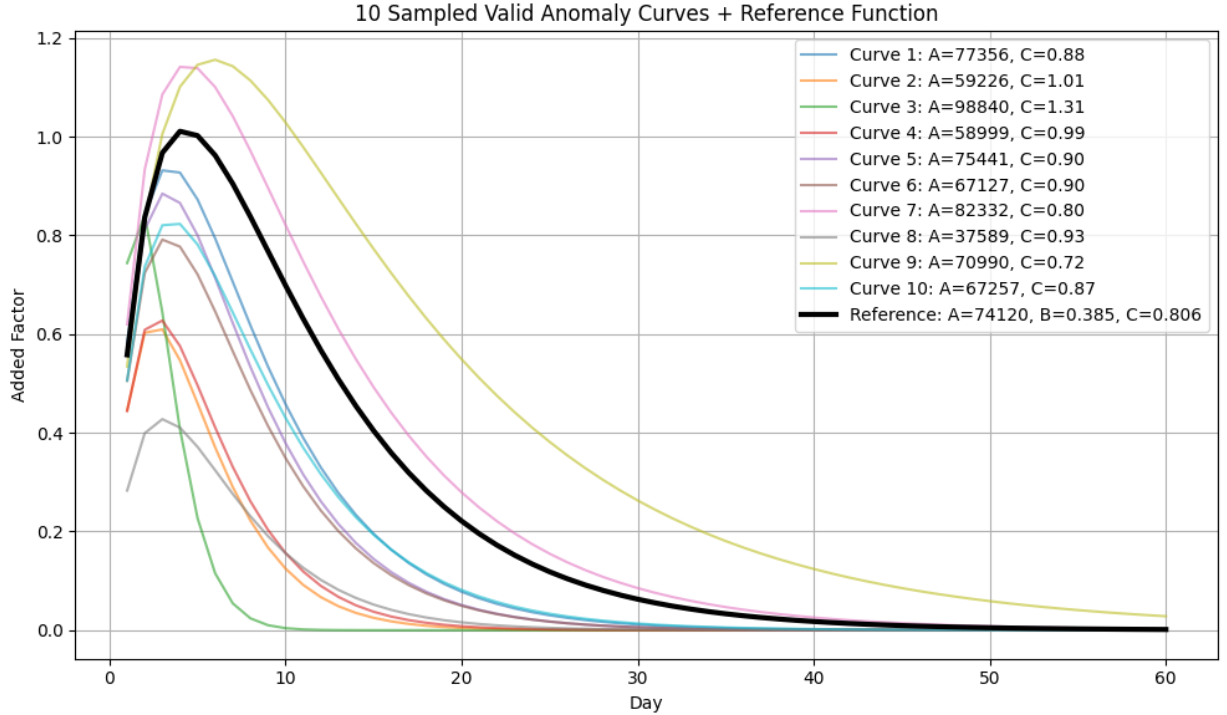

We propose Weighted-Contrastive Anomaly-Aware Adaptation (WECA), a training objective that applies weighted contrastive learning to softly align normal and augmented sequences, preserving anomaly-relevant distinctions. Experiments on a large-scale ATM dataset (1.3k ATMs, two years) show that WECA improves SMAPE on anomaly-affected data while maintaining accuracy on normal periods.
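SMAPE has several variants in the literature, and this excerpt does not state which one is used. The following is a minimal sketch, assuming the common form whose denominator is the mean of the absolute actual and forecast values; the function name `smape` and the `eps` guard against division by zero are illustrative choices, not taken from the paper.

```python
import numpy as np

def smape(y_true, y_pred, eps=1e-8):
    """Symmetric MAPE in percent (assumed variant: denominator (|y| + |y_hat|) / 2)."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    # Mean of absolute actual and forecast values, element-wise
    denom = (np.abs(y_true) + np.abs(y_pred)) / 2.0
    # eps (an assumption) avoids 0/0 when both actual and forecast are zero
    ratio = np.abs(y_pred - y_true) / np.maximum(denom, eps)
    return 100.0 * float(np.mean(ratio))
```

Under this variant the score is bounded between 0 and 200, so an improvement quoted in percentage points is a difference between two such scores.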

Contributions: (1) Formalize anomaly-aware forecasting as learning representations that are both invariant to benign variations and sensitive to anomalies. (2) Introduce a weighted contrastive loss that controls alignment strength, enabling a tunable invariance-sensitivity trade-off. (3) Demonstrate improved accuracy under distribution shifts on real-world cash-demand forecasting data without degrading normal performance.

Time-series forecasting has evolved from classical models to deep neural architectures capable of capturing nonlinear and long-range dependencies. RNNs improved sequential modeling but suffered from vanishing gradients and slow recurrence [9]. These limitations motivated the adoption of Transformer-based forecasters, which use self-attention to efficiently model long-horizon dependencies [10,11]. Informer [12] and Autoformer [13] further reduced the cost of attention, making large-scale forecasting feasible. More recently, frequency-domain models such as TimesNet [14] and FEDformer [15] leverage Fourier transforms to extract seasonal patterns with high efficiency, while foundation models like MOMENT [16] pre-train general encoders for transfer learning across datasets. In parallel, self-supervised approaches such as TS2Vec [17], SoftCLT [18], and TimesURL [19] learn robust representations.

Despite these architectural advances, forecasting models trained on normal data often struggle with distribution shifts caused by rare or anomalous events. A straightforward approach is to fine-tune pre-trained forecasters on anomaly-augmented data, but this risks significantly degrading performance on normal periods [20,21]. Representation learning provides a principled way to learn invariant features that generalize across changing conditions [5]. Contrastive learning [7] learns robust representations by aligning positive pairs in the latent space, making features invariant to distribution shifts. In forecasting, however, anomalies often change future values, so forcing full alignment between normal and anomaly-augmented samples can remove useful anomaly information.

We propose to control how strongly pairs are aligned. Mild perturbations receive strong alignment, while severe anomalies are only partially aligned, preserving anomaly-relevant signals. This leads to representations that remain stable for normal data but still adapt when severe distribution shifts occur.
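The idea of severity-dependent alignment can be illustrated with a small sketch. The exact WECA loss is not given in this excerpt, so the code below assumes an InfoNCE-style contrastive loss in which each positive pair's term is scaled by a weight in [0, 1]. The function names, the scalar `severity` score, the exponential mapping with rate `alpha`, and the `temperature` value are all hypothetical illustrations, not the authors' formulation.

```python
import numpy as np

def severity_to_weight(severity, alpha=1.0):
    """Hypothetical mapping: mild perturbations -> weight near 1, severe -> near 0."""
    return np.exp(-alpha * np.asarray(severity, dtype=float))

def weighted_alignment_loss(z_normal, z_aug, weights, temperature=0.1, eps=1e-12):
    """Assumed weighted InfoNCE-style loss.

    z_normal, z_aug: (B, D) representations of normal and augmented windows,
    where row i of z_aug is the augmented view of row i of z_normal.
    weights: (B,) per-pair alignment strength in [0, 1].
    """
    z_normal = np.asarray(z_normal, dtype=float)
    z_aug = np.asarray(z_aug, dtype=float)
    weights = np.asarray(weights, dtype=float)
    # L2-normalize so the dot product is cosine similarity
    zn = z_normal / (np.linalg.norm(z_normal, axis=1, keepdims=True) + eps)
    za = z_aug / (np.linalg.norm(z_aug, axis=1, keepdims=True) + eps)
    logits = zn @ za.T / temperature                     # (B, B) similarities
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    pos = np.diag(log_prob)                              # log-prob of each true pair
    # Each pair contributes in proportion to its weight
    return float(-(weights * pos).mean())
```

With all weights set to 1 this reduces to a standard one-directional InfoNCE loss, which corresponds to strict alignment; lowering the weight of a severely perturbed pair reduces the pressure to pull that pair together in latent space.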

Our goal is to train a forecaster that remains accurate under normal operating conditions while also performing well when the data distribution shifts due to anomalous events. Instead of adapting the model after anomalies are observed, we design a training objective that encourages the encoder to learn representations that are both consistent under benign variations and sensitive to anomaly-relevant changes from the start.

Formally, let $x \in \mathbb{R}^{T \times C}$ denote an input window of $T$ timesteps with $C$ variables, and $y \in \mathbb{R}^{H \times C}$ the $H$-step forecast horizon. A forecasting model consists of an encoder $g_\phi : \mathbb{R}^{T \times C} \to \mathbb{R}^{T' \times D}$, which maps the input into $T'$ latent representations of dimension $D$, and a decoder $h_\psi : \mathbb{R}^{T' \times D} \to \mathbb{R}^{H \times C}$ that produces the forecast $\hat{y} = h_\psi(g_\phi(x))$.
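To make the shapes in this definition concrete, the following sketch traces a single window through an encoder and a decoder. The linear maps are hypothetical stand-ins chosen only so the dimensions work out; the excerpt does not specify the actual architecture, and the sizes `T`, `C`, `H`, `T_lat` (for T′), and `D` are arbitrary illustrative values.

```python
import numpy as np

rng = np.random.default_rng(0)

T, C, H = 48, 3, 12   # input length, number of variables, forecast horizon (illustrative)
T_lat, D = 12, 16     # T' latent steps and latent dimension (illustrative)

# Hypothetical random linear weights standing in for learned parameters phi and psi
W_time = rng.normal(size=(T_lat, T)) / np.sqrt(T)
W_feat = rng.normal(size=(C, D)) / np.sqrt(C)
W_out_time = rng.normal(size=(H, T_lat)) / np.sqrt(T_lat)
W_out_feat = rng.normal(size=(D, C)) / np.sqrt(D)

def encoder(x):
    """Stand-in for g_phi: maps (T, C) to (T', D)."""
    return np.tanh(W_time @ x @ W_feat)

def decoder(z):
    """Stand-in for h_psi: maps (T', D) to (H, C)."""
    return W_out_time @ z @ W_out_feat

x = rng.normal(size=(T, C))   # one input window
z = encoder(x)                # latent representation, shape (T', D)
y_hat = decoder(z)            # forecast, shape (H, C)
```

A contrastive objective of the kind discussed here would act on `z`, while the forecasting loss compares `y_hat` with the target `y`.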

We propose Weighted Contrastive Anomaly-Aware Forecasting (WECA), a weighted contrastive objective that aligns representations of normal and anomaly-augmented inputs while preserving anomaly-specific information. Unlike standard contrastive learnin
