The latency and power consumption of large language models (LLMs) are major constraints when serving them across a wide spectrum of hardware platforms, from mobile edge devices to cloud GPU clusters. Benchmarking is crucial for optimizing efficiency in both model deployment and next-generation model development. To address this need, we opensource a simple profiling tool, ELANA, for evaluating LLMs. ELANA is designed as a lightweight, academic-friendly profiler for analyzing model size, key-value (KV) cache size, prefilling latency (Time-to-first-token, TTFT), generation latency (Time-per-output-token, TPOT), and end-to-end latency (Time-to-last-token, TTLT) of LLMs on both multi-GPU and edge GPU platforms. It supports all publicly available models on Hugging Face and offers a simple command-line interface, along with optional energy consumption logging. Moreover, ELANA is fully compatible with popular Hugging Face APIs and can be easily customized or adapted to compressed or low bit-width models, making it ideal for research on efficient LLMs or for small-scale proof-of-concept studies. We release the ELANA profiling tool at: https://github.com/enyac-group/Elana.

Numerous emerging applications are powered by large language models (LLMs) today. Yet, serving models with parameters on the order of billions (e.g., 100B) poses significant challenges in meeting the required inference latency, memory, and energy costs. Extensive research has investigated quantization [Xiao et al., 2023, Lin et al., 2024a,b, Chiang et al., 2025a] and compression [Wang et al., 2025, Lin et al., 2025, Chiang et al., 2025b] techniques to reduce inference latency and model size for deployment. However, these research directions primarily focus on algorithmic design while overlooking energy consumption in their evaluations. Furthermore, existing profiling benchmarks and results depend heavily on tools developed individually by researchers, and a unified and fair profiling framework is still lacking.

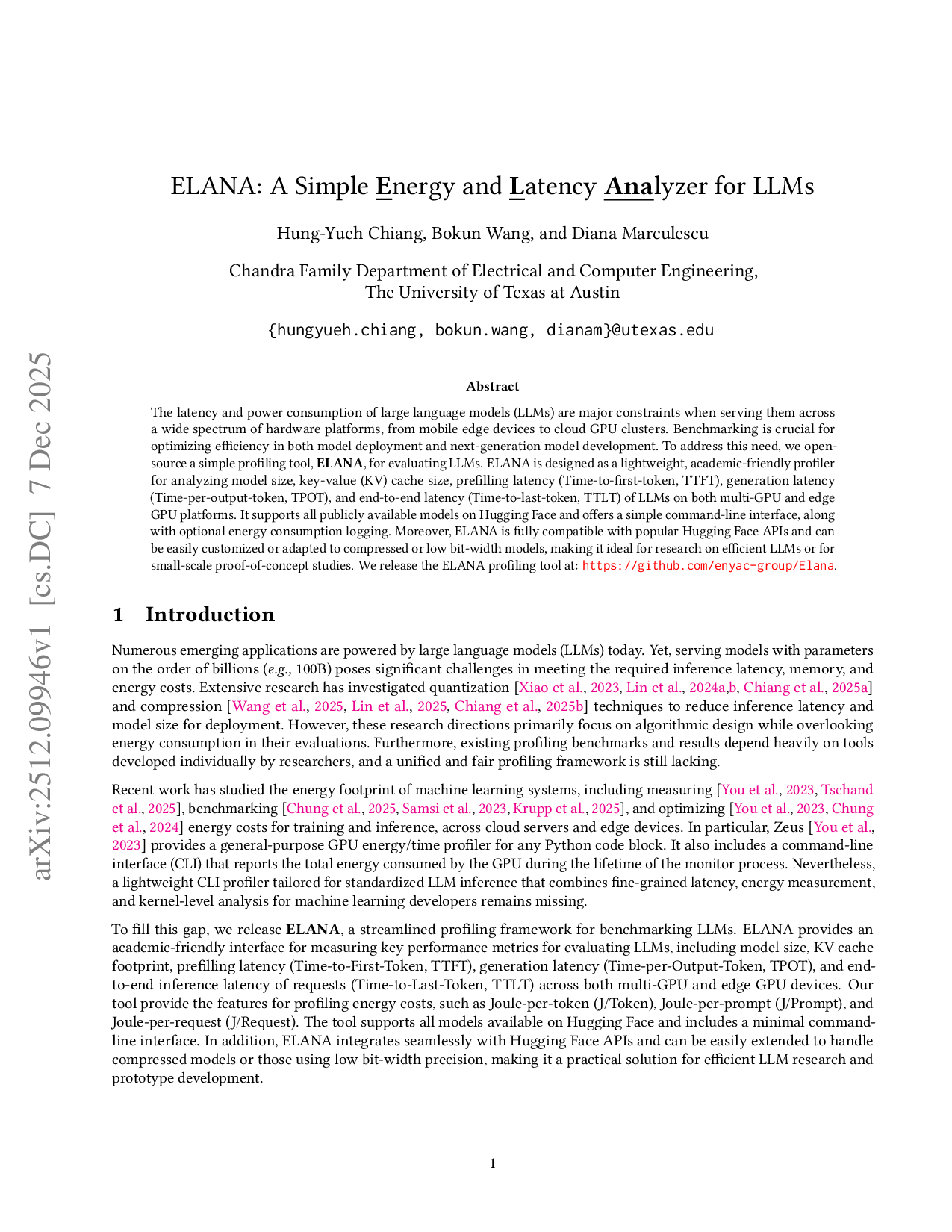

Recent work has studied the energy footprint of machine learning systems, including measuring [You et al., 2023, Tschand et al., 2025], benchmarking [Chung et al., 2025, Samsi et al., 2023, Krupp et al., 2025], and optimizing [You et al., 2023, Chung et al., 2024] energy costs for training and inference, across cloud servers and edge devices. In particular, Zeus [You et al., 2023] provides a general-purpose GPU energy/time profiler for any Python code block. It also includes a command-line interface (CLI) that reports the total energy consumed by the GPU during the lifetime of the monitor process. Nevertheless, a lightweight CLI profiler tailored for standardized LLM inference that combines fine-grained latency, energy measurement, and kernel-level analysis for machine learning developers remains missing.

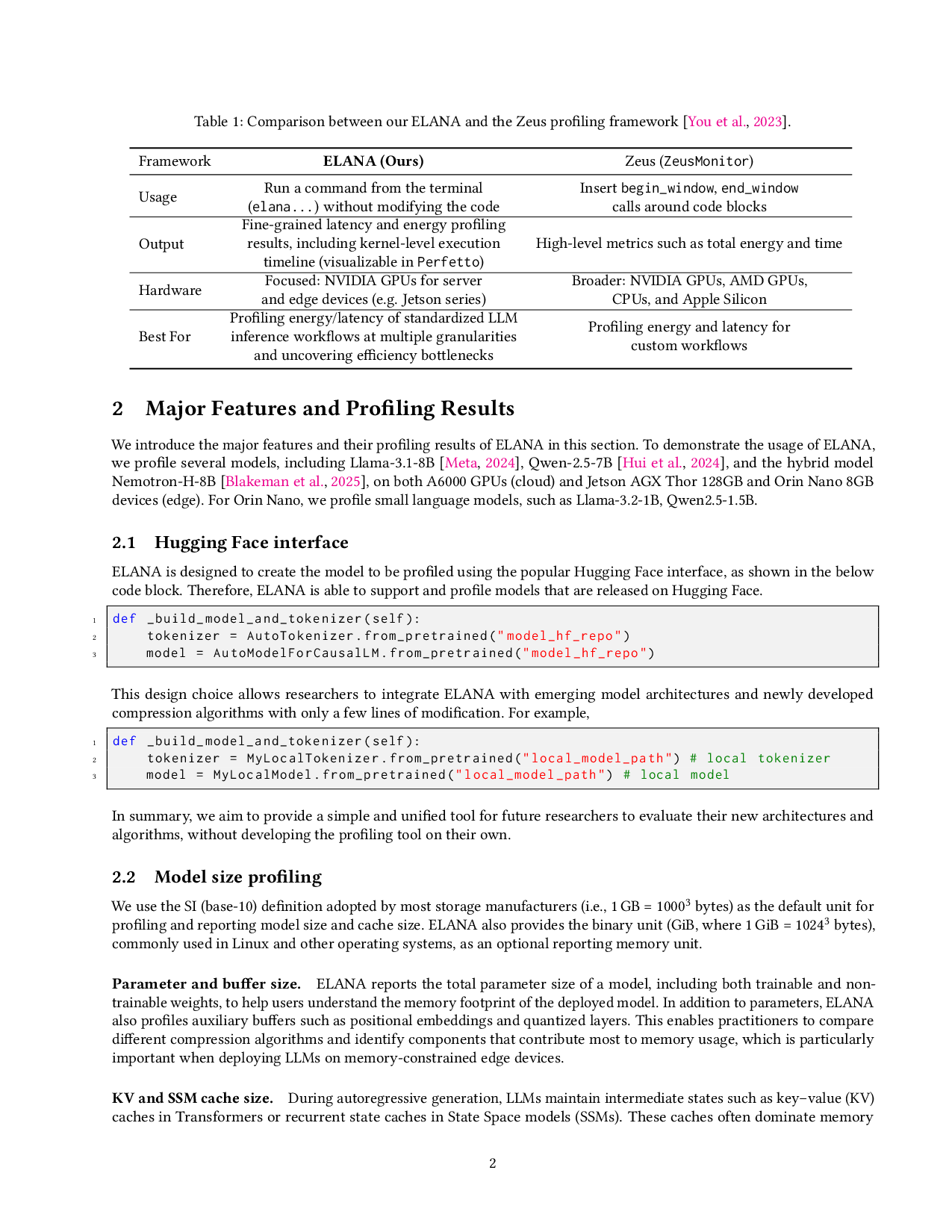

To fill this gap, we release ELANA, a streamlined profiling framework for benchmarking LLMs. ELANA provides an academic-friendly interface for measuring key performance metrics for evaluating LLMs, including model size, KV cache footprint, prefilling latency (Time-to-First-Token, TTFT), generation latency (Time-per-Output-Token, TPOT), and endto-end inference latency of requests (Time-to-Last-Token, TTLT) across both multi-GPU and edge GPU devices. Our tool provide the features for profiling energy costs, such as Joule-per-token (J/Token), Joule-per-prompt (J/Prompt), and Joule-per-request (J/Request). The tool supports all models available on Hugging Face and includes a minimal commandline interface. In addition, ELANA integrates seamlessly with Hugging Face APIs and can be easily extended to handle compressed models or those using low bit-width precision, making it a practical solution for efficient LLM research and prototype development. We introduce the major features and their profiling results of ELANA in this section. To demonstrate the usage of ELANA, we profile several models, including Llama-3.1-8B [Meta, 2024], Qwen-2.5-7B [Hui et al., 2024], and the hybrid model Nemotron-H-8B [Blakeman et al., 2025], on both A6000 GPUs (cloud) and Jetson AGX Thor 128GB and Orin Nano 8GB devices (edge). For Orin Nano, we profile small language models, such as Llama-3.2-1B, Qwen2.5-1.5B.

ELANA is designed to create the model to be profiled using the popular Hugging Face interface, as shown in the below code block. Therefore, ELANA is able to support and profile models that are released on Hugging Face. In summary, we aim to provide a simple and unified tool for future researchers to evaluate their new architectures and algorithms, without developing the profiling tool on their own.

We use the SI (base-10) definition adopted by most storage manufacturers (i.e., 1 GB = 1000 3 bytes) as the default unit for profiling and reporting model size and cache size. ELANA also provides the binary unit (GiB, where 1 GiB = 1024 3 bytes), commonly used in Linux and other operating systems, as an optional reporting memory unit.

Parameter and buffer size. ELANA reports the total parameter size of a model, including both trainable and nontrainable weights, to help users understand the memory footprint of the deployed model. In addition to parameters, ELANA also profiles auxiliary buffers such as positional embeddings and quantized layers. This enables practitioners to compare different compression algorithms and identify components that contribute most to memory usage, which is particularly important when deploying LLMs on memory-constrained edge devices.

KV and SSM cache size.

Time-to-first-token (TTFT, prefilling). TTFT measures the latency of the prefilling (i.e., prompting) stage, where the entire input prompt is processed before the model generates the first output token. This metric reflects the latency of the initial forward pass, and is particularly important for interactive applications such as chat assistants or long-context summarization. ELANA provides accurate TTFT measurements by isolating the prefilling stage and reporting both raw latency and averaged statistics over multiple runs. We prefill the model with random input prompts and profile the latency of TTFT. Since input prompt lengths vary in real a

This content is AI-processed based on open access ArXiv data.