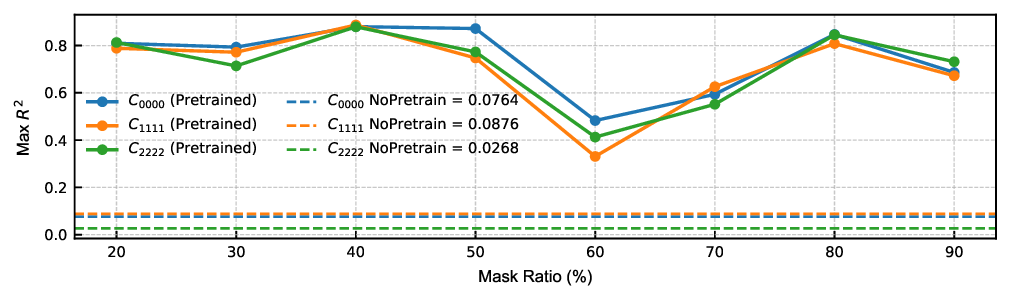

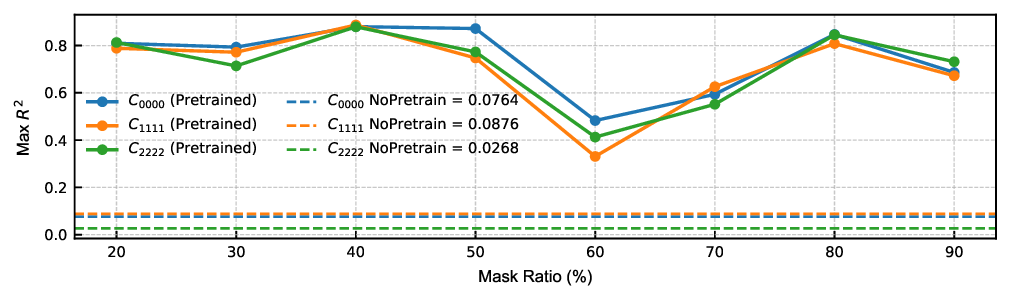

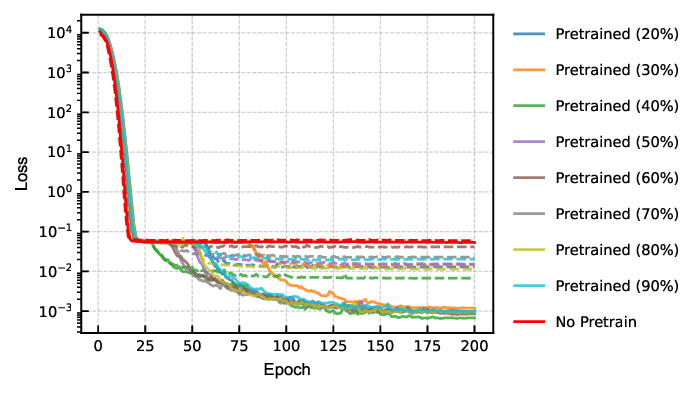

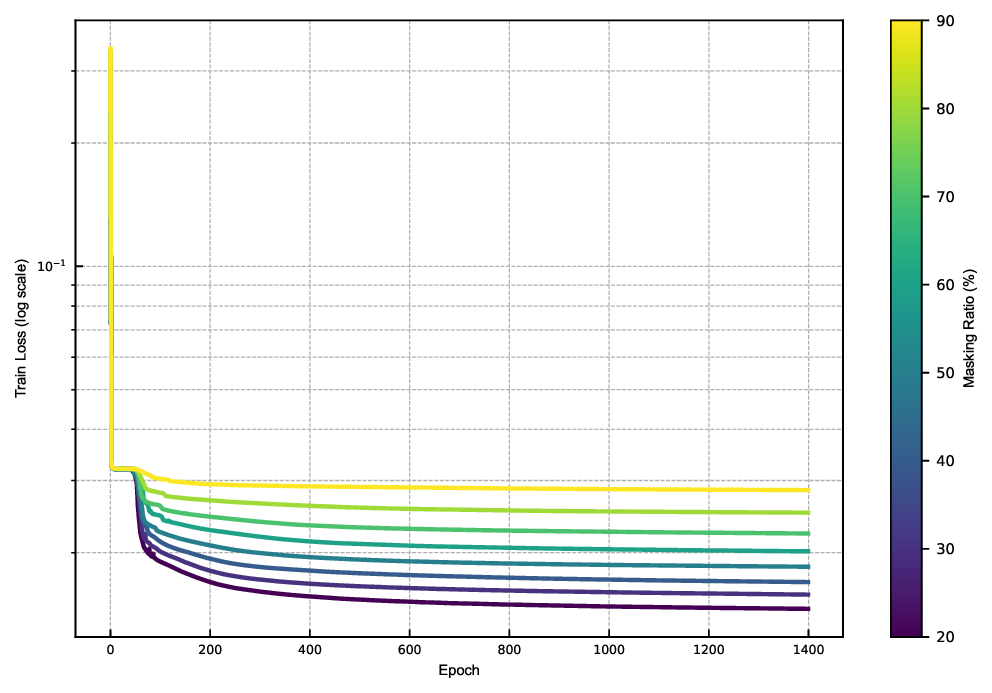

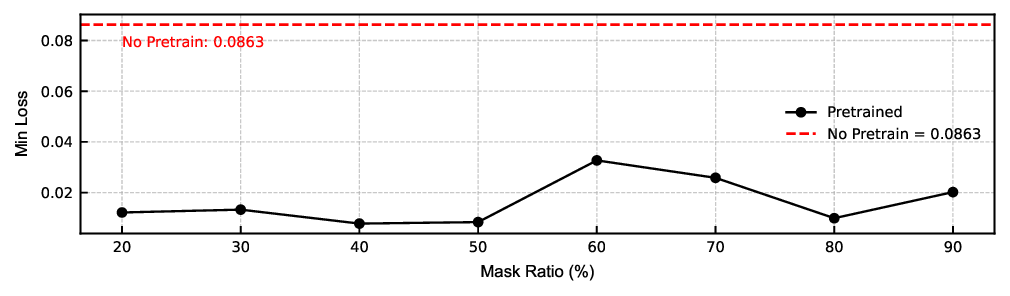

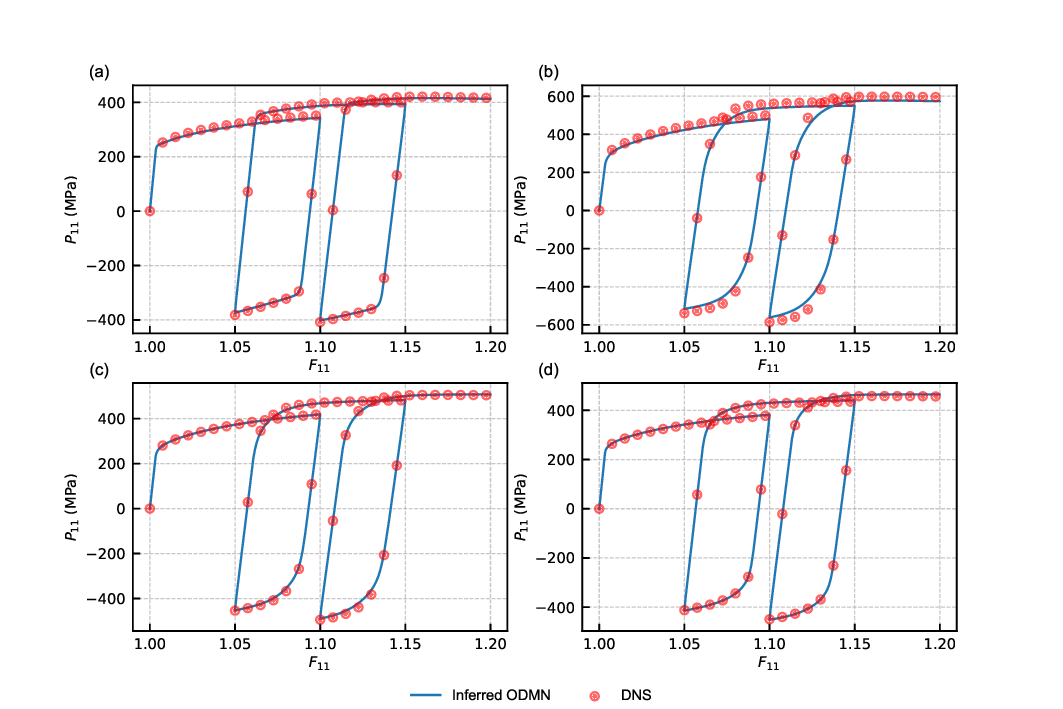

We present a three-dimensional foundation model for polycrystalline materials based on a masked autoencoder trained via large-scale self-supervised learning. The model is pretrained on $100{,}000$ voxelized synthetic face-centered cubic (FCC) microstructures whose crystallographic textures systematically span the texture hull using hierarchical simplex sampling. The transferability of the learned latent representations is evaluated on two downstream tasks: homogenized elastic stiffness prediction and nonlinear stress-strain response prediction. For the nonlinear task, the pretrained encoder is coupled with an orientation-aware interaction-based deep material network (ODMN), where latent features are used to infer microstructure-dependent surrogate parameters. The inferred ODMNs are subsequently combined with crystal plasticity to predict stress-strain responses for previously unseen microstructures. In stiffness prediction, the pretrained model achieves validation $R^2$ values exceeding 0.8, compared to below 0.1 for non-pretrained baselines. In nonlinear response prediction, mean stress errors remain below 4%. These results demonstrate that self-supervised pretraining yields physically meaningful and transferable microstructural representations, providing a scalable framework for microstructure-property inference in polycrystalline materials.

Polycrystalline materials form the backbone of modern engineering applications, with their macroscopic mechanical behavior intrinsically governed by the underlying microstructural architecture, most notably crystallographic texture [1]. Consequently, elucidating microstructure-property relationships has become a central objective in materials design and performance optimization. In recent years, advances in artificial intelligence (AI) have opened new opportunities for establishing data-driven links between microstructural descriptors and effective material responses, offering a promising route toward accelerated computational materials modeling.

A wide range of machine learning architectures has been explored for polycrystalline systems. Convolutional neural networks (CNNs), such as U-Net, have been employed to predict full-field stress distributions in viscoplastic polycrystals [2]. Graph neural networks (GNNs) have demonstrated strong capability in capturing grain-level topological interactions, enabling accurate prediction of effective magnetostriction in heterogeneous polycrystals [3]. Variational autoencoders (VAEs) have been used to learn compact latent representations of electron backscatter diffraction (EBSD) patterns, yielding physically meaningful embeddings that improve EBSD indexing efficiency [4]. More recently, masked autoencoders (MAEs) have been applied to synthetic two-dimensional polycrystalline datasets for classification tasks; although pretraining was restricted to randomly textured microstructures, these studies nevertheless highlight the potential of self-supervised learning for texture-aware representation learning [5]. Collectively, these efforts underscore the importance of latent representation learning in microstructure-aware materials modeling.

Building upon this progress, the concept of a foundation model extends representation learning by leveraging large-scale pretraining on abundant data to extract transferable latent representations across diverse downstream tasks. In natural language processing, large language models such as BERT and GPT exemplify this paradigm by learning highly generalizable embeddings from massive text corpora [6,7]. Analogously, foundation models tailored to materials science have begun to emerge. For example, a masked autoencoder pretrained on synthetic two-dimensional composite microstructures has demonstrated strong versatility across downstream tasks [8].

To efficiently predict nonlinear mechanical responses of polycrystalline materials, physically informed surrogate models have been developed as alternatives to direct full-field simulations. Among these approaches, the orientation-aware interaction-based deep material network (ODMN) has been proposed as an efficient and physically interpretable surrogate modeling framework [9]. The ODMN extends the deep material network (DMN) architecture [10,11,12] and explicitly incorporates crystallographic texture effects through a hierarchical reduced-order representation. A distinctive feature of the ODMN is that its parameters can be trained using linear elastic homogenized responses, while remaining capable of extrapolating to nonlinear mechanical behavior during online prediction [10,13,11,14]. However, ODMN parameters are tied to individual microstructural realizations, limiting transferability across different textures. To address this issue, several studies have proposed interpolation- or learning-based strategies to generalize DMN parameters across microstructures [15,16,17,8]. Nevertheless, extension of such approaches to three-dimensional polycrystalline microstructures, particularly for mapping learned representations to ODMN parameters, remains largely unexplored.

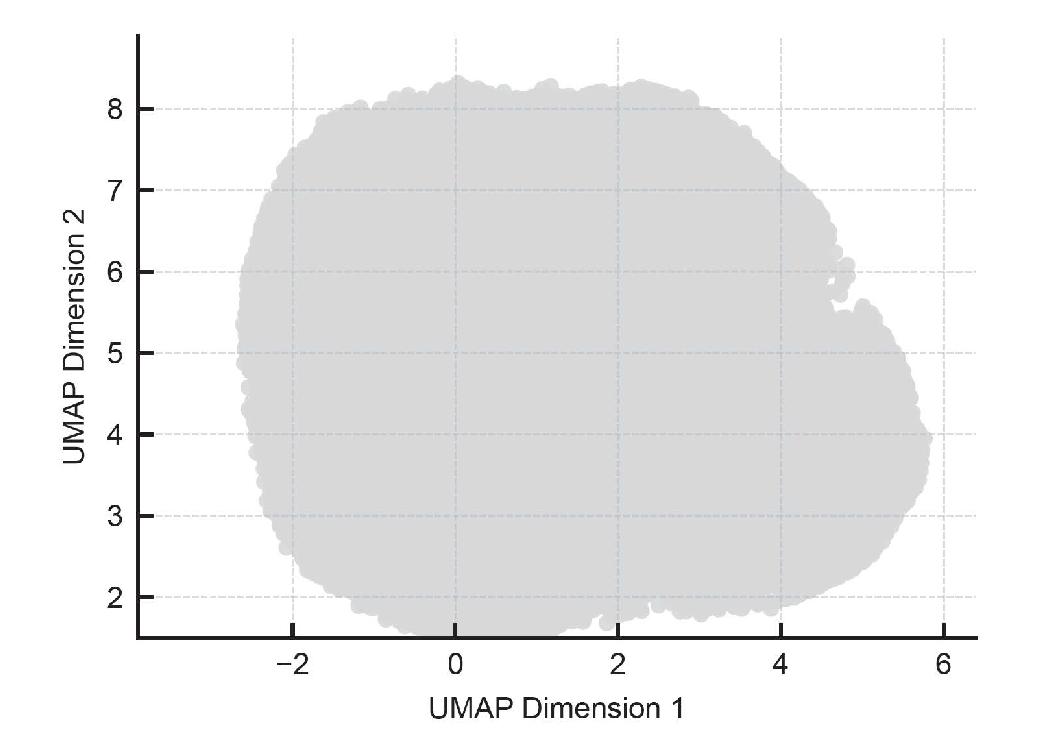

Motivated by these developments, this study investigates foundation models as a unifying framework for crystallographic texture informatics in polycrystalline materials. We introduce a foundation model as a universal microstructure encoder, whose learned latent representations are systematically mapped to ODMN parameters, enabling transferable nonlinear response prediction for previously unseen microstructures. The model is pretrained on a large-scale synthetic dataset spanning the complete crystallographic texture space of face-centered cubic (FCC) crystals, generated via hierarchical simplex sampling (HSS) [18,19]. Voxel-based polycrystalline microstructures constructed from these textures are used for large-scale self-supervised pretraining.
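As a rough illustration of the masked-autoencoder pretraining on voxelized microstructures mentioned above, the following sketch shows how a voxel grid might be split into cubic patches and randomly masked before encoding. It is not the authors' implementation: the grid size ($16^3$), the four-channel per-voxel orientation encoding (a unit quaternion), the patch size, the 75% mask ratio, and all function names are assumptions chosen for illustration only.

```python
import numpy as np

def patchify_3d(volume, patch):
    """Split a (D, H, W, C) voxel grid into non-overlapping cubic patches.

    Returns an array of shape (num_patches, patch**3 * C), one row per patch.
    """
    d, h, w, c = volume.shape
    assert d % patch == 0 and h % patch == 0 and w % patch == 0
    nd, nh, nw = d // patch, h // patch, w // patch
    x = volume.reshape(nd, patch, nh, patch, nw, patch, c)
    x = x.transpose(0, 2, 4, 1, 3, 5, 6)  # group the three patch-grid axes first
    return x.reshape(nd * nh * nw, patch ** 3 * c)

def random_mask(num_patches, mask_ratio, rng):
    """Return sorted (visible_idx, masked_idx) for a random patch mask."""
    num_masked = int(round(mask_ratio * num_patches))
    perm = rng.permutation(num_patches)
    return np.sort(perm[num_masked:]), np.sort(perm[:num_masked])

def masked_mse(pred, target, masked_idx):
    """Reconstruction loss evaluated on the masked patches only."""
    return float(np.mean((pred[masked_idx] - target[masked_idx]) ** 2))

rng = np.random.default_rng(0)

# Hypothetical input: 16^3 voxels, each carrying a unit quaternion (assumed encoding).
volume = rng.normal(size=(16, 16, 16, 4))
volume /= np.linalg.norm(volume, axis=-1, keepdims=True)

tokens = patchify_3d(volume, patch=4)            # shape (64, 256)
visible_idx, masked_idx = random_mask(len(tokens), 0.75, rng)
encoder_input = tokens[visible_idx]              # only visible patches reach the encoder
```

In a full masked autoencoder, an encoder would process `encoder_input`, a decoder would reconstruct all patches, and `masked_mse` would compare the reconstruction with `tokens` on the masked indices; those network components are omitted here.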

Building upon this dataset, we develop a three-dimensional voxel-based foundation model based on a masked autoencoder architecture, which learns texture-aware latent representations in a self-supervised manner. The pretrained model is subsequently fine-tuned for two representative downstream tasks: (1) prediction of homogenized stiffness and (2) inference of material surrogate model parameters via linear projection of the learned latent representations. In the second task, the inferred surrog
