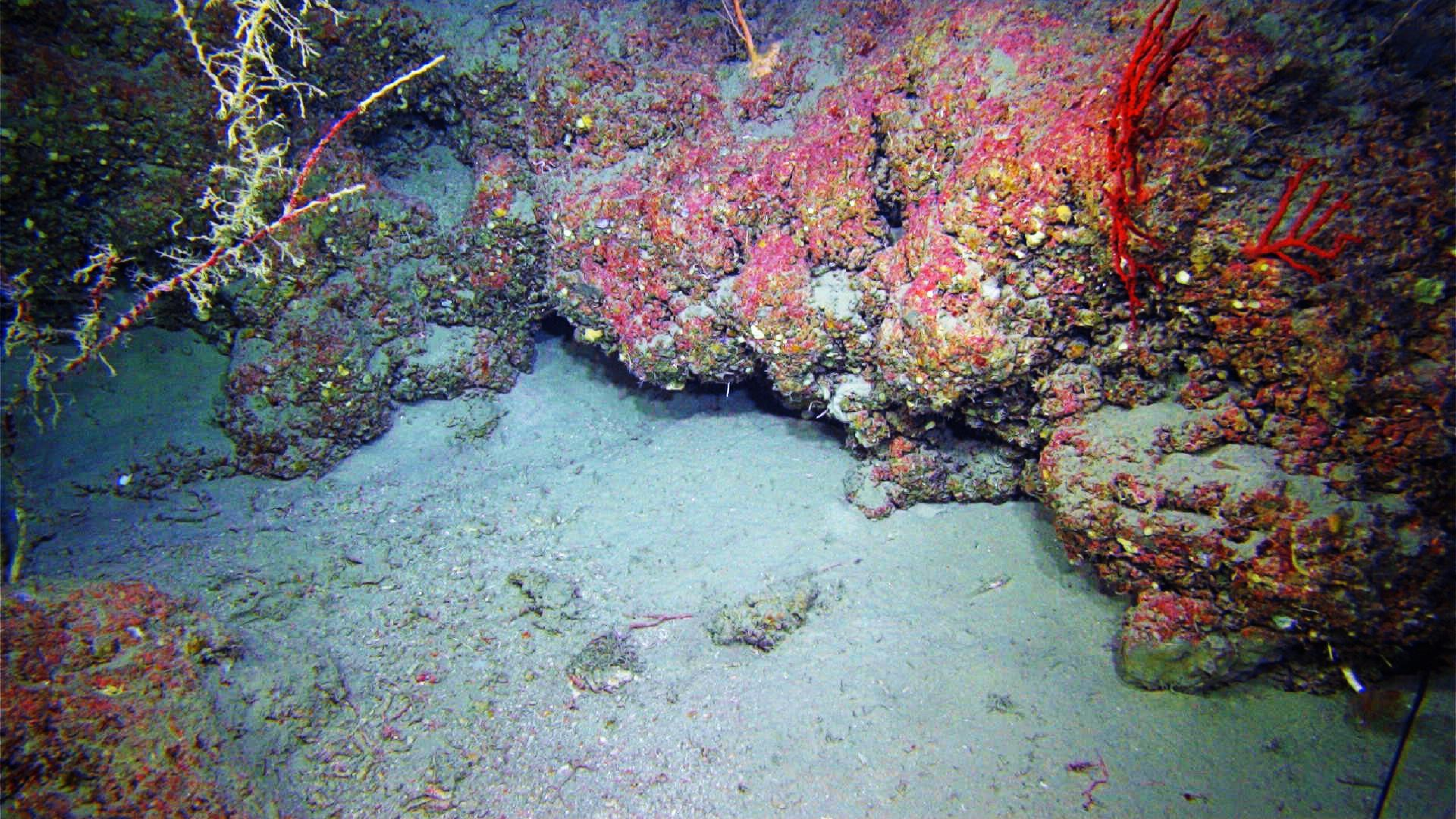

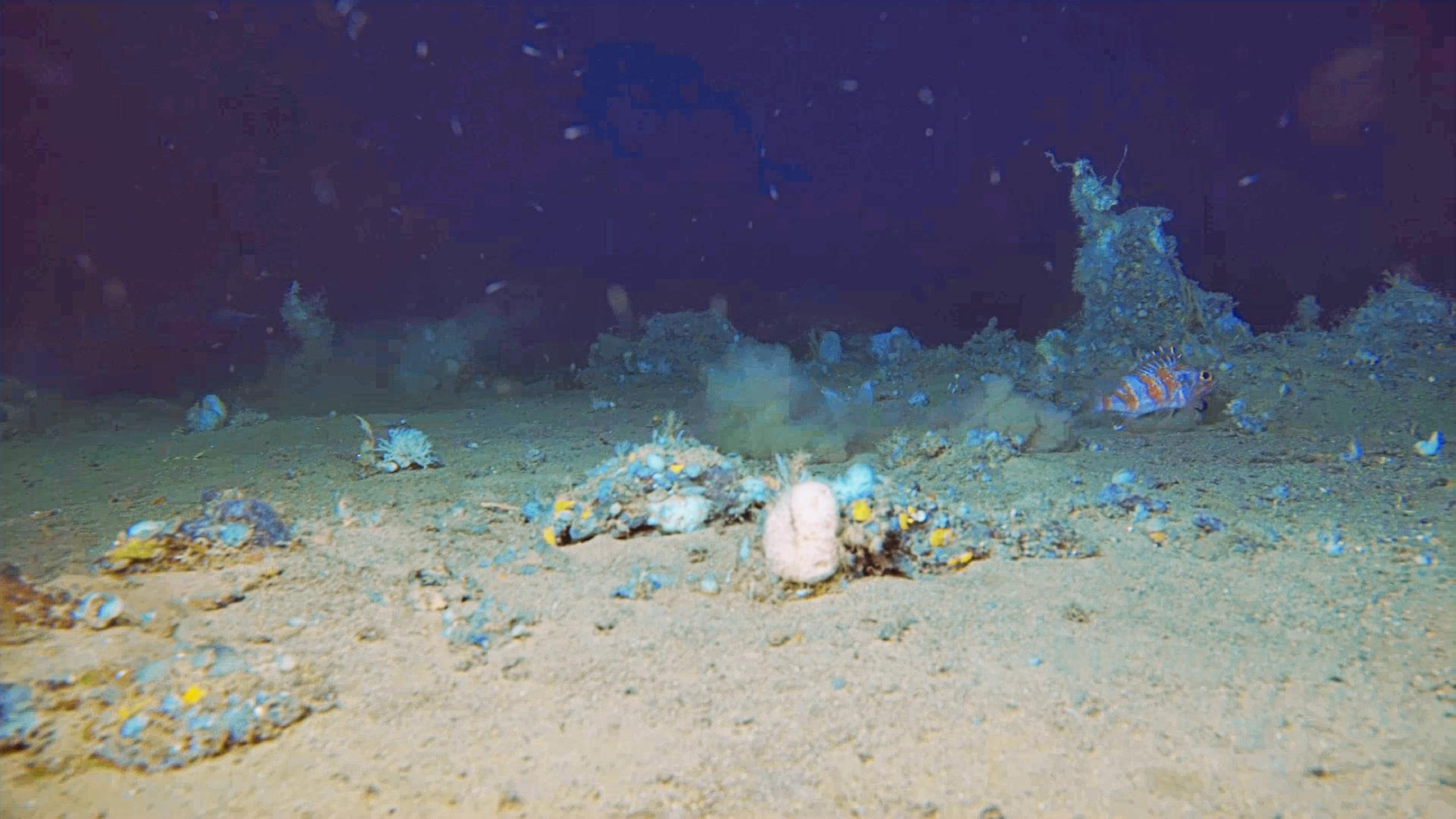

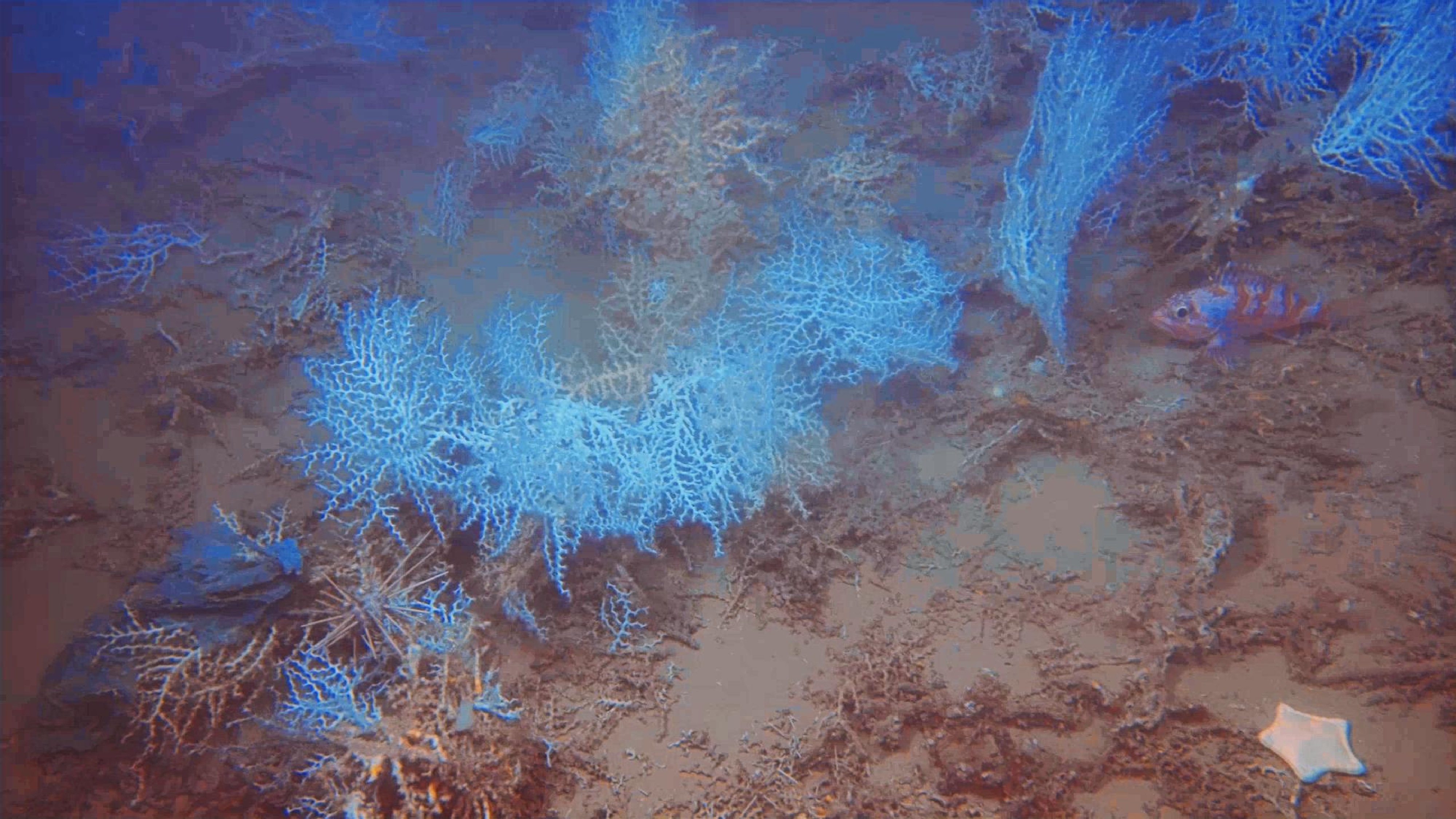

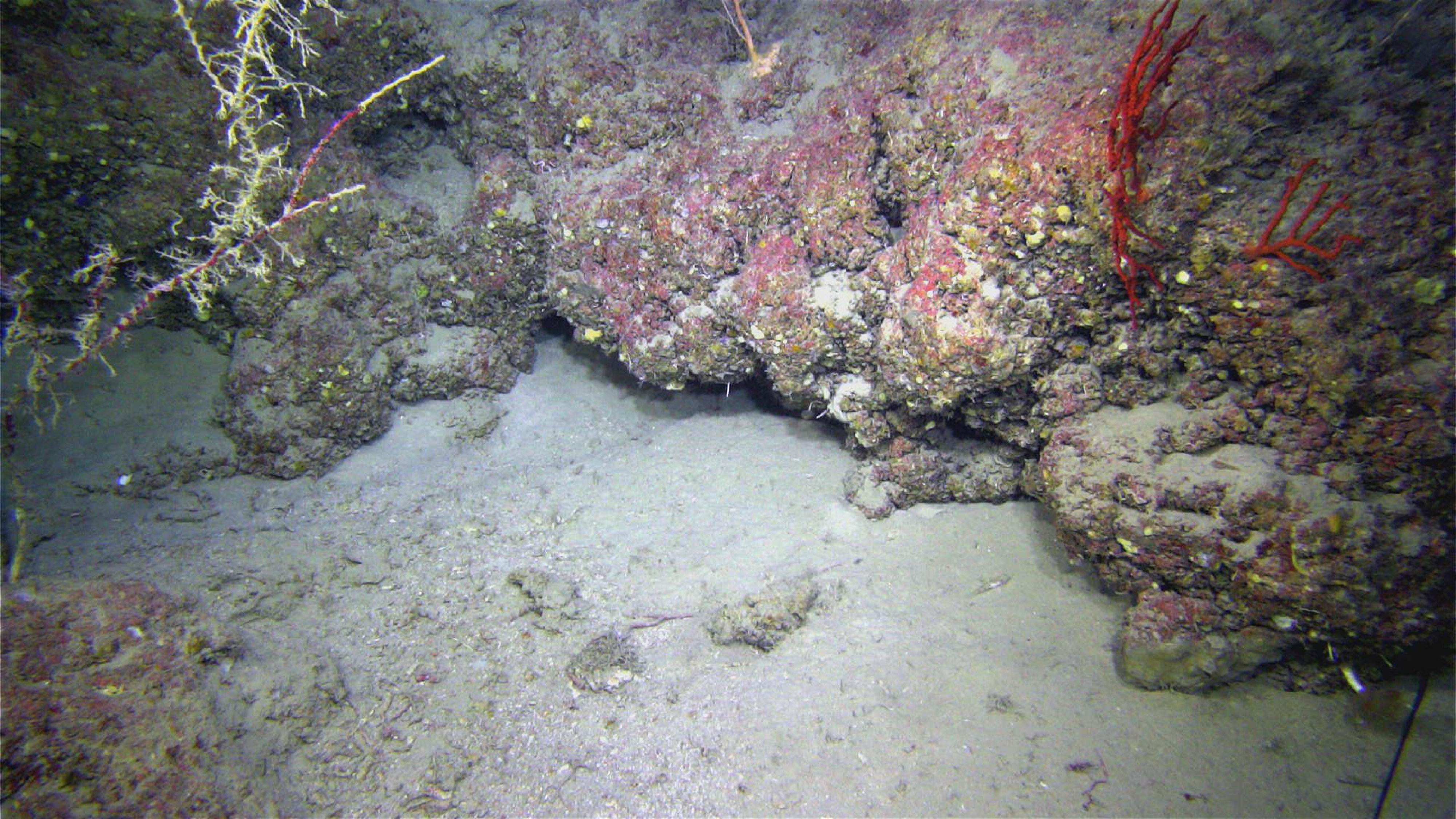

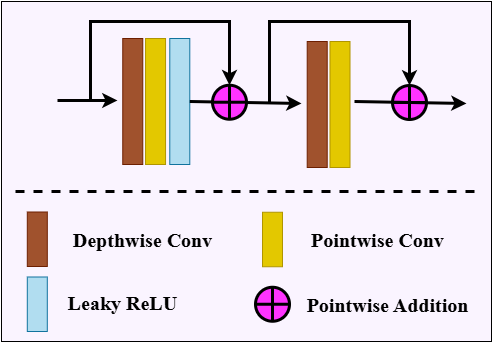

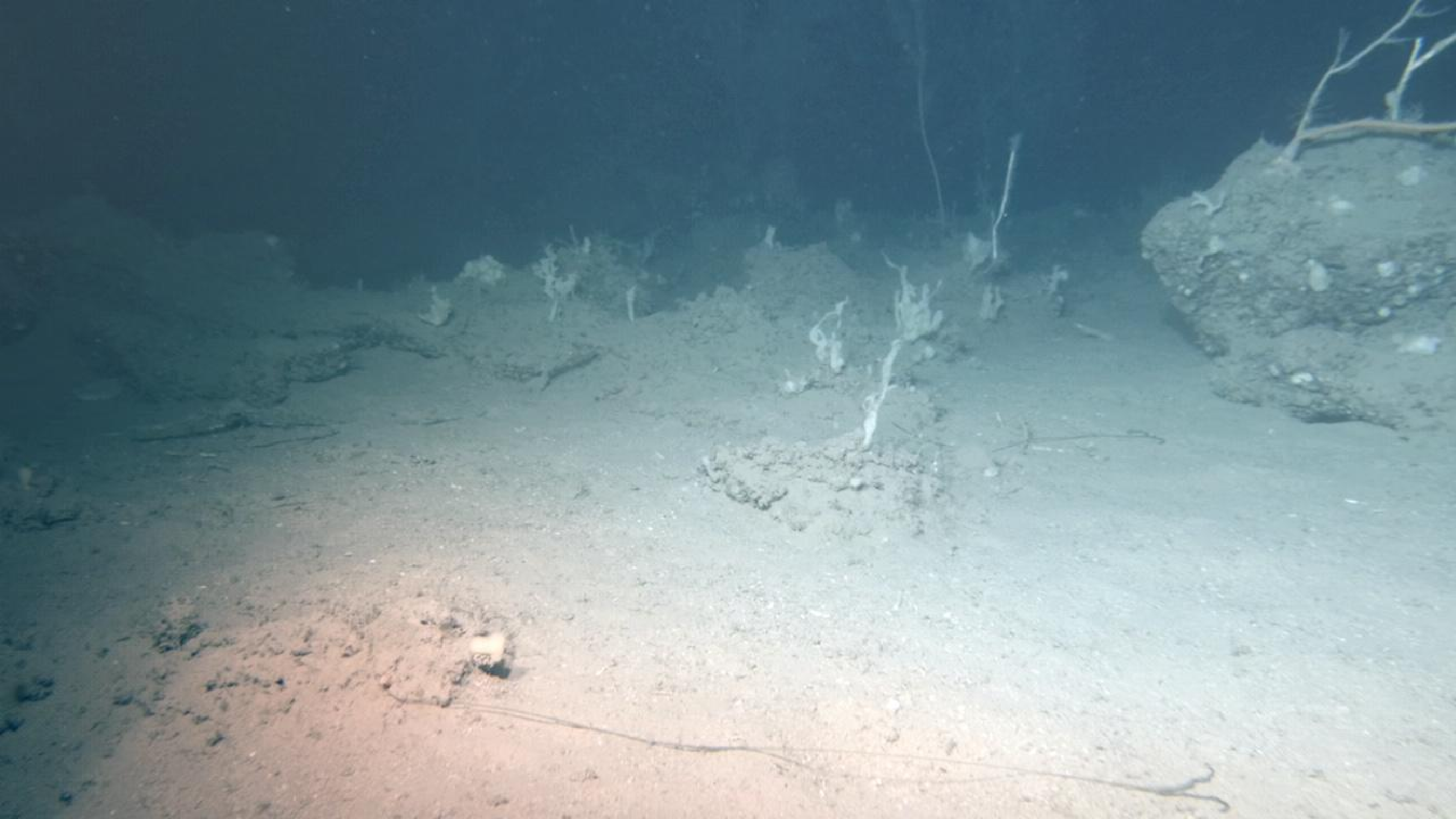

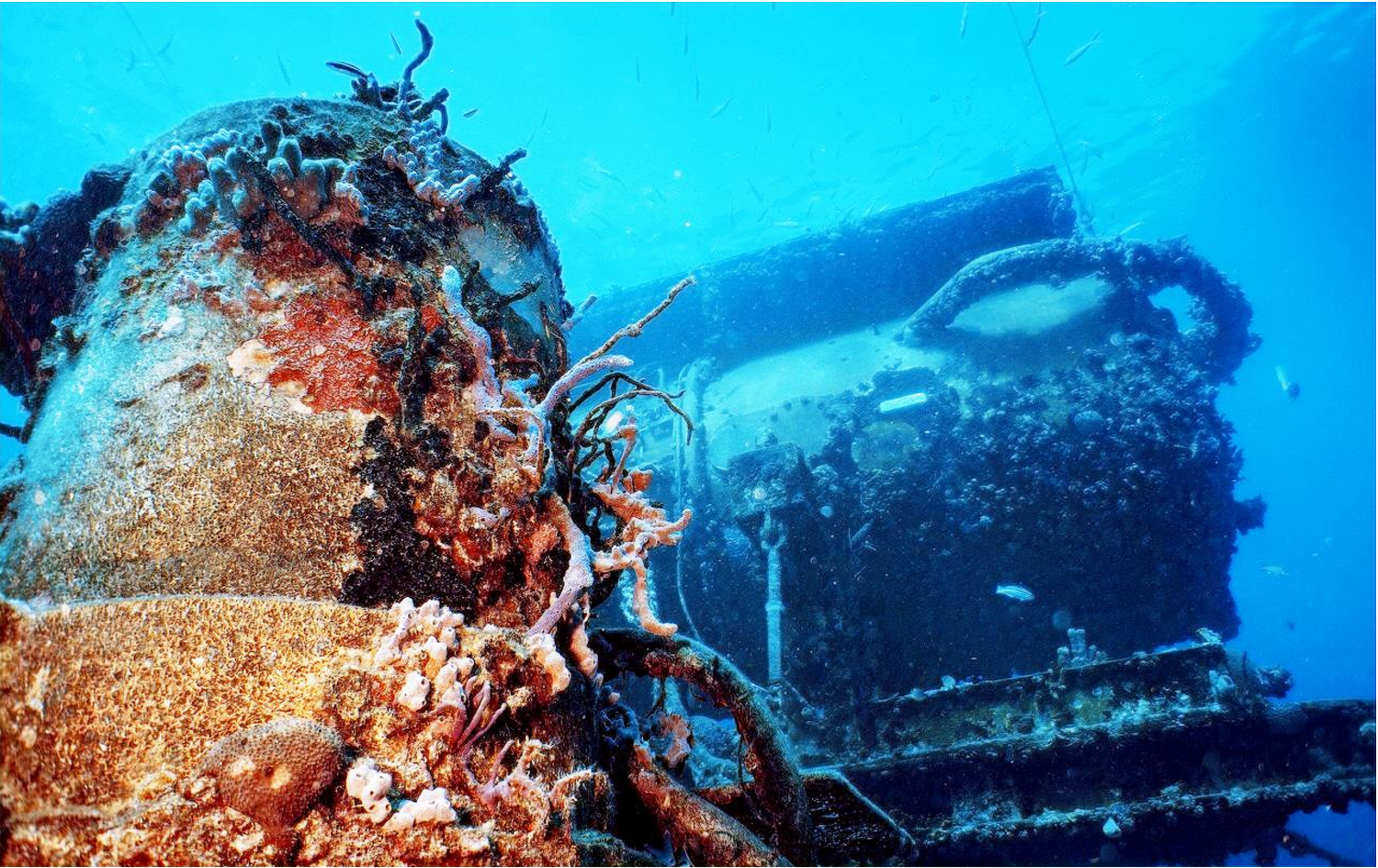

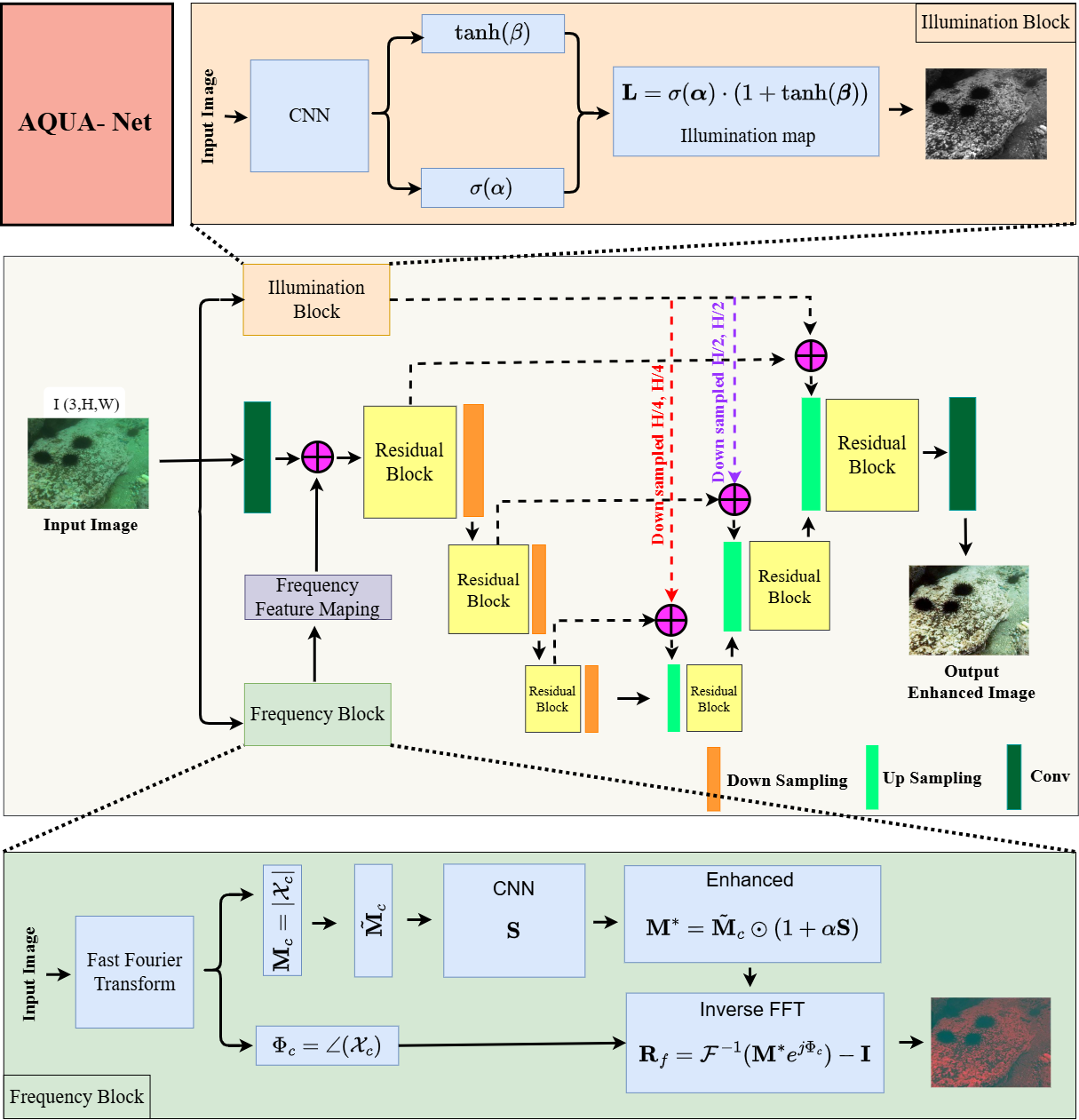

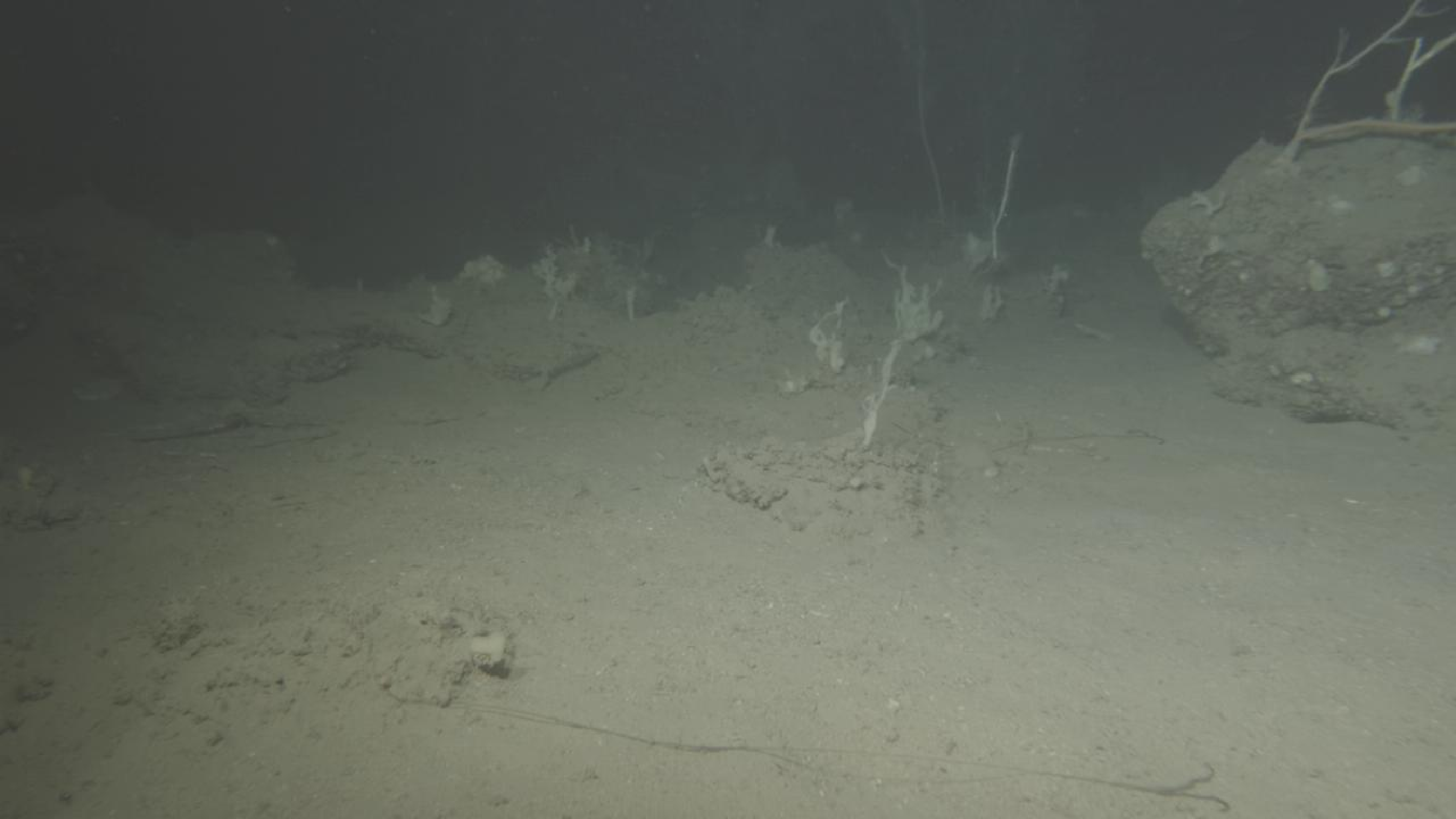

Underwater images often suffer from severe color distortion, low contrast, and a hazy appearance caused by wavelength-dependent light absorption and scattering. At the same time, existing deep learning models have high computational complexity, which limits their practical deployment in real-time underwater applications. To address these challenges, this paper presents a novel underwater image enhancement model, the Adaptive Frequency Fusion and Illumination Aware Network (AQUA-Net). It integrates a residual encoder-decoder with two auxiliary branches that operate in the frequency and illumination domains. The frequency fusion encoder enriches spatial representations with frequency cues from the Fourier domain, preserving fine textures and structural details. Inspired by Retinex theory, the illumination-aware decoder performs adaptive exposure correction through a learned illumination map that separates reflectance from lighting effects. This joint spatial, frequency, and illumination design enables the model to restore color balance, visual contrast, and perceptual realism under diverse underwater conditions. Additionally, we present a high-resolution, real-world underwater dataset derived from video recorded in the Mediterranean Sea. It captures challenging deep-sea conditions with realistic visual degradations and supports robust evaluation and development of deep learning models. Extensive experiments on multiple benchmark datasets show that AQUA-Net performs on par with state-of-the-art (SOTA) methods in both qualitative and quantitative evaluations while using fewer parameters. Ablation studies further confirm that the frequency and illumination branches make complementary contributions that improve visibility and color representation. Overall, the proposed model shows strong generalization capability and robustness, and it provides an effective solution for real-world underwater imaging applications.
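The Retinex idea behind the illumination-aware decoder can be made concrete with a small sketch. An image is modeled as I = R * L, with reflectance R and illumination L. The illumination map is brightened and then recombined with the reflectance. This is not AQUA-Net's decoder, which learns its illumination map. Here the map is hand-crafted under stated assumptions: it takes the per-pixel maximum over RGB and smooths it with a box blur. The names `estimate_illumination` and `retinex_enhance` and the `gamma`, `radius`, and `eps` values are all hypothetical.

```python
from typing import List, Tuple

Pixel = Tuple[float, float, float]  # RGB values in [0, 1]
Image = List[List[Pixel]]
Map = List[List[float]]


def estimate_illumination(img: Image, radius: int = 1) -> Map:
    """Hypothetical hand-crafted estimate: per-pixel max over RGB, then a box blur."""
    h, w = len(img), len(img[0])
    max_map = [[max(px) for px in row] for row in img]
    illum = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # average the max-channel values inside a (2*radius+1)^2 window, clipped at borders
            window = [max_map[j][i]
                      for j in range(max(0, y - radius), min(h, y + radius + 1))
                      for i in range(max(0, x - radius), min(w, x + radius + 1))]
            illum[y][x] = sum(window) / len(window)
    return illum


def retinex_enhance(img: Image, gamma: float = 0.6, eps: float = 1e-3) -> Tuple[Image, Image]:
    """Decompose I = R * L, brighten L with a gamma curve (gamma < 1), and recombine."""
    illum = estimate_illumination(img)
    reflectance: Image = []
    enhanced: Image = []
    for row, lum_row in zip(img, illum):
        r_row: List[Pixel] = []
        e_row: List[Pixel] = []
        for px, lum in zip(row, lum_row):
            r = tuple(min(c / (lum + eps), 1.0) for c in px)  # reflectance, clipped to [0, 1]
            lum_adj = lum ** gamma                              # exposure-corrected illumination
            r_row.append(r)
            e_row.append(tuple(min(rc * lum_adj, 1.0) for rc in r))
        reflectance.append(r_row)
        enhanced.append(e_row)
    return reflectance, enhanced


if __name__ == "__main__":
    dark = [[(0.05, 0.20, 0.25)] * 4 for _ in range(4)]  # dim, blue-green test patch
    _, out = retinex_enhance(dark)
    print(out[0][0])  # blue rises from 0.25 to about 0.43
```

On the uniform test patch, this step brightens every channel by the same factor. It therefore leaves the channel ratios unchanged, and with them the blue-green cast. In this simplified form, exposure correction and color correction are separate problems.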

particles, varying water conditions, and irregular optical properties introduce color shifts, reduce contrast, and worsen visibility. Because these effects depend on the water conditions and the irregular optical properties of the underwater environment, underwater image enhancement (UIE) is a challenging task. Obtaining clean, visually reliable UWIs is crucial for improving image quality and visibility and for enabling accurate observation and analysis. To address these challenges, researchers have developed many UIE models, which fall broadly into physics-based methods and physical model-free methods [3], [4]. Physics-based methods mainly aim to accurately estimate the medium transmission and other imaging parameters, such as the background light, and then reconstruct a clean image by inverting the underwater image formation model [5]. Although these approaches can work well under certain conditions, their performance often becomes unstable and highly sensitive in complex or challenging underwater scenes. The difficulty is that accurate estimation of the medium transmission is essential but hard to achieve. UWIs vary widely and are classified into ten classes according to the Jerlov water types [2], [6], each with different optical properties. As a result, accurately estimating underwater imaging parameters is difficult for traditional methods, both physics-based and physical model-free.
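The physics-based pipeline described above can be sketched with the simplified per-channel formation model that is widely used in the UIE literature. The observed pixel is I_c = J_c * t_c + B_c * (1 - t_c), with transmission t_c = exp(-beta_c * d). Restoration inverts this as J_c = (I_c - B_c) / max(t_c, t_min) + B_c. Whether [5] uses exactly this form is an assumption. The attenuation coefficients, background light, depth, and pixel values below are hypothetical and were chosen only so that red attenuates fastest.

```python
import math
from typing import Sequence, Tuple

RGB = Tuple[float, float, float]


def transmission(beta: Sequence[float], depth: float) -> RGB:
    """Per-channel transmission t_c = exp(-beta_c * d) (Beer-Lambert attenuation)."""
    return tuple(math.exp(-b * depth) for b in beta)


def degrade(j: RGB, t: RGB, background: RGB) -> RGB:
    """Forward model: I_c = J_c * t_c + B_c * (1 - t_c)."""
    return tuple(jc * tc + bc * (1.0 - tc) for jc, tc, bc in zip(j, t, background))


def restore(i: RGB, t: RGB, background: RGB, t_min: float = 0.1) -> RGB:
    """Inverse model: J_c = (I_c - B_c) / max(t_c, t_min) + B_c, clipped to [0, 1]."""
    return tuple(min(max((ic - bc) / max(tc, t_min) + bc, 0.0), 1.0)
                 for ic, tc, bc in zip(i, t, background))


if __name__ == "__main__":
    beta = (0.6, 0.12, 0.08)          # hypothetical coefficients: red attenuates fastest
    background = (0.05, 0.35, 0.45)   # hypothetical blue-green background light
    scene = (0.8, 0.5, 0.3)           # hypothetical clean pixel J
    t = transmission(beta, depth=2.0)
    observed = degrade(scene, t, background)
    print(restore(observed, t, background))                   # recovers scene with the true t
    print(restore(observed, (0.25, t[1], t[2]), background))  # red is over-corrected
```

The second call shows the sensitivity noted above. The true red transmission is about 0.30. Underestimating it as 0.25 pushes the recovered red value from 0.80 to roughly 0.95, so small transmission errors turn into visible color errors.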

Recently, advanced deep neural networks have demonstrated remarkable performance on UIE, improving both quantitative metrics and perceptual quality [7]-[12]. Despite these gains, several of these approaches [7], [9], [13], [14] are computationally complex and require large numbers of parameters and floating-point operations (FLOPs), which limits their practicality for real-world deployment. In addition, existing architectures rely on generic encoder-decoder structures originally developed for natural-image tasks rather than for underwater environments [14], [15]. These models struggle to account for the spectral distortions, frequency-dependent degradation, and non-uniform illumination patterns specific to underwater scenes. As a result, they often enhance images globally but remain limited in recovering fine textures, suppressing low-frequency haze, and reconstructing spatially consistent color distributions. This mismatch between model design and underwater imaging physics restricts their generalization capability and leads to inconsistent restoration across diverse water types.
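The standard counting formulas for a 2-D convolution make "parameters and FLOPs" concrete. Conventions differ between papers. Some report multiply-accumulates (MACs) as FLOPs, while others count two FLOPs per MAC. The `Conv2d` class below is a hypothetical helper, not a framework API. The layer sizes are illustrative and are not taken from AQUA-Net or the cited models.

```python
from dataclasses import dataclass


@dataclass
class Conv2d:
    """Hypothetical descriptor of a 2-D convolution layer (not a framework class)."""
    in_ch: int
    out_ch: int
    kernel: int
    groups: int = 1
    bias: bool = True

    def params(self) -> int:
        # each output channel sees in_ch / groups input channels through a k x k kernel
        weights = self.out_ch * (self.in_ch // self.groups) * self.kernel ** 2
        return weights + (self.out_ch if self.bias else 0)

    def macs(self, h_out: int, w_out: int) -> int:
        # one multiply-accumulate per weight per output position (bias adds are ignored)
        return self.out_ch * h_out * w_out * (self.in_ch // self.groups) * self.kernel ** 2


def flops(macs: int) -> int:
    """Convention in which one MAC counts as two FLOPs (a multiply and an add)."""
    return 2 * macs


if __name__ == "__main__":
    standard = Conv2d(64, 64, 3)
    separable = [Conv2d(64, 64, 3, groups=64), Conv2d(64, 64, 1)]  # depthwise + pointwise
    print(standard.params(), standard.macs(256, 256))
    print(sum(c.params() for c in separable), sum(c.macs(256, 256) for c in separable))
```

Take a single 64-to-64 channel 3x3 layer on a 256x256 feature map. A standard convolution needs 36,928 parameters and about 2.42 G MACs. A depthwise-separable replacement needs 4,800 parameters and about 0.31 G MACs. This substitution is one common way lightweight models reduce cost. The text here does not state which techniques AQUA-Net uses.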

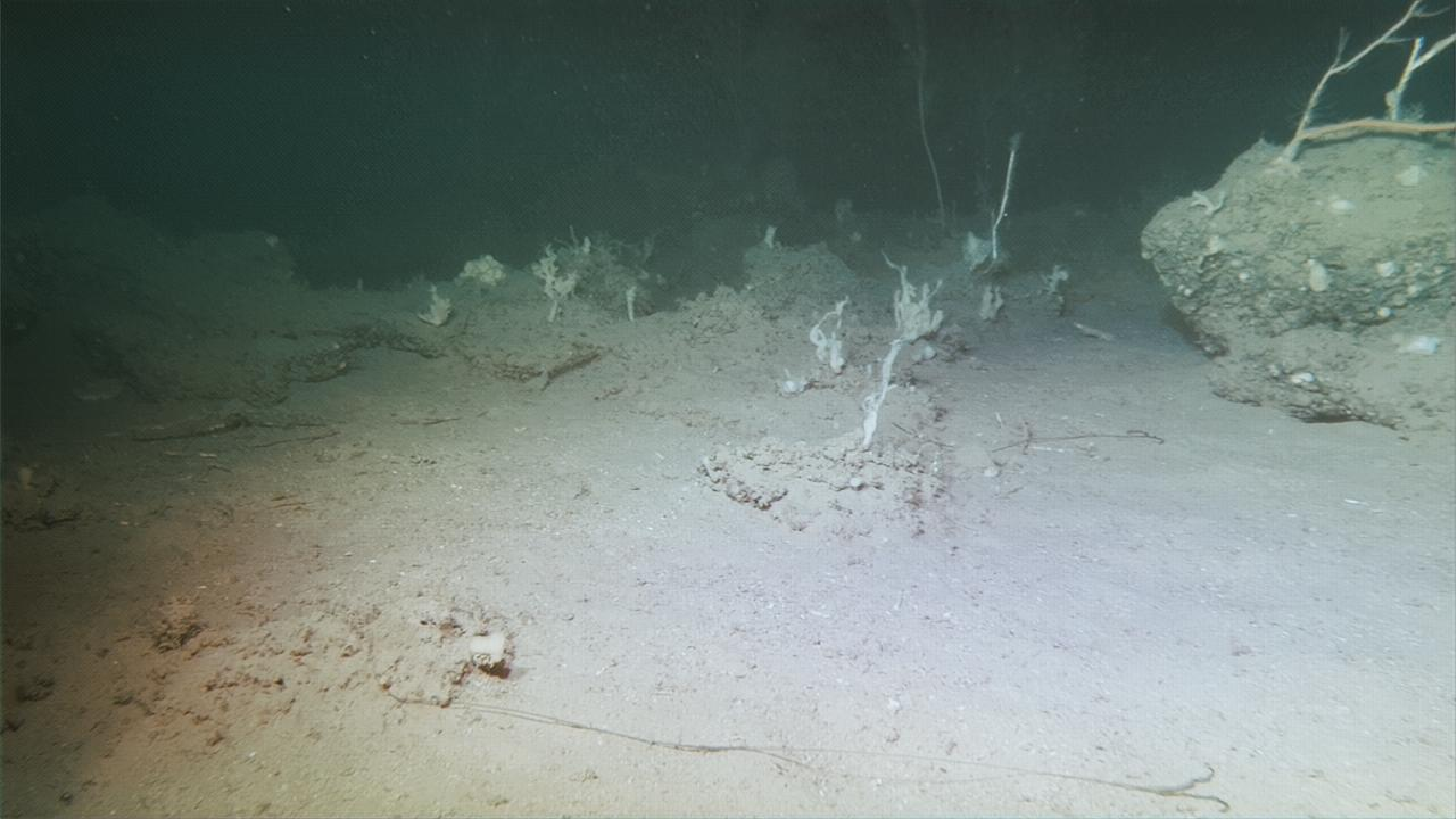

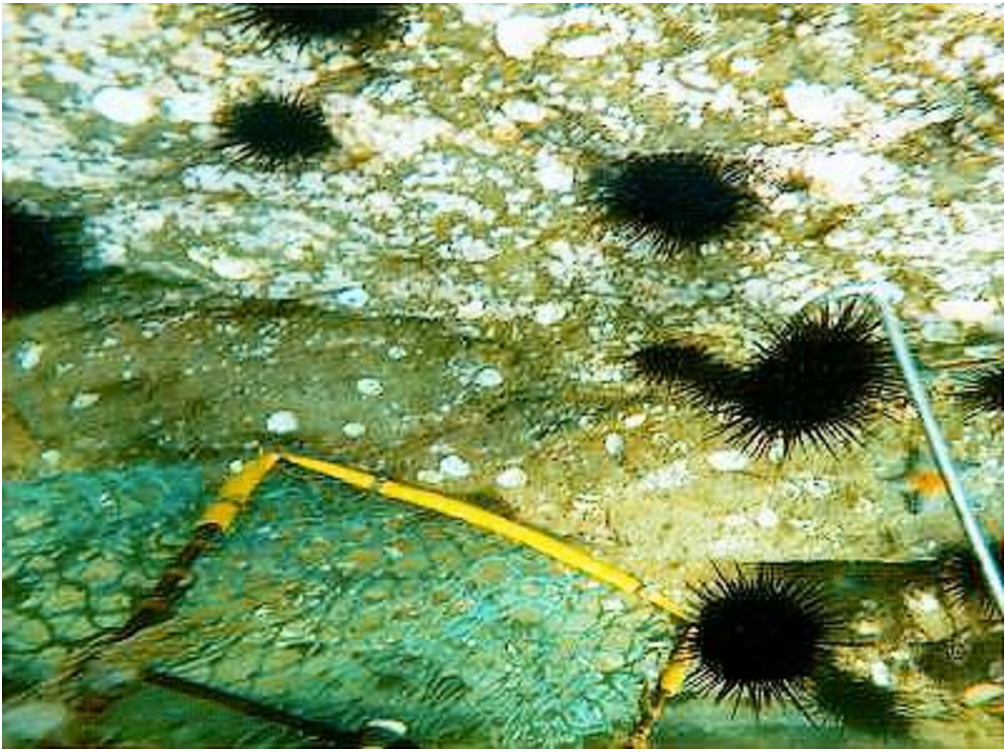

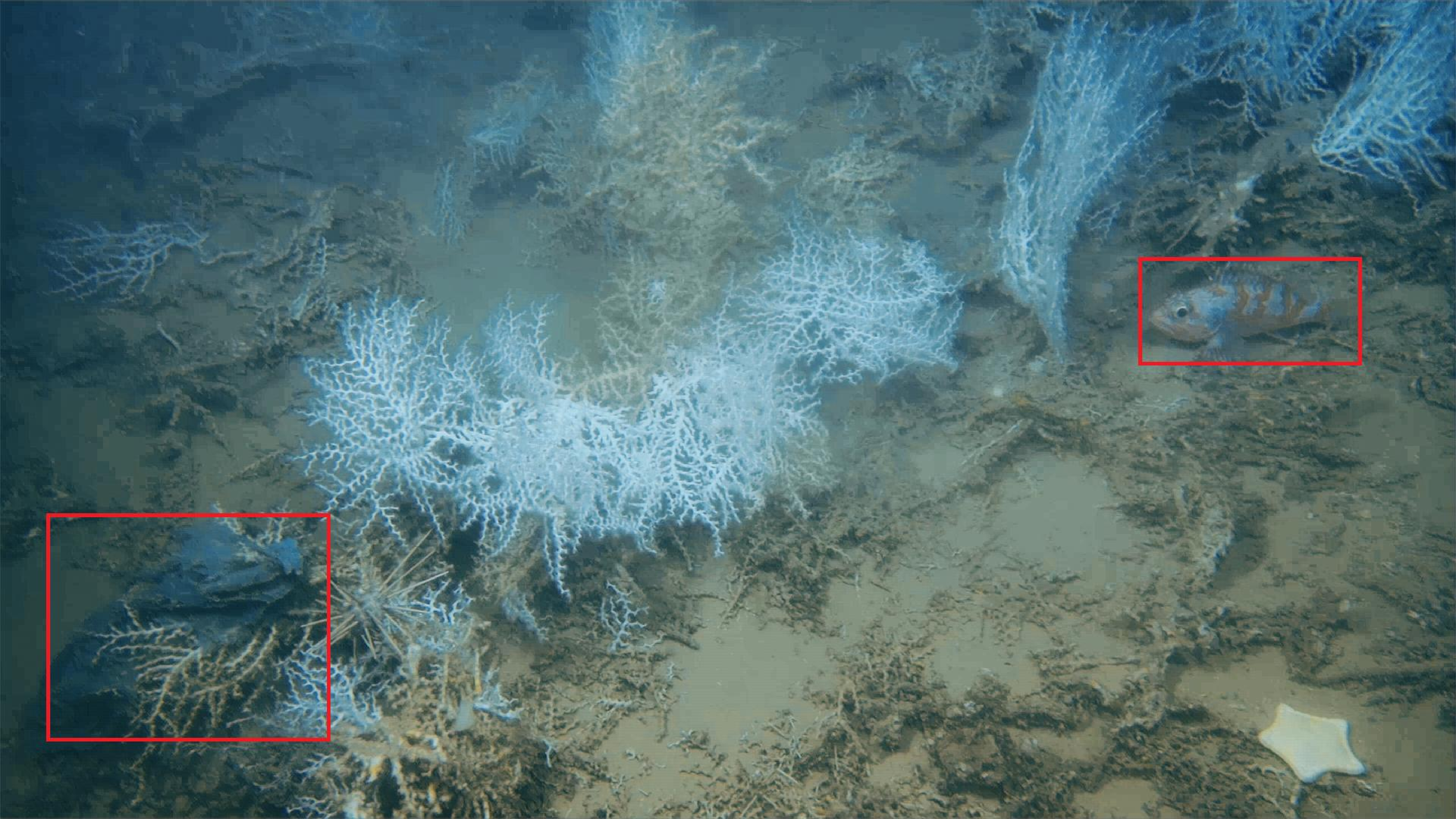

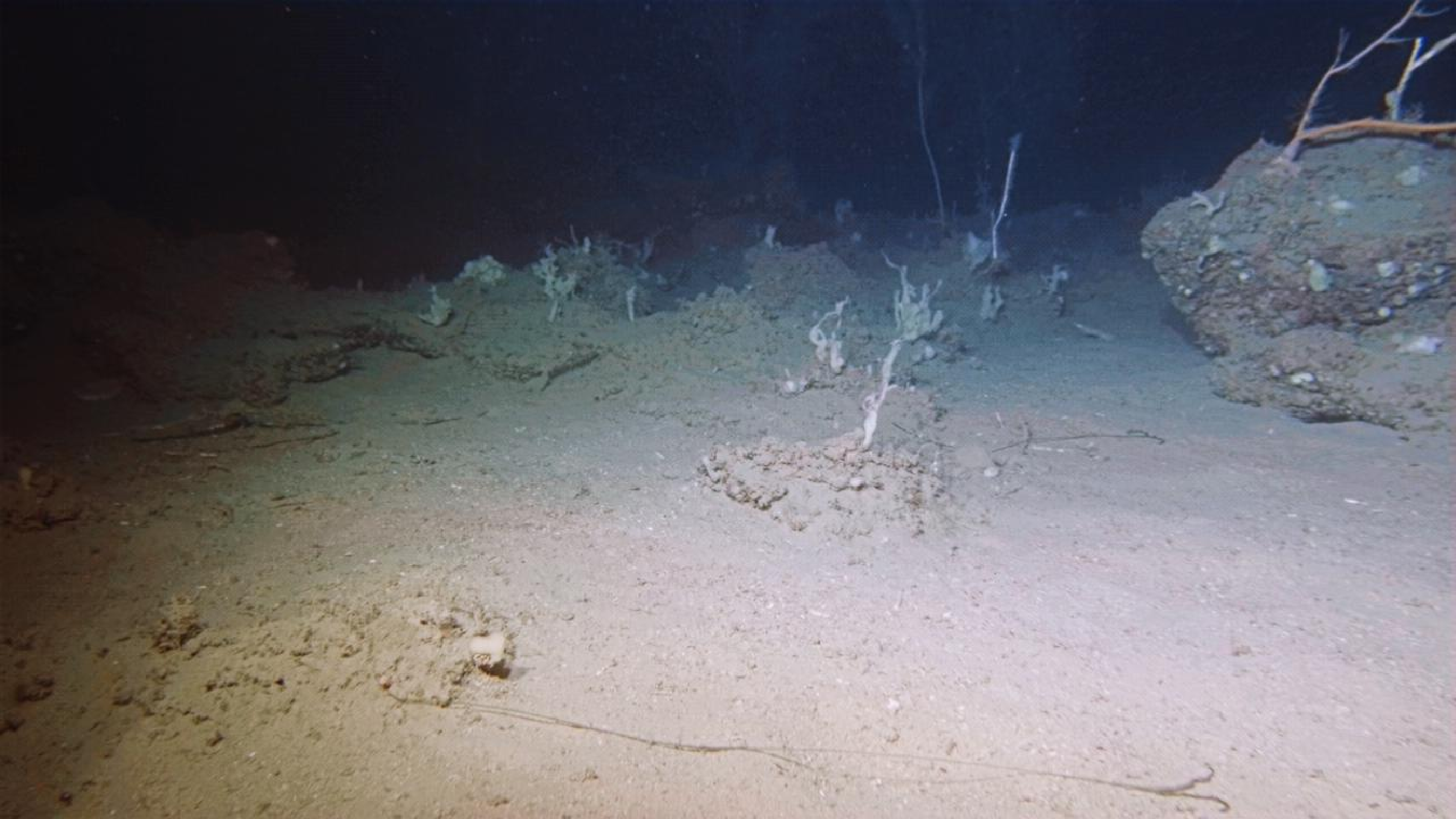

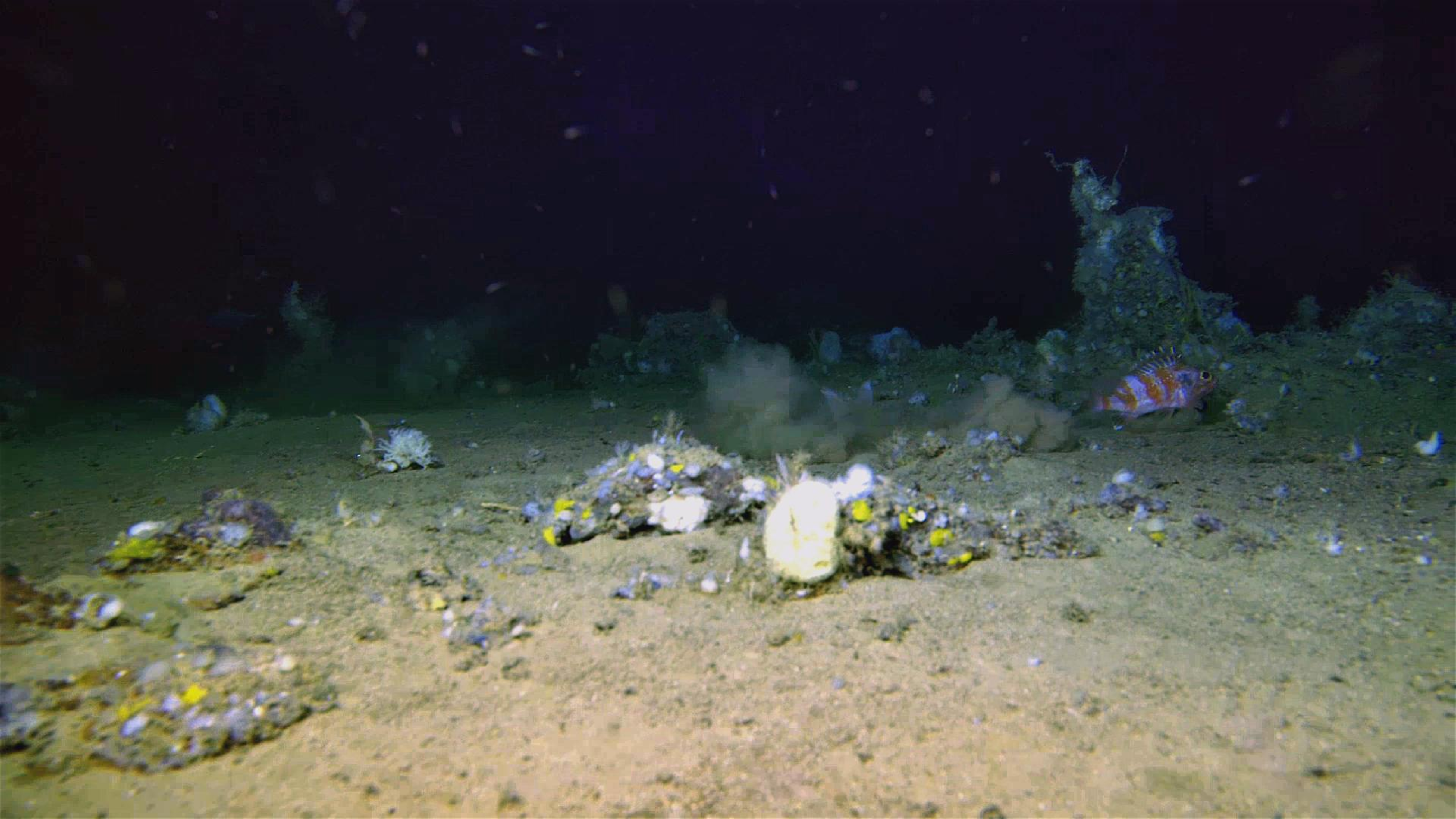

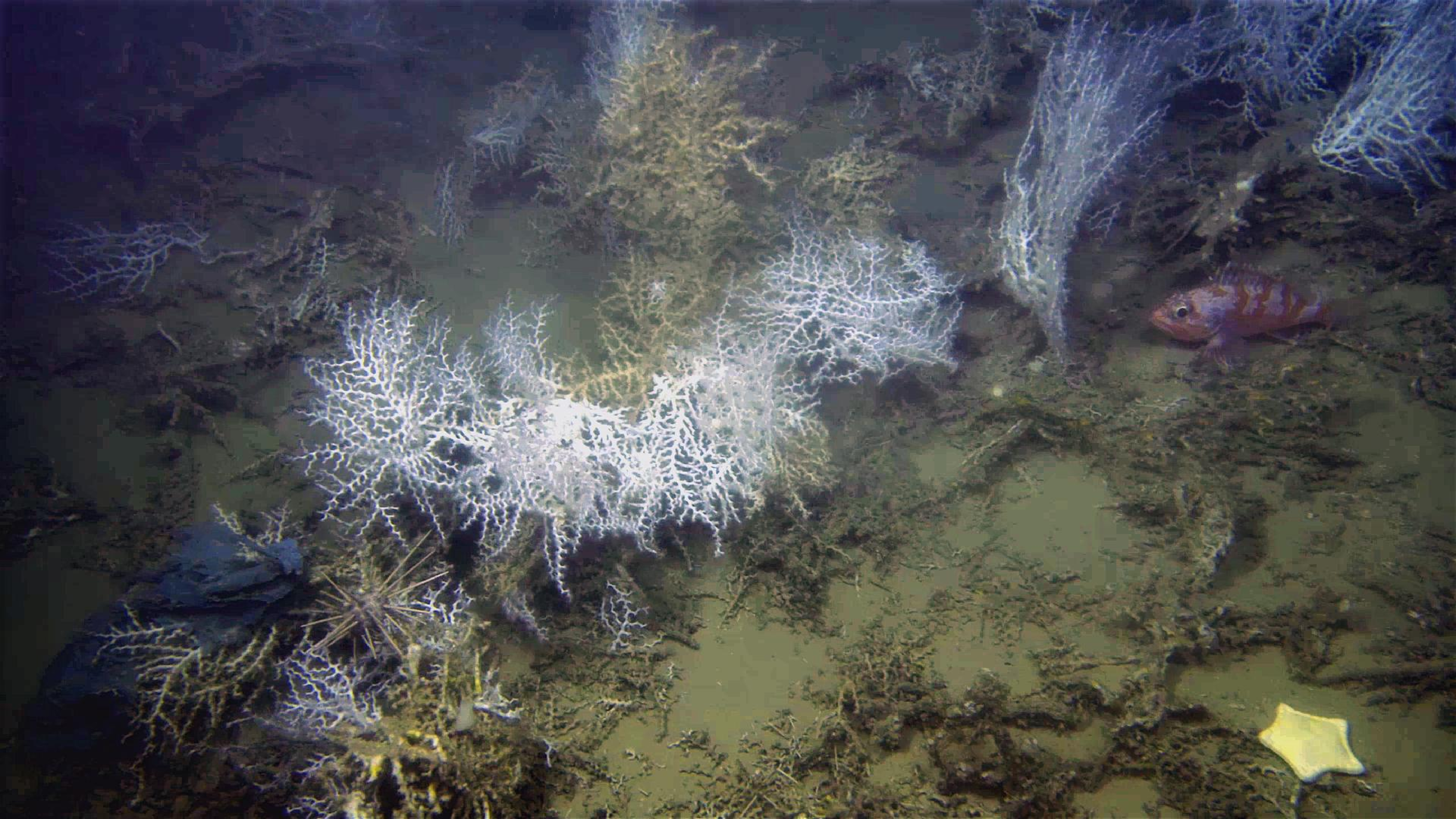

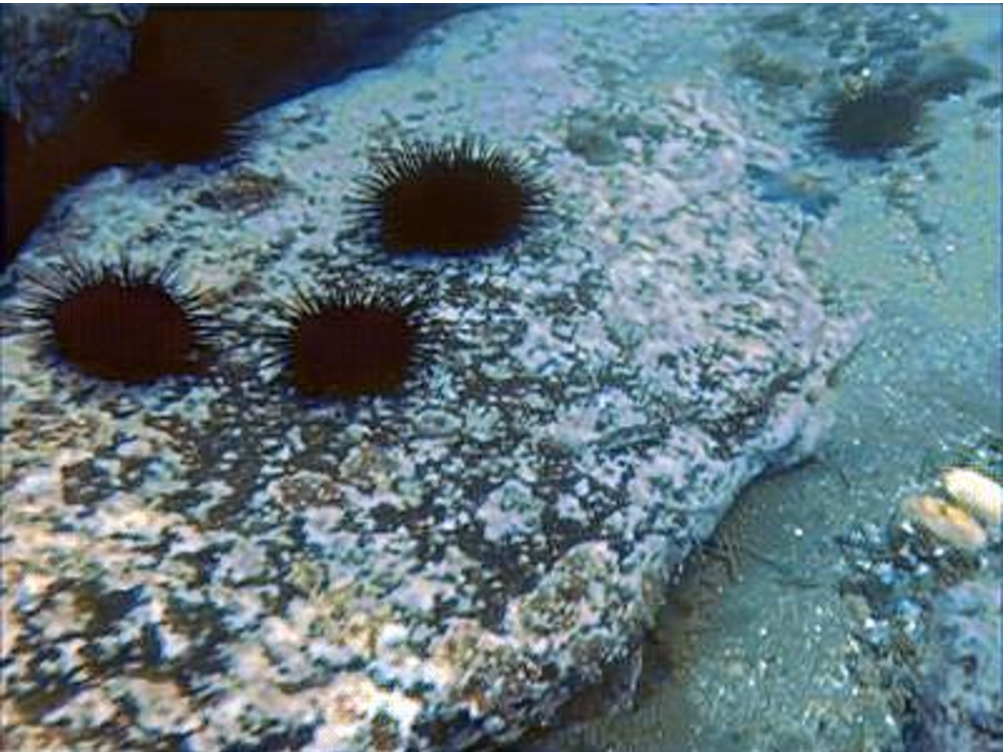

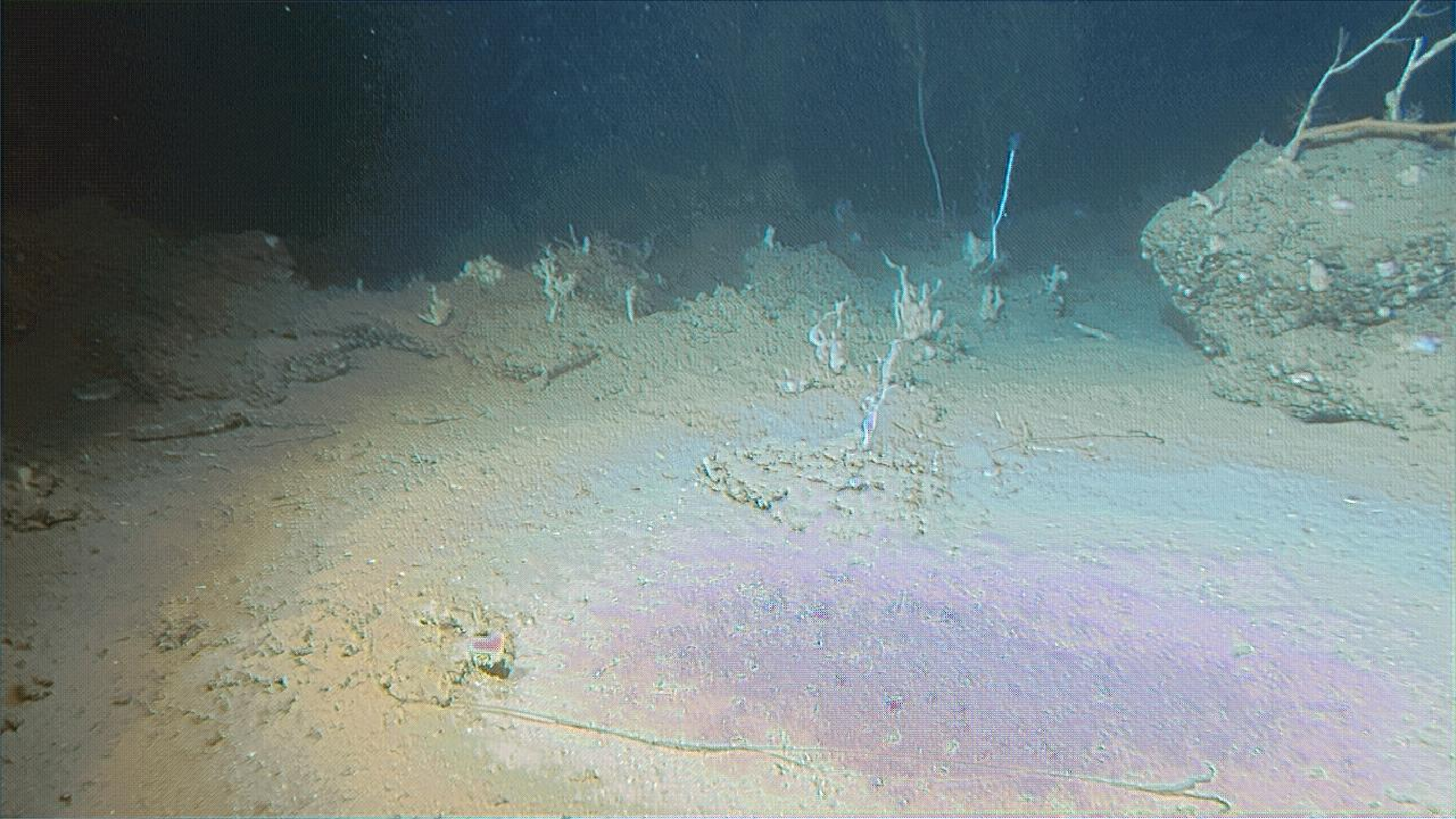

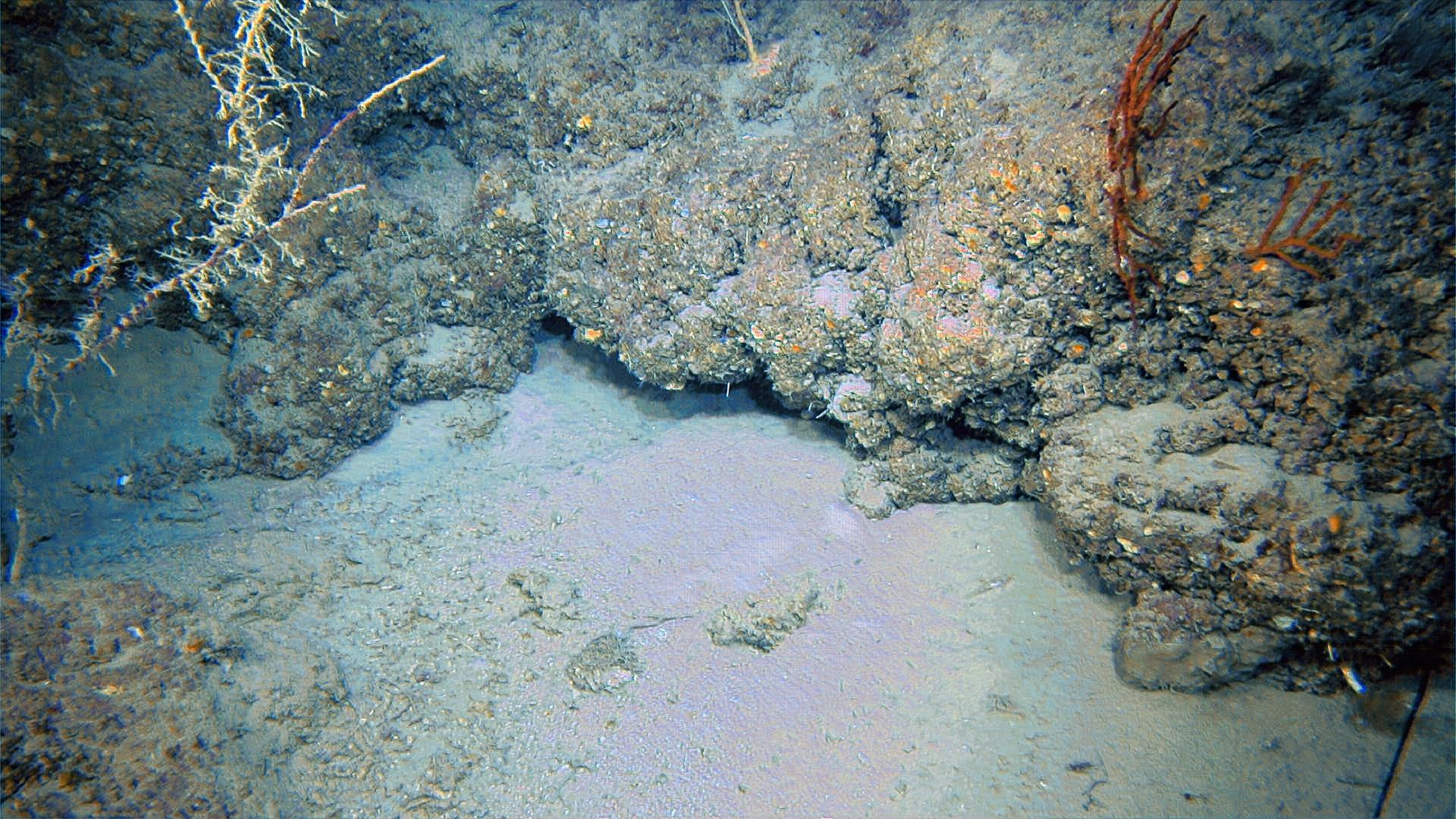

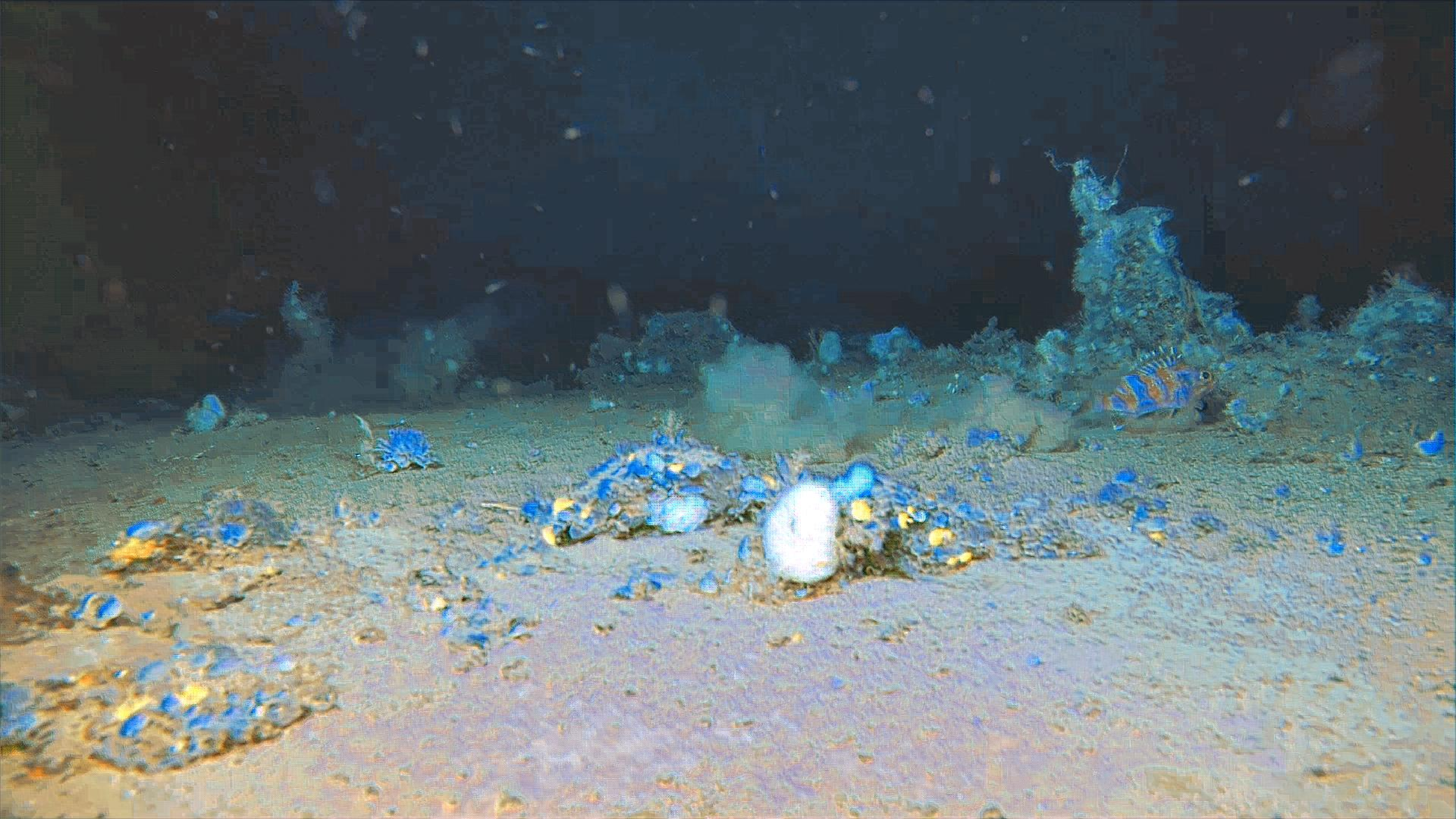

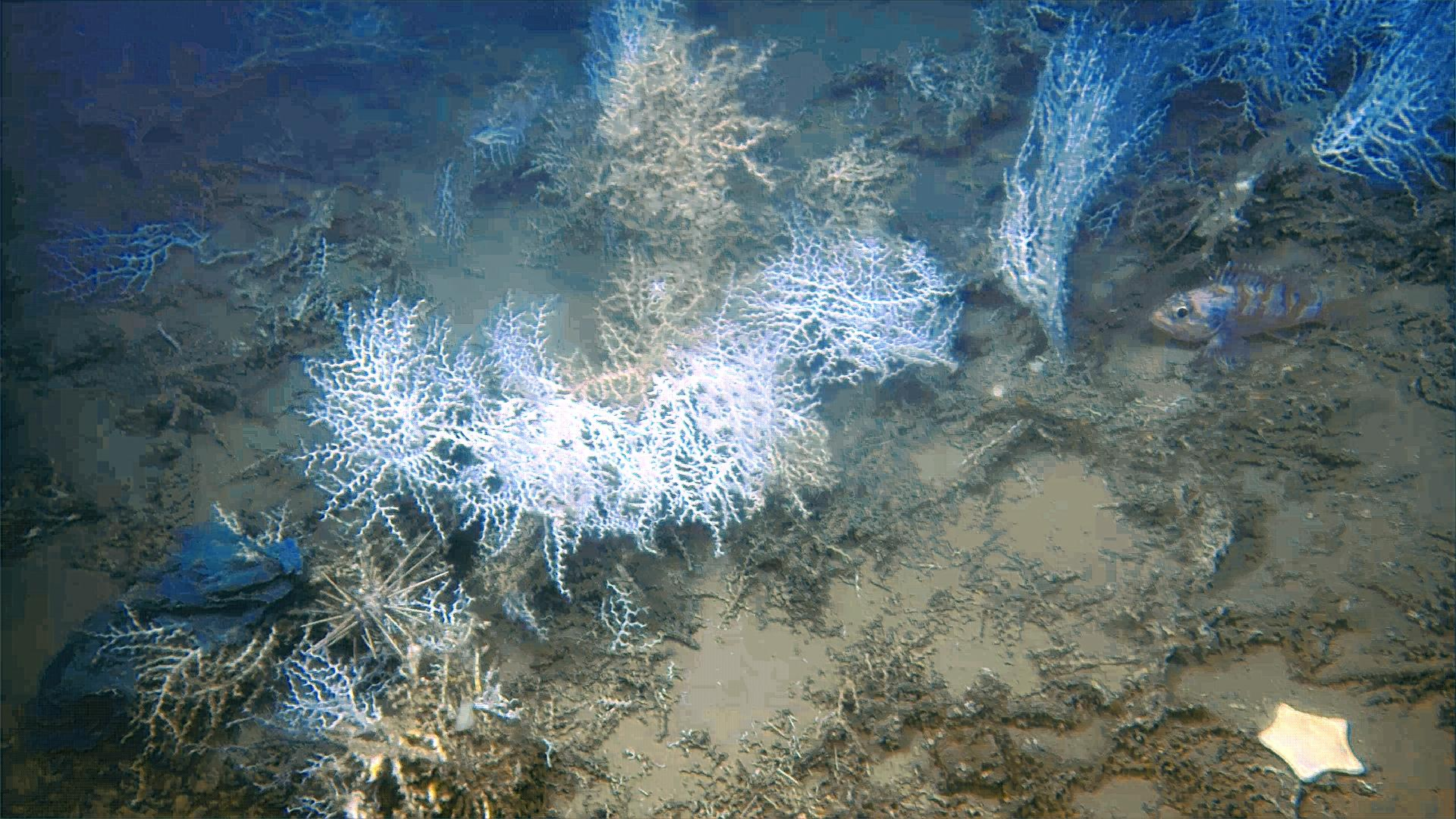

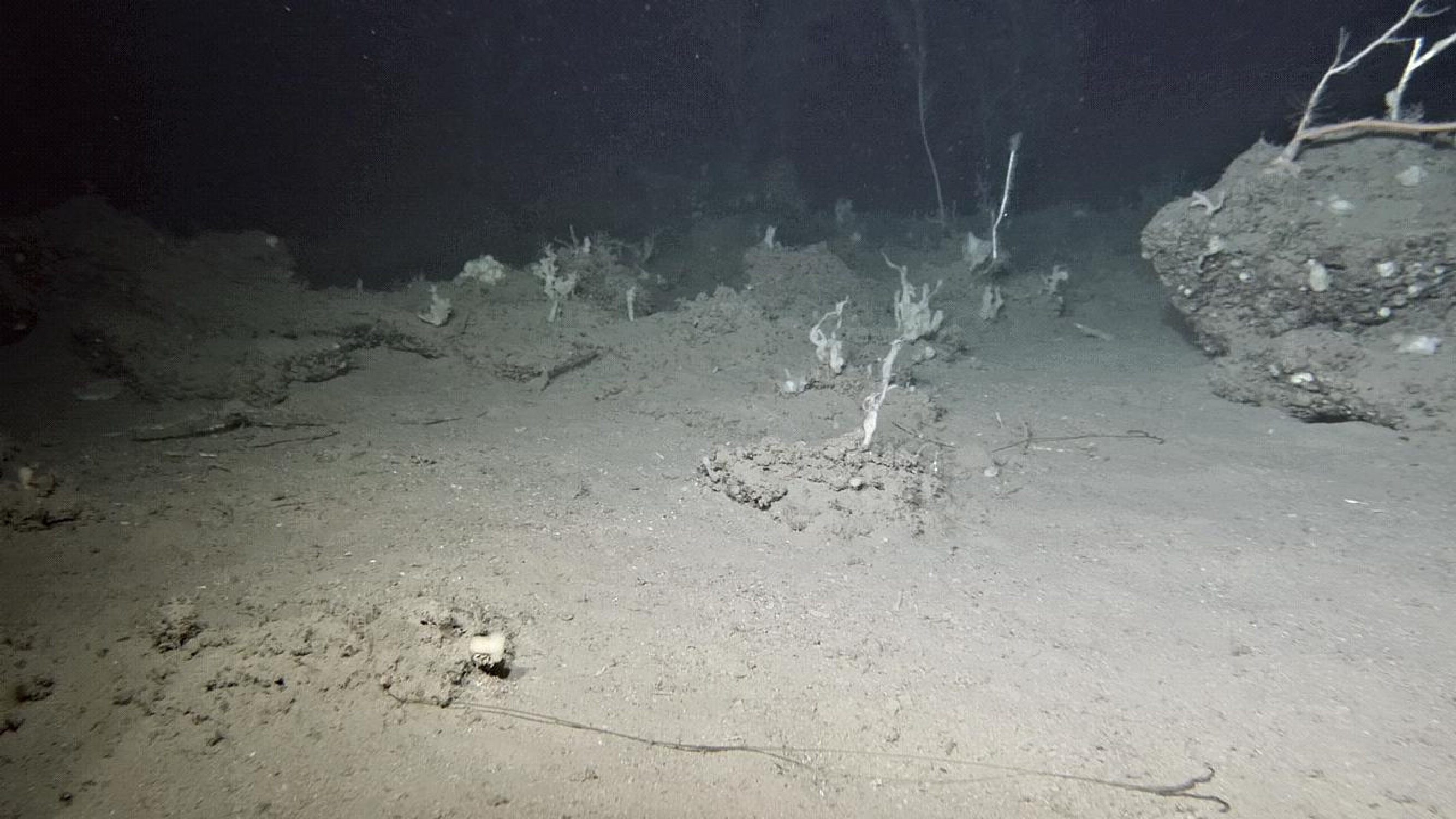

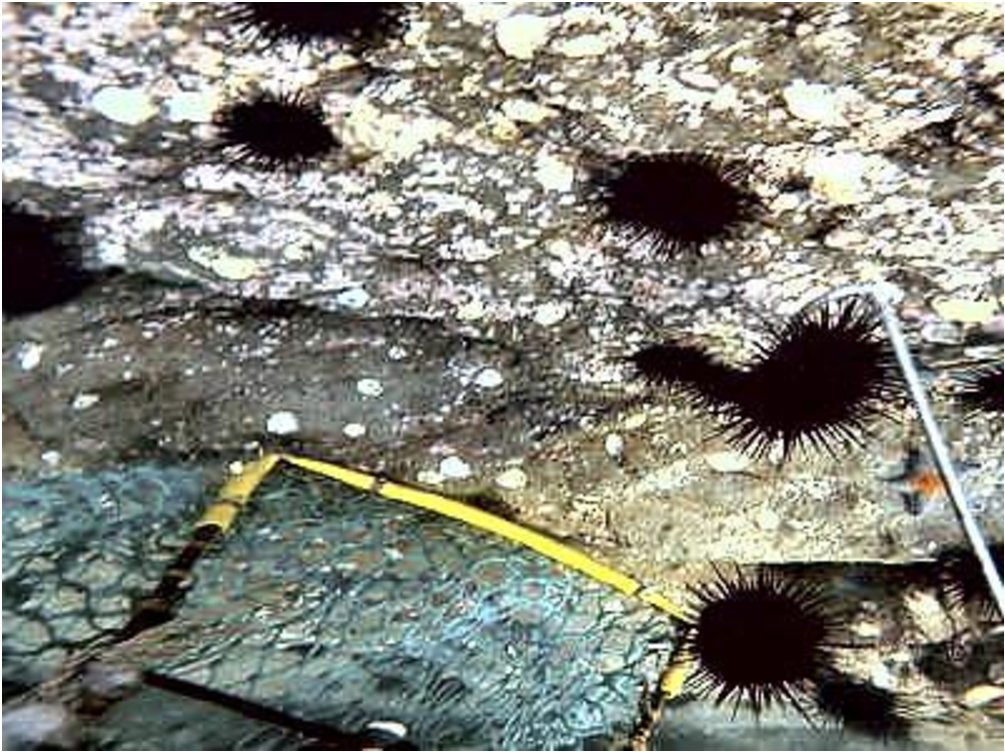

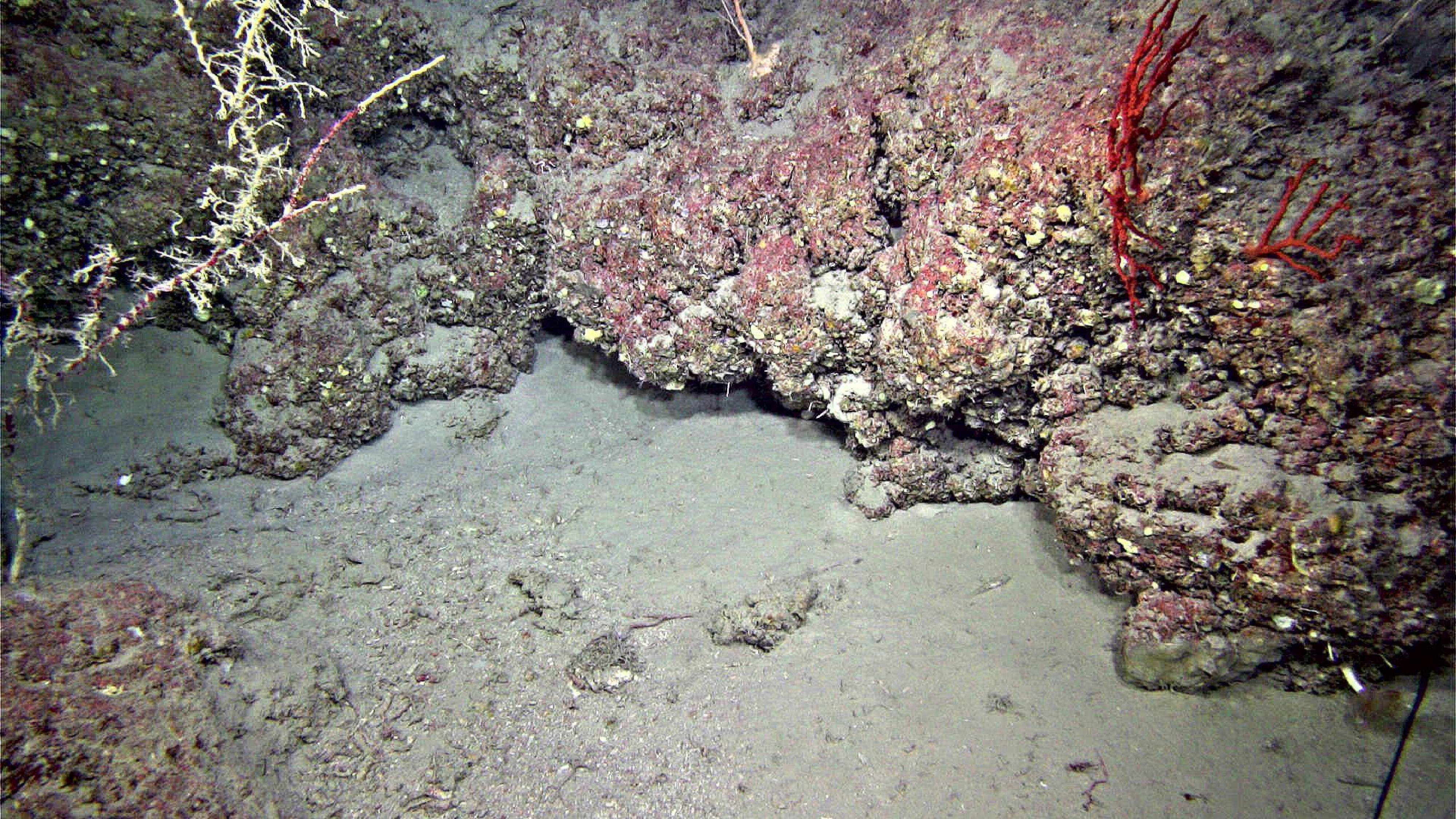

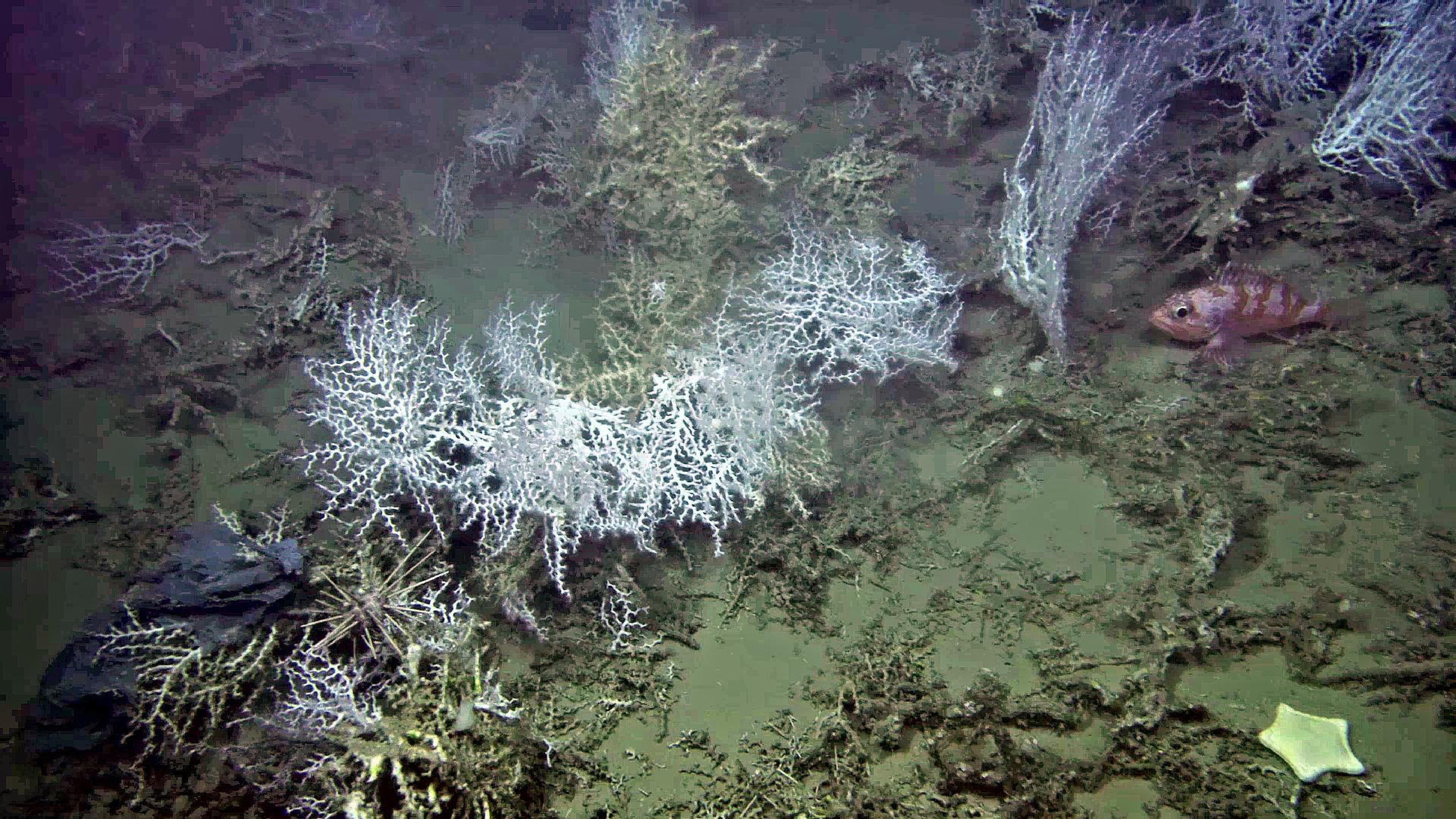

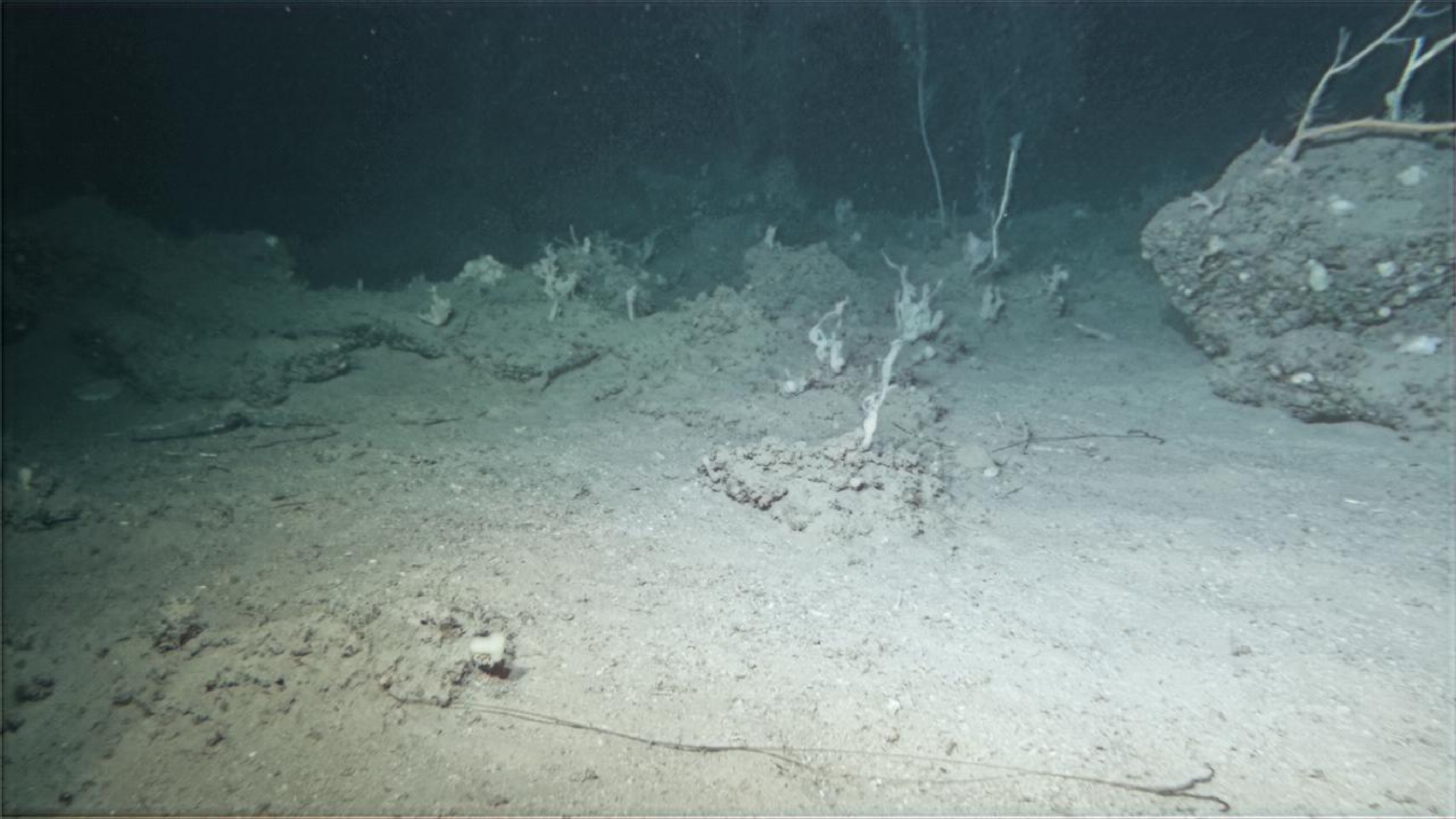

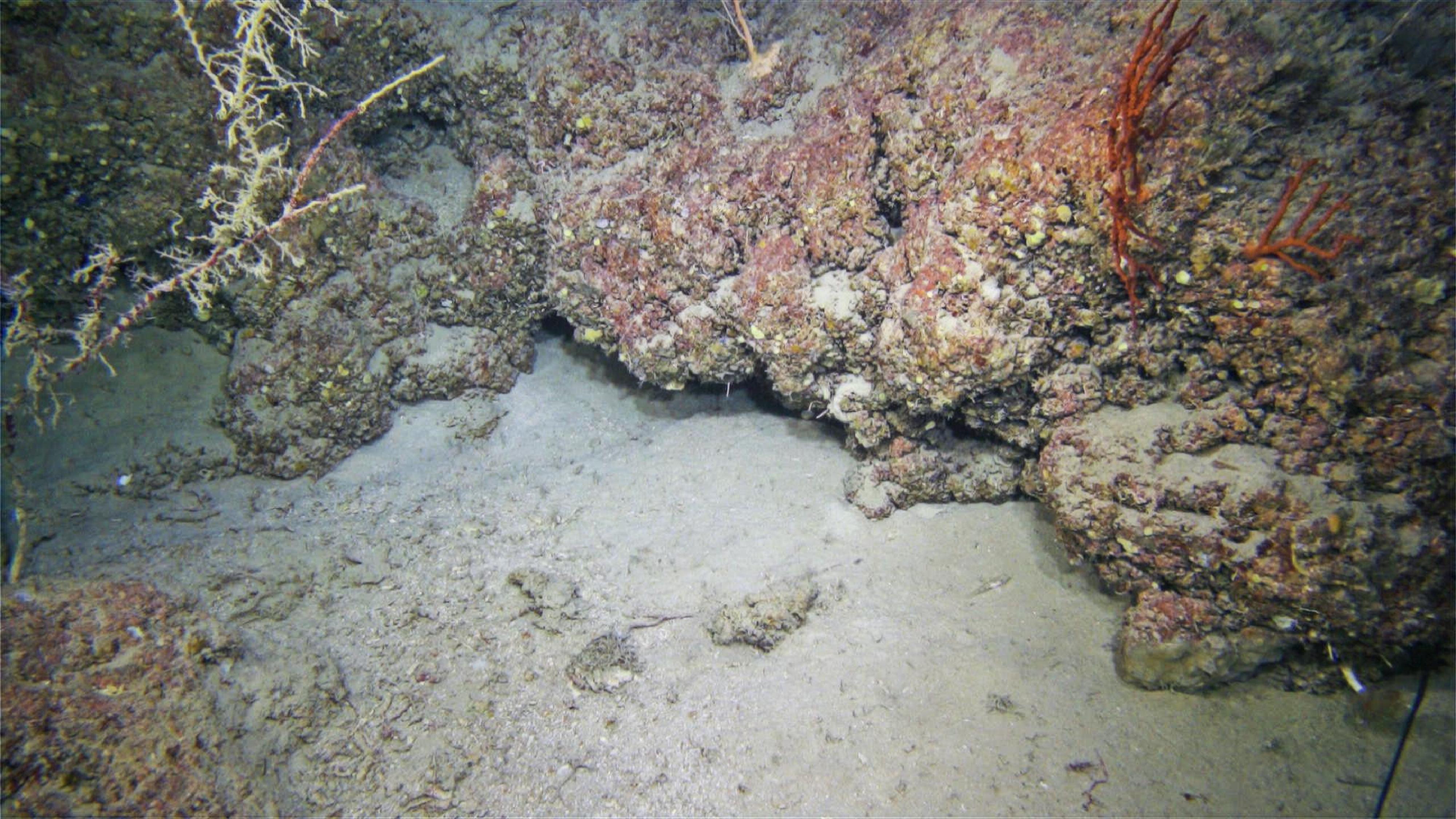

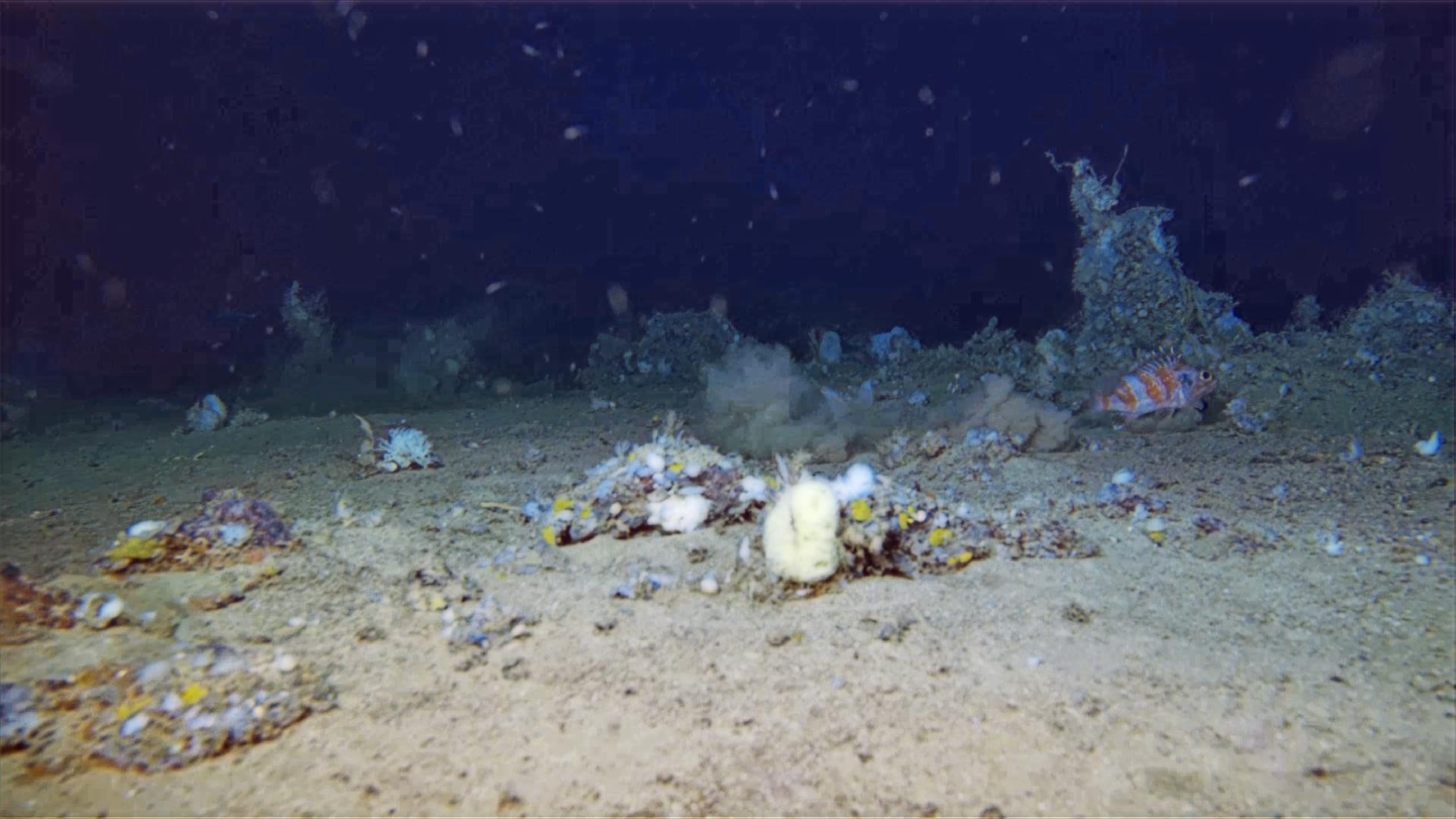

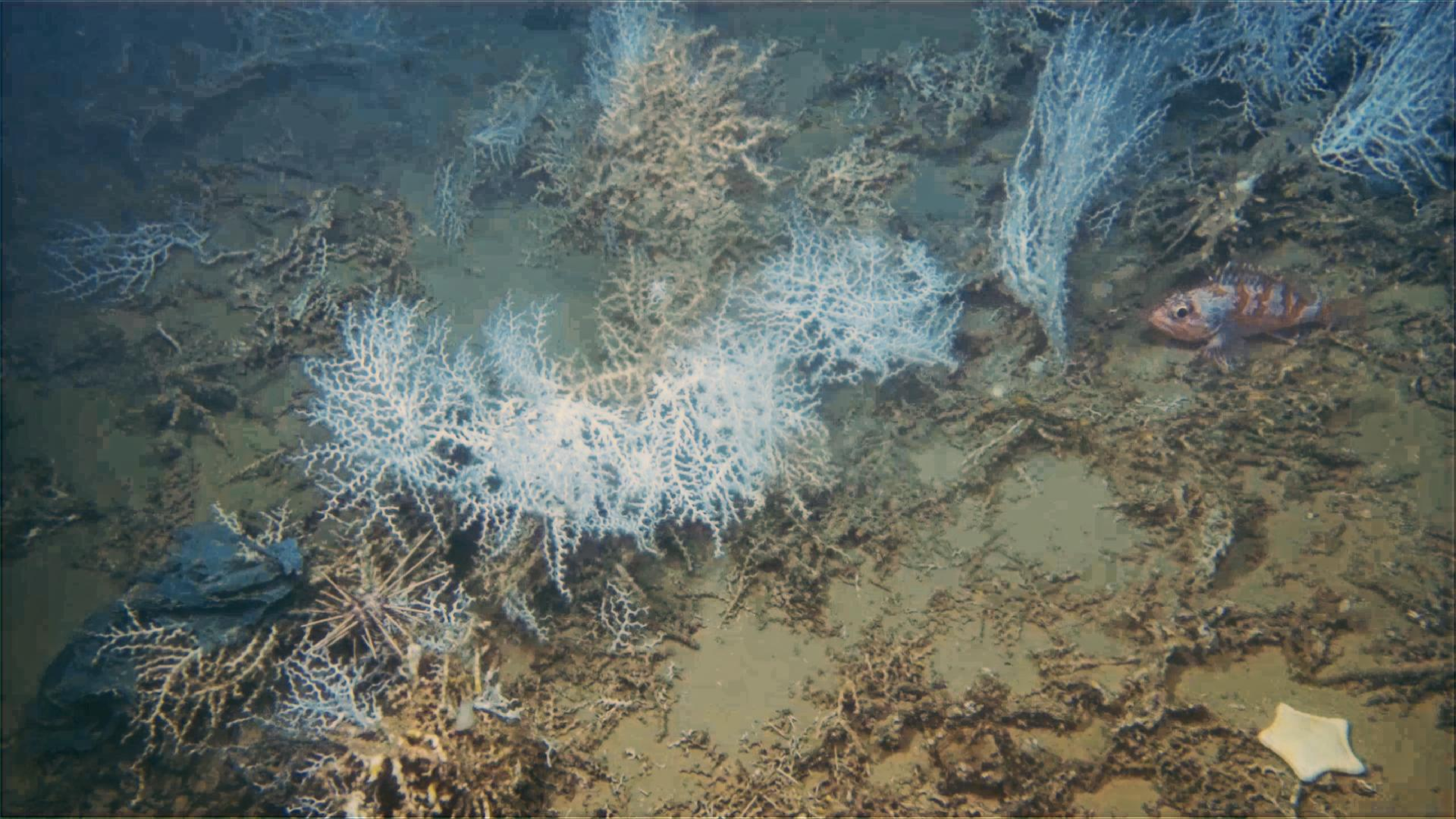

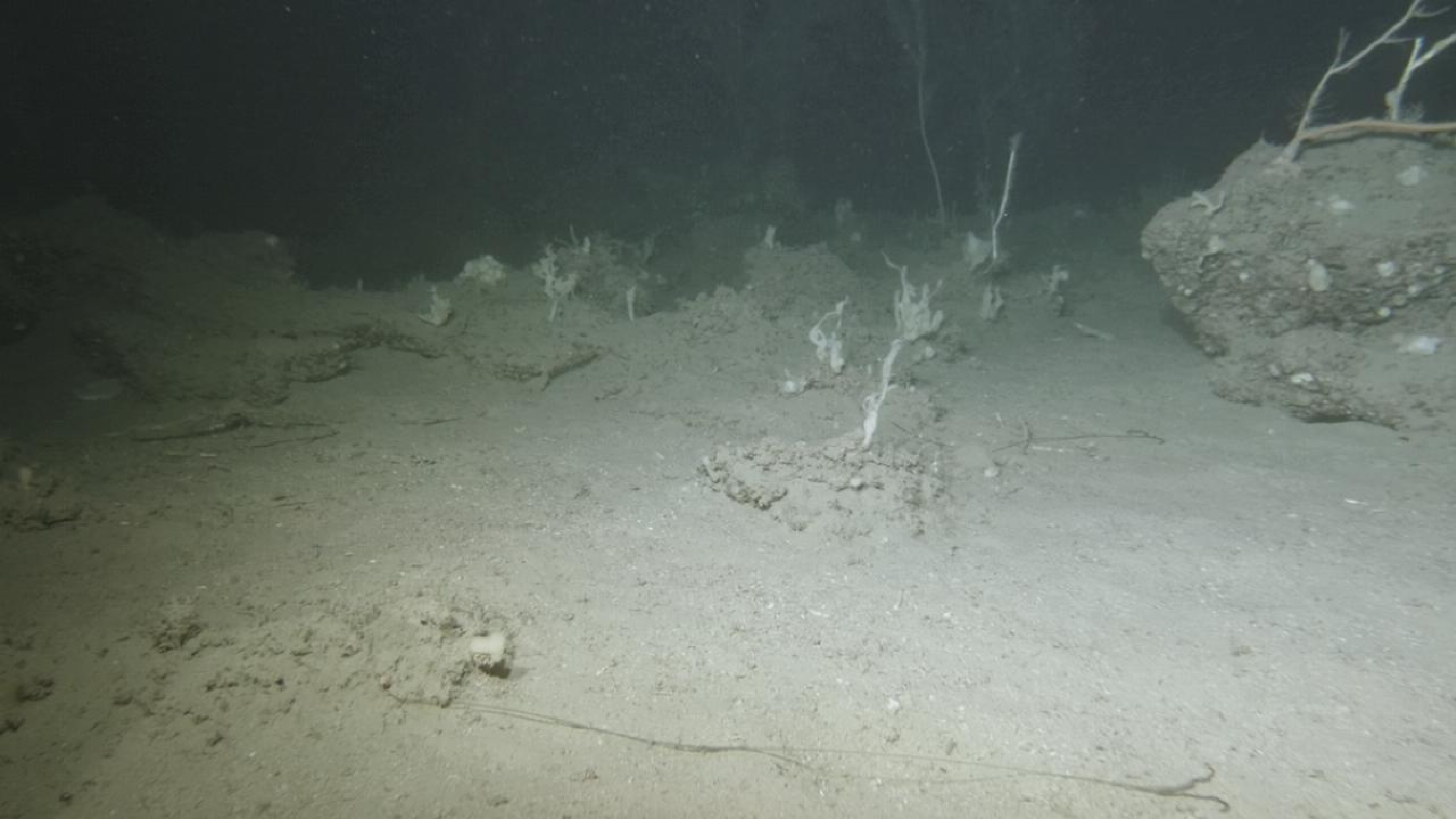

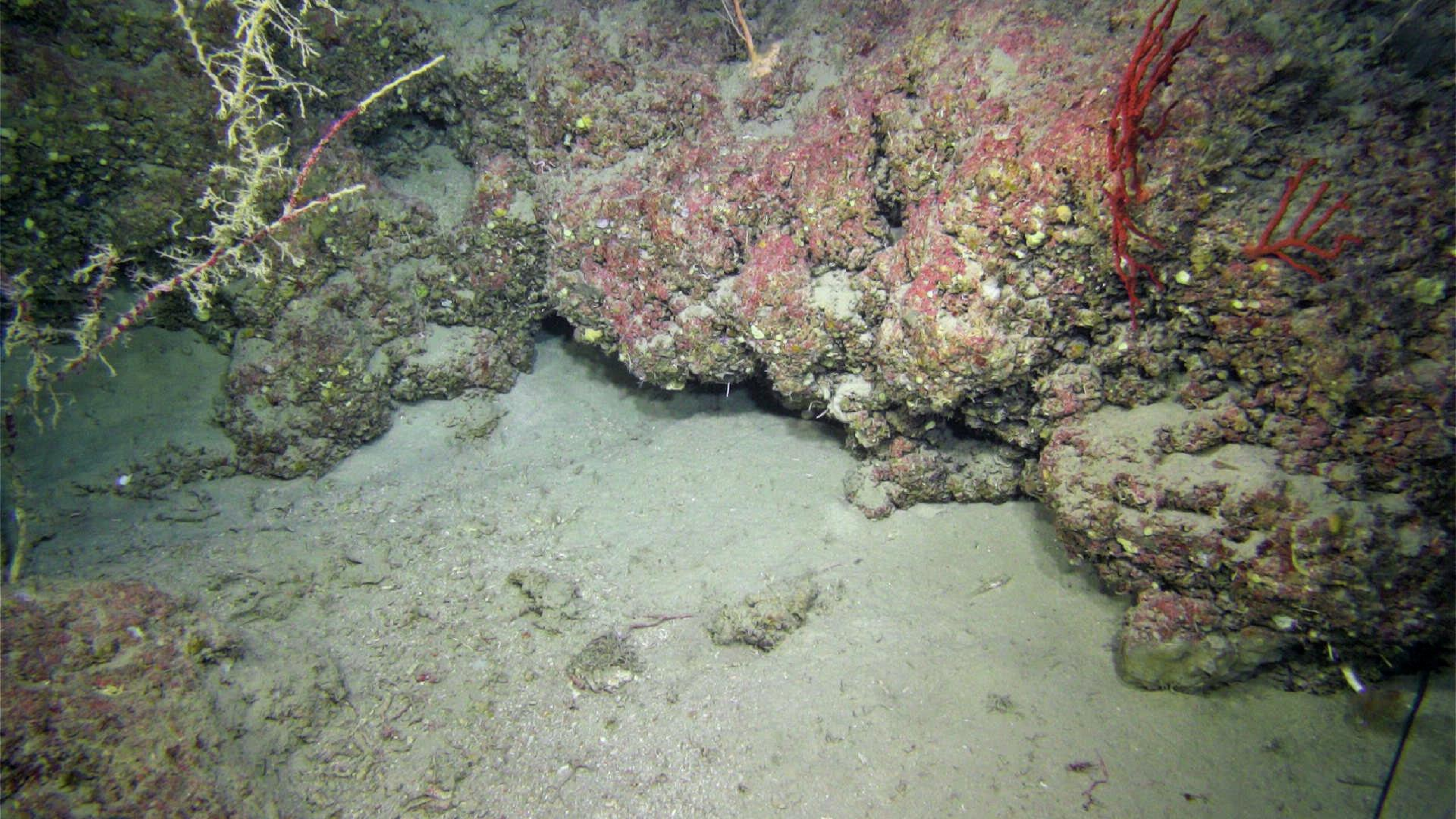

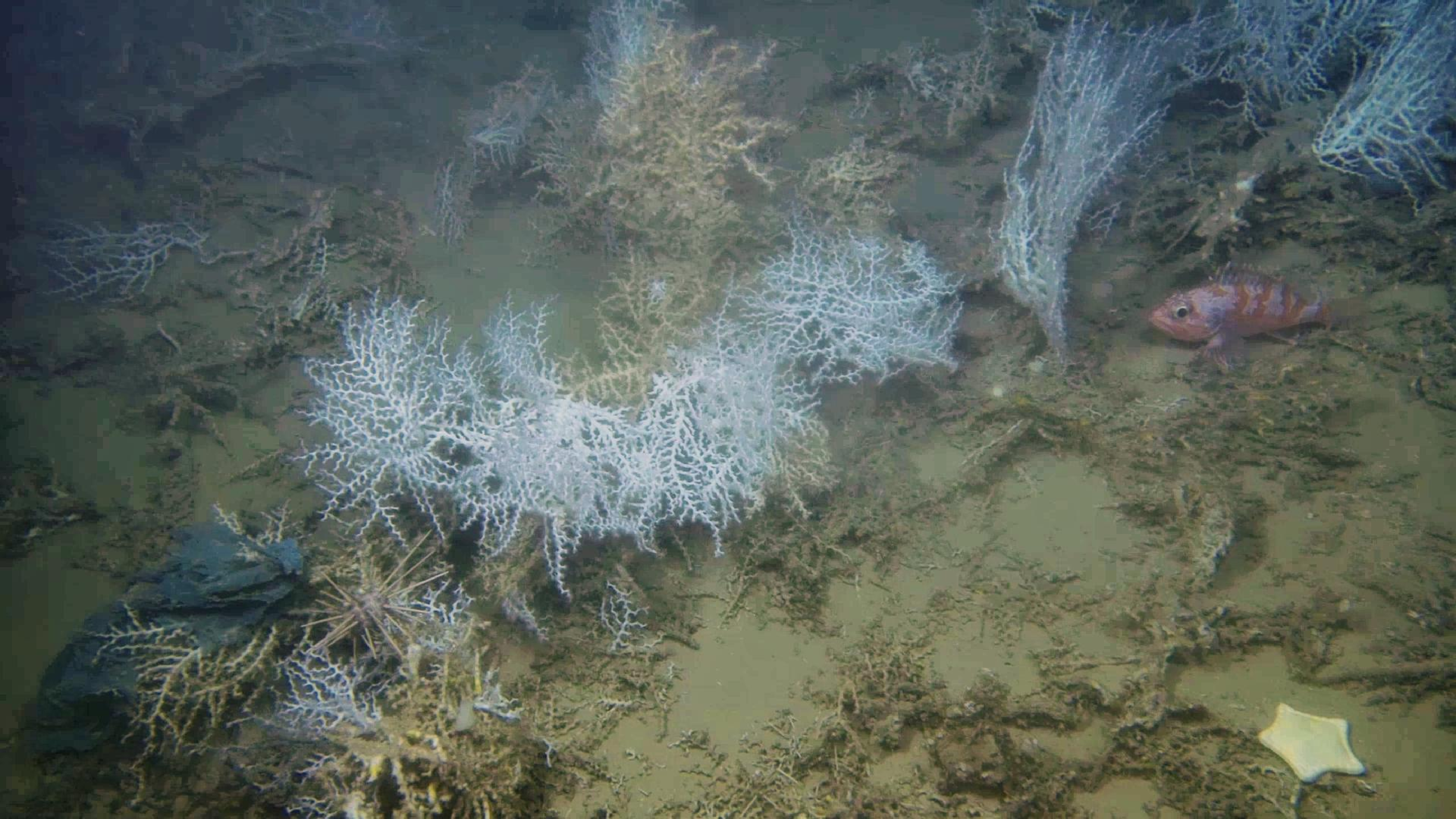

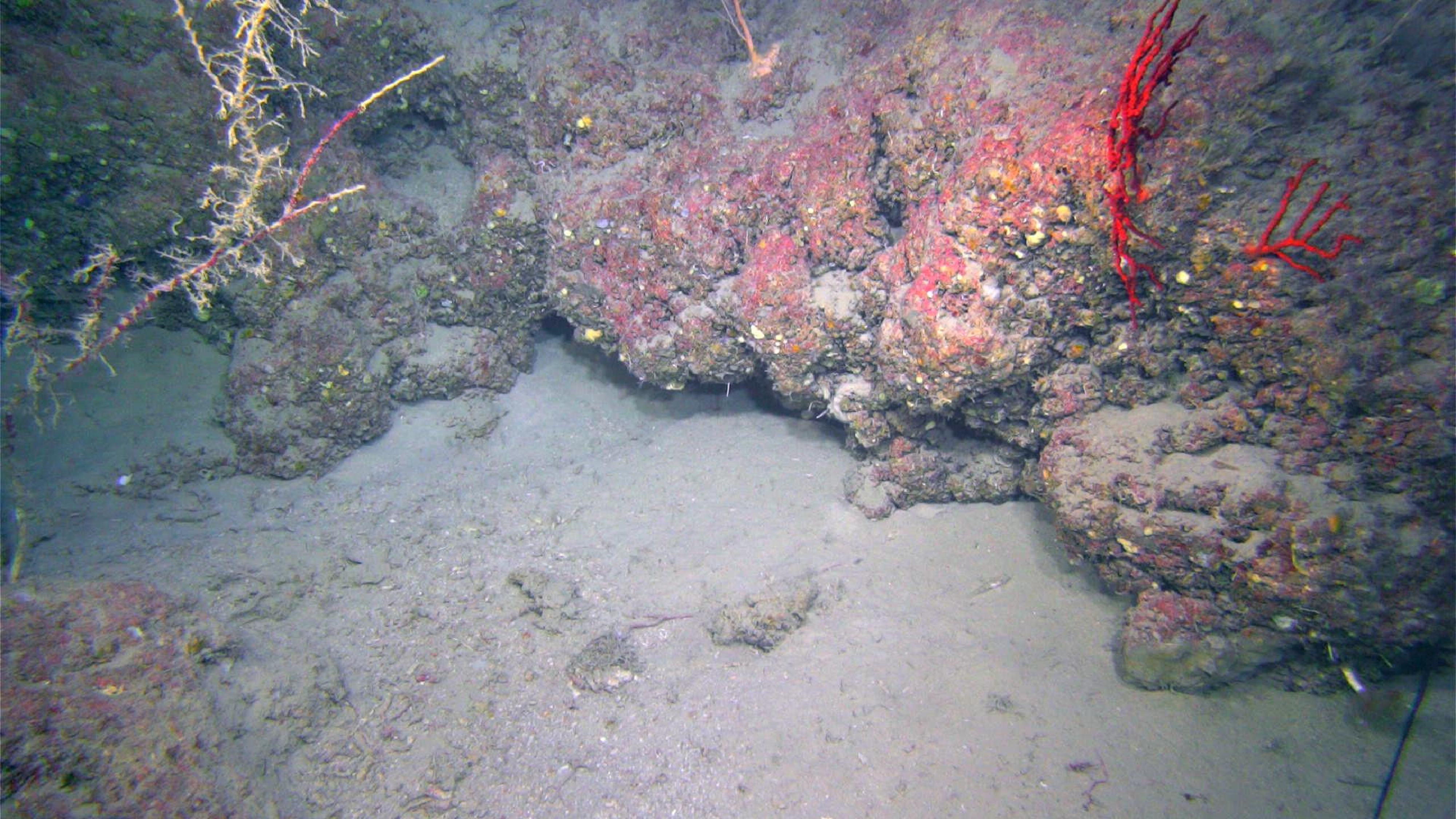

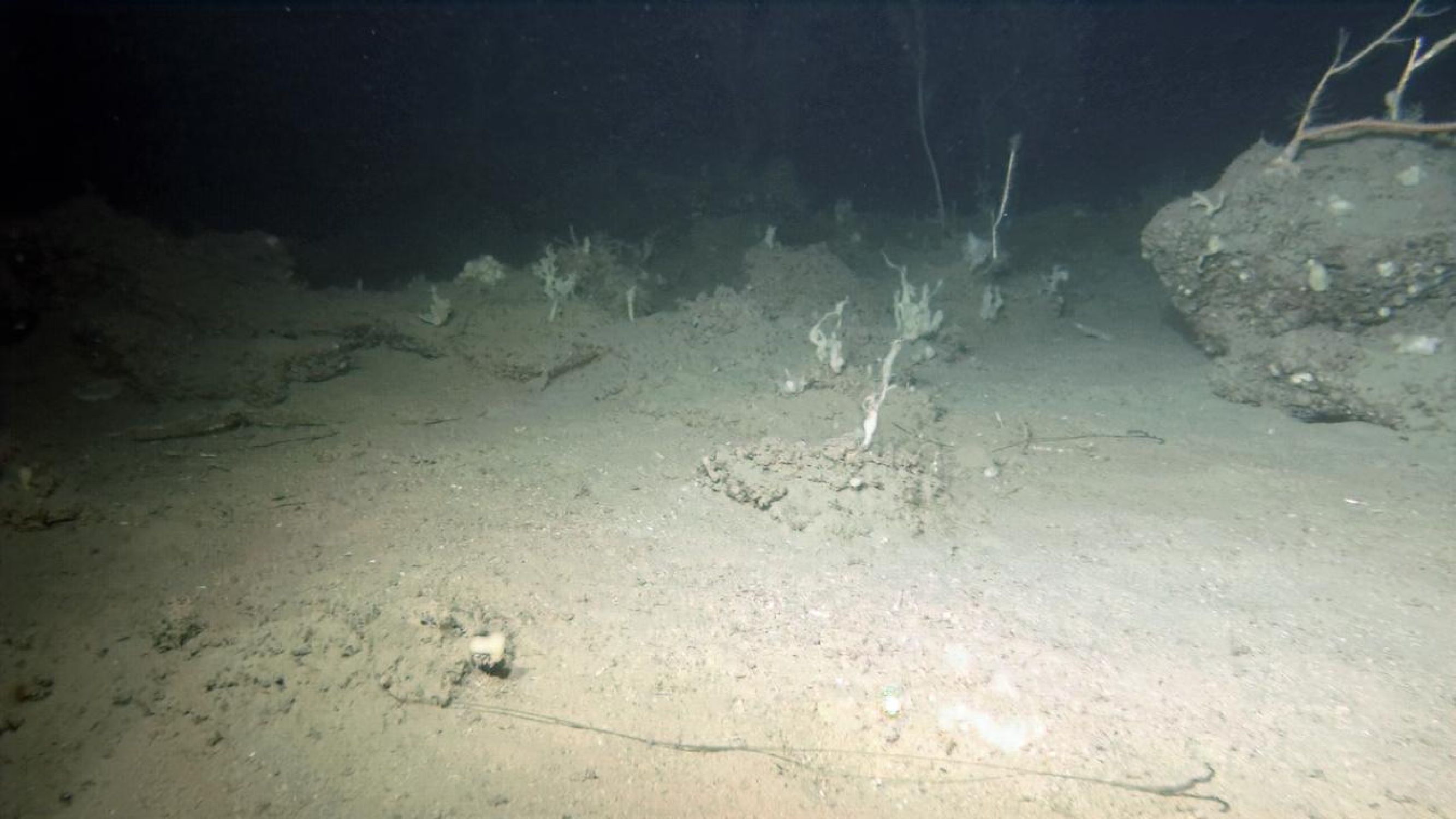

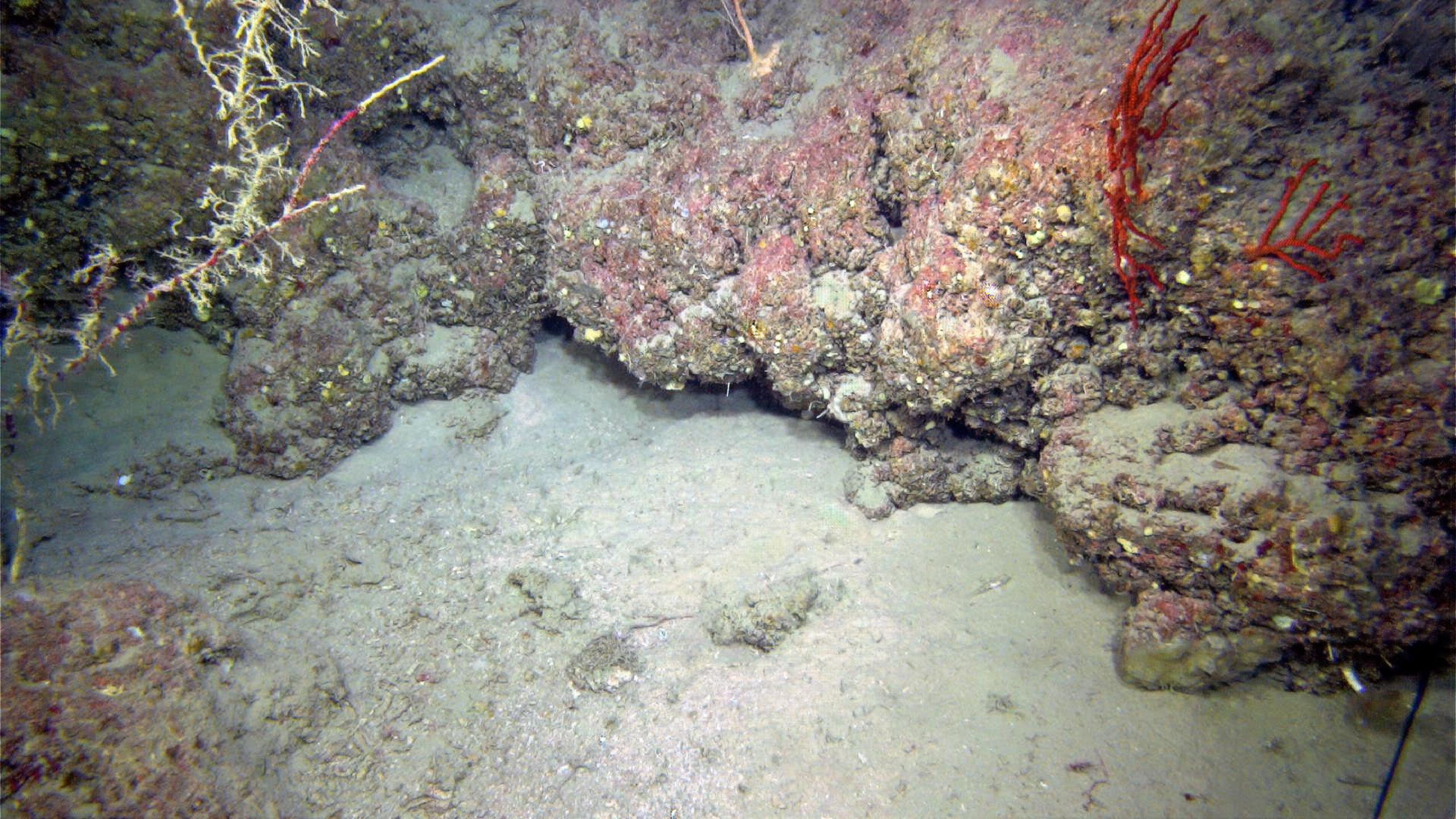

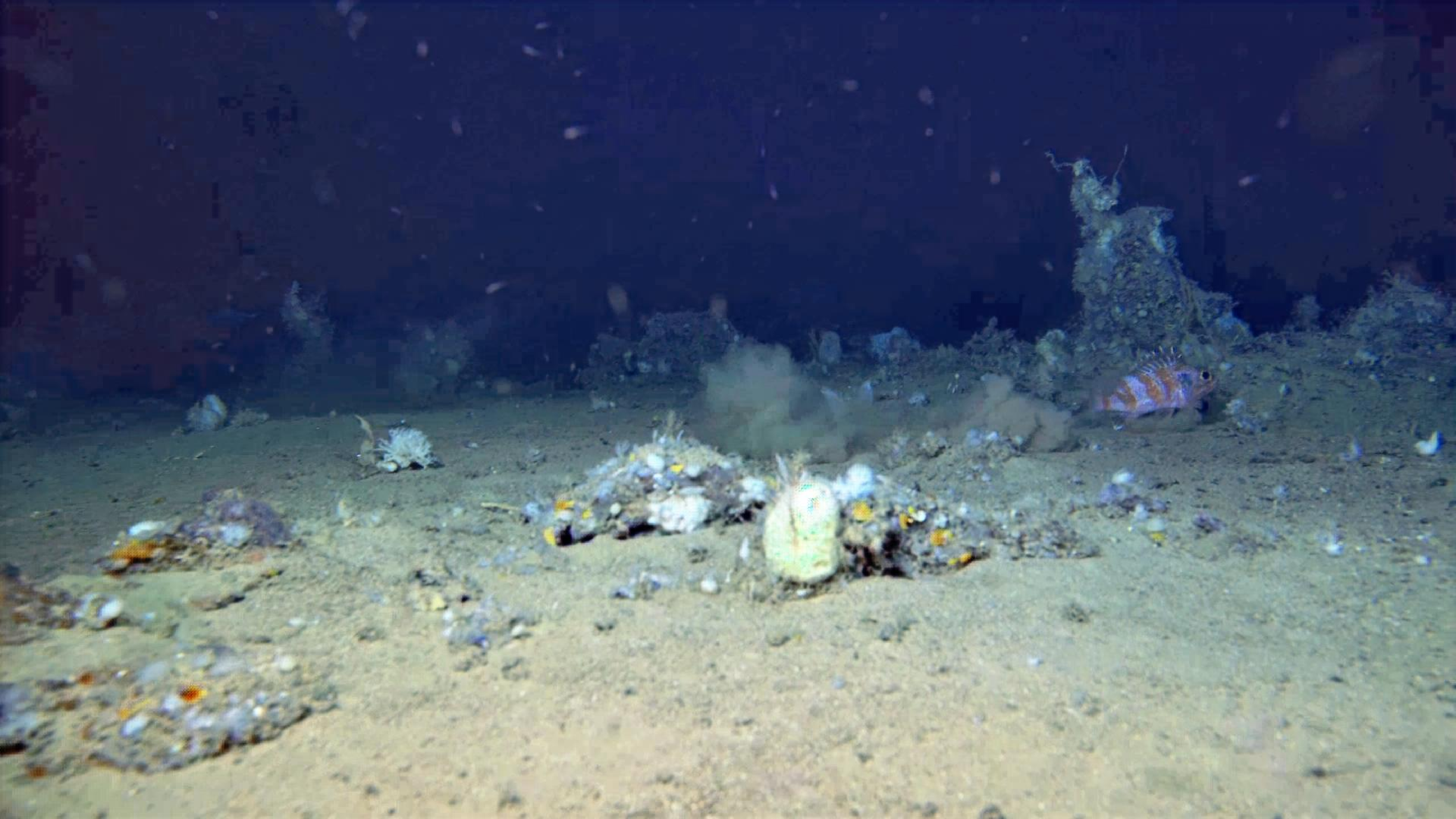

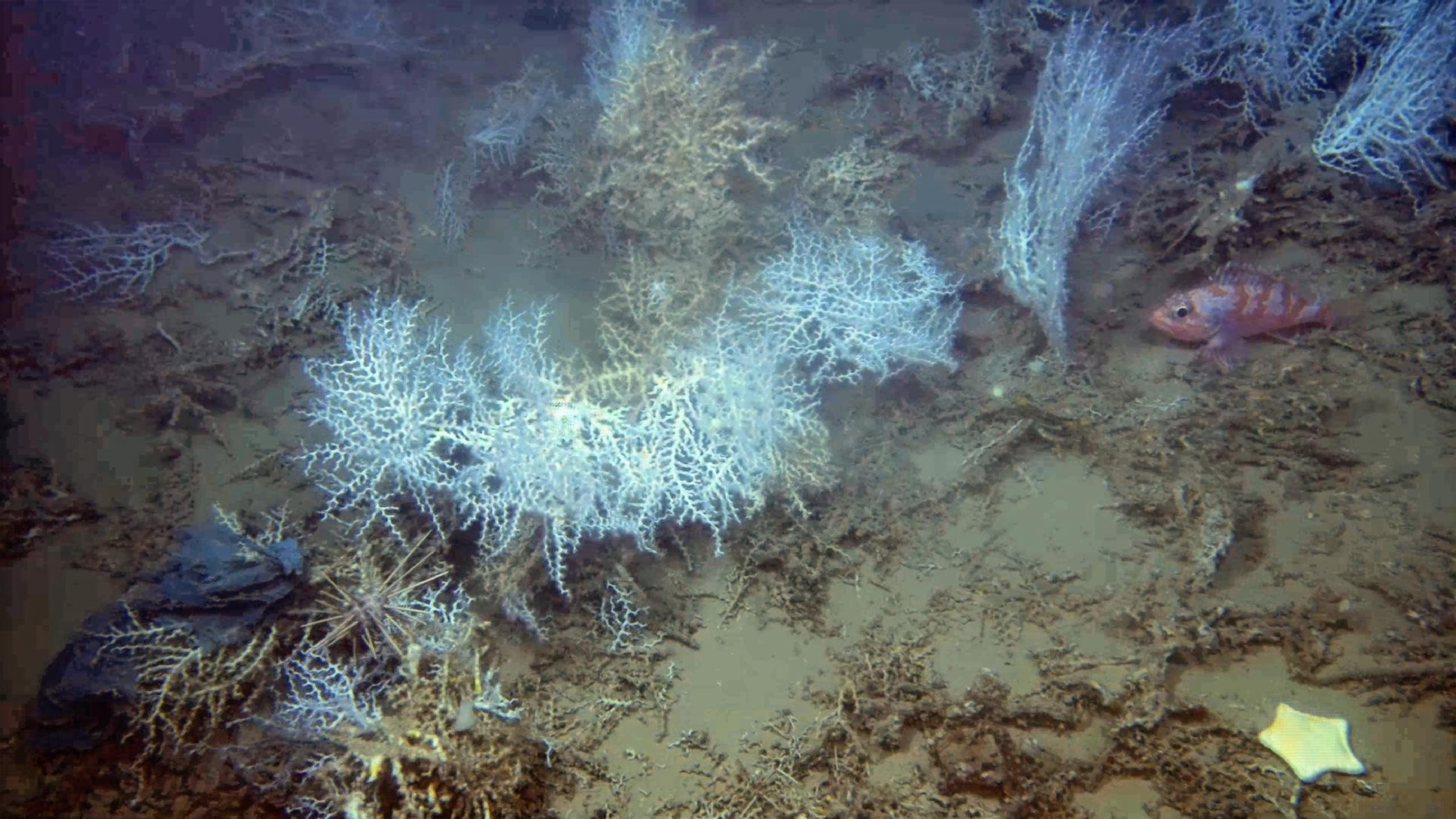

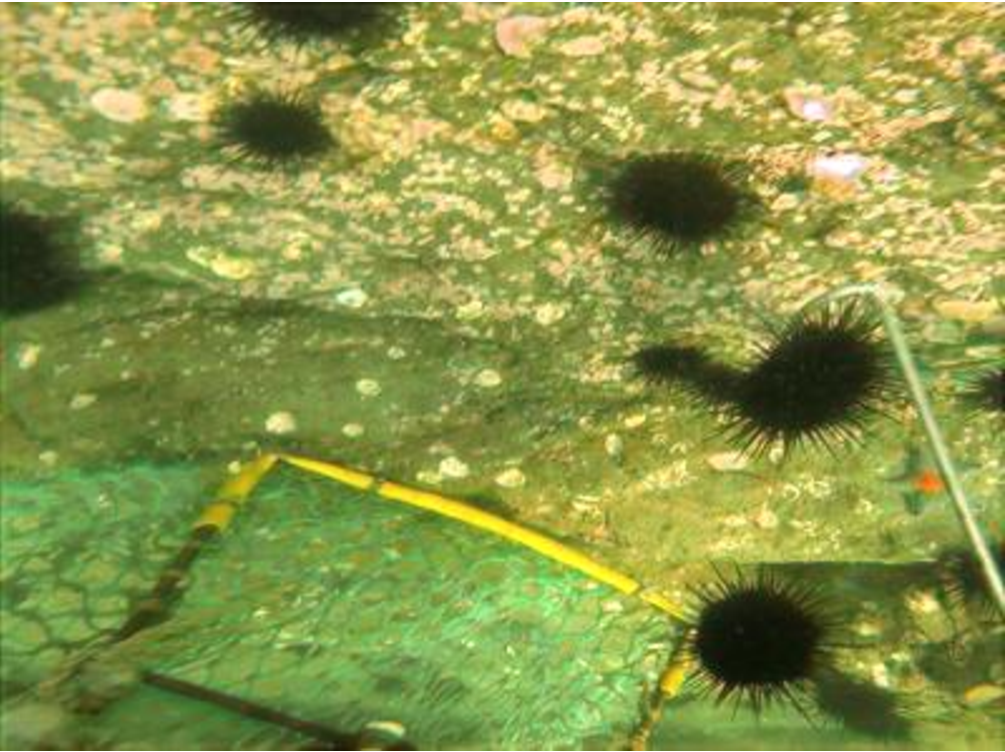

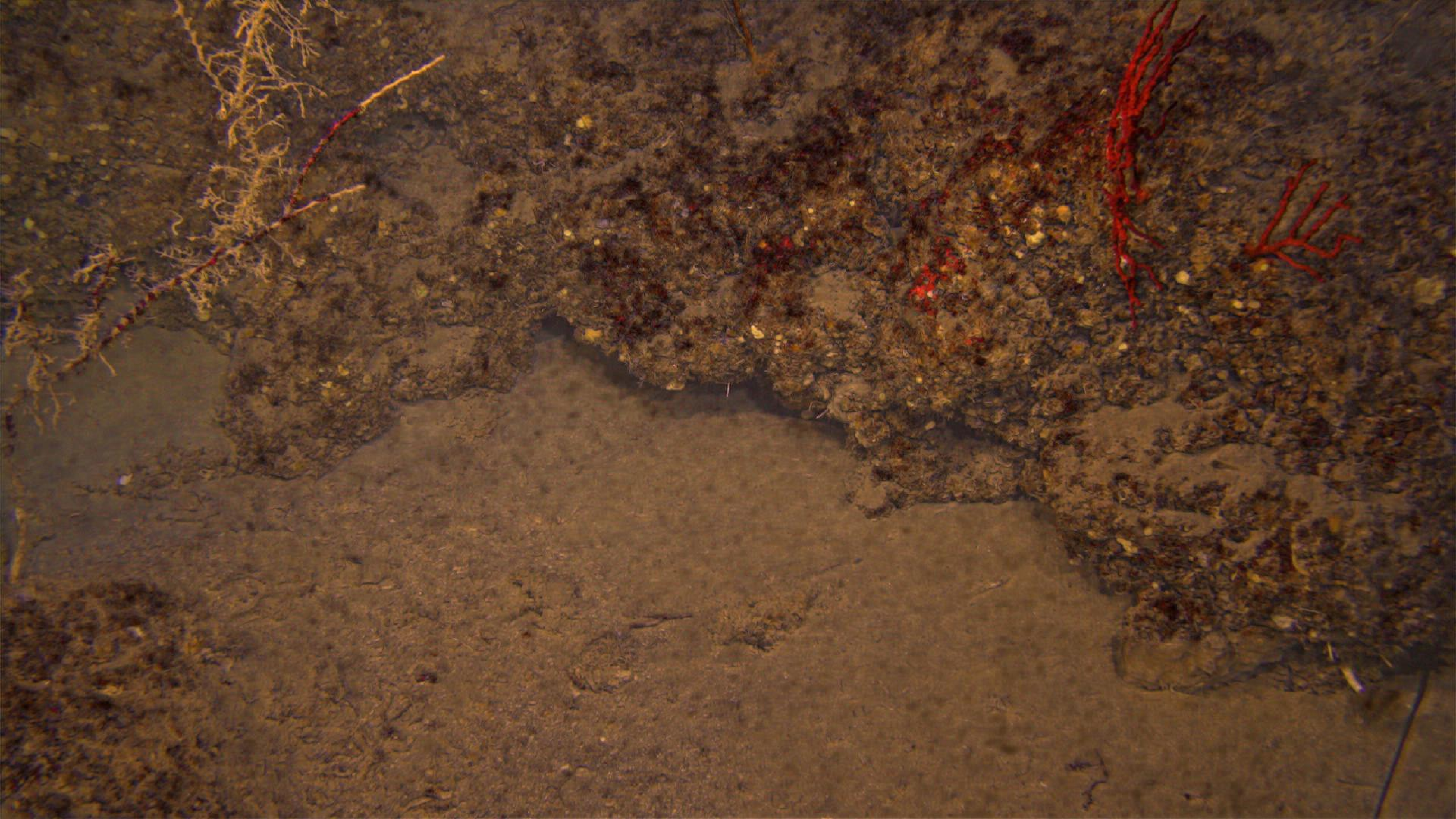

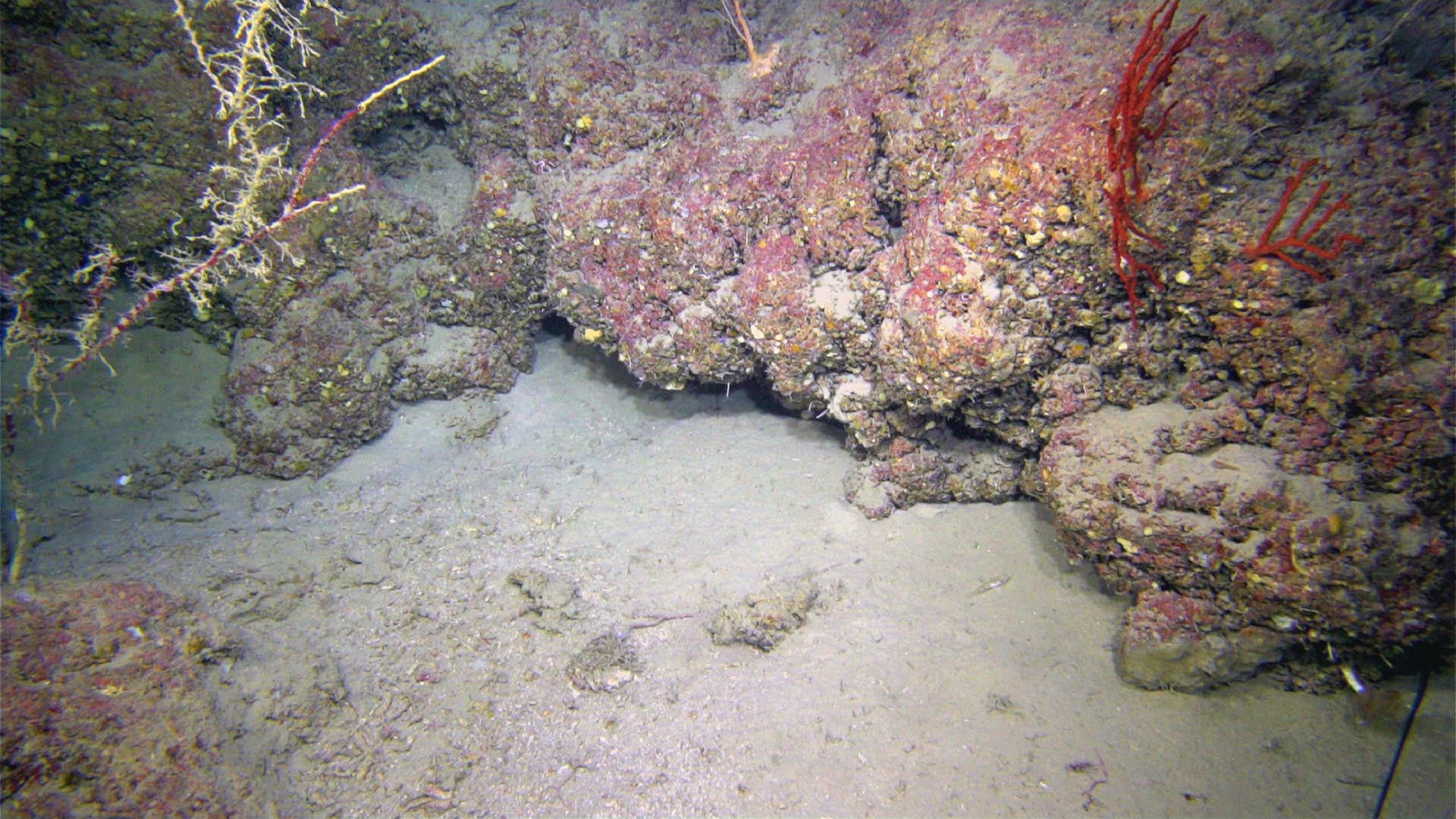

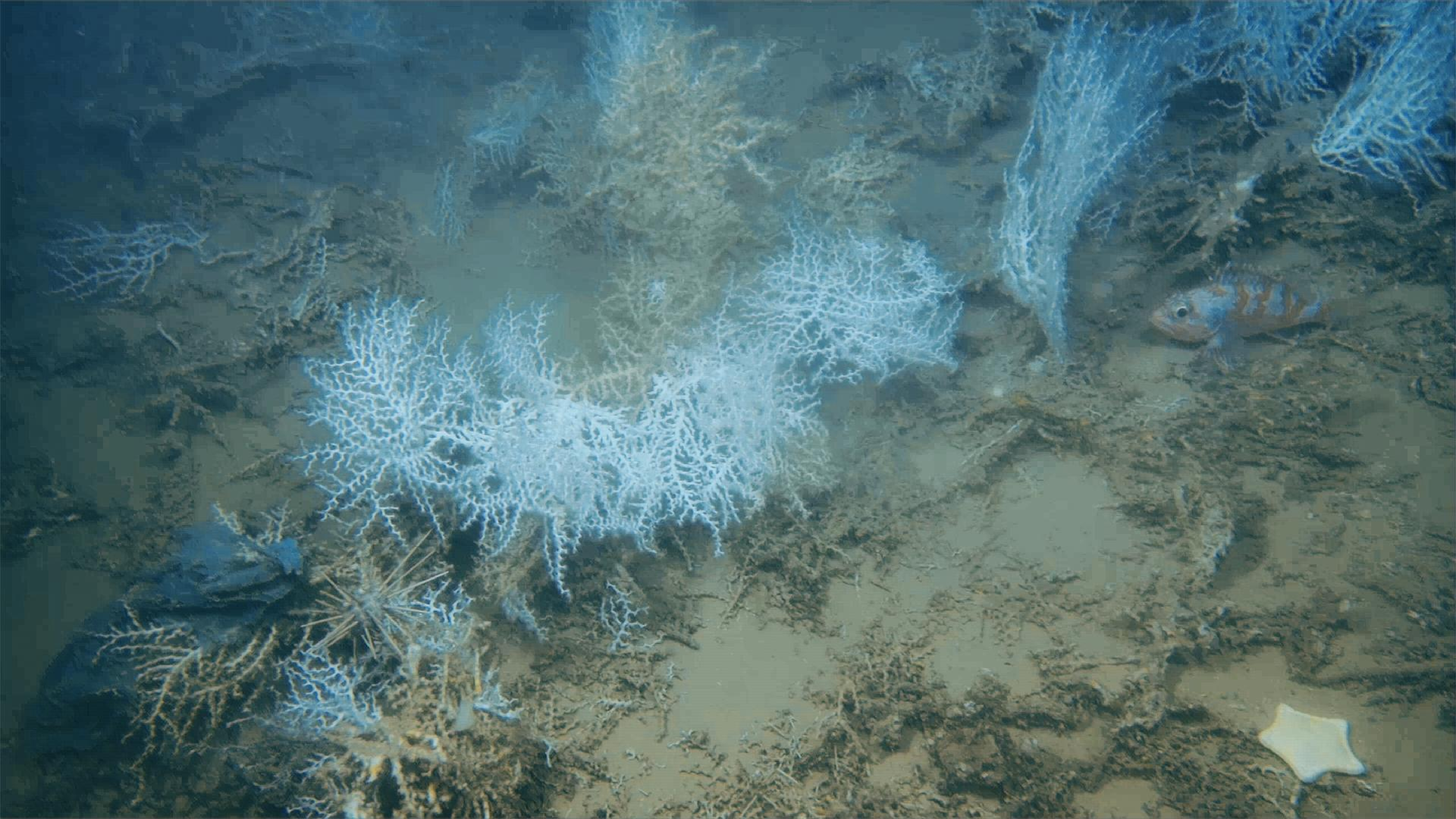

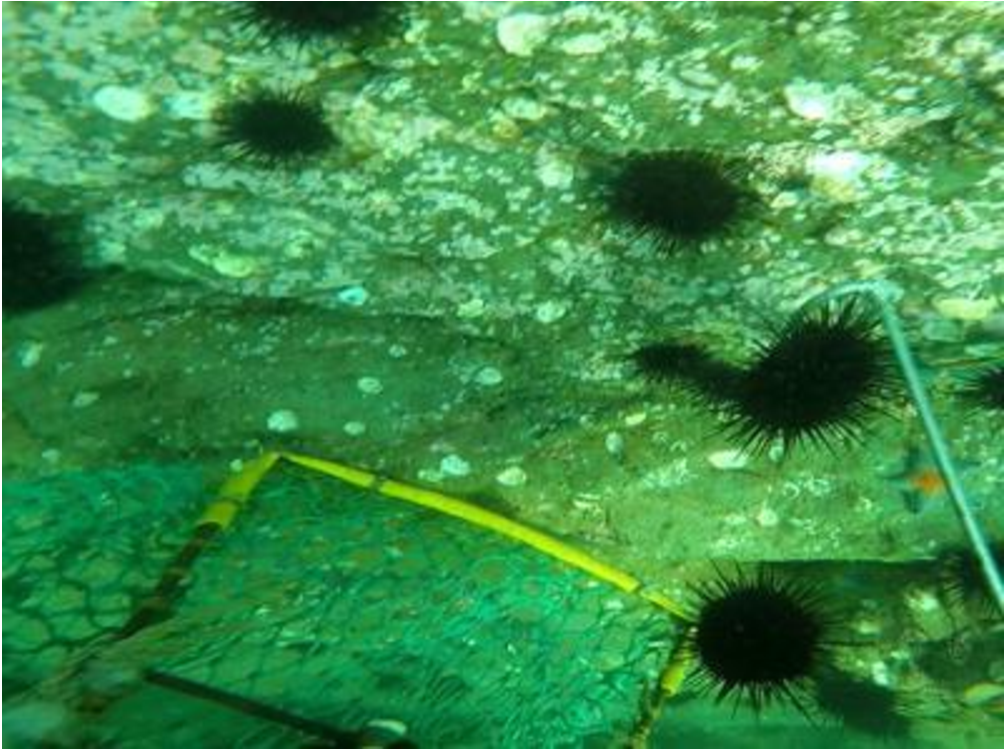

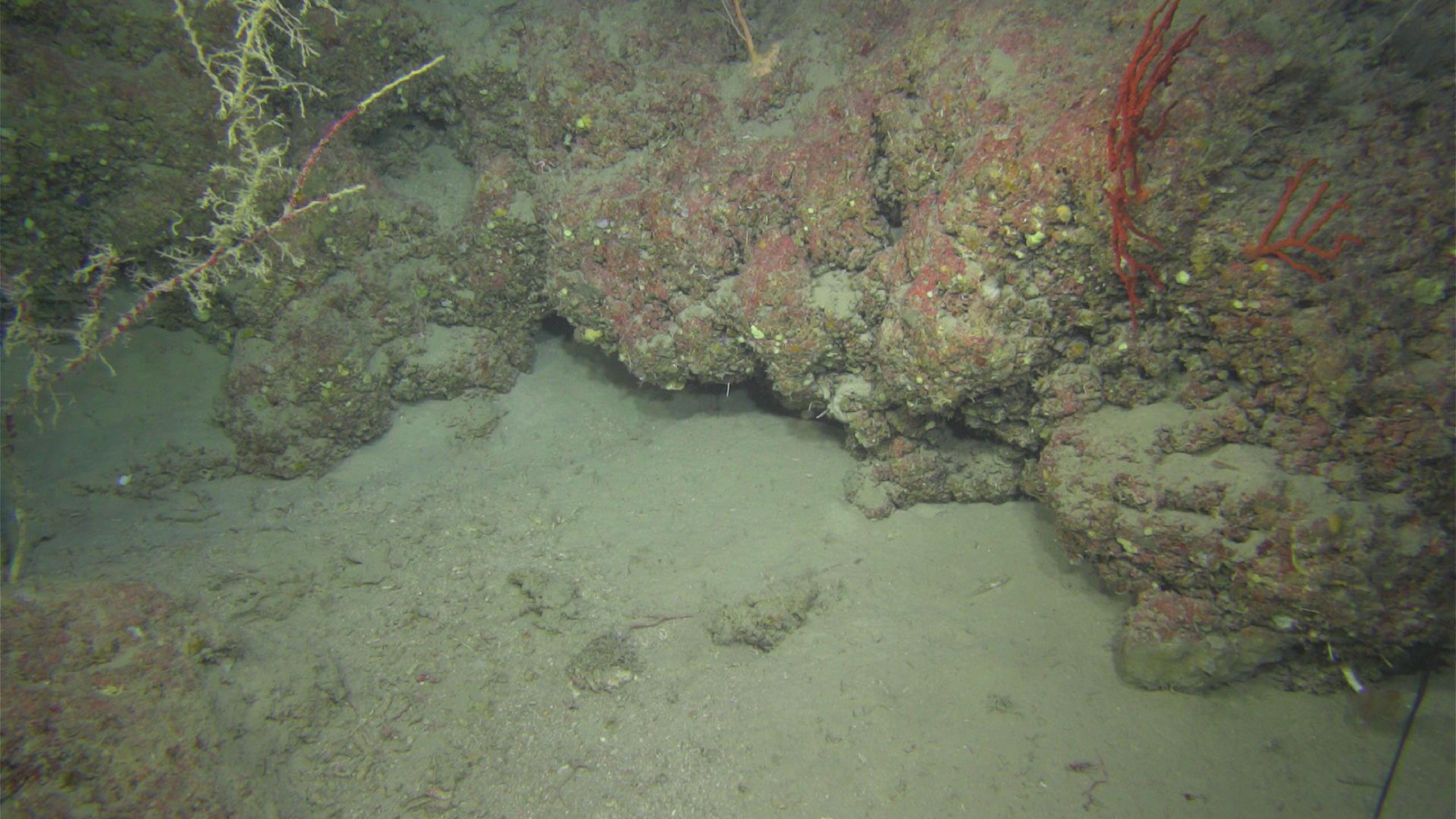

Our design is inspired by recent dual-domain frequency-spatial UIE frameworks such as [16], [17]. These frameworks demonstrate the effectiveness of processing Fourier components to restore texture details and to decouple degradation factors in the frequency domain. However, neither approach explicitly models illumination imbalance or depth-dependent color attenuation, which motivates our integration of an illumination-aware enhancement branch. To better understand these limitations, Figure 1 presents a component-wise evaluation of our framework. The raw underwater inputs exhibit severe wavelength-dependent attenuation, color imbalance, and substantial loss of structural detail, as shown in Figure 1a. A conventional encoder-decoder network recovers part of the global illumination but fails to resolve complex color shifts or suppress low-frequency scattering, which produces visually inconsistent reconstructions, as shown in Figure 1b. Incorporating a frequency decomposition branch improves edge sharpness and restores suppressed textures. However, it lacks the contextual awareness required to regulate low-frequency haze and stabilize global color correction, as shown in Figure 1c. Our complete architecture, AQUA-Net, unifies these complementary cues by combining three components: a refined encoder-decoder backbone for global correction, a frequency-guided enhancement block to recover fine-scale structures, and an illumination estimation branch that stabilizes brightness across depth-varying regions, as depicted in Figure 1d. This coordinated design produces a more coherent and visually accurate reconstruction that recovers balanced colors, restores scene contrast, and preserves high-frequency texture across diverse underwater conditions. Motivated by these observations, we propose AQUA-Net as a unified UIE framework designed to jointly address illumination imbalance, structural degradation, and wavelength-dependent color distortion.
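The Fourier vocabulary used above can be illustrated with a minimal pure-Python sketch. It splits an image's spectrum into amplitude and phase and separates low-frequency content from high-frequency content with an ideal mask around the DC term. Fourier-based enhancement work often observes that the amplitude carries much of the global brightness, color, and haze information, while the phase carries structure. The sketch illustrates that decomposition only. It is not AQUA-Net's frequency fusion encoder, which operates on learned feature maps. The naive DFT, the square mask, and the `cutoff` parameter are illustrative assumptions.

```python
import cmath
import math
from typing import List, Tuple

Real2D = List[List[float]]
Complex2D = List[List[complex]]


def dft2(x: Real2D) -> Complex2D:
    """Naive 2-D DFT, O((h*w)^2) overall; fine for tiny illustrative arrays."""
    h, w = len(x), len(x[0])
    return [[sum(x[m][n] * cmath.exp(-2j * math.pi * (u * m / h + v * n / w))
                 for m in range(h) for n in range(w))
             for v in range(w)]
            for u in range(h)]


def idft2(f: Complex2D) -> Real2D:
    """Inverse 2-D DFT; keeps the real part (spectra here are Hermitian-symmetric)."""
    h, w = len(f), len(f[0])
    return [[(sum(f[u][v] * cmath.exp(2j * math.pi * (u * m / h + v * n / w))
                  for u in range(h) for v in range(w)) / (h * w)).real
             for n in range(w)]
            for m in range(h)]


def amplitude_phase(f: Complex2D) -> Tuple[Real2D, Real2D]:
    """Split each coefficient into amplitude |F| and phase angle(F)."""
    return ([[abs(c) for c in row] for row in f],
            [[cmath.phase(c) for c in row] for row in f])


def recombine(amp: Real2D, pha: Real2D) -> Complex2D:
    """Rebuild F = |F| * exp(i * phase) from amplitude and phase maps."""
    return [[cmath.rect(a, p) for a, p in zip(a_row, p_row)]
            for a_row, p_row in zip(amp, pha)]


def low_high_split(x: Real2D, cutoff: int = 1) -> Tuple[Real2D, Real2D]:
    """Ideal low-pass mask around DC (with DFT wrap-around); high = x - low."""
    h, w = len(x), len(x[0])
    f = dft2(x)

    def is_low(u: int, v: int) -> bool:
        # frequency index distance from DC, accounting for negative frequencies
        return min(u, h - u) <= cutoff and min(v, w - v) <= cutoff

    low = idft2([[f[u][v] if is_low(u, v) else 0j for v in range(w)] for u in range(h)])
    high = [[x[m][n] - low[m][n] for n in range(w)] for m in range(h)]
    return low, high
```

With `cutoff=0`, only the DC term survives, so the low-frequency part is a flat image at the mean intensity. The high-frequency part then holds every edge and texture. A practical version would use an FFT routine such as `numpy.fft.fft2` rather than these explicit loops.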

Moreover, underwater image and video analysis play a critical role in deep-sea exploration, ecological monitoring, robotic operations, and the evaluation of deep learning models. Existing benchmark datasets [9], [18], [19] have enabled significant advances in UIE and restoration. However, they mainly focus on shallow-water or laboratory conditions and do not adequately represent the challenging environments encountered in real deep-sea operations. Many existing datasets provide limited cov
