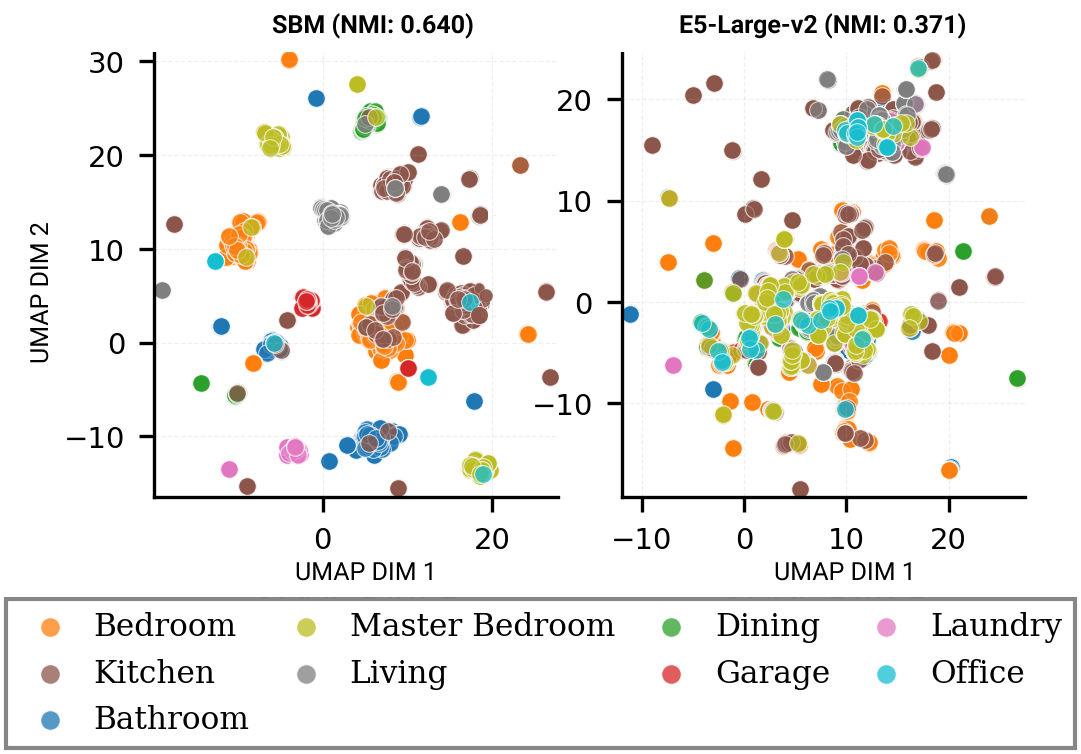

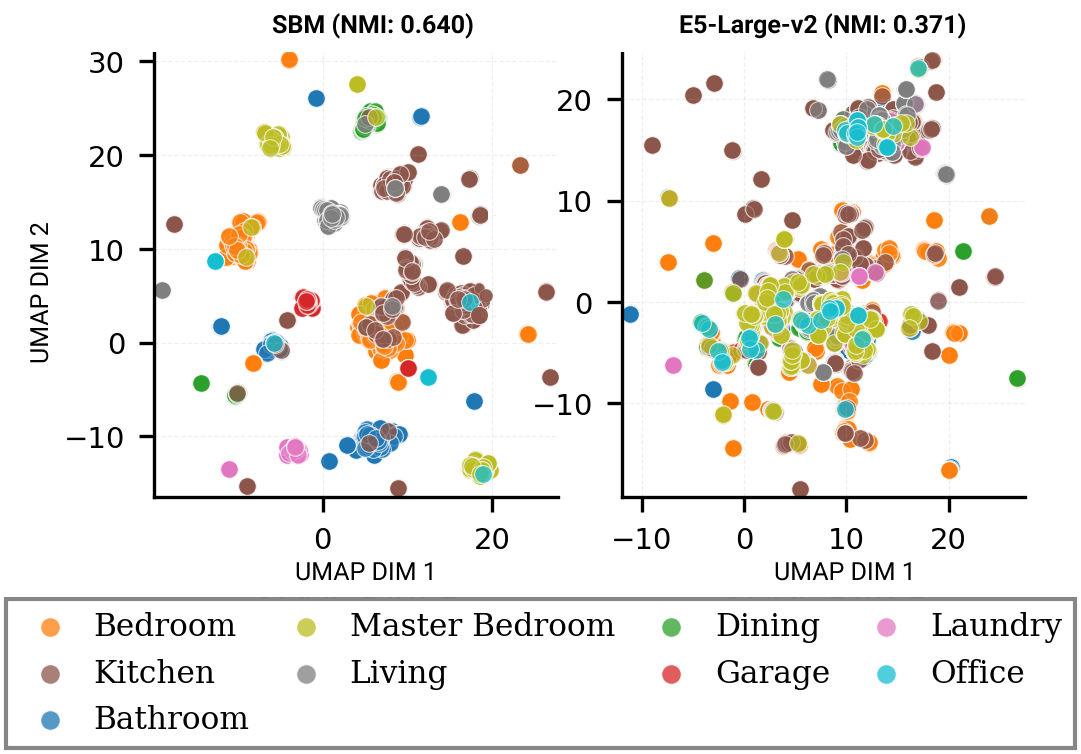

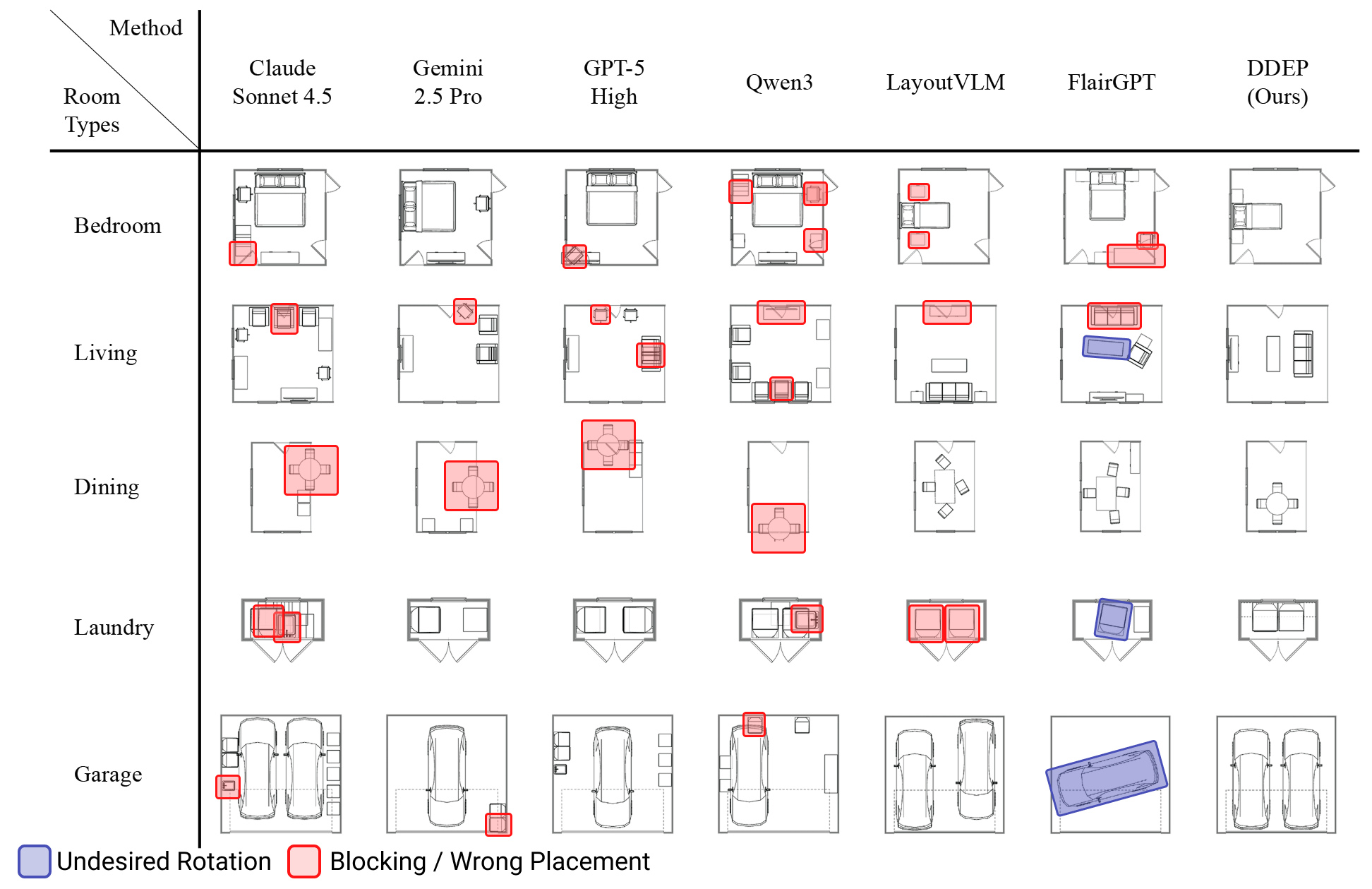

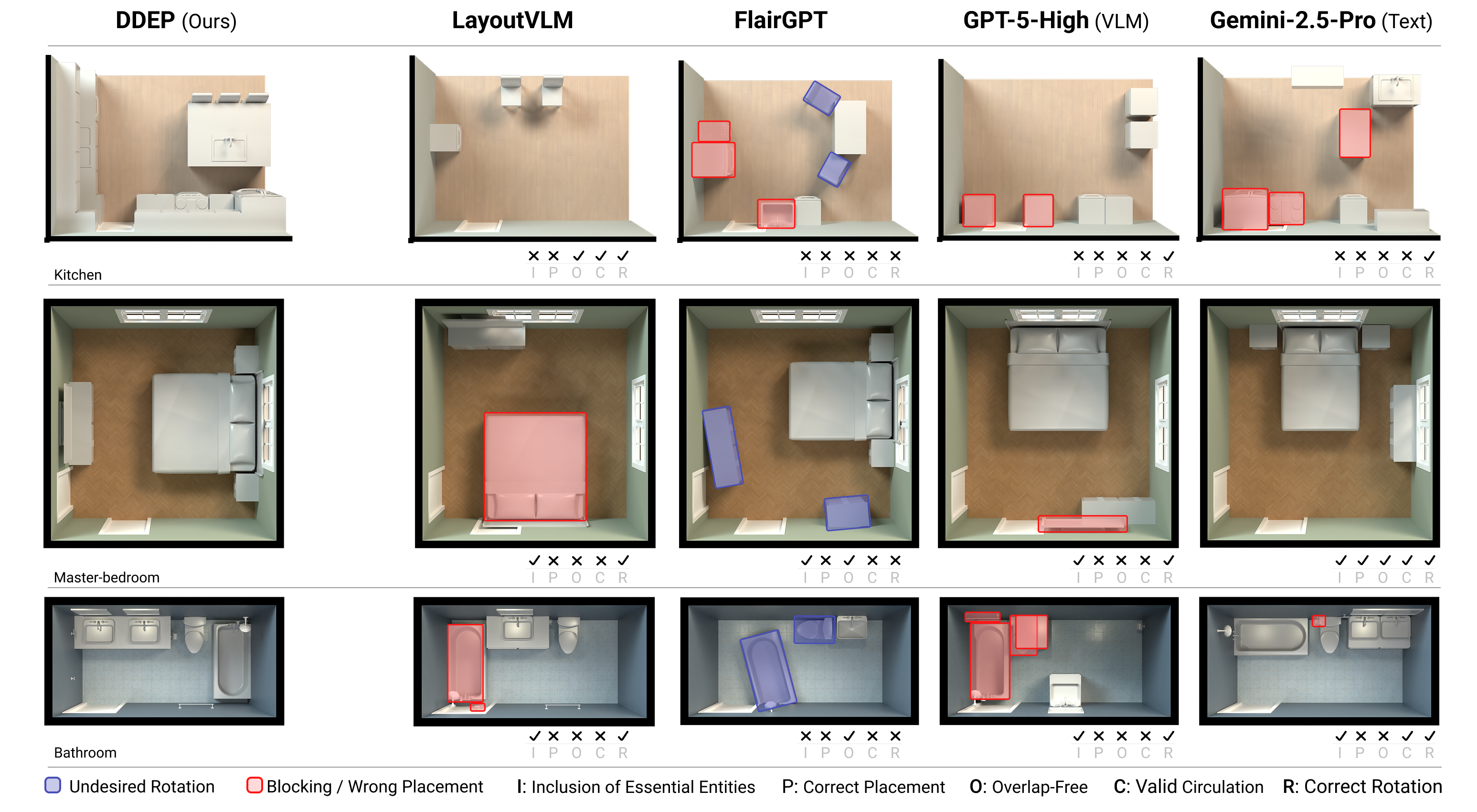

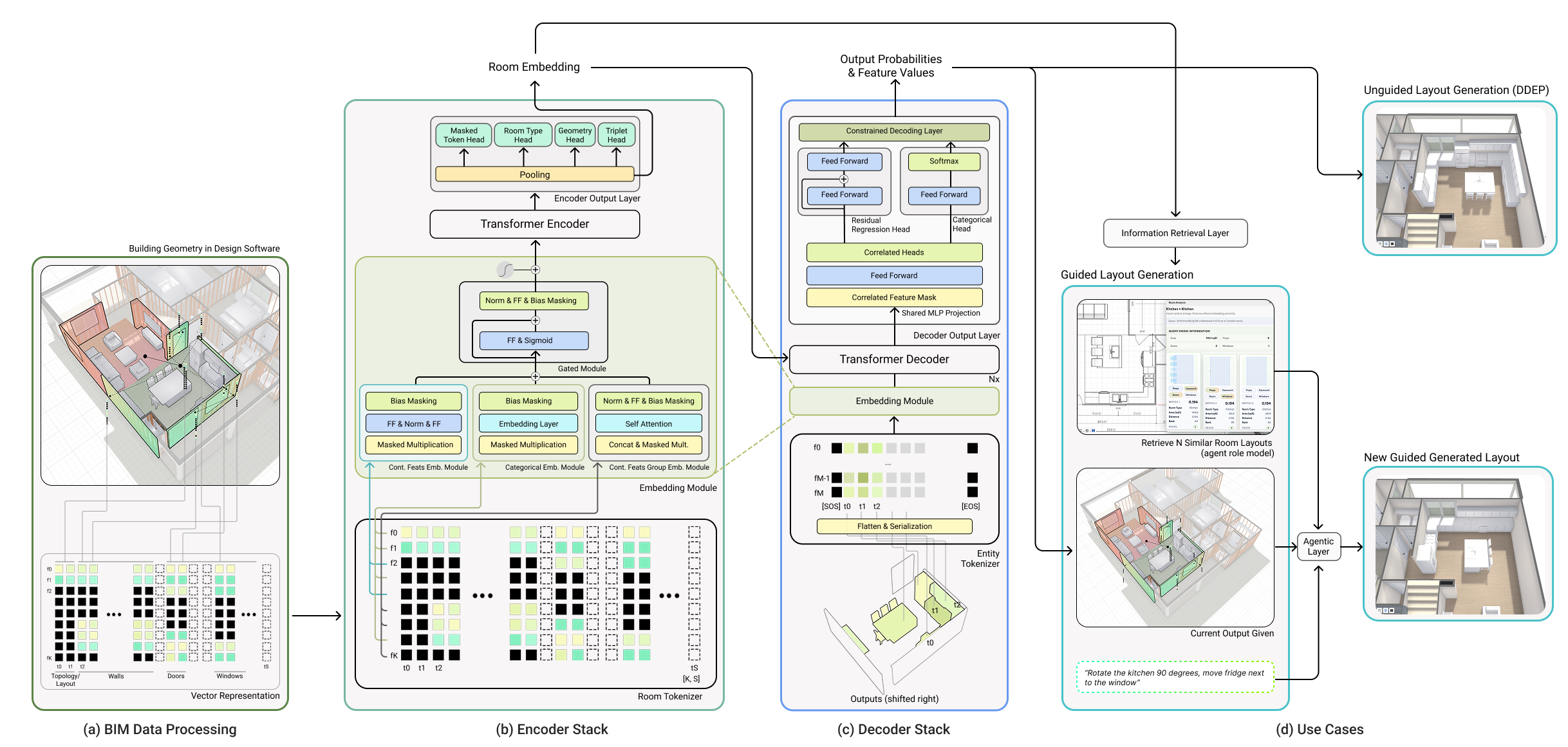

We introduce Small Building Model (SBM), a Transformer-based architecture for layout synthesis in Building Information Modeling (BIM) scenes. We address the question of how to tokenize buildings by unifying heterogeneous feature sets of architectural elements into sequences while preserving compositional structure. Such feature sets are represented as a sparse attribute-feature matrix that captures room properties. We then design a unified embedding module that learns joint representations of categorical and possibly correlated continuous feature groups. Lastly, we train a single Transformer backbone in two modes: an encoder-only pathway that yields high-fidelity room embeddings, and an encoder-decoder pipeline for autoregressive prediction of room entities, referred to as Data-Driven Entity Prediction (DDEP). Experiments across retrieval and generative layout synthesis show that SBM learns compact room embeddings that reliably cluster by type and topology, enabling strong semantic retrieval. In DDEP mode, SBM produces functionally sound layouts, with fewer collisions and boundary violations and improved navigability.
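The abstract does not specify how the unified embedding module fuses the two feature types. As a minimal sketch, assume one lookup table per categorical field and one joint linear projection per continuous feature group, with all contributions summed into a single token vector. The class name `MixedTypeEmbedding`, the summation, and the random initialization are illustrative assumptions, not SBM's implementation. In practice the weights would be learned inside a deep learning framework.

```python
import random


class MixedTypeEmbedding:
    """Hypothetical sketch: fuse categorical fields and continuous feature
    groups into one token vector by summing per-field contributions."""

    def __init__(self, vocab_sizes, group_dims, d_model, seed=0):
        rng = random.Random(seed)  # untrained, randomly initialized weights
        self.d_model = d_model
        # One lookup table (vocab_size x d_model) per categorical field.
        self.tables = [
            [[rng.gauss(0.0, 0.02) for _ in range(d_model)] for _ in range(v)]
            for v in vocab_sizes
        ]
        # One projection (group_dim x d_model) per continuous group, so the
        # correlated values inside a group are mapped jointly.
        self.projections = [
            [[rng.gauss(0.0, 0.02) for _ in range(d_model)] for _ in range(g)]
            for g in group_dims
        ]

    def __call__(self, categorical, continuous_groups):
        token = [0.0] * self.d_model
        # Categorical fields: add the embedding row selected by each index.
        for table, index in zip(self.tables, categorical):
            for j, w in enumerate(table[index]):
                token[j] += w
        # Continuous groups: add x @ W for each group that is present.
        for proj, values in zip(self.projections, continuous_groups):
            if values is None:  # absent group in a sparse row contributes nothing
                continue
            for row, x in zip(proj, values):
                for j, w in enumerate(row):
                    token[j] += w * x
        return token


# Two categorical fields (e.g. entity type, wall id) and two continuous
# groups (e.g. a 3-value position group and a 2-value size group).
emb = MixedTypeEmbedding(vocab_sizes=[5, 3], group_dims=[3, 2], d_model=8)
vec = emb([2, 1], [[0.4, 0.1, 0.9], None])
```

Projecting each continuous group with one matrix, rather than each scalar separately, lets correlated values (for example, a width and a depth) share parameters, and skipping absent groups mirrors the sparsity of the attribute-feature matrix.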

Professional Computer-Aided Design (CAD)/BIM scenes encode rich semantics, hierarchies, and domain constraints, but most strong 3D generators (voxel, mesh, point-cloud, or image-conditioned diffusion models) treat scenes as unstructured geometry [3], yielding visually plausible yet hard-to-edit outputs that frequently violate basic validity rules. Room-scale layout design further demands multiscale reasoning and parametric outputs compatible with downstream BIM tools, reflecting the highly constrained yet repetitive nature of practice, where designers position walls, doors, casework, and circulation to satisfy codes and programs across hundreds of similar rooms [1,2,4,6]. Decades of computational approaches, from CAD macros and parametric families to rule systems, optimization, and learning-based methods, have aimed to automate this process [15], but progress remains bottlenecked not by the algorithms themselves but by the representations they operate on. An effective representation must expose structure, generalize across building types, and maintain BIM-level geometric and semantic coherence, which underscores the need to rethink spatial data encoding as the foundation for scalable layout reasoning.

To this end, we introduce a normalized hierarchical tokenizer that encodes room topology, entity attributes, wall-referenced geometry, and relational structure into compact BIM-Token Bundles. We realize these bundles as rows of a sparse attribute-feature matrix and embed them via a mixed-type module that produces a unified token vector for Transformer models. Building on this representation, SBM provides a Transformer backbone for BIM scenes that supports both encoder-only analysis of room embeddings at scale and encoder-decoder generation through DDEP, which autoregressively places room-level entities with mixed categorical and continuous attributes while preserving the compositional structure of BIM data.
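To make "wall-referenced geometry" and "rows of a sparse attribute-feature matrix" concrete, here is a minimal sketch under several assumptions the text does not state: a rectangular room whose walls are listed counterclockwise, each entity referenced to its nearest wall by a position `t` along the wall and a signed inward offset `d`, lengths normalized by a single room scale, and each row stored as a `{column: value}` dict so that missing attributes take no space. The helpers `wall_referenced` and `to_sparse_row` and the column layout are hypothetical, not the paper's tokenizer.

```python
import math
from dataclasses import dataclass


@dataclass
class Wall:
    """A straight wall segment from (x0, y0) to (x1, y1)."""
    x0: float
    y0: float
    x1: float
    y1: float


def wall_referenced(px, py, wall, room_scale):
    """Express point (px, py) relative to a wall.

    Returns (t, d): t is the position along the wall clamped to [0, 1];
    d is the signed offset from the wall line along its left-hand normal,
    divided by room_scale (positive = inside for counterclockwise walls).
    """
    dx, dy = wall.x1 - wall.x0, wall.y1 - wall.y0
    length = math.hypot(dx, dy)
    ux, uy = dx / length, dy / length  # unit tangent along the wall
    nx, ny = -uy, ux                   # left-hand unit normal
    rx, ry = px - wall.x0, py - wall.y0
    t = (rx * ux + ry * uy) / length
    d = (rx * nx + ry * ny) / room_scale
    return min(max(t, 0.0), 1.0), d


def to_sparse_row(entity, walls, room_scale, columns):
    """Hypothetical tokenizer step: one entity -> {column_index: value}.

    Only attributes the entity actually has are stored, so rows of
    different entity types stay sparse.
    """
    refs = [wall_referenced(entity["x"], entity["y"], w, room_scale) for w in walls]
    # Reference the nearest wall (valid for interior points of a rectangle).
    k = min(range(len(walls)), key=lambda i: abs(refs[i][1]))
    t, d = refs[k]
    row = {
        columns["category"]: entity["category"],  # id from a hypothetical vocabulary
        columns["wall_id"]: k,
        columns["t"]: t,
        columns["d"]: d,
    }
    if "width" in entity:  # optional attribute, only stored when present
        row[columns["width"]] = entity["width"] / room_scale
    return row


walls = [Wall(0, 0, 4, 0), Wall(4, 0, 4, 3), Wall(4, 3, 0, 3), Wall(0, 3, 0, 0)]
columns = {"category": 0, "wall_id": 1, "t": 2, "d": 3, "width": 4}
desk = to_sparse_row({"category": 3, "x": 1.0, "y": 0.5, "width": 1.2}, walls, 4.0, columns)
```

For example, in the 4 × 3 room above with scale 4, a desk at (1.0, 0.5) is referenced to wall 0 with `t = 0.25` and `d = 0.125`, while an entity without a width simply has no entry in column 4.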

In summary, we contribute (1) a normalized hierarchical tokenization of BIM scenes with a mixed-type embedding module, (2) a unified Transformer backbone (SBM) that supports both retrieval and generative layout synthesis through encoder-only and encoder-decoder (DDEP) modes, and (3) a comprehensive evaluation on a professional BIM dataset demonstrating consistently higher scene coherence, geometric validity, and constraint satisfaction than recent LLM/VLM and domain-specific baselines.

Rule-based and heuristic scene and layout generation. Early work on automatic furniture layout relied on explicit design rules and expert heuristics encoded as constraints. Merrell et al. [14] incorporated interior design guidelines into a cost function and generated layout suggestions by sampling from it. Similarly, Song et al. [20] divided layouts into a few usage modes and applied mode-specific optimization strategies such as recursive subdivision and floor-field methods. Beyond these deterministic rule systems, some approaches convert design guidelines into objective terms and use search-based optimization, such as genetic algorithms, to explore a wider solution space while still operating on the same rule set [9].
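The cost-function-plus-sampling pattern attributed to Merrell et al. [14] can be illustrated with a toy example. This is a minimal sketch, not their cost function or sampler: it assumes circular items in a rectangular room, two invented guideline terms (items must not overlap, items should sit against a wall), and a plain Metropolis sampler targeting p(layout) ∝ exp(-cost / T) that moves one item at a time and keeps the lowest-cost sample as the suggestion. The names `layout_cost` and `sample_layout` and all weights are hypothetical.

```python
import math
import random


def layout_cost(positions, width, depth, radius, w_overlap=10.0, w_wall=1.0):
    """Toy guideline cost for circular items in a width x depth room."""
    cost = 0.0
    for i, (xi, yi) in enumerate(positions):
        # Guideline 1: keep items against a wall (gap beyond the item radius).
        gap = min(xi, width - xi, yi, depth - yi) - radius
        cost += w_wall * max(gap, 0.0)
        # Guideline 2: items must not overlap.
        for xj, yj in positions[i + 1:]:
            overlap = 2 * radius - math.hypot(xi - xj, yi - yj)
            cost += w_overlap * max(overlap, 0.0)
    return cost


def sample_layout(n, width, depth, radius, steps=5000, temperature=0.05, step=0.2, seed=0):
    """Metropolis sampling of p(layout) ∝ exp(-cost / T); returns the best sample."""
    rng = random.Random(seed)

    def inside(x, y):
        return radius <= x <= width - radius and radius <= y <= depth - radius

    pos = [(rng.uniform(radius, width - radius), rng.uniform(radius, depth - radius))
           for _ in range(n)]
    cost = layout_cost(pos, width, depth, radius)
    best, best_cost = list(pos), cost
    for _ in range(steps):
        k = rng.randrange(n)  # move one item at a time
        x = pos[k][0] + rng.gauss(0.0, step)
        y = pos[k][1] + rng.gauss(0.0, step)
        if not inside(x, y):  # zero target density outside the room: reject
            continue
        proposal = pos[:k] + [(x, y)] + pos[k + 1:]
        new_cost = layout_cost(proposal, width, depth, radius)
        # Always accept downhill moves; accept uphill ones with prob exp(-(new - old) / T).
        if new_cost <= cost or rng.random() < math.exp((cost - new_cost) / temperature):
            pos, cost = proposal, new_cost
            if cost < best_cost:
                best, best_cost = list(pos), cost
    return best, best_cost


layout, score = sample_layout(n=3, width=4.0, depth=3.0, radius=0.4)
```

Proposals that leave the room are rejected rather than clamped, which keeps the Gaussian proposal symmetric so the plain Metropolis acceptance rule remains valid. A lower `temperature` concentrates samples near low-cost layouts; a higher one explores more of the space.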

Deep learning-based scene and layout generation. Graph-based generative models represent layouts as scene graphs and synthesize object arrangements by predicting node attributes and relational edges [7,27]. Image- and feature-based neural models, ranging from GANs and transformers [13,24] to hybrid vectorized systems like HouseDiffusion [19], learn strong statistical priors for furniture placement yet still depend heavily on training distributions and often require post-hoc refinement to satisfy geometric and functional constraints. Diffusion-based models further improve controllability and semantic consistency via conditional GAN refinement [16], mixed discrete-continuous diffusion for 3D scenes [8], room-mask-conditioned semantic diffusion [23], multi-view RGB-D generation [17], and text-to-layout diffusion Transformers [18,21].

Language-based models have recently been applied to spatial layout generation by representing scenes as token sequences. LayoutGPT [5] frames layout synthesis as compositional planning, generating object-relation tokens autoregressively, though it remains reliant on prompting and lacks continuous geometric grounding.
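The tension between token sequences and continuous geometry can be seen in a small round-trip example. The sketch below assumes a generic serialization (a category token followed by quantized x and y bins, wrapped in `<scene>` markers); it is not LayoutGPT's actual format, and the function names are hypothetical. Decoding recovers each coordinate only up to half a bin width, one illustration of what discretizing geometry into tokens gives up.

```python
def quantize(value, lo, hi, bins):
    """Map a continuous value in [lo, hi] to an integer bin in [0, bins - 1]."""
    idx = int((value - lo) / (hi - lo) * bins)
    return min(max(idx, 0), bins - 1)


def dequantize(idx, lo, hi, bins):
    """Decode a bin index to the center of its bin."""
    return lo + (idx + 0.5) * (hi - lo) / bins


def serialize(objects, room_w, room_d, bins=64):
    """Flatten objects into tokens: <category> x<bin> y<bin> per object."""
    tokens = ["<scene>"]
    for obj in objects:
        tokens += [f"<{obj['category']}>",
                   f"x{quantize(obj['x'], 0.0, room_w, bins)}",
                   f"y{quantize(obj['y'], 0.0, room_d, bins)}"]
    tokens.append("</scene>")
    return tokens


def deserialize(tokens, room_w, room_d, bins=64):
    """Inverse of serialize, up to quantization error."""
    body = tokens[1:-1]  # strip the <scene> ... </scene> markers
    objects = []
    for i in range(0, len(body), 3):
        cat, xt, yt = body[i:i + 3]
        objects.append({
            "category": cat[1:-1],
            "x": dequantize(int(xt[1:]), 0.0, room_w, bins),
            "y": dequantize(int(yt[1:]), 0.0, room_d, bins),
        })
    return objects


scene = [{"category": "desk", "x": 1.0, "y": 0.5}, {"category": "chair", "x": 1.2, "y": 1.1}]
tokens = serialize(scene, 4.0, 3.0)
decoded = deserialize(tokens, 4.0, 3.0)
```

For a 4 × 3 room with 64 bins, the desk at (1.0, 0.5) serializes to `x16` and `y10` and decodes to (1.03125, 0.4921875).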

Vision-language approaches such as LayoutVLM [22] embed spatial cues jointly with textual descriptions to optimize 3D layouts, yet their coupling of vision features and natural language does not yield a normalized geometric token space.

Other systems use LLMs for guided refinement: House-Tune [29] proposes and linguistically adjusts floorplan candidates, while FlairGPT [12] aligns language tokens with furniture and style attributes for interior design exploration. Multi-modal models like LLM4CAD [10] tokenize CAD elements symbolically to support reasoning but operate at the object level without encoding continuous spatial attributes or wall-referenced coordinates. Recently, FloorPlan-De
