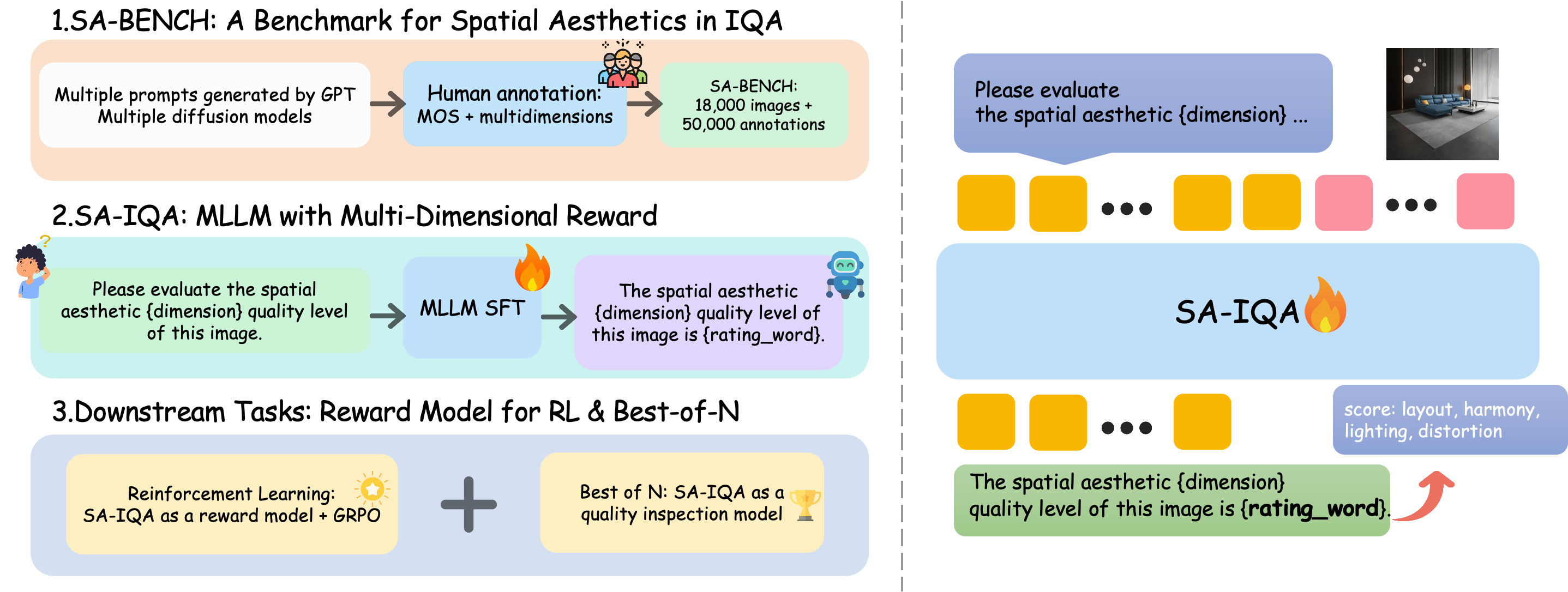

In recent years, Image Quality Assessment (IQA) for AI-generated images (AIGI) has advanced rapidly; however, existing methods primarily target portraits and artistic images and lack a systematic evaluation of interior scenes. We introduce Spatial Aesthetics, a paradigm that assesses the aesthetic quality of interior images along four dimensions: layout, harmony, lighting, and distortion. We construct SA-BENCH, the first benchmark for spatial aesthetics, comprising 18,000 images and 50,000 precise annotations. Using SA-BENCH, we systematically evaluate current IQA methods and develop SA-IQA, a comprehensive reward framework for assessing spatial aesthetics built through MLLM fine-tuning and a multidimensional fusion approach. We apply SA-IQA to two downstream tasks: (1) as a reward signal for GRPO reinforcement learning to optimize the AIGC generation pipeline, and (2) for Best-of-N selection to filter high-quality images and improve generation quality. Experiments show that SA-IQA significantly outperforms existing methods on SA-BENCH, setting a new standard for spatial aesthetics evaluation. Code and dataset will be open-sourced to advance research and applications in this domain.

The growth of AI-generated content (AIGC) has made Image Quality Assessment (IQA) increasingly important. IQA plays two main roles: filtering low-quality generated content, and serving as a human-preference alignment signal for post-training generative models [30]. Accordingly, the scope of IQA has evolved from traditional image quality factors such as blur or noise [34] to today's complex human preferences, such as image aesthetics [7], human anatomy [16], text-image alignment [38], and, most recently, instruction following in image editing [6].

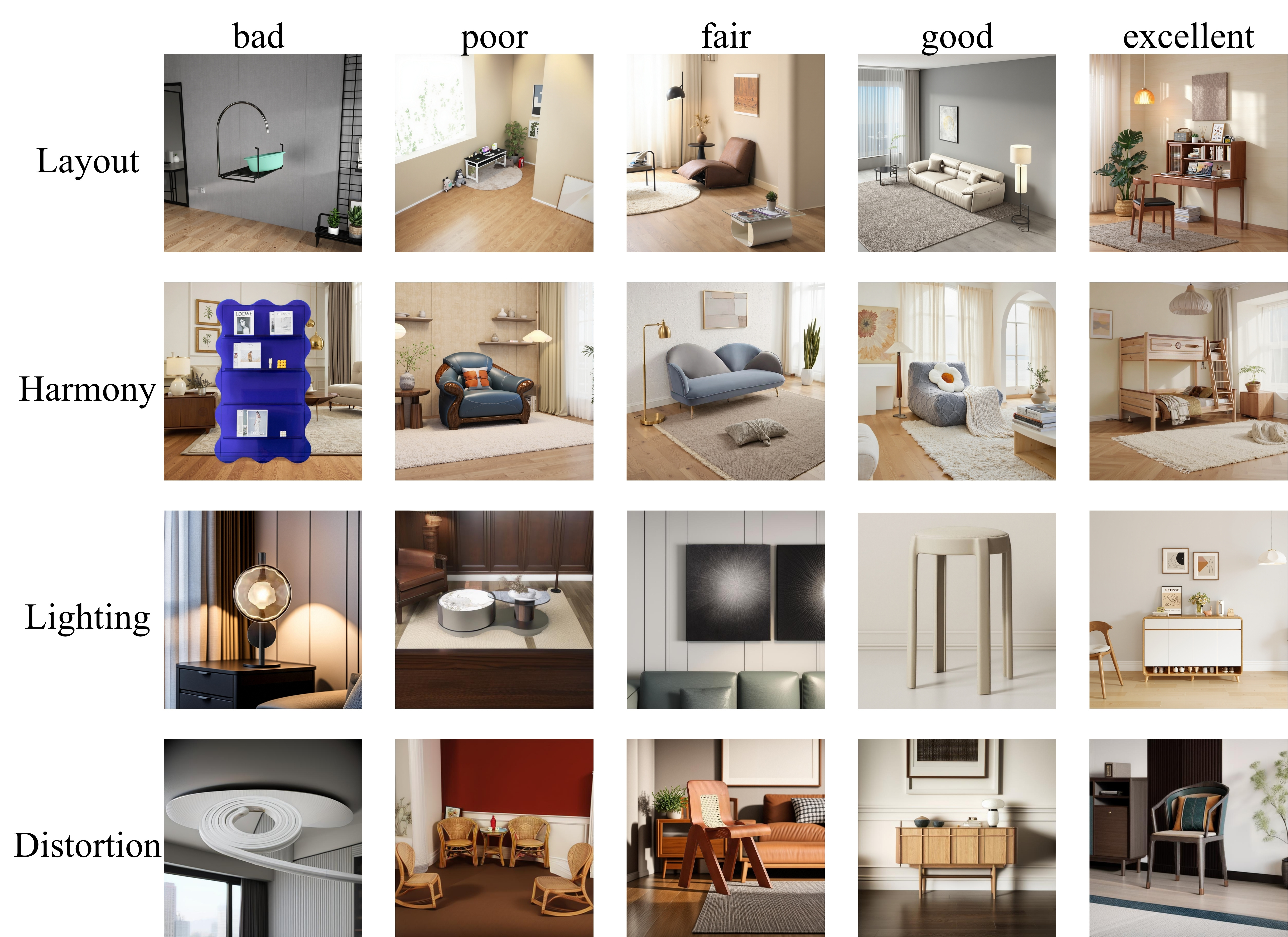

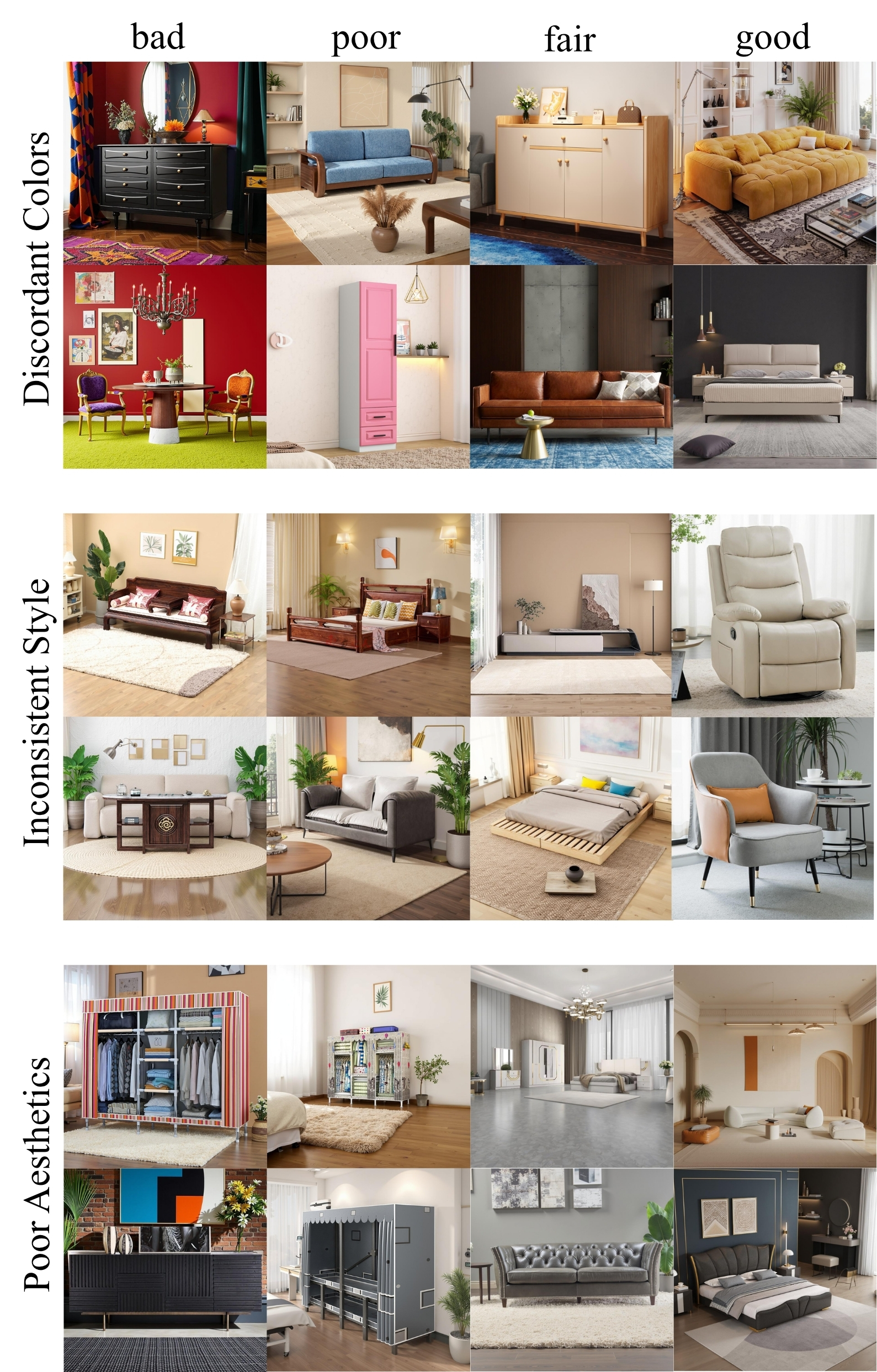

Along with this shift in research focus, IQA methods have also evolved from early pre-trained models such as CLIP [23] to Multimodal Large Language Models (MLLMs) such as Q-Align [27], which leverage the capabilities of large pre-trained models to perform finer-grained, human-aligned evaluations. AI is being rapidly and widely adopted in applications such as interior design and furniture e-commerce. However, existing IQA methods are often trained on general-purpose datasets [10,14] that reflect mainstream aesthetic preferences. While specialized benchmarks exist for domains such as human figures [16] and artistic style [36], there is currently no dataset or corresponding IQA method dedicated to the spatial aesthetics of interior design. Evaluating “Spatial Aesthetics” is highly complex, as it requires jointly assessing multiple factors: the layout of objects within the space, the harmony of style and color, the consistency and coordination of lighting, and the presence of AI-induced environmental distortions or artifacts.
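To make the MLLM-based scoring idea concrete, the sketch below shows one way a closed set of rating words can be turned into a continuous score: take the model's logits for five level tokens, softmax them, and compute the expected level. This follows the general Q-Align-style recipe as we understand it; the level words, their 1 to 5 weights, and the `level_logits` input format are assumptions for illustration, not SA-IQA's documented interface.

```python
import math

# Assumed rating vocabulary and weights (Q-Align-style); the MLLM is prompted
# to answer with exactly one of these level words.
LEVELS = {"excellent": 5, "good": 4, "fair": 3, "poor": 2, "bad": 1}


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def level_logits_to_score(level_logits):
    """Convert logits of the rating-level tokens into a scalar score in [1, 5].

    level_logits: hypothetical dict mapping each level word to the model's
    logit for that token; how these logits are extracted depends on the MLLM.
    """
    words = list(LEVELS)
    probs = softmax([level_logits[w] for w in words])
    # Expected level under the distribution restricted to the five level tokens.
    return sum(p * LEVELS[w] for p, w in zip(probs, words))


if __name__ == "__main__":
    logits = {"excellent": 2.0, "good": 1.0, "fair": 0.0, "poor": -1.0, "bad": -2.0}
    print(round(level_logits_to_score(logits), 3))
```

Using the expected level rather than the single most likely word keeps the score continuous, which matters when the score is later used as a reward or correlated with human ratings.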

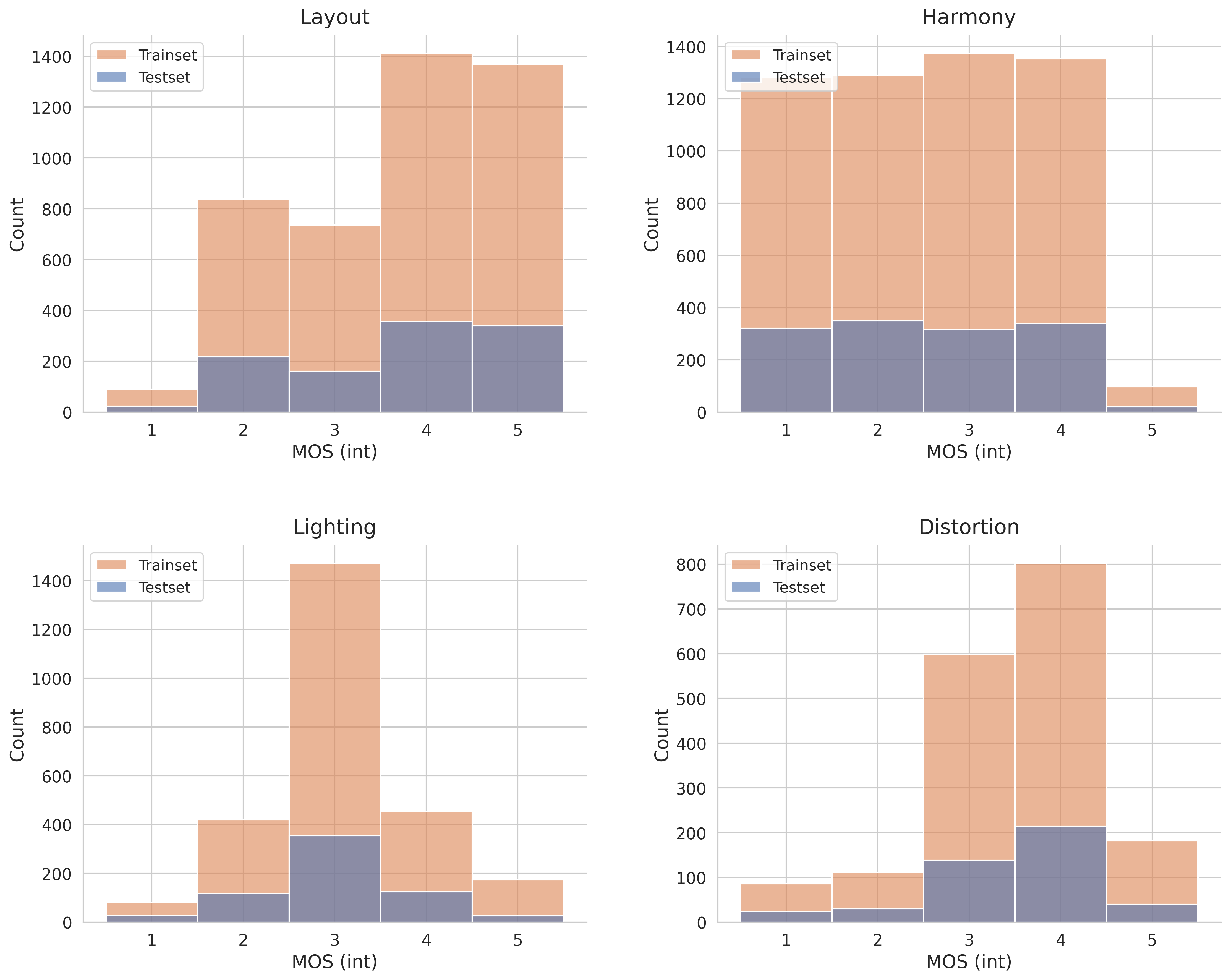

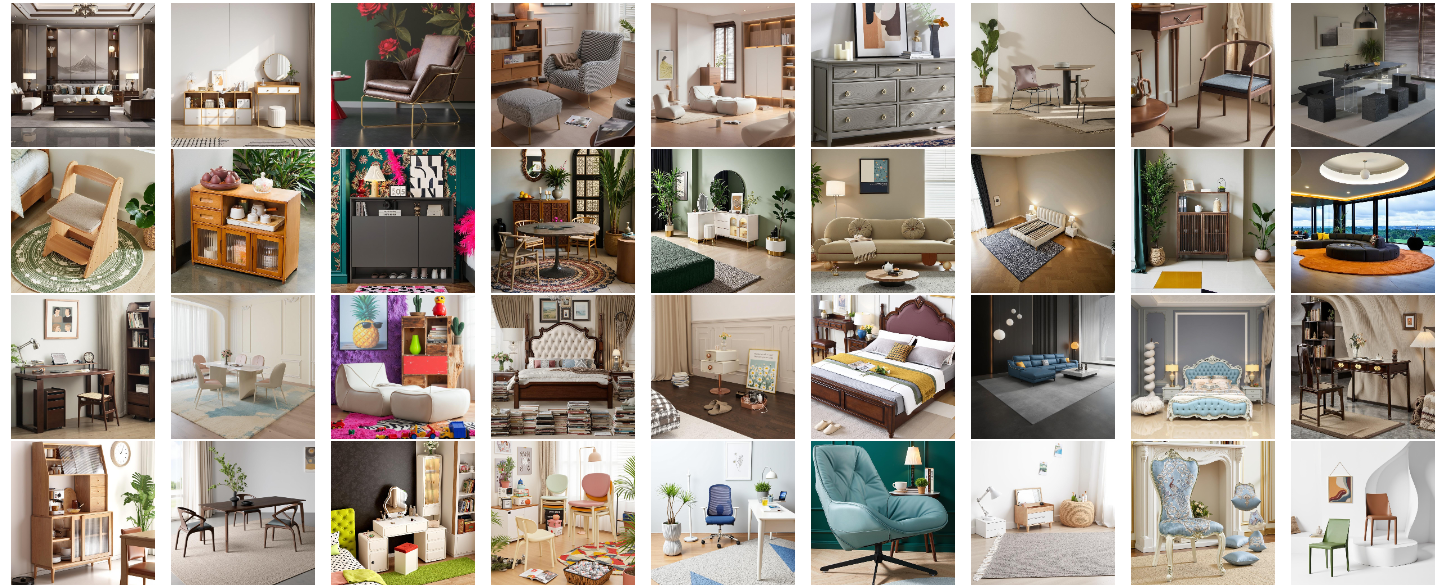

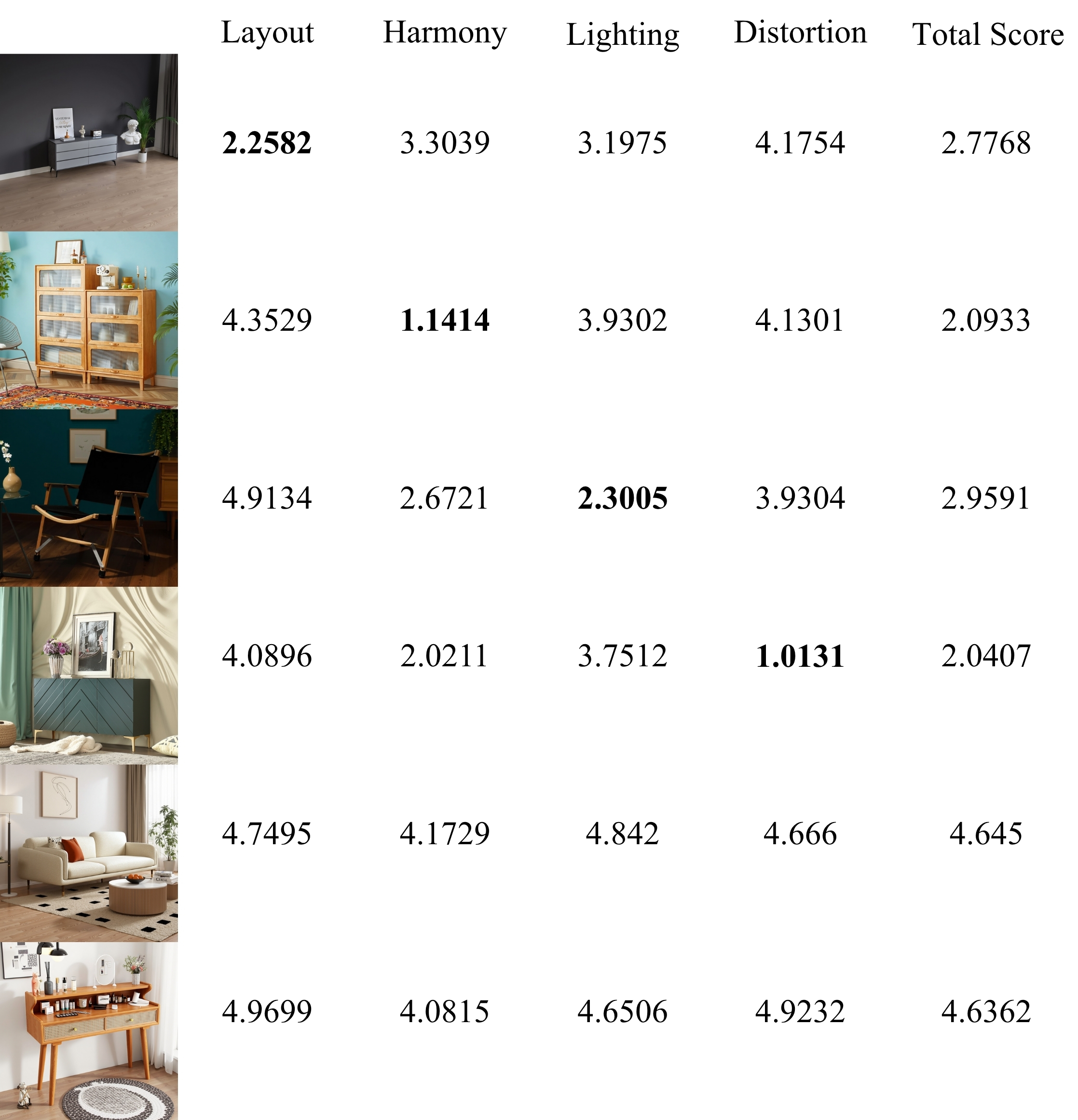

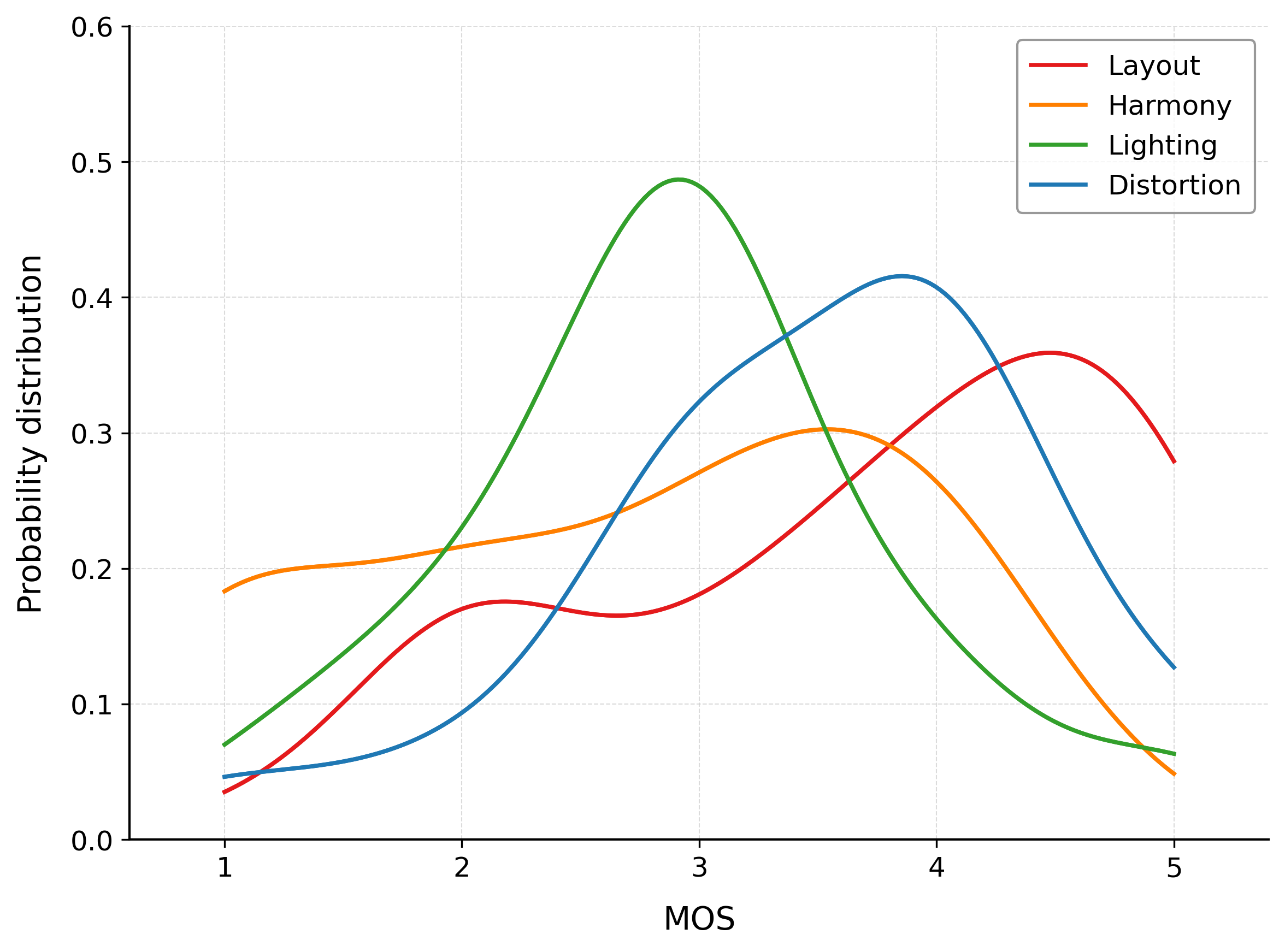

To comprehensively address the application of IQA to spatial aesthetics, and to evaluate and improve the quality of AI-generated interior images, we first construct a high-quality dataset with multi-dimensional human annotations and then train an IQA model on it. Specifically, our contributions are:

- SA-BENCH: We define the Spatial Aesthetics paradigm along four dimensions (layout, harmony, lighting, and distortion) and construct the first benchmark for it, comprising 18,000 interior images with 50,000 precise human annotations. A portion of the dataset is visualized in Figure 1.
- SA-IQA: We introduce a Spatial Aesthetics IQA model that outputs calibrated, multi-dimensional rewards (layout, harmony, lighting, distortion). Built via MLLM fine-tuning with expert-aware instructions and a multidimensional fusion optimization, SA-IQA attains state-of-the-art PLCC/SROCC on SA-BENCH.
- Downstream Applications: We validate the effectiveness of SA-IQA in two representative downstream tasks: first, we use SA-IQA as a reward signal in conjunction with GRPO to optimize a prompt expansion module for image generation; second, we apply it in Best-of-N selection to filter low-quality images, significantly improving generation quality.

Figure 1. Visualizing the SA-BENCH dataset. From top to bottom, each row shows results from a different model in the dataset: SD1.5-Inpaint [20] (first row), SDXL-BrushNet [8] (second row), FLUX-Inpaint [11] (third row), and FLUX EasyControl [41] (fourth row).
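Both downstream uses in the contributions list need a single number per image, while SA-IQA reports four dimension scores. The sketch below illustrates Best-of-N selection on top of a simple fusion rule. The weighted mean, the equal default weights, and the convention that a higher `distortion` score means fewer artifacts are all assumptions made for illustration; the paper's multidimensional fusion may differ.

```python
from dataclasses import dataclass

# The four Spatial Aesthetics dimensions named in the text.
DIMENSIONS = ("layout", "harmony", "lighting", "distortion")


@dataclass
class Candidate:
    image_id: str
    scores: dict  # dimension name -> score from a (hypothetical) per-dimension scorer


def fuse(scores, weights=None):
    """Fuse per-dimension scores into one reward via a weighted mean.

    Hypothetical fusion rule, not SA-IQA's published one. Assumes every
    dimension is oriented so that higher is better (including 'distortion').
    """
    weights = weights or {d: 1.0 for d in DIMENSIONS}
    total_w = sum(weights[d] for d in DIMENSIONS)
    return sum(weights[d] * scores[d] for d in DIMENSIONS) / total_w


def best_of_n(candidates, weights=None):
    """Return the candidate with the highest fused reward (Best-of-N selection)."""
    return max(candidates, key=lambda c: fuse(c.scores, weights))


if __name__ == "__main__":
    cands = [
        Candidate("a", {"layout": 3.0, "harmony": 4.0, "lighting": 3.5, "distortion": 2.0}),
        Candidate("b", {"layout": 4.0, "harmony": 3.5, "lighting": 4.0, "distortion": 4.5}),
    ]
    print(best_of_n(cands).image_id)  # b (fused 4.0 vs 3.125)
```

Exposing the weights makes it easy to, for example, penalize distortion more heavily in a filtering setting without retraining the scorer.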

The field of text-to-image (T2I) generation has grown rapidly. Open-source models such as Stable Diffusion (SD) [20], SDXL [18], and SD3 [4] continue to improve image realism. Commercial models such as Midjourney and Seedream 4.0 [21] can already generate highly realistic images. The training pipeline for these models often includes pre-training, fine-tuning, and reinforcement learning (RL) stages to align with human preferences, which requires collecting large-scale preference data.
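In the RL stage, SA-IQA is paired with GRPO. The core step of GRPO is a group-relative advantage: several samples are drawn for the same input, each is scored, and each reward is normalized by the group's mean and standard deviation, so no learned value function is needed. The minimal sketch below shows only that normalization; the choice of population standard deviation and the `eps` value are assumptions (some implementations use the sample standard deviation), and the policy-gradient update itself is omitted.

```python
import statistics


def group_relative_advantages(rewards, eps=1e-6):
    """GRPO-style advantages: (r_i - mean(r)) / (std(r) + eps) within one group.

    rewards: scalar rewards for G samples drawn for the same input, e.g. G
    prompt expansions of one user prompt, each rendered and scored by an IQA model.
    """
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std; an assumption, see lead-in
    return [(r - mean) / (std + eps) for r in rewards]


if __name__ == "__main__":
    print(group_relative_advantages([3.0, 4.0, 5.0]))
```

Samples that score above their group average receive a positive advantage and are reinforced; when every sample in a group gets the same reward, all advantages are zero and that group contributes no learning signal.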

Although some open-source aesthetic preference datasets exist, such as ImageReward [31] and HPSv2 [28], their data often reflect general preferences, which can lead to a lack of precision in specific domains such as interior design. To address this, some methods collect domain-specific preference data. For instance, flux-krea [12] collected its data in a “very opinionated manner” to match a specific aesthetic taste and a “clear art direction.” Similarly, other methods have used preference data focused on anatomical distortions to improve how models generate human anatomy [16].
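Pairwise preference data of this kind is commonly turned into a reward-model objective with the Bradley-Terry model: the probability that the preferred image wins is the sigmoid of the reward margin, and training minimizes its negative log-likelihood. The sketch below is a generic illustration of that loss on precomputed scalar rewards; it is not claimed to be the exact objective of any dataset or method cited above.

```python
import math


def bradley_terry_loss(reward_preferred, reward_rejected):
    """-log(sigmoid(r_w - r_l)), i.e. softplus(-(r_w - r_l)), computed stably."""
    margin = reward_preferred - reward_rejected
    # Two algebraically equal forms of softplus(-margin); each avoids exp overflow
    # in the range where it is used.
    if margin > 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


def mean_pairwise_loss(pairs):
    """Average loss over (reward_preferred, reward_rejected) pairs."""
    return sum(bradley_terry_loss(w, l) for w, l in pairs) / len(pairs)


if __name__ == "__main__":
    print(mean_pairwise_loss([(2.0, 1.0), (0.5, 1.5)]))
```

The loss equals log 2 when the model cannot distinguish the pair and approaches zero as the preferred image's reward pulls ahead, so minimizing it pushes the scorer to rank images the way annotators did.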

Early IQA benchmarks for AIGI began to define the key evaluation factors. For example, AGIQA-3K [13] and AIGCIQA2023 [24] were among the first to use multidimensional assessments, moving beyond a single quality score to measure factors such as perceptual quality, authenticity, and text-to-image correspondence. This approach was later scaled up by AIGIQA-20K [14].
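Benchmarks like these, and SA-BENCH, judge an IQA model by how well its predictions correlate with human mean opinion scores, typically via PLCC (linear agreement) and SROCC (rank agreement). The dependency-free sketch below computes both. It omits the nonlinear logistic mapping that many IQA papers fit before computing PLCC, and it assumes inputs are non-constant lists of equal length.

```python
import math


def pearson(x, y):
    """Pearson linear correlation coefficient (PLCC)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def average_ranks(values):
    """1-based ranks, with tied values sharing the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman rank-order correlation (SROCC): Pearson on average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


if __name__ == "__main__":
    predicted = [3.1, 4.2, 2.5, 4.8]  # hypothetical model scores
    mos = [3.0, 4.0, 2.0, 4.5]        # hypothetical human mean opinion scores
    print(pearson(predicted, mos), spearman(predicted, mos))
```

SROCC only rewards getting the ordering right, whereas PLCC also rewards predictions that scale linearly with the human scores; reporting both, per dimension, separates ranking ability from calibration.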

However, these benchmarks remain general-purpose and are limited when applied to specific, structurally complex domains. A key development in the field is therefore the creation of domain-specific IQA. A clear example is AGHI-QA [16], which argues that general-purpose models “fail to deliver fine-graine
