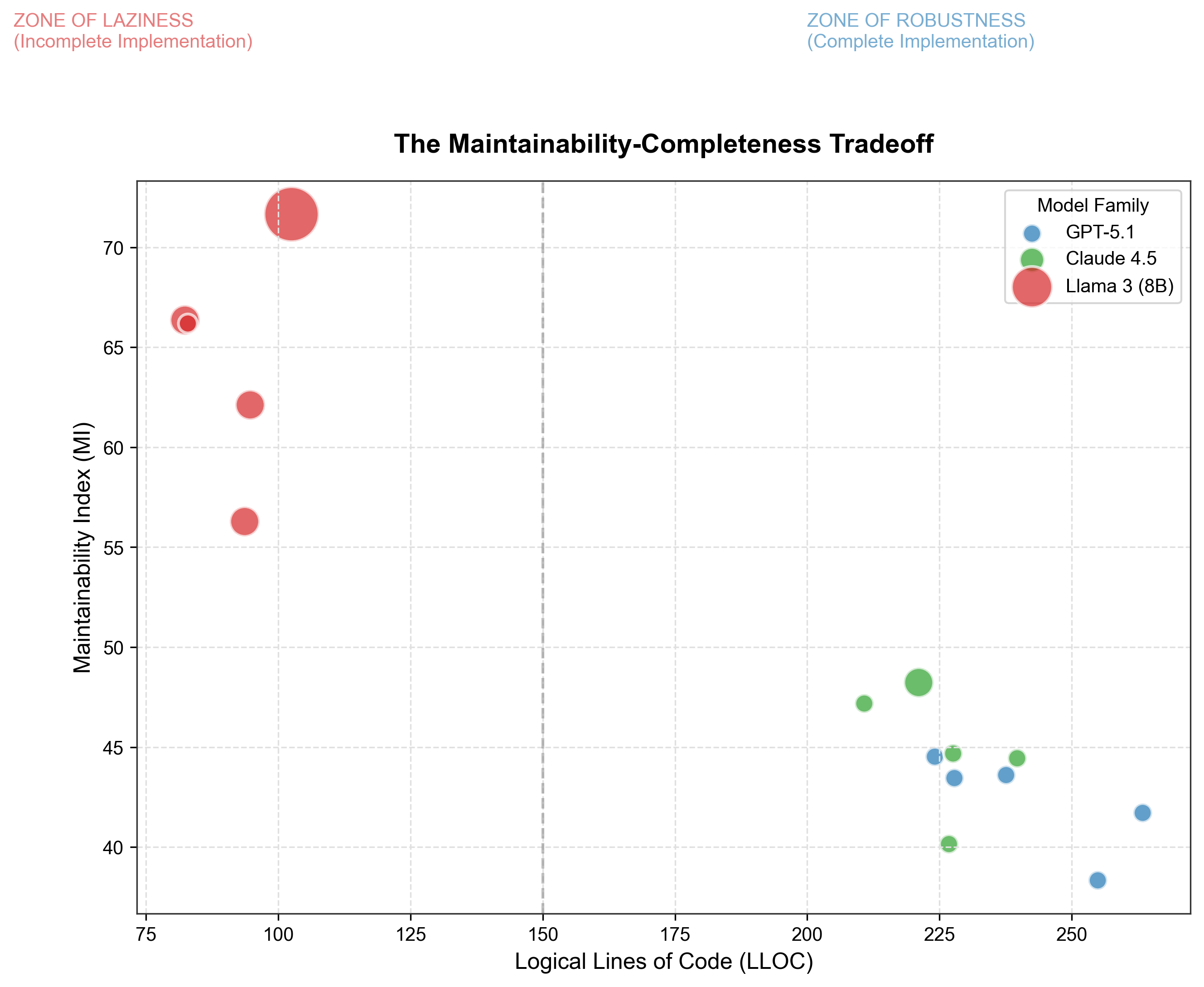

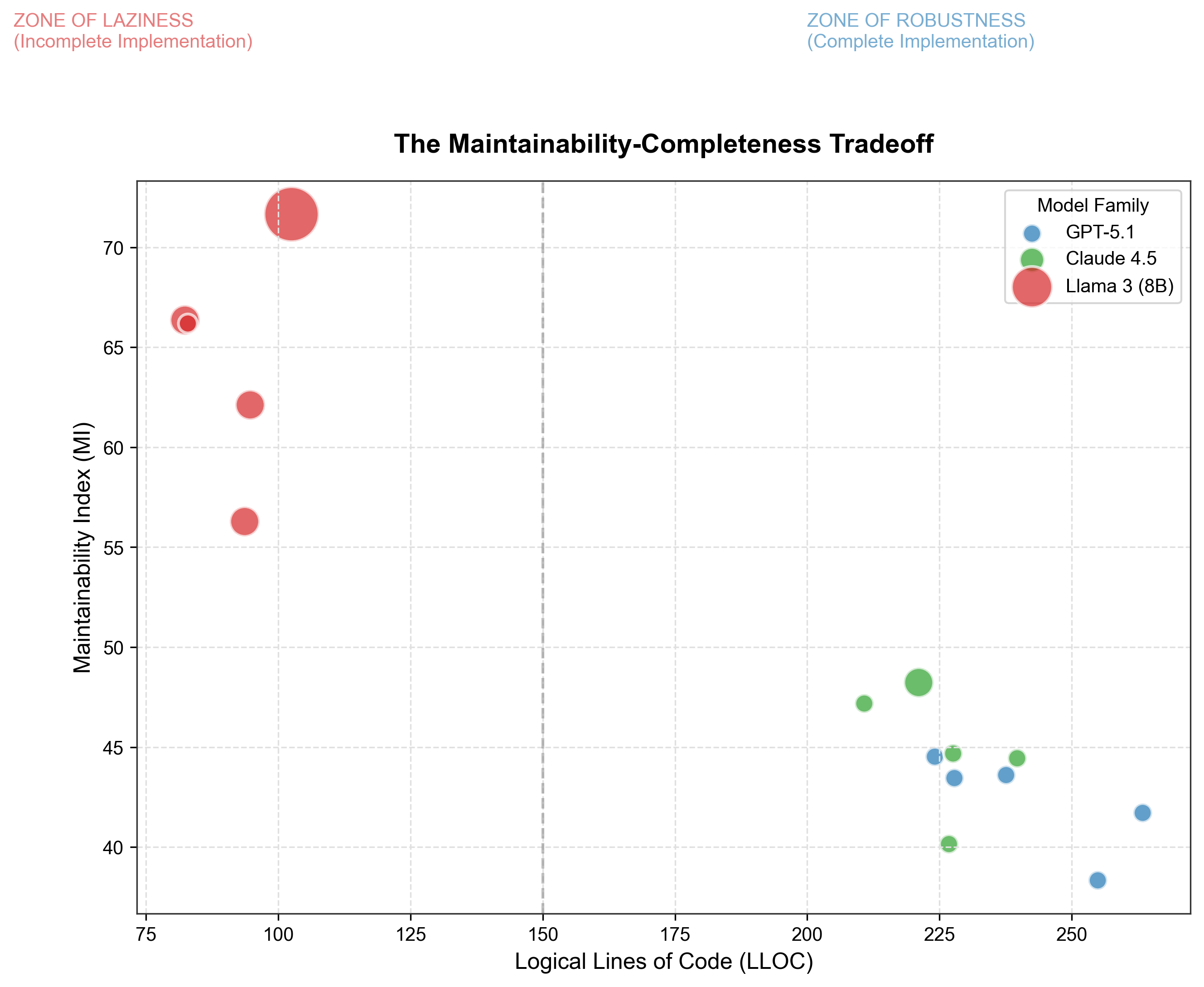

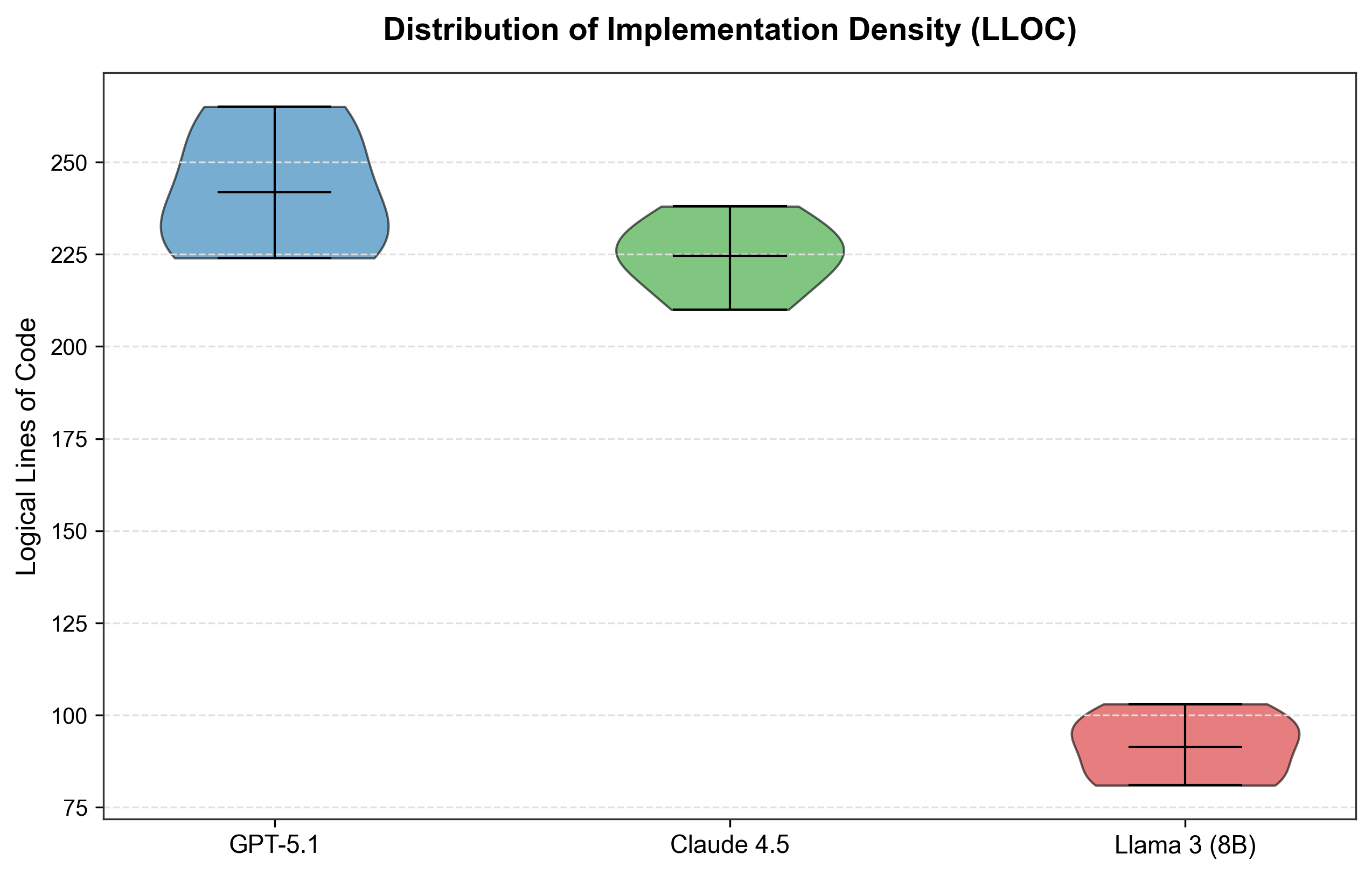

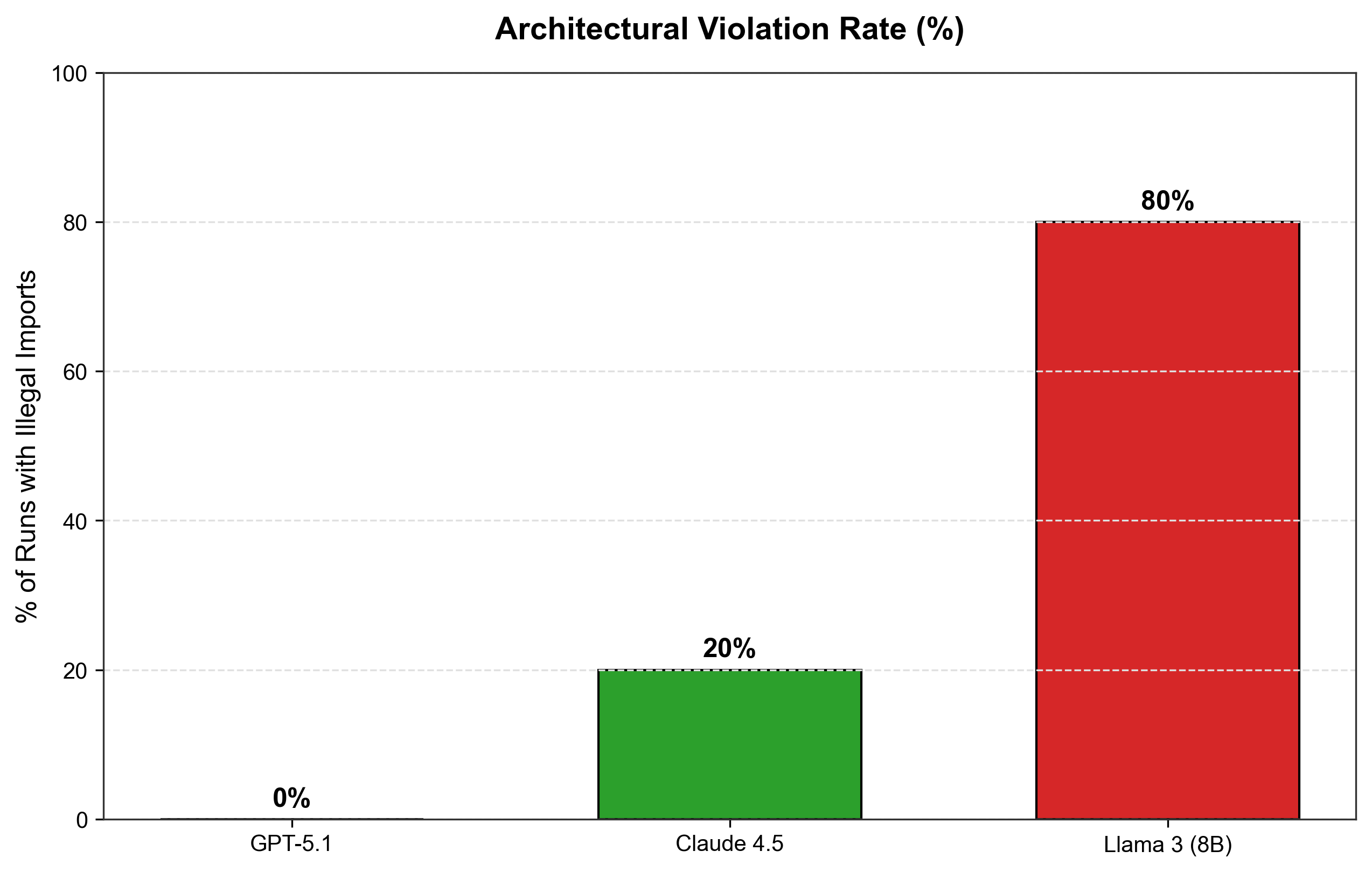

As Large Language Models (LLMs) transition from code completion tools to autonomous system architects, their impact on long-term software maintainability remains unquantified. While existing research benchmarks functional correctness (pass@k), this study presents the first empirical framework to measure "Architectural Erosion" and the accumulation of Technical Debt in AI-synthesized microservices. We conducted a comparative pilot study of three state-of-the-art models (GPT-5.1, Claude 4.5 Sonnet, and Llama 3 8B), prompting each to implement a standardized Book Lending Microservice under strict Hexagonal Architecture constraints. Using Abstract Syntax Tree (AST) parsing, we find that proprietary models achieve high architectural conformance (a 0% violation rate for GPT-5.1), whereas the open-weights model diverges sharply. Specifically, Llama 3 8B showed an 80% Architectural Violation Rate, frequently bypassing interface adapters to create illegal circular dependencies between the Domain and Infrastructure layers. Furthermore, we identified a phenomenon we call "Implementation Laziness," in which the open-weights model generated 60% fewer Logical Lines of Code (LLOC) than its proprietary counterparts, effectively omitting complex business logic to satisfy token constraints. These findings suggest that without automated architectural linting, using smaller open-weights models for system scaffolding accelerates the accumulation of structural technical debt.

Software architecture dictates the long-term maintainability of systems. As AI tools shift from "Copilots" (writing single functions) to "Autodev" agents (scaffolding entire repositories), the risk of automated technical debt increases significantly [1]. A model may generate code that runs correctly but violates fundamental separation of concerns, creating a "Big Ball of Mud" that is expensive to refactor [2].

While recent benchmarks such as HumanEval and MBPP focus on functional correctness [3], few studies analyze the structural integrity of the generated code. Code that solves a LeetCode problem does not necessarily belong in a production microservice.

This paper investigates the “Silent Accumulation” of architectural violations in LLM-generated code. We define a new metric, Hallucinated Coupling, which captures cases where an LLM correctly implements a class but imports dependencies that violate the inversion of control principle. We benchmark recent proprietary models (GPT-5.1 and Claude 4.5 Sonnet) against an open-weights baseline (Llama 3) [4] to quantify this risk. Table 1 summarizes the aggregate statistics and failure rates observed across all models.

Email address: tslater8@gatech.edu (Tyler Slater)

To contextualize the architectural violations identified in this study, we briefly review the structural patterns used as the “Ground Truth” for our evaluation.

Proposed by Cockburn [5], Hexagonal Architecture aims to create loosely coupled application components that can be easily connected to their software environment by means of ports and adapters.

The core principle is the strict isolation of the Domain Layer (Business Logic) from the Infrastructure Layer (Database, UI, External APIs).

- The Rule of Dependency: Source code dependencies can only point inward. Nothing in an inner circle (Domain) can know anything about something in an outer circle (Infrastructure) [6].
- The Violation: A violation occurs when a Domain Entity imports a concrete Infrastructure module (for example, sqlite3), creating a tight coupling that prevents the domain from being tested in isolation.
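Because the Rule of Dependency is stated in terms of imports, it can be checked mechanically by parsing each domain module. The sketch below is a minimal illustration using Python's standard `ast` module, not the study's actual checker; the denylist `FORBIDDEN_IN_DOMAIN` and the function `find_dependency_violations` are hypothetical names chosen for this example.

```python
import ast

# Hypothetical denylist: infrastructure packages that domain modules must not import.
FORBIDDEN_IN_DOMAIN = frozenset({"sqlite3", "redis", "requests", "sqlalchemy", "flask"})


def find_dependency_violations(
    source: str, forbidden: frozenset[str] = FORBIDDEN_IN_DOMAIN
) -> list[tuple[int, str]]:
    """Return (line, module) pairs for imports that break the Rule of Dependency."""
    violations = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            names = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            # Relative imports (level > 0) stay inside the package, so they are skipped.
            names = [node.module]
        else:
            continue
        for name in names:
            # Match on the top-level package so "sqlalchemy.orm" counts as "sqlalchemy".
            if name.split(".")[0] in forbidden:
                violations.append((node.lineno, name))
    return sorted(violations)


domain_module = '''
import sqlite3
from dataclasses import dataclass
from sqlalchemy.orm import Session


@dataclass
class Book:
    isbn: str
'''
print(find_dependency_violations(domain_module))  # [(2, 'sqlite3'), (4, 'sqlalchemy.orm')]
```

Because `ast.walk` visits every node, imports deferred inside function bodies are flagged as well, which matters when a model hides an infrastructure import inside a method.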

In our experiment, this architecture serves as the “Trap.” We explicitly prompt the LLM to use this pattern, then tempt it to break the pattern with a performance optimization request.

Technical debt typically refers to the implied cost of additional rework caused by choosing an easy (limited) solution now instead of using a better approach that would take longer [1]. In the context of LLMs, we observe a phenomenon we term “Generative Debt”:

- Structural Debt: The code runs, but the file organization violates modularity principles.
- Hallucinated Complexity: The model generates unnecessary boilerplate (such as manual linked lists) instead of using standard libraries.
- Implementation Laziness: The model generates method stubs (for instance, a bare pass statement) instead of implementing complex logic, creating a false sense of completeness.
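Stub-style omissions of this kind can be flagged with a simple AST pass. This is a minimal sketch under our own assumptions, not the study's detector: a function counts as a stub if, after an optional docstring, its body contains only `pass`, `...`, or `raise NotImplementedError`. Methods decorated with `abstractmethod` are exempted because ports are intentionally empty. The helper names are hypothetical.

```python
import ast


def _is_stub_statement(stmt: ast.stmt) -> bool:
    """True for `pass`, a bare `...`, or `raise NotImplementedError[(...)]`."""
    if isinstance(stmt, ast.Pass):
        return True
    if isinstance(stmt, ast.Expr) and isinstance(stmt.value, ast.Constant):
        return stmt.value.value is Ellipsis
    if isinstance(stmt, ast.Raise) and stmt.exc is not None:
        exc = stmt.exc.func if isinstance(stmt.exc, ast.Call) else stmt.exc
        return isinstance(exc, ast.Name) and exc.id == "NotImplementedError"
    return False


def _is_abstract(func: ast.AST) -> bool:
    """Abstract port methods are intentionally empty, so they are not counted."""
    return any(
        (isinstance(d, ast.Name) and d.id == "abstractmethod")
        or (isinstance(d, ast.Attribute) and d.attr == "abstractmethod")
        for d in func.decorator_list
    )


def find_stub_functions(source: str) -> list[str]:
    """Return sorted names of functions whose body has no real logic."""
    stubs = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)) or _is_abstract(node):
            continue
        body = node.body
        # Drop a leading docstring: it documents behavior but implements nothing.
        if (body and isinstance(body[0], ast.Expr)
                and isinstance(body[0].value, ast.Constant)
                and isinstance(body[0].value.value, str)):
            body = body[1:]
        if not body or all(_is_stub_statement(s) for s in body):
            stubs.append(node.name)
    return sorted(stubs)


sample = '''
class LoanService:
    def checkout(self, isbn):
        """Lend a book."""
        pass

    def due_date(self, loan):
        return loan.start + 14
'''
print(find_stub_functions(sample))  # ['checkout']
```

Ports declared as `typing.Protocol` classes (whose methods also use `...` bodies) would need a similar exemption in a real pipeline.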

Research at the intersection of software engineering and AI has focused primarily on snippet generation. The pass@k metric established in [3] measures only input-output correctness. However, as noted in [7], “code health” at Google depends on readability, maintainability, and dependency management, properties that pass@k ignores. Recent reviews have highlighted the gap in architectural evaluation of these models [8].
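For reference, pass@k is commonly computed with an unbiased estimator: for each problem, draw n samples, count the c samples that pass the unit tests, and estimate pass@k = 1 - C(n-c, k) / C(n, k), the probability that at least one of k samples drawn without replacement passes. The minimal sketch below renders that formula with `math.comb`; its only inputs are pass/fail counts, which is exactly why it cannot see architectural structure.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem.

    n: generated samples, c: samples passing the tests, k: sampling budget.
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    # comb(n - c, k) is 0 when fewer than k samples fail, giving an estimate of 1.0.
    return 1.0 - comb(n - c, k) / comb(n, k)


# Example: 10 samples, 3 of which pass.
print(round(pass_at_k(10, 3, 1), 3))  # 0.3
print(round(pass_at_k(10, 3, 5), 3))  # 0.917
```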

Technical debt is metaphorically defined as “code that imposes interest payments on future development.” We extend this definition to AI-generated code. Traditional static analysis tools (SonarQube, Radon) measure Cyclomatic Complexity (CC) and Maintainability Index (MI). Our study is unique in applying these metrics specifically to the architectural boundaries of LLM-generated code, testing whether the models respect the “Ports and Adapters” pattern [5].
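To make Cyclomatic Complexity concrete, the sketch below approximates McCabe complexity for a single function as one plus the number of decision points, using only the standard `ast` module. It is a simplified stand-in assumed for illustration: Radon applies its own, more detailed counting rules, so scores from this function and from Radon may differ.

```python
import ast

# Node types counted as one extra decision point each in this simplified scheme.
_DECISION_NODES = (ast.If, ast.IfExp, ast.For, ast.AsyncFor, ast.While, ast.ExceptHandler)


def cyclomatic_complexity(func_source: str) -> int:
    """Approximate McCabe complexity of one function: 1 + number of decision points."""
    complexity = 1
    for node in ast.walk(ast.parse(func_source)):
        if isinstance(node, _DECISION_NODES):
            complexity += 1
        elif isinstance(node, ast.BoolOp):
            # `a and b and c` creates one extra path per additional operand.
            complexity += len(node.values) - 1
    return complexity


src = '''
def renew(loan, user):
    if loan.overdue and not user.is_admin:
        return False
    for hold in loan.holds:
        if hold.active:
            return False
    return True
'''
print(cyclomatic_complexity(src))  # 5
```

An `elif` is represented as a nested `If` node, so each branch of an if/elif chain is counted once.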

Our methodology follows a three-stage pipeline: Generation, Static Analysis, and Metrics Aggregation. Figure 1 illustrates the automated workflow used to process the microservices (N = 15 total). We used a uniform sampling temperature of 0.2 for all models to keep outputs close to deterministic and adherent to the instructions.
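The Metrics Aggregation stage reduces per-service findings to per-model figures. A minimal sketch, assuming each analyzed microservice is summarized as a record holding the generating model, its violation count, and its LLOC (the `ServiceResult` type and its fields are hypothetical): a model's Architectural Violation Rate is the share of its services with at least one violation. If the N = 15 services are split evenly across the three models, each model contributes five, so four violating services correspond to an 80% rate.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class ServiceResult:
    model: str       # which LLM generated the microservice
    violations: int  # dependency-rule violations found by static analysis
    lloc: int        # logical lines of code in the generated service


def aggregate(results: list[ServiceResult]) -> dict[str, dict[str, float]]:
    """Per-model violation rate (share of services with >= 1 violation) and mean LLOC."""
    grouped: dict[str, list[ServiceResult]] = defaultdict(list)
    for r in results:
        grouped[r.model].append(r)
    summary = {}
    for model, runs in grouped.items():
        violating = sum(1 for r in runs if r.violations > 0)
        summary[model] = {
            "violation_rate": violating / len(runs),
            "mean_lloc": sum(r.lloc for r in runs) / len(runs),
        }
    return summary


# Illustrative inputs only, not the study's measurements.
demo = [ServiceResult("model-a", 0, 300), ServiceResult("model-a", 2, 260),
        ServiceResult("model-b", 1, 120), ServiceResult("model-b", 1, 100)]
print(aggregate(demo))
# {'model-a': {'violation_rate': 0.5, 'mean_lloc': 280.0}, 'model-b': {'violation_rate': 1.0, 'mean_lloc': 110.0}}
```

Counting violating services rather than summing raw violations keeps one badly coupled file from dominating a model's score.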

We used a “Conflicting Constraint” prompt design to stress-test the models. The prompt provided to all LLMs was the following:

> Act as a Senior Python Architect. Write a complete, file-by-file implementation of a 'Book Lending Microservice'.

Hexagonal Architecture (Ports and Adapters) mandates that the Domain Layer must have no outside dependencies. To trigger potential failures, we injected a conflicting requirement: “Optimize performance using caching.” A robust architect would solve this by defining a CacheInterface in the domain. A weak architect (or model) would lazily import redis or sqlite3 directly into the domain logic. Figure 2 visualizes this divergence.
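The “robust architect” response to the caching requirement can be sketched as follows. This is a minimal illustration under our own assumptions, not code produced by any model in the study: the domain declares a `CacheInterface` port (here a `typing.Protocol`), the domain service depends only on that port, and an infrastructure adapter supplies the implementation. `BookAvailabilityService`, `InMemoryCacheAdapter`, and `slow_lookup` are hypothetical names, and a dictionary stands in for Redis so the example runs without external services.

```python
from typing import Callable, Optional, Protocol


# --- Domain layer: declares the port; no infrastructure imports here ---
class CacheInterface(Protocol):
    def get(self, key: str) -> Optional[str]: ...

    def set(self, key: str, value: str) -> None: ...


class BookAvailabilityService:
    """Domain service that knows only the CacheInterface abstraction."""

    def __init__(self, cache: CacheInterface, lookup: Callable[[str], str]) -> None:
        self._cache = cache
        self._lookup = lookup  # hypothetical slow source of truth: isbn -> status

    def status(self, isbn: str) -> str:
        cached = self._cache.get(isbn)
        if cached is not None:
            return cached
        result = self._lookup(isbn)
        self._cache.set(isbn, result)
        return result


# --- Infrastructure layer: an adapter satisfying the port (dict stands in for Redis) ---
class InMemoryCacheAdapter:
    def __init__(self) -> None:
        self._store: dict[str, str] = {}

    def get(self, key: str) -> Optional[str]:
        return self._store.get(key)

    def set(self, key: str, value: str) -> None:
        self._store[key] = value


# --- Composition root: the only place that knows about both layers ---
calls: list[str] = []


def slow_lookup(isbn: str) -> str:
    calls.append(isbn)
    return "available"


service = BookAvailabilityService(InMemoryCacheAdapter(), slow_lookup)
print(service.status("978-0"), service.status("978-0"), len(calls))  # available available 1
```

A Redis-backed adapter would live beside `InMemoryCacheAdapter` in the infrastructure layer and satisfy the same port, leaving the domain module free of any `import redis`.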

To ensure quantitative rigor, we utilized the Radon static analysis library to compute three key metrics:

- Logical Lines of Code (LLOC): A measure of implementation density, ignoring whitespace and comments.
