We investigate input normalization methods for Time-Series Foundation Models (TSFMs). While normalization is well-studied in dataset-specific time-series models, it remains overlooked in TSFMs where generalization is critical. Time-series data, unlike text or images, exhibits significant scale variation across domains and channels, coupled with non-stationarity, can undermine TSFM performance regardless of architectural complexity. Through systematic evaluation across four architecturally diverse TSFMs, we empirically establish REVIN as the most efficient approach, reducing zero-shot MASE by 89\% relative to an un-normalized baseline and by 44\% versus other normalization methods, while matching the best in-domain accuracy (0.84 MASE) without any dataset-level preprocessing -- yielding the highest accuracy-efficiency trade-off. Yet its effect utilization depends on architectural design choices and optimization objective, particularly with respect to training loss scale sensitivity and model type (probabilistic, point-forecast, or LLM-based models).

Time Series Foundation Models (TSFMs) have emerged as an effective alternative to traditional one-model-per-dataset approaches in time series analysis. By pretraining models on large-scale, diverse time series datasets, TSFMs learn to capture complex temporal dynamics and enable knowledge transfer across domains. TSFMs have shown promising results in zero-shot forecasting and model generalization across various domains and unseen datasets, even challenging or exceeding the performance of full-shot models at times [1,2].

TSFMs face unique challenge stemming from time series data’s heterogeneous, non-stationary nature [3]. Scale and distribution shifts across domains and intra-domain features challenge generalization ability [4]. Hence, data normalization pivotally impacts TSFMs’ performance and generalization, yet systematic comparisons of normalization methods in TSFMs remain lacking despite advances in data normalization research in domain-specific settings [5,6,7]. Current literature for TSFMs often adopts heuristic normalization choices without evidence-based justification.

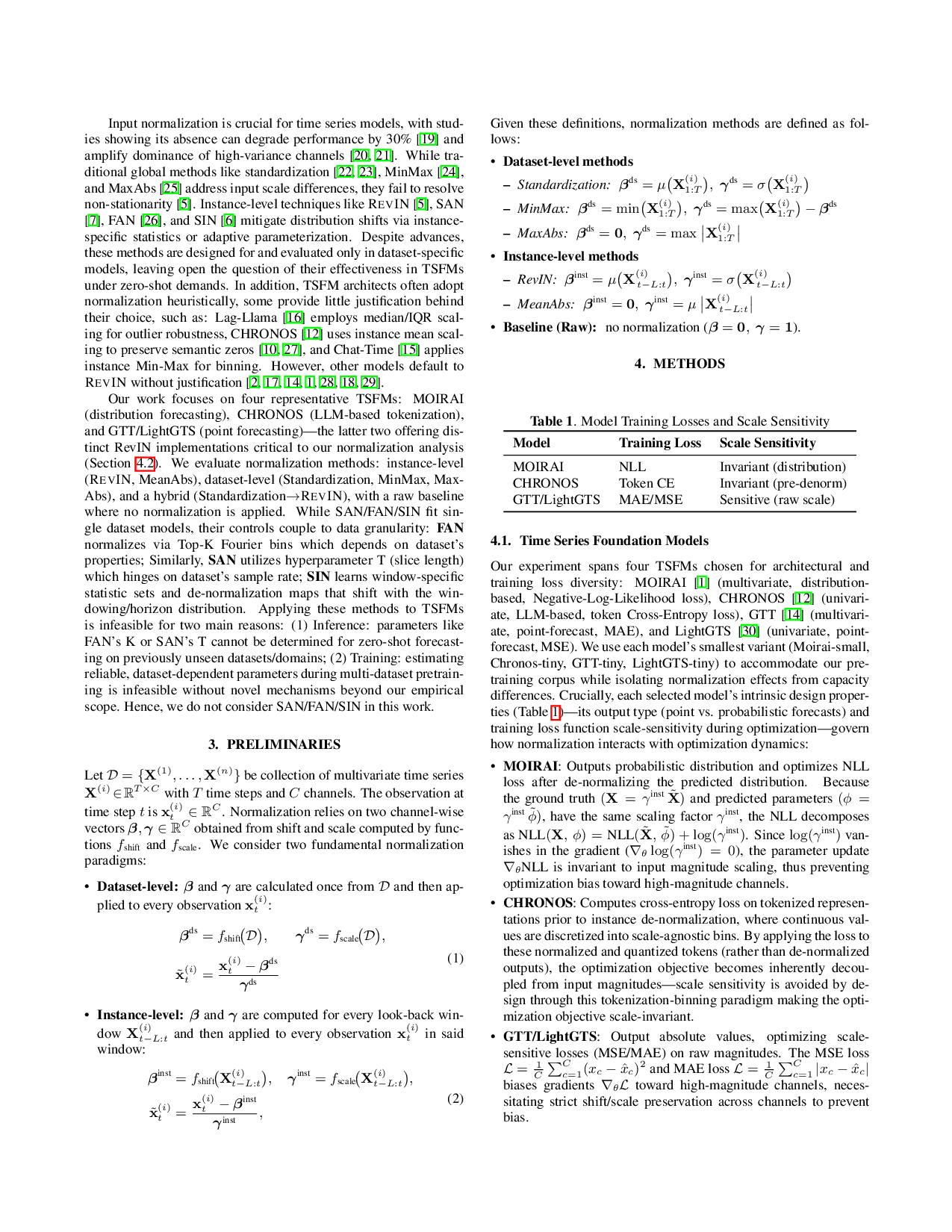

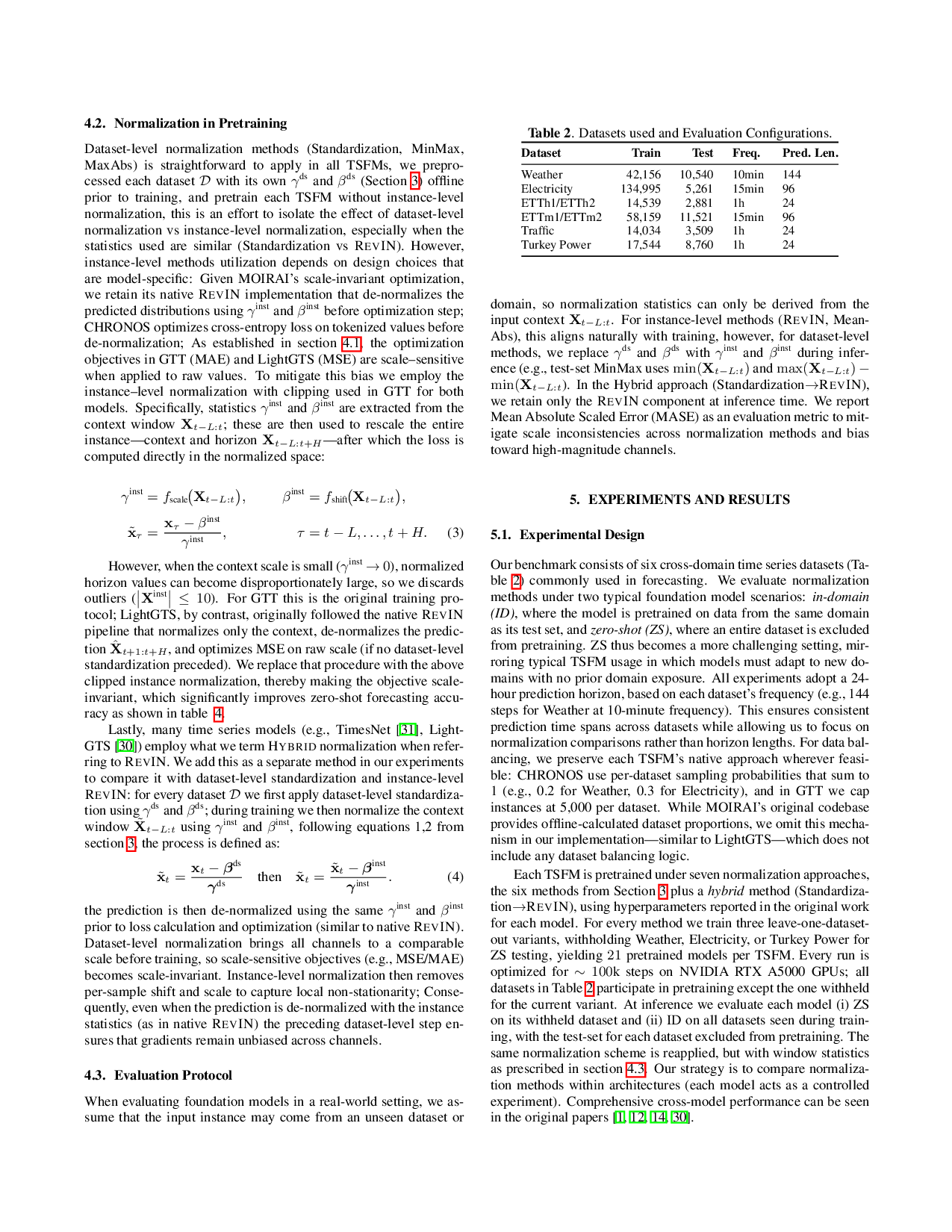

Motivated by this gap, we conduct experiments to quantify data normalization’s effect on TSFMs, and to answer questions: How do alternative normalization choices-specified by (i) the underlying statistics computed (mean -std, min -max, max-absolute, etc.) and (ii) the scope at which those statistics are measured (dataset-level versus instance-level)-influence the performance of TSFMs?, and what guidelines to follow for selecting and adapting normalization methods for optimal cross-domain adaptation?. Our contributions include: (1) a comparative analysis of data normalization in TSFMs, investigating its essential role in cross-domain generalization; (2) systematic evaluation of six normalization methods (REVIN, Mean-Abs, Standardization+REVIN, Standardization, MinMax, MaxAbs) across four TSFMs (MOIRAI, CHRONOS, GTT, LightGTS) which span different architectural designs and training protocols, using multi-domain datasets spanning energy, transportation, and meteorology; (3) empirically showing that mean/std-based normalization methods consistently outperform others, improving TSFM forecasting accuracy relative to the raw baseline by approximately 89% (zero-shot) and 79% (in-domain), and by approximately 44% (zero-shot) and 28% (in-domain) relative to alternative normalization methods. (4) empirical insights into how REVIN’s effective utilization varies with model architecture design and optimization objective scale-sensitivity.

Zero-shot generalization in time series forecasting is challenged by non-stationarity and distribution shifts-a problem amplified in Time Series Foundation Models (TSFMs) by heterogeneous pretraining corpora that mix sampling rates and channel counts [3,8,9] as well as significant variation in numerical magnitudes across different datasets and channels [10,11,12]. MOIRAI counters this with frequency-aware patch in/out projections, Any-variate Attention, and a mixture output head to handle arbitrary horizons and dimensions [1]. MOIRAI-MoE adds token-level sparse experts that specialize to regimes and frequencies without manual routing [9]. Time-MoE scales sparse experts to billion-parameter capacity with multi-resolution heads, activating only a few experts per token [13]. CHRONOS normalizes cross-dataset scale by performing instance scaling+quantization for each variate to a fixed vocabulary and then use a T5-style LLM backbone [12]. GTT predicts next curve shape using temporal attention as well as cross-channel attention [14]. LightGTS aligns heterogeneous periods via cyclic tokenization and periodical decoding [15]. Toto uses patch-wise causal scaling, factorized time-variate attention, and pretrain on a large mixed-frequency corpus [2]. Lag-Llama conditions on explicit lag covariates for portable zero/few-shot use [16]. Time-LLM reprograms frozen LLMs with prompt/prefix tuning [17]. MOMENT pretrains with masked patches on diverse corpora for frequencyagnostic transfer, and Chat-Time discretizes series for multimodal text-conditioned forecasting [18,15]. Input normalization is crucial for time series models, with studies showing its absence can degrade performance by 30% [19] and amplify dominance of high-variance channels [20,21]. While traditional global methods like standardization [22,23], MinMax [24], and MaxAbs [25] address input scale differences, they fail to resolve non-stationarity [5]. Instance-level techniques like REVIN [5], SAN [7], FAN [26], and SIN [6] mitigate distribution shifts via instancespecific statistics or adaptive parameterization. Despite advances, these methods are designed for and evaluated only in dataset-specific models, leaving open the question of their effectiveness in TSFMs under zero-shot demands. In addition, TSFM architects often adopt normalization heuristically, some provide little justification behind their choice, such as: Lag-Llama [16] employs median/IQR scaling for

This content is AI-processed based on open access ArXiv data.