The rise of large language models (LLMs) has sparked a surge of interest in agents, leading to the rapid growth of agent frameworks. Agent frameworks are software toolkits and libraries that provide standardized components, abstractions, and orchestration mechanisms to simplify agent development. Despite the widespread use of agent frameworks, their practical applications and their influence on the agent development process remain underexplored. Different agent frameworks exhibit similar problems in use, indicating that these recurring issues deserve greater attention and call for further improvements in agent framework design. Meanwhile, as the number of agent frameworks continues to grow and evolve, more than 80% of developers report difficulties in identifying the frameworks that best meet their specific development requirements. In this paper, we conduct the first empirical study of LLM-based agent frameworks, exploring the real-world experiences of developers in building AI agents. To compare how well the agent frameworks meet developer needs, we further collect developer discussions for the ten agent frameworks identified in our study, resulting in a total of 11,910 discussions. Finally, by analyzing these discussions, we compare the frameworks across five dimensions: development efficiency, functional abstraction, learning cost, performance optimization, and maintainability, where maintainability refers to how easily developers can update and extend both the framework itself and the agents built upon it over time. Our comparative analysis reveals significant differences in how well the frameworks meet the needs of agent developers. Overall, we provide a set of findings and implications for the LLM-driven AI agent framework ecosystem and offer insights for both the designers of future LLM-based agent frameworks and agent developers.

Agent frameworks are software toolkits and libraries that provide standardized components, abstractions, and orchestration mechanisms to simplify the construction of agents [56]. With the rapid progress of large language models (LLMs), the landscape of agent development has expanded substantially. More than 100 open-source agent frameworks have emerged on GitHub [42], collectively accumulating over 400,000 stars and 70,000 forks. These frameworks enable developers to build autonomous agents for diverse purposes such as reasoning, tool use, and collaboration [16,70,83].
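To make this definition concrete, the following is a minimal, framework-agnostic Python sketch of the three ingredients named above: a standardized component (a `Tool`), an abstraction over the model (a `policy` callable that stands in for an LLM), and an orchestration loop with a simple termination policy (`max_steps`). All names are hypothetical and do not reproduce the API of any framework studied in this paper.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Tool:
    """Hypothetical standardized component: a named, described callable."""
    name: str
    description: str
    run: Callable[[str], str]


@dataclass
class Step:
    """One decision from the policy: call a tool, or finish when tool is None."""
    tool: Optional[str]
    argument: str


class Agent:
    """Hypothetical orchestration loop between a policy (normally an LLM) and tools."""

    def __init__(self, policy: Callable[[List[str]], Step], max_steps: int = 5):
        self.policy = policy
        self.tools: Dict[str, Tool] = {}
        # Termination policy: a hard step limit that prevents infinite loops.
        self.max_steps = max_steps

    def register(self, tool: Tool) -> None:
        self.tools[tool.name] = tool

    def run(self, task: str) -> str:
        history: List[str] = [f"task: {task}"]
        for _ in range(self.max_steps):
            step = self.policy(history)
            if step.tool is None:
                return step.argument  # the policy chose to finish
            tool = self.tools.get(step.tool)
            if tool is None:
                # Record the failure so the policy can react on the next step.
                history.append(f"error: unknown tool {step.tool!r}")
                continue
            history.append(f"{tool.name}: {tool.run(step.argument)}")
        return "stopped: step limit reached"


def scripted_policy(history: List[str]) -> Step:
    # Stand-in for an LLM: call the tool once, then answer with its output.
    if len(history) == 1:
        return Step(tool="upper", argument="hello")
    return Step(tool=None, argument=history[-1].split(": ", 1)[1])


agent = Agent(scripted_policy)
agent.register(Tool("upper", "Uppercase the input", str.upper))
print(agent.run("shout hello"))  # HELLO

# A policy that never finishes is cut off by the termination policy.
looping = Agent(lambda history: Step(tool="upper", argument="again"), max_steps=3)
looping.register(Tool("upper", "Uppercase the input", str.upper))
print(looping.run("never finish"))  # stopped: step limit reached
```

Real frameworks layer much more on top of this (prompt templates, memory, multi-agent messaging), but the split between components, a model abstraction, and an orchestration loop with an explicit stopping rule is the core pattern the definition describes.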

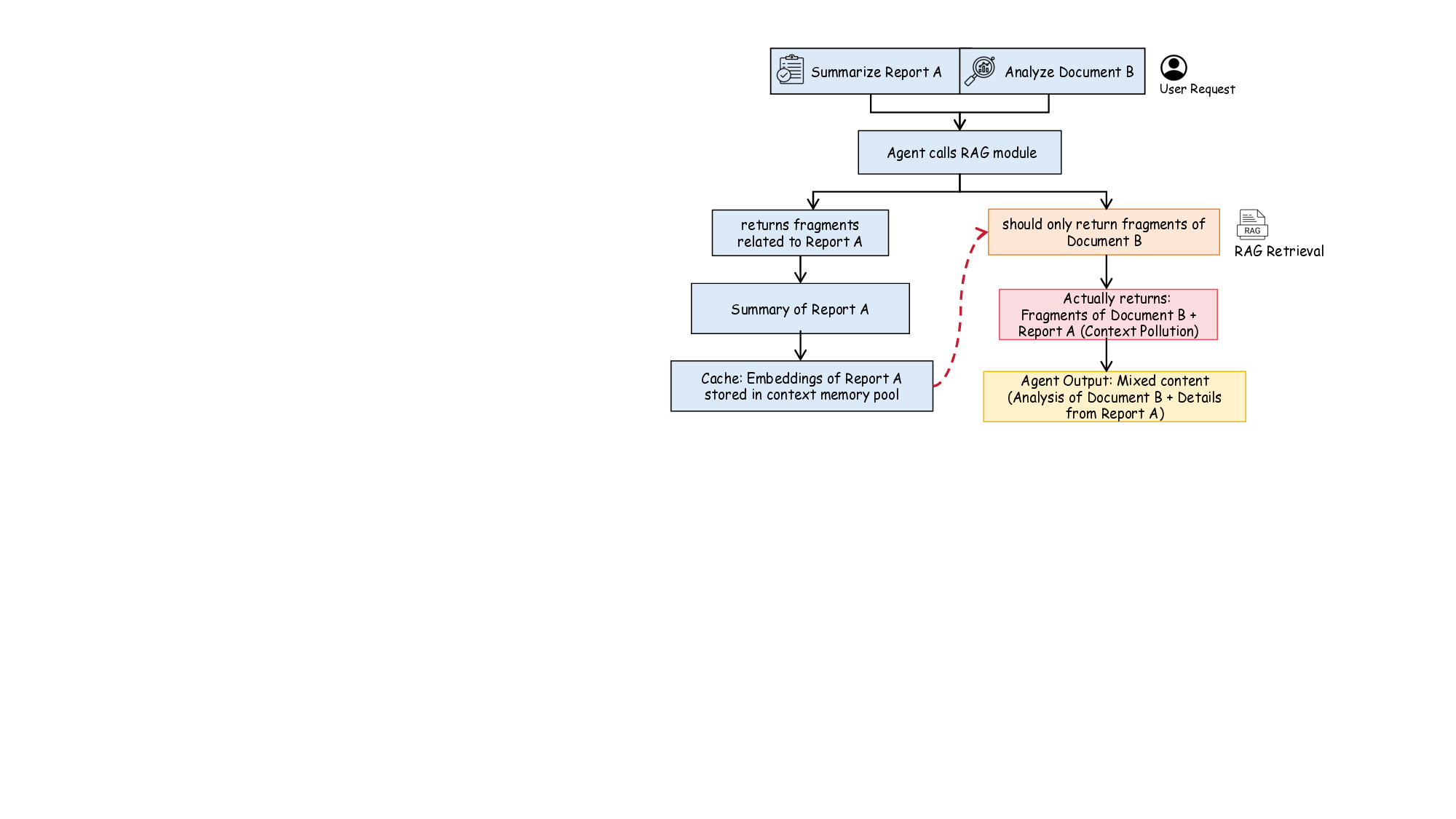

Research on intelligent agents has made significant progress, primarily focusing on the architectural design and application domains of agents, system robustness, and multi-agent coordination [6,38,40,54]. However, how agent development frameworks affect developers and the overall agent development process remains underexplored. Agent development, like conventional software engineering, follows the software development lifecycle (SDLC), which spans stages such as design, implementation, testing, deployment, and maintenance. Without a clear understanding of how agent development frameworks influence this process, developers face various challenges at different stages of the SDLC.

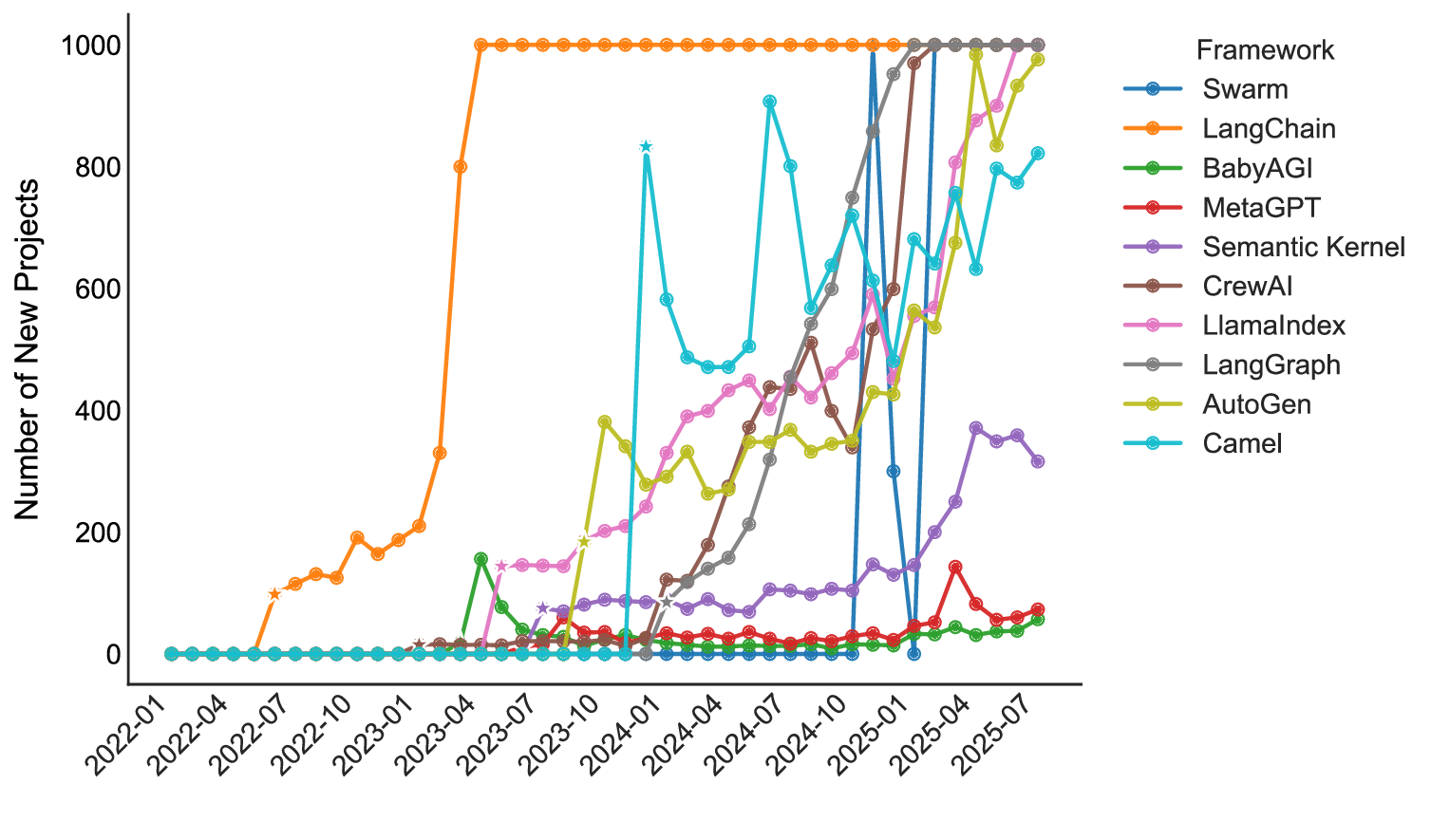

To fill this gap, we conduct the first empirical study to understand the challenges that developers encounter when building agents with frameworks. Our empirical study seeks to inform optimization directions for agent framework designers and to help agent developers select the frameworks best suited to their specific requirements. To this end, we collect 1,575 LLM-based agent projects and 8,710 related developer discussions, from which we identify ten widely used agent frameworks. We then collect and analyze 11,910 developer discussions specifically related to these frameworks.

Through this empirical investigation, we aim to answer the following three research questions that guide our study.

RQ1. How are LLM-based agent frameworks adopted and utilized by developers in real-world projects?

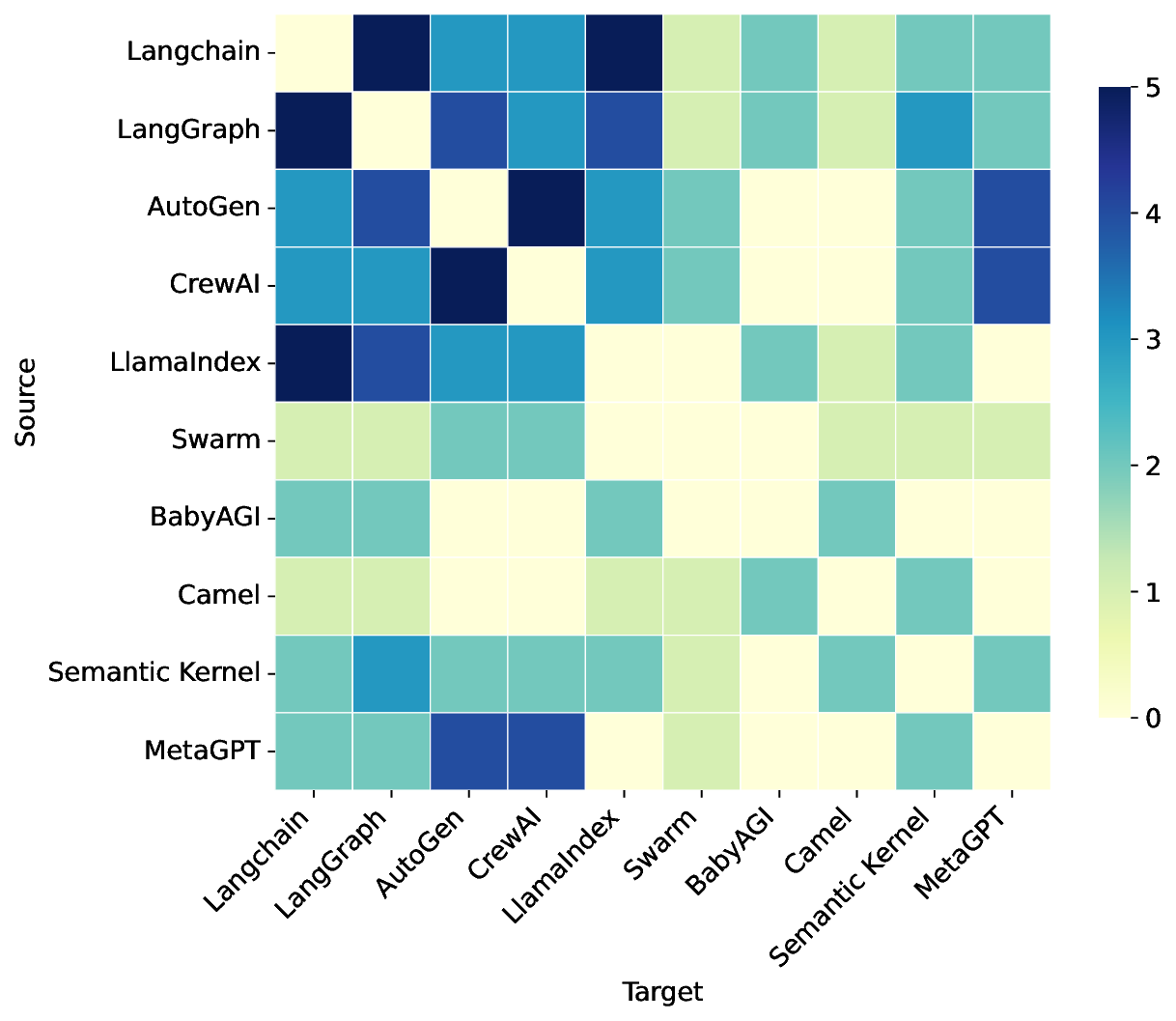

To build a foundational understanding of the current agent framework landscape, we first investigate how LLM-based agent development frameworks are adopted and utilized by developers in practice, including the roles they play in real-world applications, their adoption across projects, and their popularity trends over time. Specifically, we examine 8,710 discussion threads from three perspectives: (i) functional roles, meaning the kinds of development tasks each framework primarily supports; (ii) usage patterns, which describe the typical ways frameworks are integrated in practice; and (iii) community popularity, which refers to the level of developer discussion and attention. Our findings suggest that when selecting an agent framework, developers should prioritize ecosystem robustness, long-term maintenance activity, and practical adoption in real projects rather than focusing solely on short-term community popularity. We also observe that combining multiple frameworks with different functions has become the dominant approach to agent development.

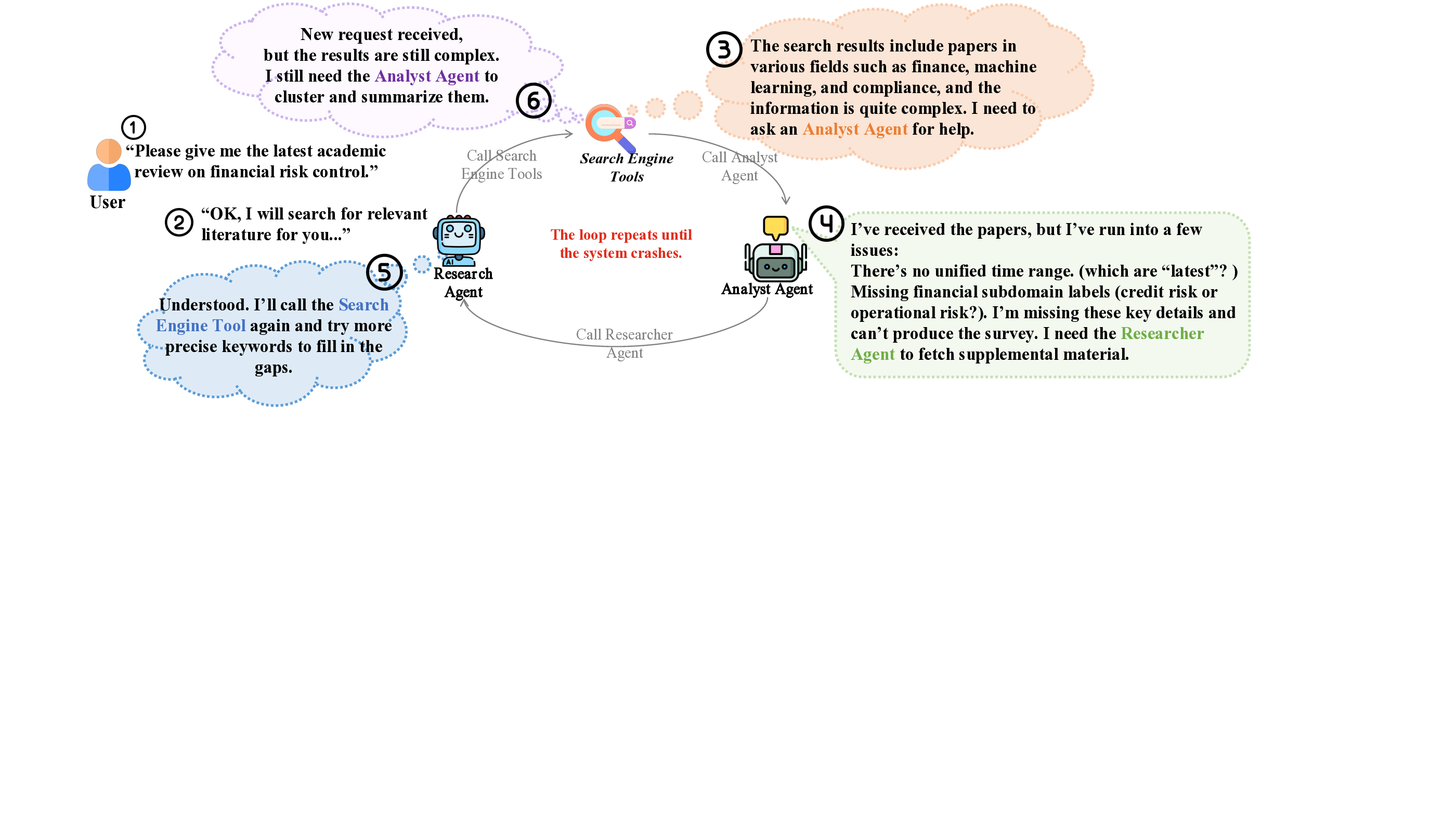

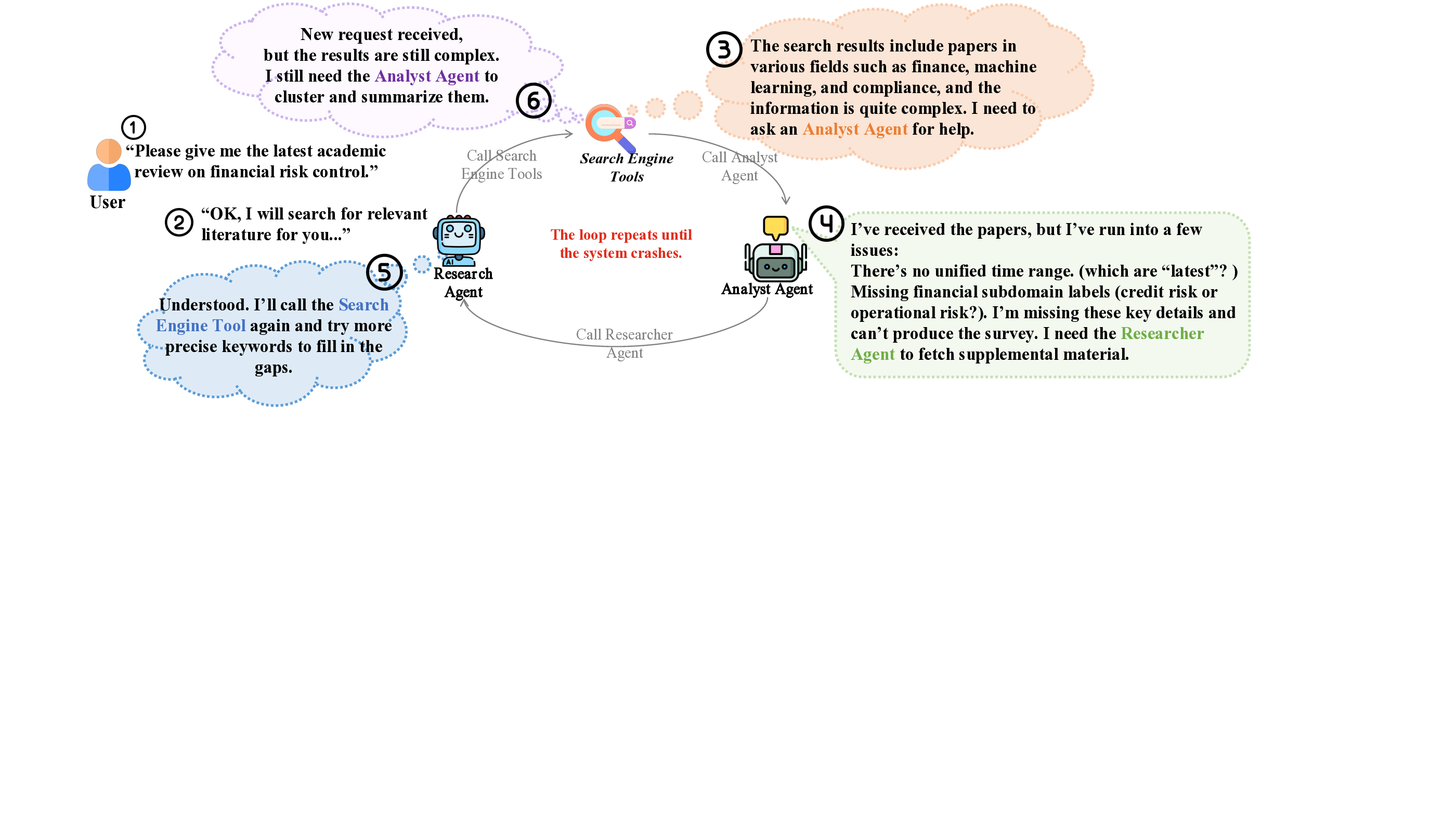

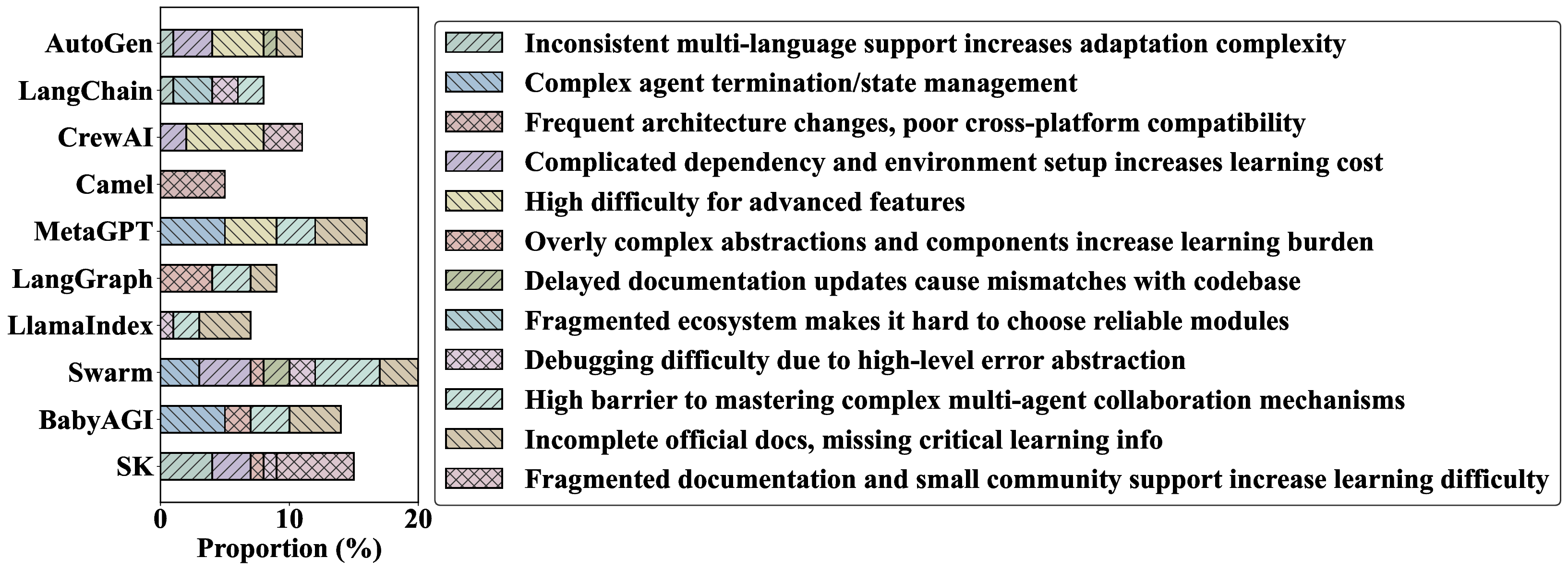

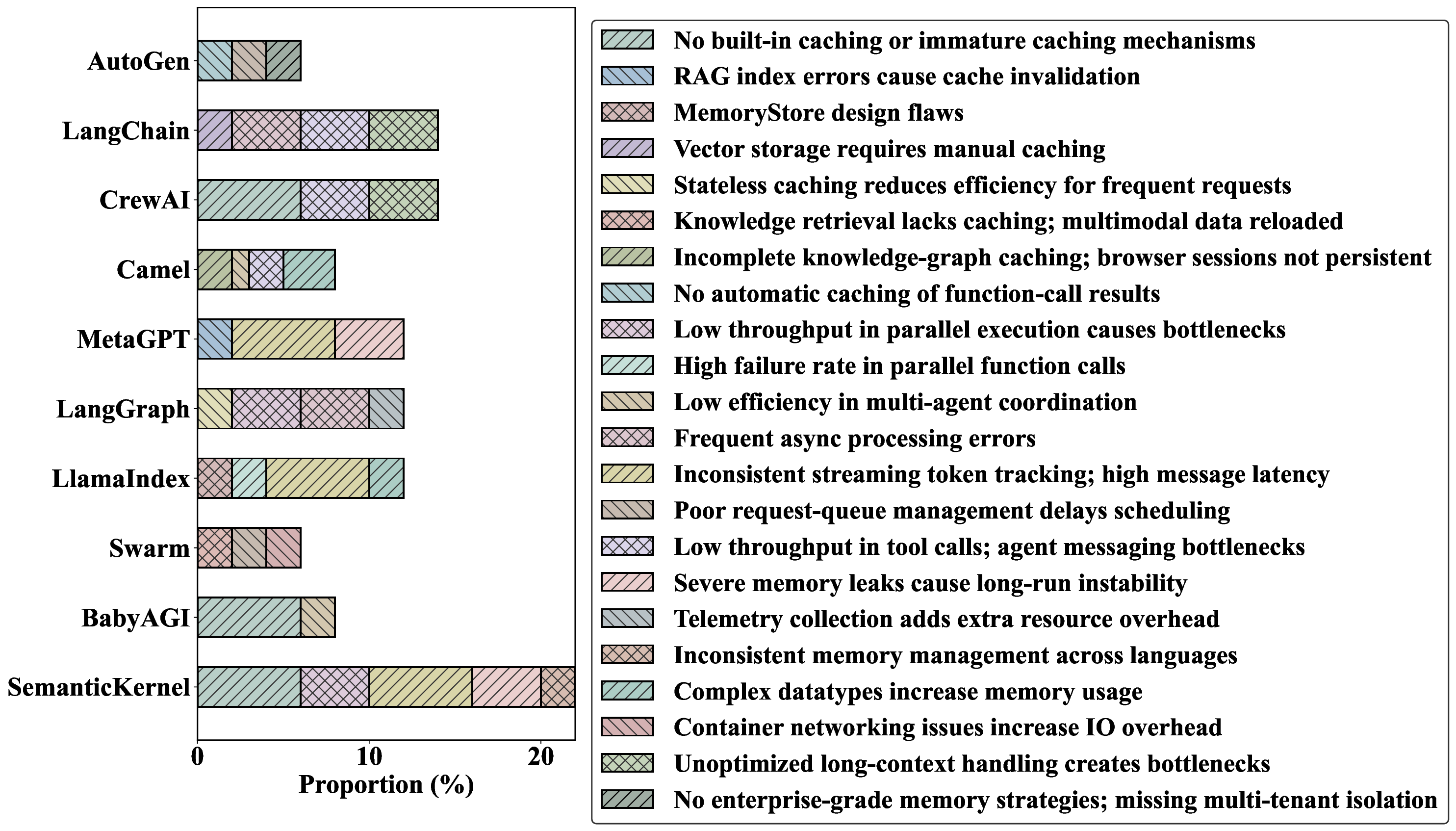

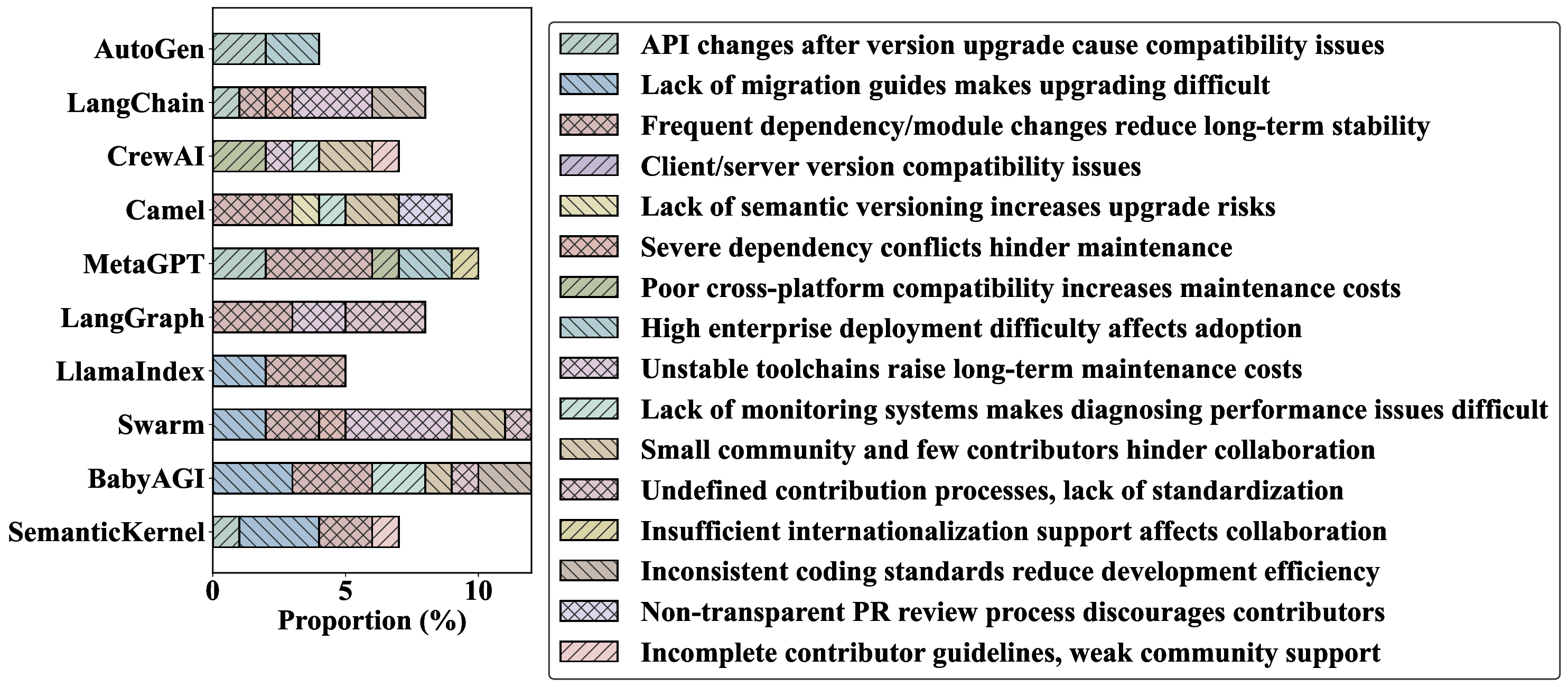

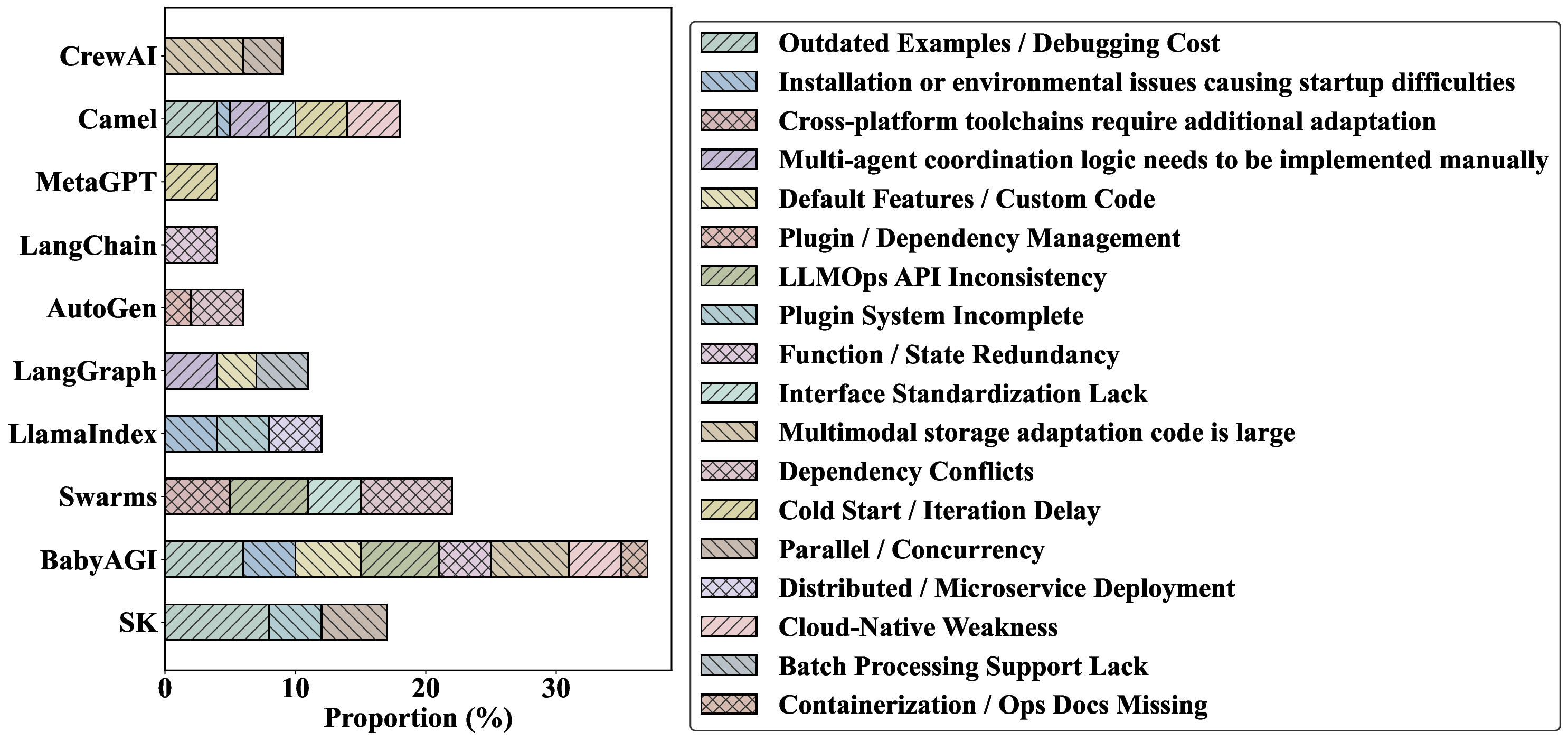

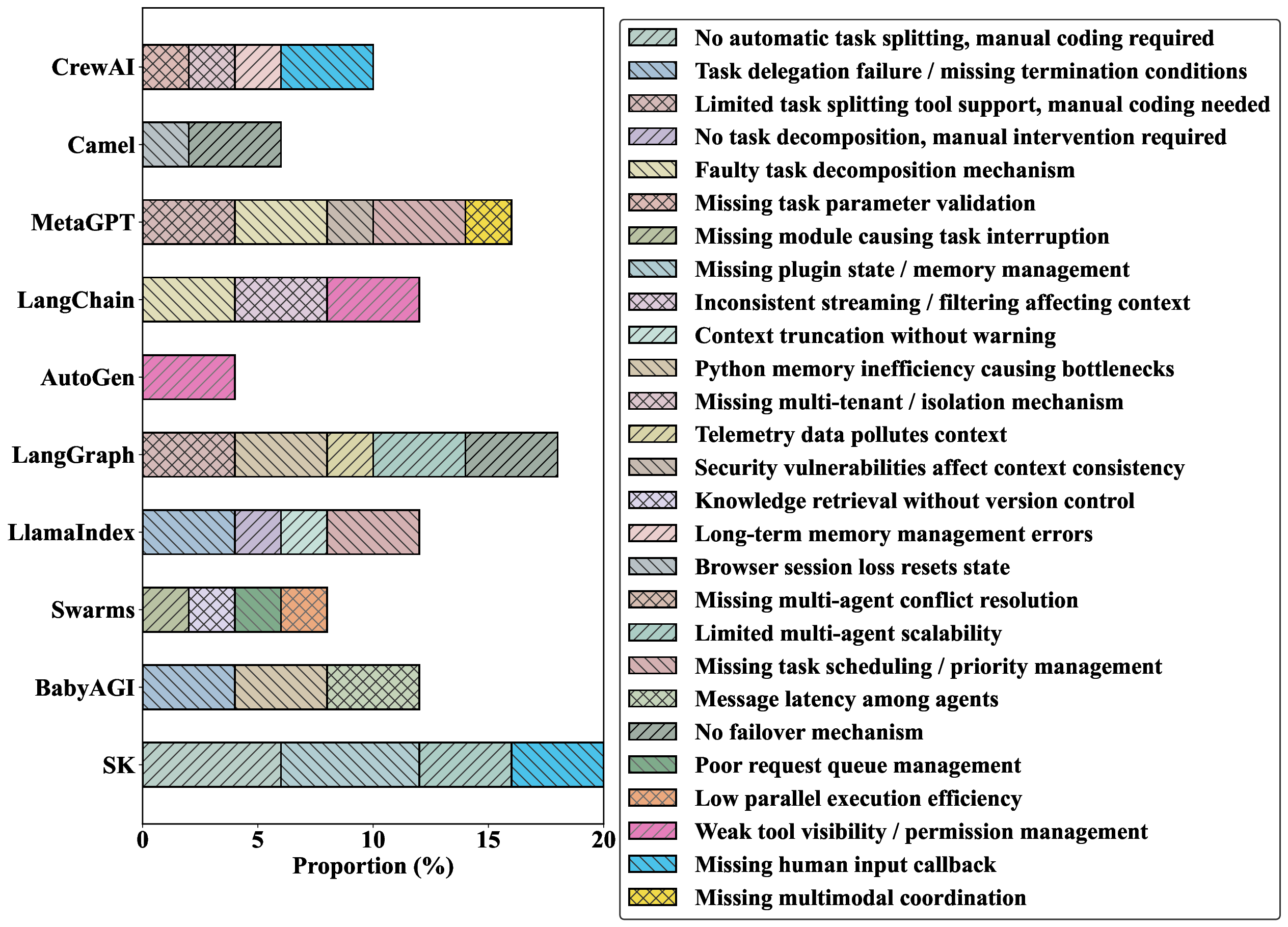

RQ2. What challenges do developers face across the SDLC when building AI agents with frameworks?

Building on the framework landscape and usage trends established in RQ1, we next examine the challenges developers encounter when using these frameworks in real-world development. We extract challenges from 8,710 GitHub discussions and classify them by SDLC stage. Our results show that developers encounter challenges in four main domains: (i) Logic: logic-related failures are faults that originate from deficiencies in the agent’s internal logic control mechanisms. This domain primarily spans the SDLC stages from early design to deployment. Issues related to task termination policies account for 25.6%, reflecting insufficient support in current frameworks for task flow management and infinite loop prevention. (ii) Tool: tool-related failures are faults that originate from deficiencies in the agent’s tool integration and interaction mechanisms. Spanning the implementation, testing, and deployment stages, tool-related issues account for 14% of reported problems; examples include API limitations, permission errors, and third-party library mismatches. (iii) Performance: performance-related failures are faults that originate from inefficiencies or limitations in the agent’s resource management and execution performance. Emerging from the implementation through the deployment stages, these challenges represent 25% of all challenges and manifest as failures in context retention, loss of dialogue history, and memory management errors. (iv) Version: version-related failures are faults that originate from incompatibilities caused by agent
