Spoken Language Models (SLMs) aim to learn linguistic competence directly from speech using discrete units, widening access to Natural Language Processing (NLP) technologies for languages with limited written resources. However, progress has been largely English-centric due to scarce spoken evaluation benchmarks and training data, making cross-lingual learning difficult. We present a cross-lingual interleaving method that mixes speech tokens across languages without textual supervision. We also release an EN-FR training dataset, TinyStories (~42k hours), together with EN-FR spoken StoryCloze and TopicCloze benchmarks for cross-lingual semantic evaluation, both synthetically generated using GPT-4. On 360M and 1B SLMs under matched training-token budgets, interleaving improves monolingual semantic accuracy, enables robust cross-lingual continuation, and strengthens cross-lingual hidden-state alignment. Taken together, these results indicate that cross-lingual interleaving is a simple, scalable route to building multilingual SLMs that understand and converse across languages. All resources will be made open-source to support reproducibility.

Spoken Language Models (SLMs) aim to learn language directly from speech, without relying on textual supervision. This line of work emerged from 'textless' NLP [1], which combines discrete speech representations [2] and neural language modelling [3,4] to capture both acoustic and linguistic regularities from audio alone. Within this framework, Generative Spoken Language Modelling (GSLM) formalises the objective by training an autoregressive LM on sequences of discrete speech units and reconstructing waveforms with a unit vocoder, with the motivation of broadening access to NLP in settings where written resources are scarce.

Despite rapid progress, the cross-lingual setting, which aims to train a single SLM over multiple languages, remains comparatively under-explored [5][6][7]. Two practical bottlenecks persist: (i) evaluations are predominantly English-centric, hindering measurement of cross-lingual semantic competence; and (ii) training methods that encourage sharing across languages often depend on text, for example via speech-text interleaving in Text-Speech LMs (TSLMs) [5,[8][9][10], which is not directly compatible with ’textless’ SLMs. As a result, the original promise of ’textless’ NLP learning from audio alone at scale and across languages has not yet been fully realised.

We address these limitations by introducing a cross-lingual interleaving scheme that mixes speech tokens from different languages within the same training sequence, using sentence-level alignments but no text tokens. Exposure to interleaved multilingual contexts encourages a shared representational subspace and promotes transfer, while remaining compatible with pure ’textless’ pipelines. To make this practical and measurable, we curate bilingual, sentence-aligned spoken resources and new spoken semantic benchmarks. Our contributions are as follows:

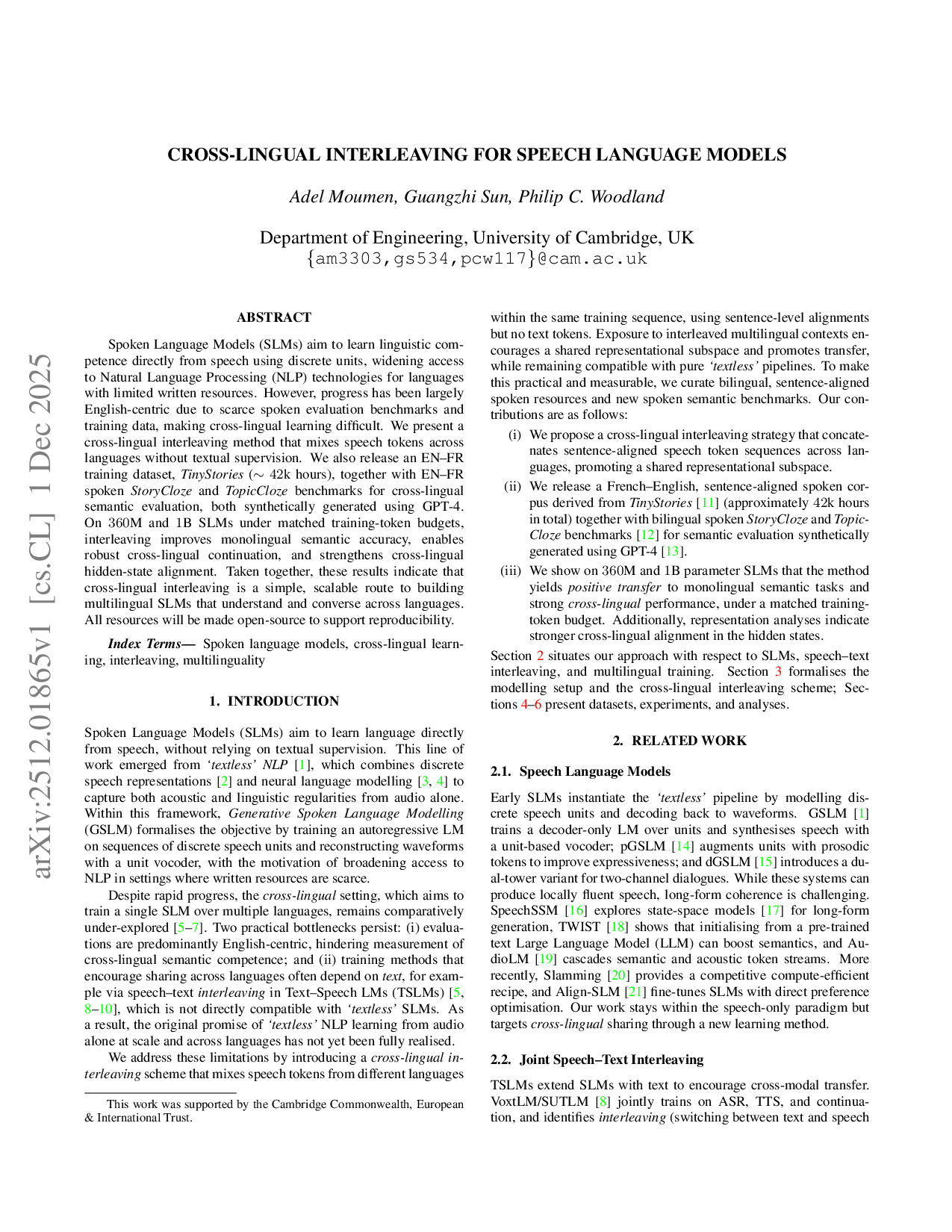

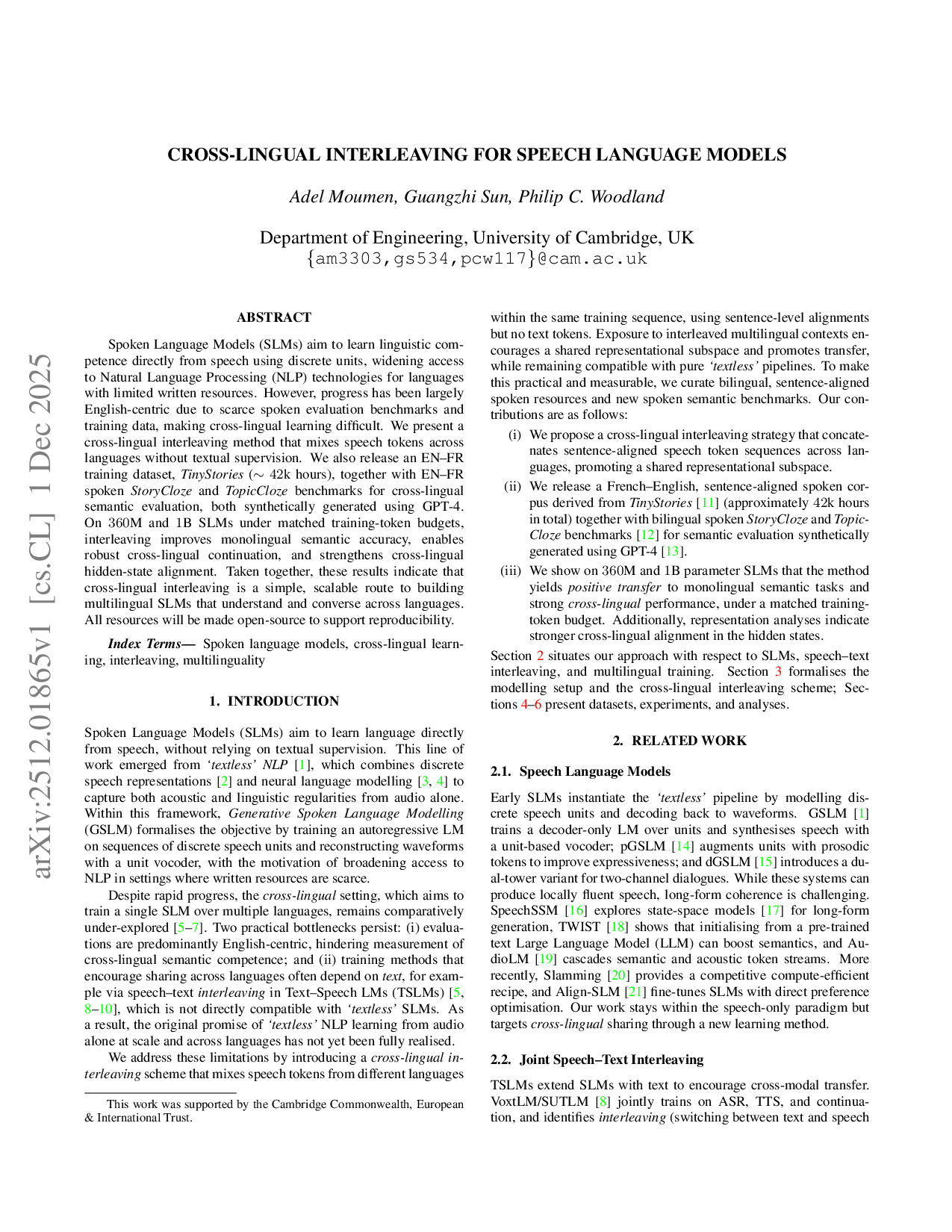

(i) We propose a cross-lingual interleaving strategy that concatenates sentence-aligned speech token sequences across languages, promoting a shared representational subspace. (ii) We release a French-English, sentence-aligned spoken corpus derived from TinyStories [11] (approximately 42k hours in total) together with bilingual spoken StoryCloze and Topic-Cloze benchmarks [12] for semantic evaluation synthetically generated using GPT-4 [13]. (iii) We show on 360M and 1B parameter SLMs that the method yields positive transfer to monolingual semantic tasks and strong cross-lingual performance, under a matched trainingtoken budget. Additionally, representation analyses indicate stronger cross-lingual alignment in the hidden states. Section 2 situates our approach with respect to SLMs, speech-text interleaving, and multilingual training. Section 3 formalises the modelling setup and the cross-lingual interleaving scheme; Sections 4-6 present datasets, experiments, and analyses.

Early SLMs instantiate the ’textless’ pipeline by modelling discrete speech units and decoding back to waveforms. GSLM [1] trains a decoder-only LM over units and synthesises speech with a unit-based vocoder; pGSLM [14] augments units with prosodic tokens to improve expressiveness; and dGSLM [15] introduces a dual-tower variant for two-channel dialogues. While these systems can produce locally fluent speech, long-form coherence is challenging. SpeechSSM [16] explores state-space models [17] for long-form generation, TWIST [18] shows that initialising from a pre-trained text Large Language Model (LLM) can boost semantics, and Au-dioLM [19] cascades semantic and acoustic token streams. More recently, Slamming [20] provides a competitive compute-efficient recipe, and Align-SLM [21] fine-tunes SLMs with direct preference optimisation. Our work stays within the speech-only paradigm but targets cross-lingual sharing through a new learning method.

TSLMs extend SLMs with text to encourage cross-modal transfer. VoxtLM/SUTLM [8] jointly trains on ASR, TTS, and continuation, and identifies interleaving (switching between text and speech arXiv:2512.01865v1 [cs.CL] 1 Dec 2025 inside a sequence) as particularly effective for building a shared space. Spirit-LM [5] scales this recipe and emphasises interleaving as a key quality driver; SmolTolk [9] proposes architecture refinements for vocabulary expansion; and GLM4-Voice [6] leverages a text-to-token model [10] to generate audio tokens on-the-fly, sidestepping explicit aligners [22,23]. However, these approaches depend on text and interleave within a single language, limiting direct applicability to textless, cross-lingual SLM training. We instead interleave speech tokens across languages without introducing text.

Multilingual training for speech LMs is gaining traction but remains under-evaluated on spoken semantic understanding beyond English. Spirit-LM [5] includes multiple languages at scale but reports only English spoken evaluations; GLM4-Voice [6] trains on Chinese and English yet evaluates English semantics; and scheduled interleaving for speech-to-speech translation [7

This content is AI-processed based on open access ArXiv data.