
CycliST: A Video Language Model Benchmark for Reasoning on Cyclical State Transitions

Simon Kohaut1,2,∗

SIMON.KOHAUT@CS.TU-DARMSTADT.DE

Daniel Ochs1,∗

DANIEL.OCHS@CS.TU-DARMSTADT.DE

Shun Zhang1

SHUN.ZHANG@STUD.TU-DARMSTADT.DE

Benedict Flade3

BENEDICT.FLADE@HONDA-RI.DE

Julian Eggert3

JULIAN.EGGERT@HONDA-RI.DE

Kristian Kersting1,5,6,7

KERSTING@CS.TU-DARMSTADT.DE

Devendra Singh Dhami4

D.S.DHAMI@TUE.NL

1Artificial Intelligence and Machine Learning Lab, TU Darmstadt

2Konrad Zuse School of Excellence in Learning and Intelligent Systems (ELIZA)

3Honda Research Institute Europe GmbH, Offenbach, Germany

4Uncertainty in Artificial Intelligence Group, TU Eindhoven

5Hessian Center for AI (hessian.AI)

6Center for Cognitive Science

7German Center for Artificial Intelligence (DFKI)

*Authors contributed equally

Reviewed on OpenReview: https://openreview.net/forum?id=XXXX

Abstract

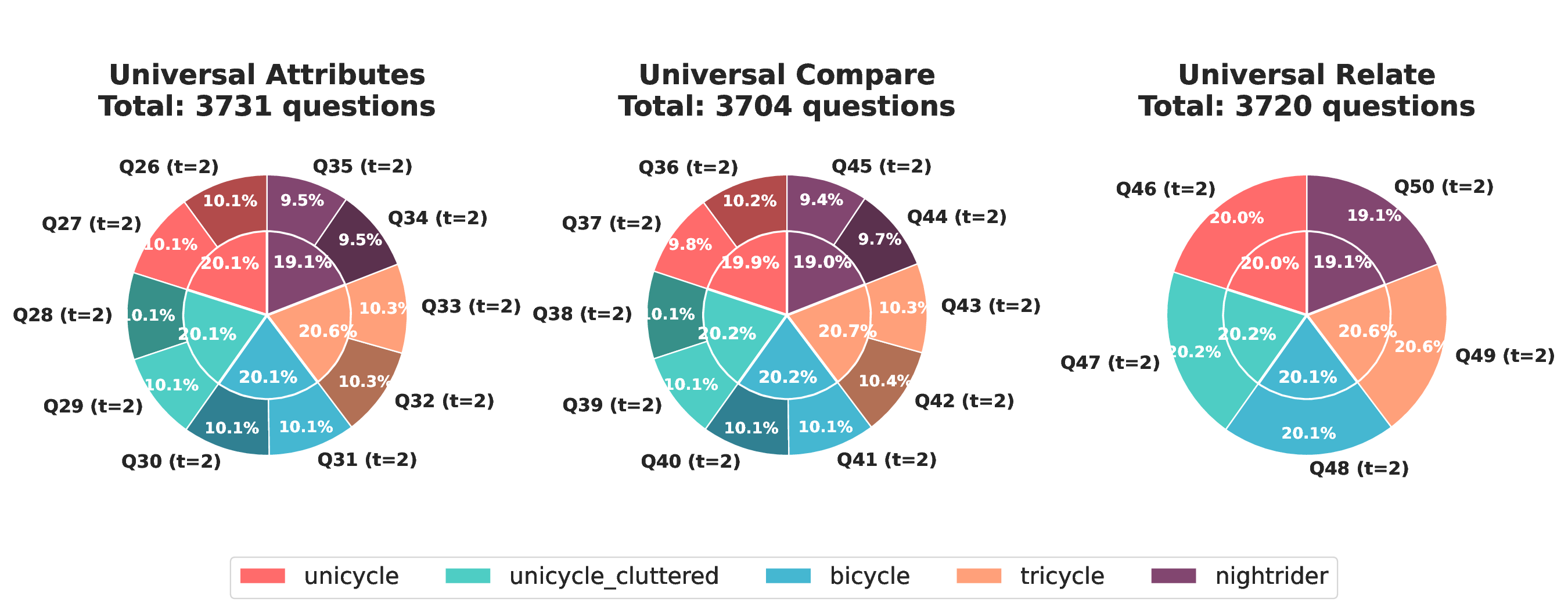

We present CycliST, a novel benchmark dataset designed to evaluate Video Language Models (VLMs) on their ability to perform textual reasoning over cyclical state transitions. CycliST captures fundamental aspects of real-world processes by generating synthetic, richly structured video sequences featuring periodic patterns in object motion and visual attributes. CycliST employs a tiered evaluation system that progressively increases difficulty by varying the number of cyclic objects, scene clutter, and lighting conditions, challenging the spatio-temporal cognition of state-of-the-art models. We conduct extensive experiments with current state-of-the-art VLMs, both open-source and proprietary, and reveal their limitations in generalizing to cyclical dynamics such as linear and orbital motion, as well as to time-dependent changes in visual attributes such as color and scale. Our results demonstrate that present-day VLMs struggle to reliably detect and exploit cyclic patterns, lack a notion of temporal understanding, and are unable to extract quantitative insights from scenes, such as the number of objects in motion, highlighting a significant technical gap that needs to be addressed. More specifically, we find that no single model consistently leads in performance: neither size nor architecture correlates strongly with outcomes, and no model succeeds equally well across all tasks. By providing a targeted challenge and a comprehensive evaluation framework, CycliST paves the way for visual reasoning models that surpass the state of the art in understanding periodic patterns.

Keywords: Video Question Answering, Scene Understanding, Spatio-Temporal Reasoning, Benchmark Dataset

©2025 Simon Kohaut, Daniel Ochs, Shun Zhang, Benedict Flade, Julian Eggert, Kristian Kersting, Devendra Singh Dhami.

arXiv:2512.01095v1 [cs.CV] 30 Nov 2025

S. KOHAUT, D. OCHS, B. FLADE, J. EGGERT, K. KERSTING, D. S. DHAMI

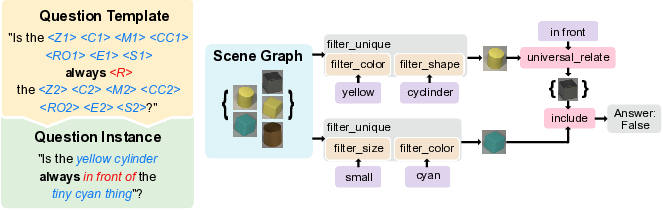

LLM Judge

Video Language

Model

Task: Describe the scene.

Scene

Understanding

Video Question

Answering

Q: What color is the orbiting object?

Question

Generation

Scene Graph

Scene Generation

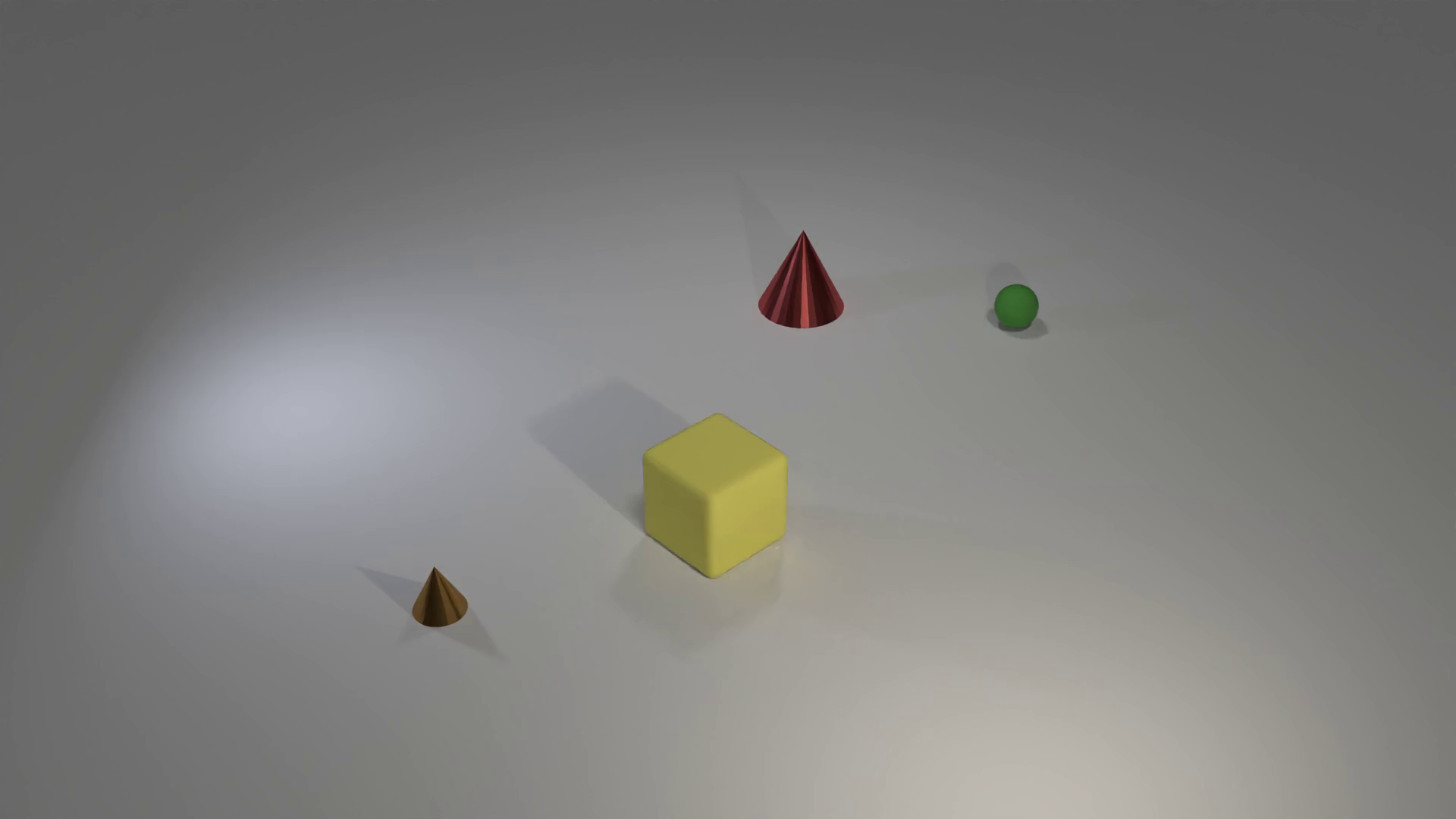

Color Change

Orientation Change

Linear

Motion Cycles

Light Cycles

Orbiting

Size Change

Attribute Cycles

- Cyclical State Transitions

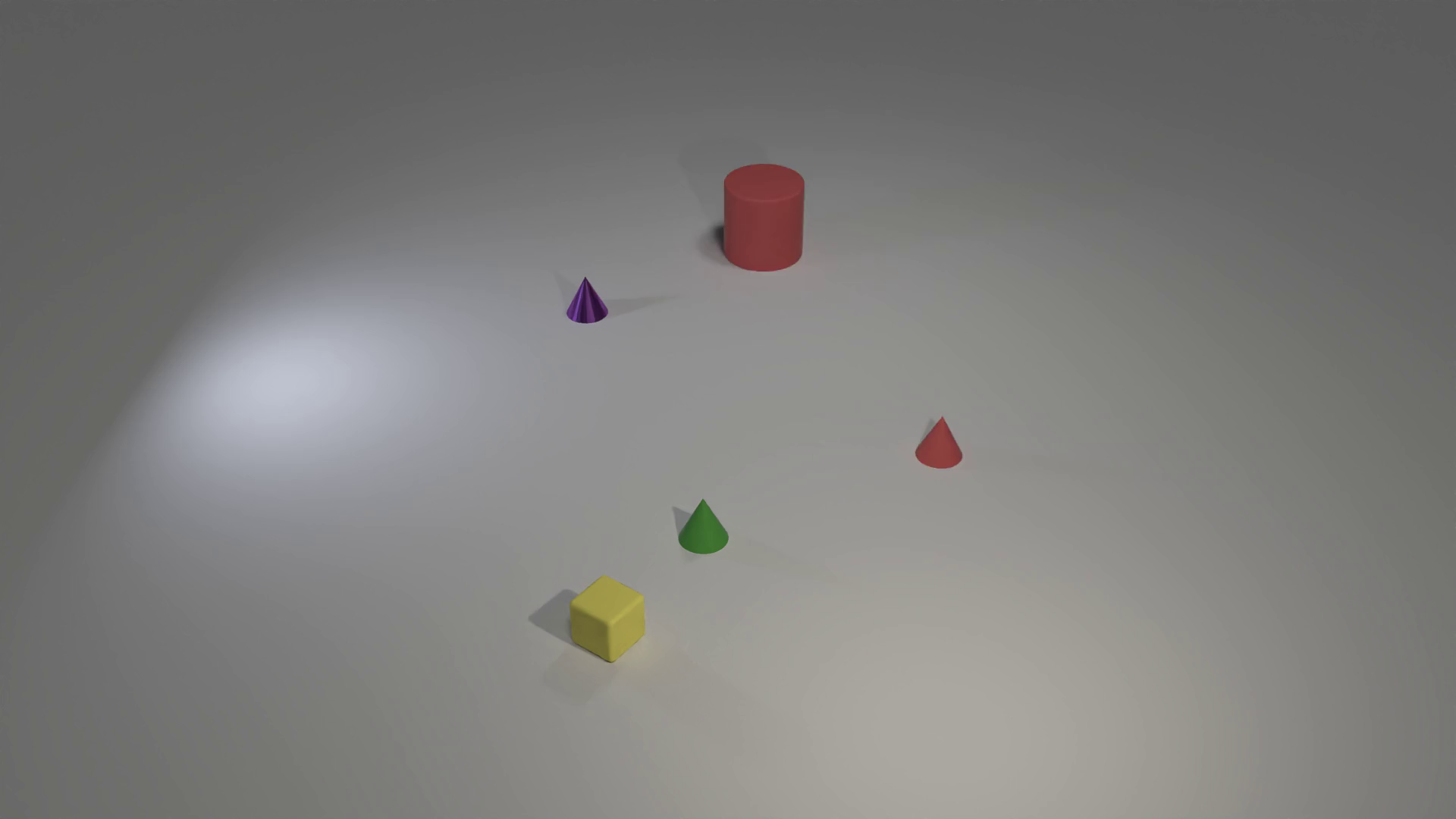

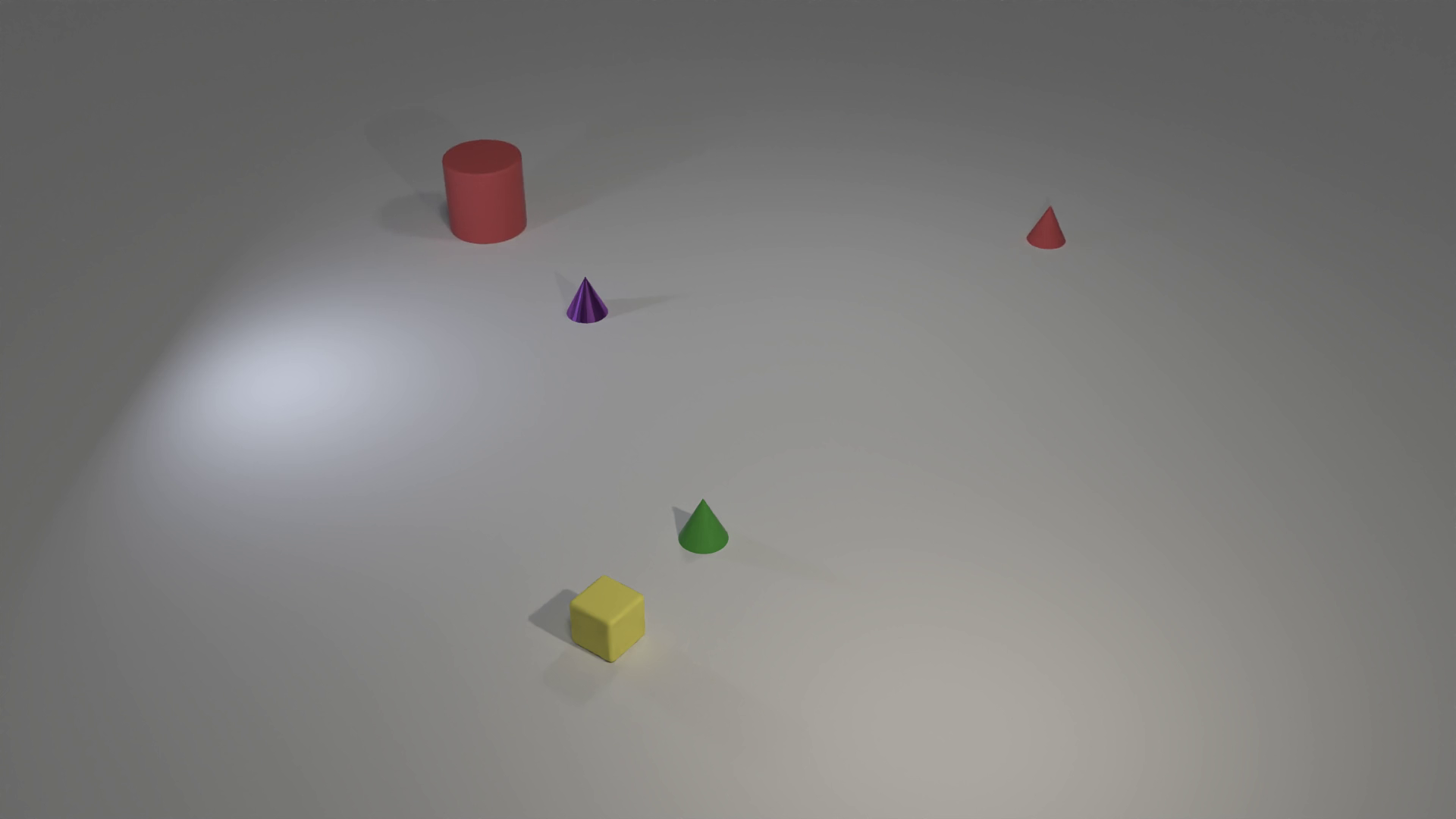

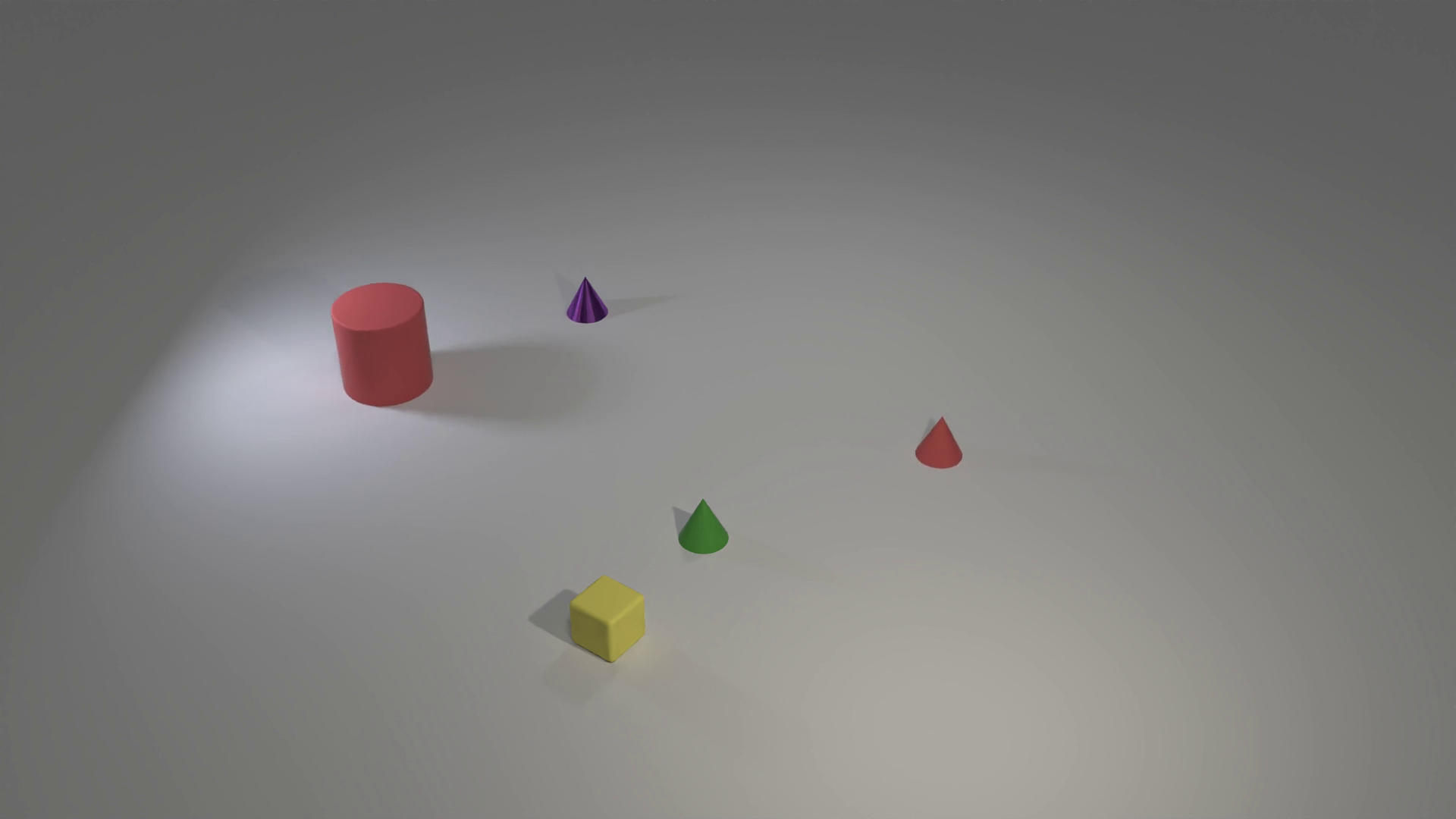

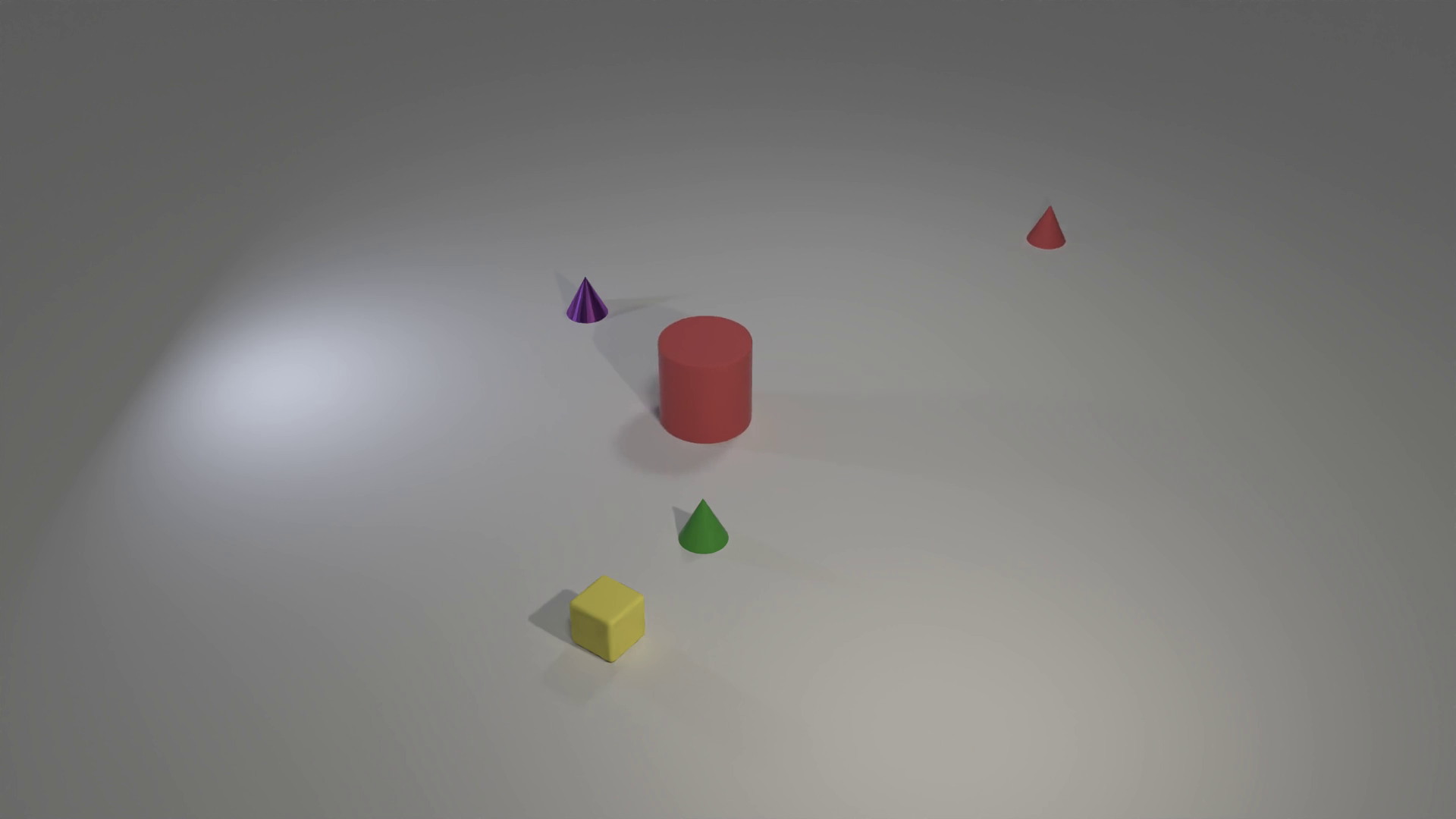

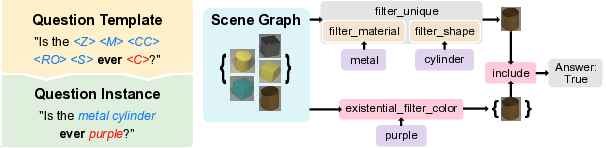

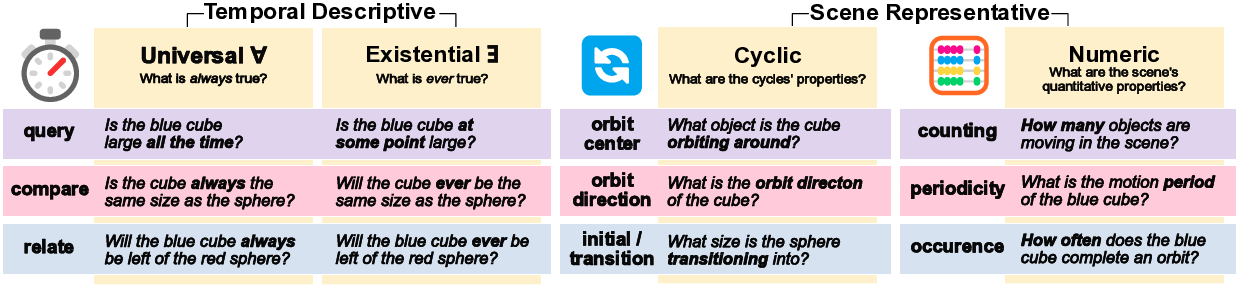

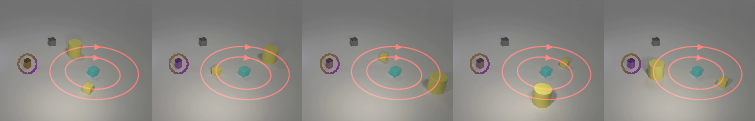

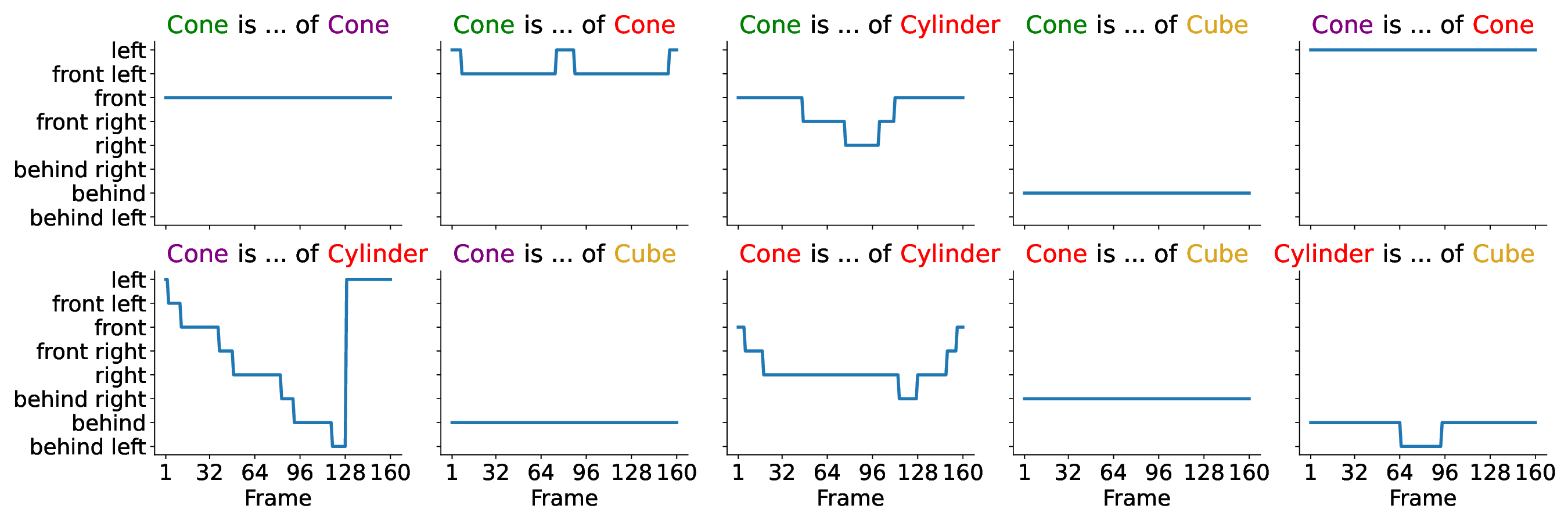

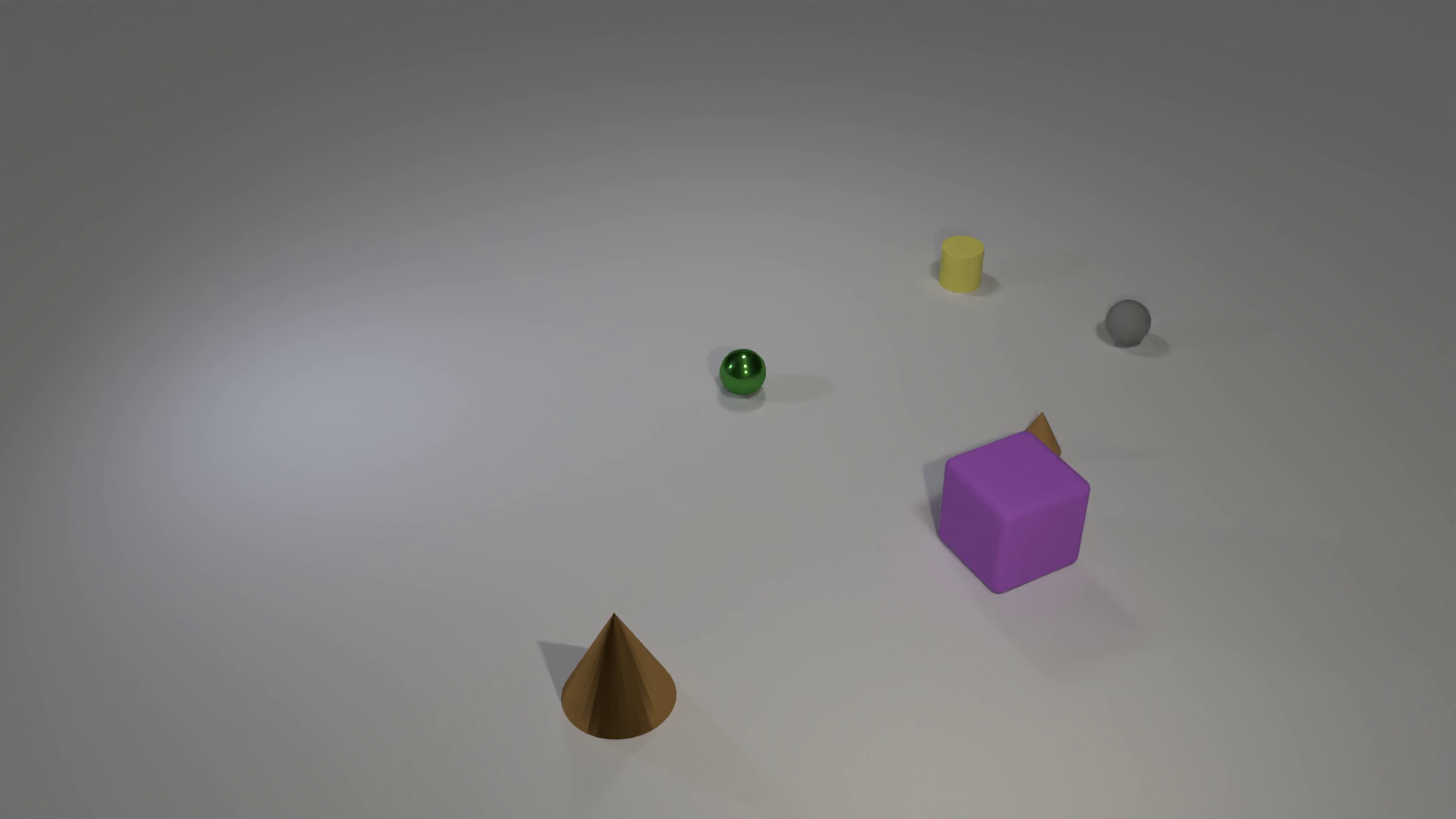

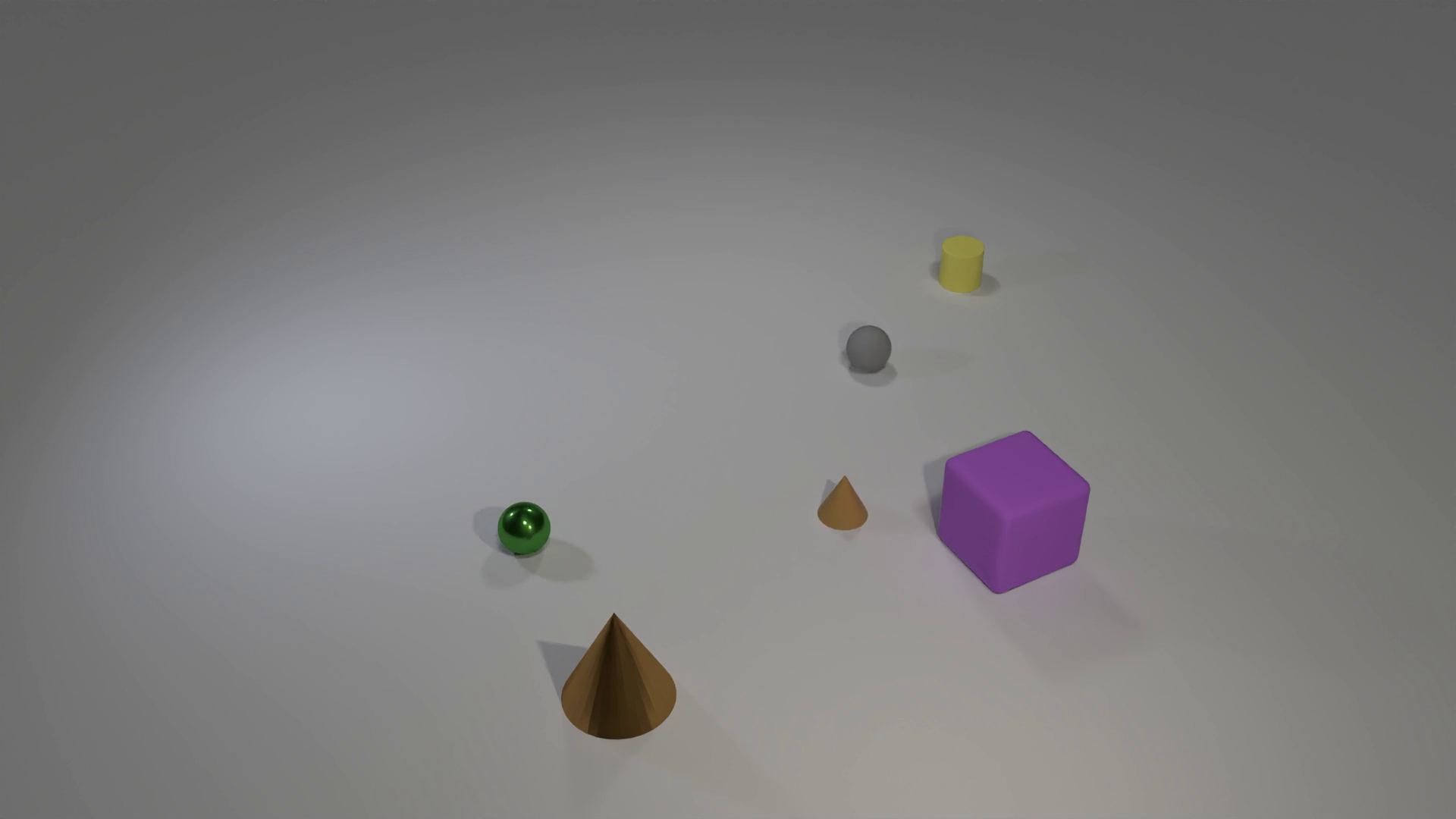

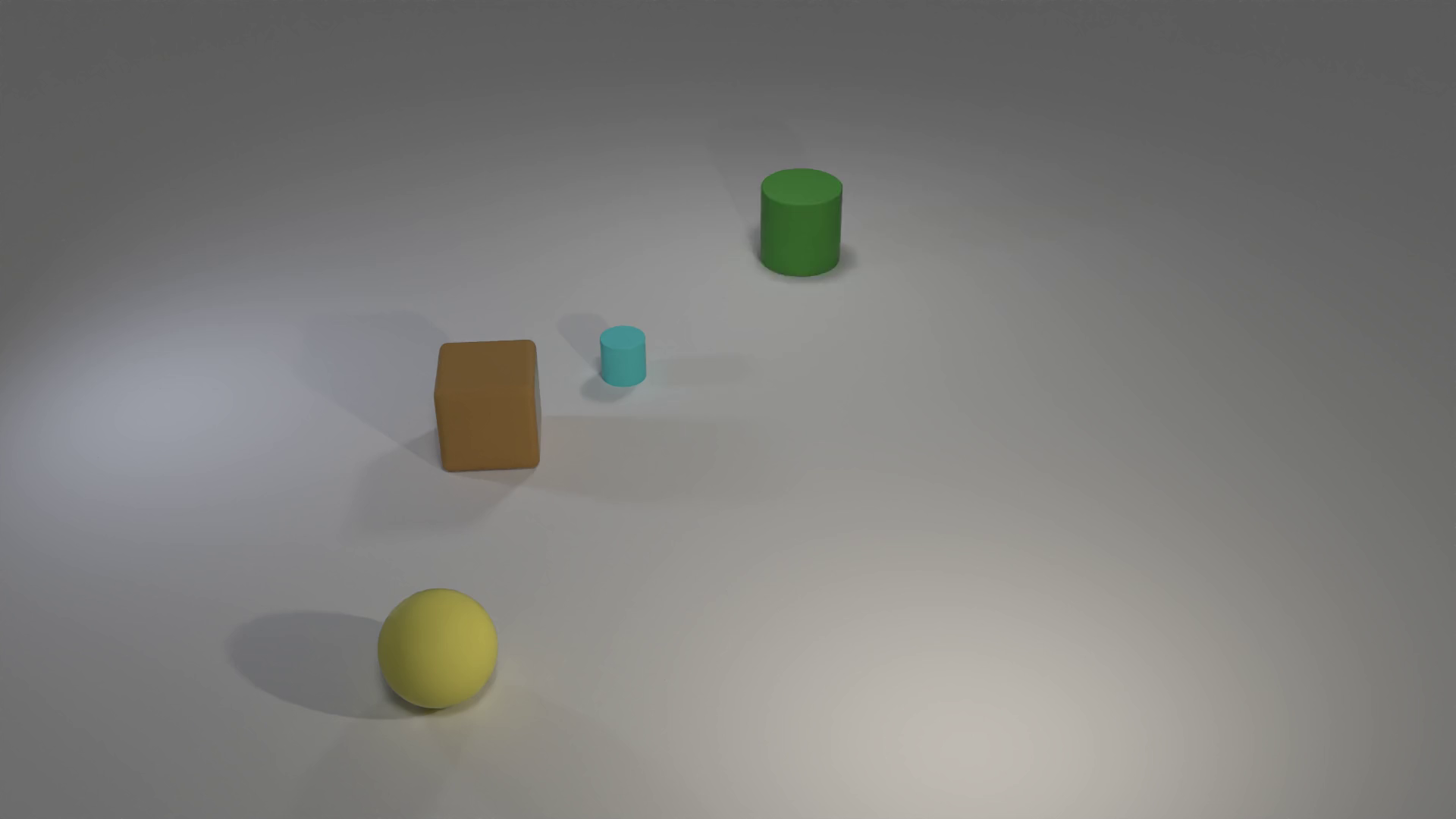

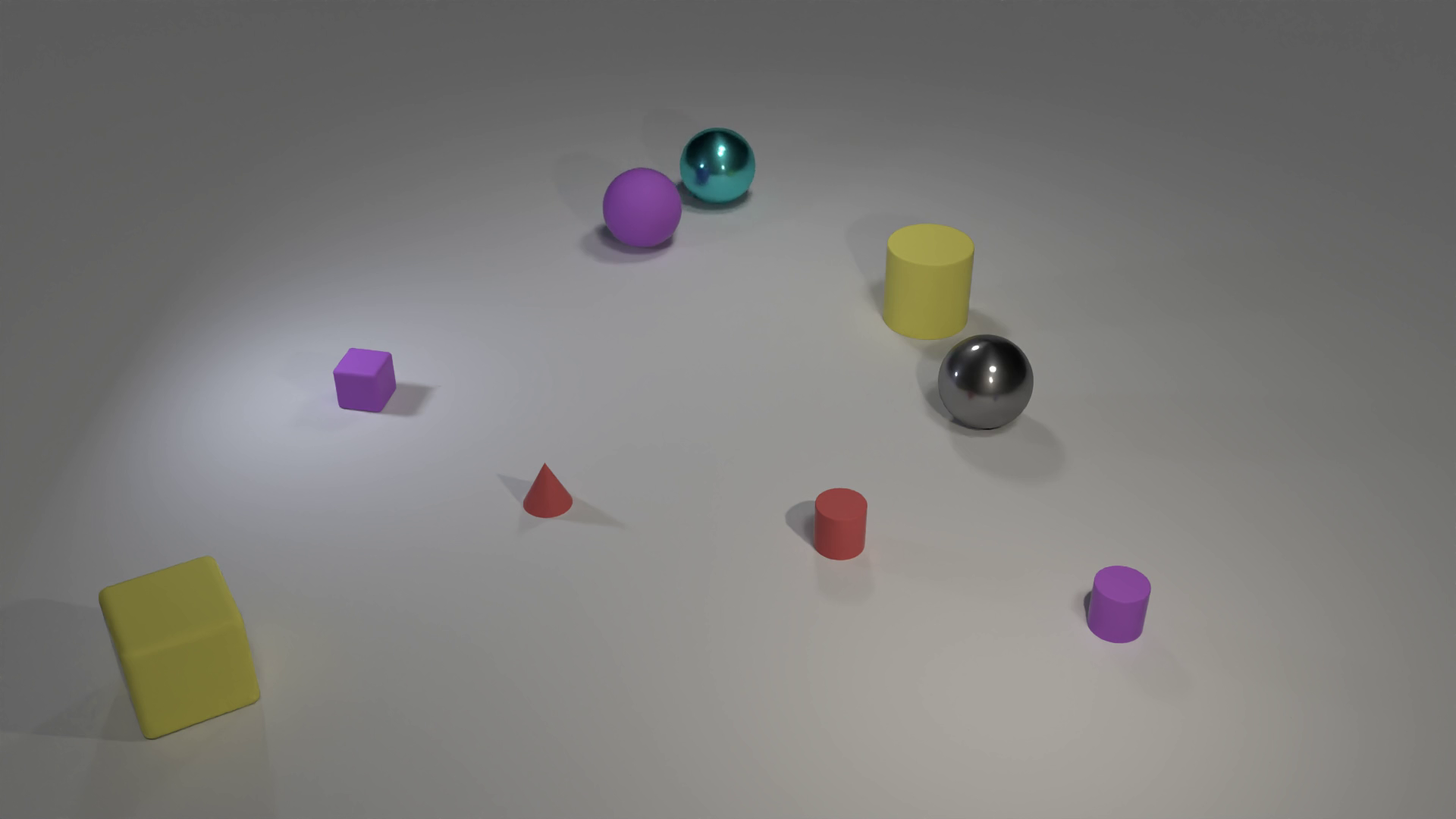

Figure 1: CycliST: A diagnostic Video Question Answering and Scene Understanding benchmark for Video Language Models. Because CycliST's scenes undergo periodic and smooth changes in position and visual attributes, they always return to each configuration at regular intervals.
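The caption's defining property, that each configuration recurs at regular intervals, amounts to s(t + T) = s(t) for some period T. The following Python sketch illustrates this for a few of the cycle types named in Figure 1 (orbiting, linear motion, size change, color change). It is not the CycliST scene generator. The `CyclicObject` class, its parameters, the time unit, and the sinusoidal and hue-wheel parameterizations are all hypothetical choices made for illustration.

```python
import colorsys
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class CyclicObject:
    """Hypothetical cyclic object (illustration only, not CycliST's generator).

    Every attribute depends on time t only through the phase (t mod T) / T,
    so the whole state repeats with period T by construction.
    """

    period: float              # T: time for one full cycle (assumed unit: seconds)
    orbit_radius: float = 2.0  # radius of a circular orbit around the origin
    amplitude: float = 1.5     # half-length of a linear back-and-forth path
    base_scale: float = 1.0    # mean object size
    scale_swing: float = 0.3   # maximum deviation from base_scale

    def phase(self, t: float) -> float:
        """Fraction of the current cycle that has elapsed, in [0, 1)."""
        return (t % self.period) / self.period

    def orbit_position(self, t: float) -> tuple[float, float]:
        """Orbiting: one full circle around the origin per period."""
        angle = 2.0 * math.pi * self.phase(t)
        return (self.orbit_radius * math.cos(angle), self.orbit_radius * math.sin(angle))

    def linear_position(self, t: float) -> float:
        """Linear motion: smooth oscillation along a straight line (the x-axis)."""
        return self.amplitude * math.sin(2.0 * math.pi * self.phase(t))

    def scale(self, t: float) -> float:
        """Size change: the object grows and shrinks around base_scale."""
        return self.base_scale + self.scale_swing * math.sin(2.0 * math.pi * self.phase(t))

    def color_rgb(self, t: float) -> tuple[float, float, float]:
        """Color change: the hue sweeps the full color wheel once per period."""
        return colorsys.hsv_to_rgb(self.phase(t), 1.0, 1.0)


def returns_to_state(obj: CyclicObject, t: float, tol: float = 1e-9) -> bool:
    """Check s(t + T) == s(t), up to float tolerance, for every modeled attribute."""
    now, later = t, t + obj.period
    pairs = [
        *zip(obj.orbit_position(now), obj.orbit_position(later)),
        (obj.linear_position(now), obj.linear_position(later)),
        (obj.scale(now), obj.scale(later)),
        *zip(obj.color_rgb(now), obj.color_rgb(later)),
    ]
    return all(math.isclose(a, b, abs_tol=tol) for a, b in pairs)


if __name__ == "__main__":
    ball = CyclicObject(period=4.0)
    for t in (0.0, 1.3, 2.0, 7.9):
        print(t, ball.orbit_position(t), ball.color_rgb(t), returns_to_state(ball, t))
```

Because every attribute is a function of the phase alone, the periodicity check holds by construction. The tolerance in `returns_to_state` only absorbs floating-point error in `t mod T`.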

1 Introduction

Cyclical patterns are a common feature of our environment, and recognizing and interpreting them is fundamental if future Artificial Intelligence models are to match human cognition. Whether it is traffic lights on the daily commute or the orbital period of satellites, repetitive events are all around us. These recurring phenomena are crucial in many fields. For example, in traffic management, recognizing the timing of traffic lights or the arrival of other vehicles at an intersection is essential for the safe navigation of autonomous vehicles (Gautam and Kumar, 2023). Similarly, by measuring the orbital period of the moonlet Dimorphos around the asteroid Didymos before and after the DART impact, scientists were able to precisely quantify its deflection (Johnson et al., 2023). In industrial environments, packages on conveyor belts exhibit cyclical patterns that require monitoring to detect and handle anomalies, such as incorrect timing or variations in package sizes. In healthcare, cyclical patterns such as heartbeats are analyzed through medical imaging to detect abnormalities (Gupta et al., 2022).

By thoroughly understanding and analyzing these cyclical patterns, we can, to some extent, save computational resources by compressing this repetitive information. That is, with a reliable model of a cyclical pattern, an agent may allocate fewer resources to monitoring the respective state's evolution and attend to more important matters instead. Although cyclical phenomena are widespread, current diagnostic datasets mainly cover a variety of video contexts but
