Network-on-Chip (NoC) design requires exploring a high-dimensional configuration space to satisfy stringent throughput and latency requirements. Traditional design space exploration techniques are often slow and struggle to handle complex, non-linear parameter interactions. This work presents a machine learning-driven framework that automates NoC design space exploration using BookSim simulations and reverse neural network models. Specifically, we compare three architectures for predicting optimal NoC parameters from target performance metrics: a Multi-Layer Perceptron (MLP), a Conditional Diffusion Model, and a Conditional Variational Autoencoder (CVAE). Our pipeline generates over 150,000 simulation data points across varied mesh topologies. The Conditional Diffusion Model achieved the highest predictive accuracy, with a mean squared error (MSE) of 0.463 on unseen data. Furthermore, the proposed framework reduces design exploration time by several orders of magnitude, making it a practical solution for rapid and scalable NoC co-design.

With the rise of multi-core and many-core processors, Network-on-Chip (NoC) architectures have become the backbone of on-chip communication [1]. However, designing an efficient NoC remains a major challenge because of the vast number of configuration parameters and their nontrivial interactions. Designers must trade off latency, throughput, and power when selecting routing algorithms, buffer sizes, and virtual channel counts for given traffic injection rates [2], [3]. Traditional NoC design methodologies rely heavily on brute-force simulation or heuristic algorithms, which are computationally expensive and slow to converge, especially for large design spaces [4]. Furthermore, these approaches typically operate in the forward direction, predicting performance from given parameters, and leave a more pressing inverse problem unaddressed: given a desired performance, what are the optimal parameters? Recent advances in machine learning (ML) offer new tools for automating hardware design [5], [6]. However, most prior work on ML for NoCs focuses on forward modeling or classification, with little attention to inverse design. Moreover, few studies compare how different ML models handle the many-to-one mapping that arises in reverse prediction.

An extensive literature survey has been carried out, and the state of the art is summarized under the following headings.

Traditional NoC design space exploration has relied primarily on simulation-based approaches combined with optimization heuristics. Early work applied genetic algorithms to NoC topology optimization [7], and subsequent research explored other metaheuristics, including particle swarm optimization [8] and simulated annealing [9]. Several analytical and statistical models have been proposed to reduce simulation overhead, including queueing-theory-based models for NoC performance prediction [10] and support vector regression-based models of NoC latency [11]. However, these models often sacrifice accuracy for speed, particularly when modeling complex traffic patterns and buffer dynamics.

The application of machine learning to hardware design optimization has gained significant traction in recent years. Neural network-based performance predictors have been developed for processor architectures [12]. Earlier work applied support vector machines to NoC optimization [13], focusing on forward prediction models. More recently, adaptive deep reinforcement learning has been applied to NoC routing algorithm design [14], but that work does not address the reverse parameter prediction problem.

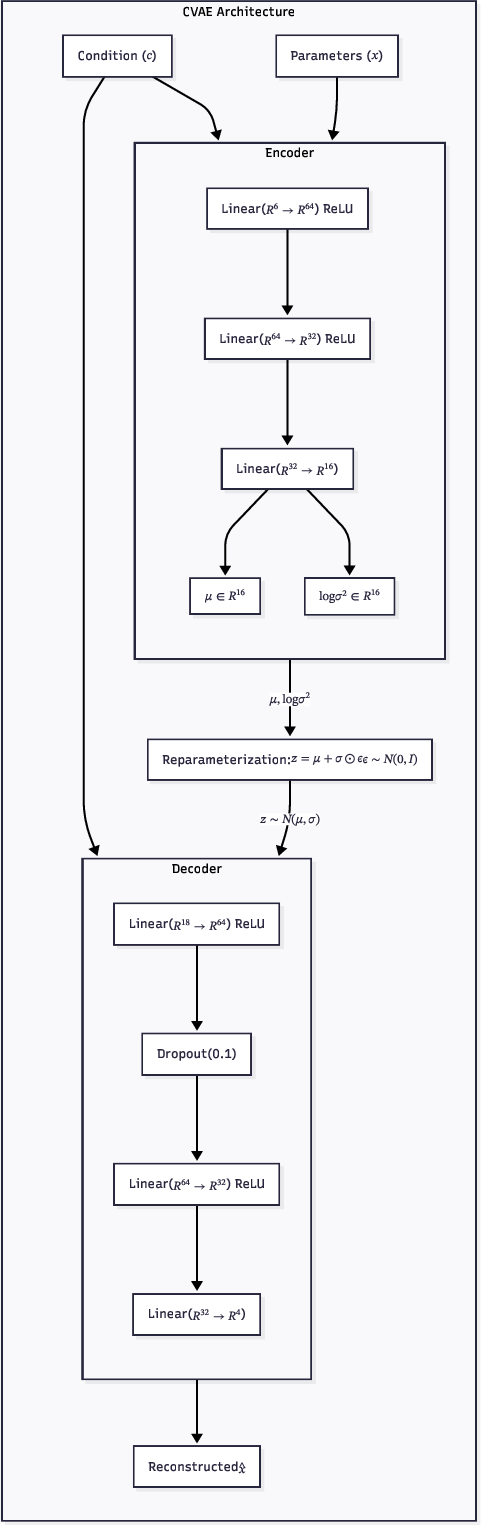

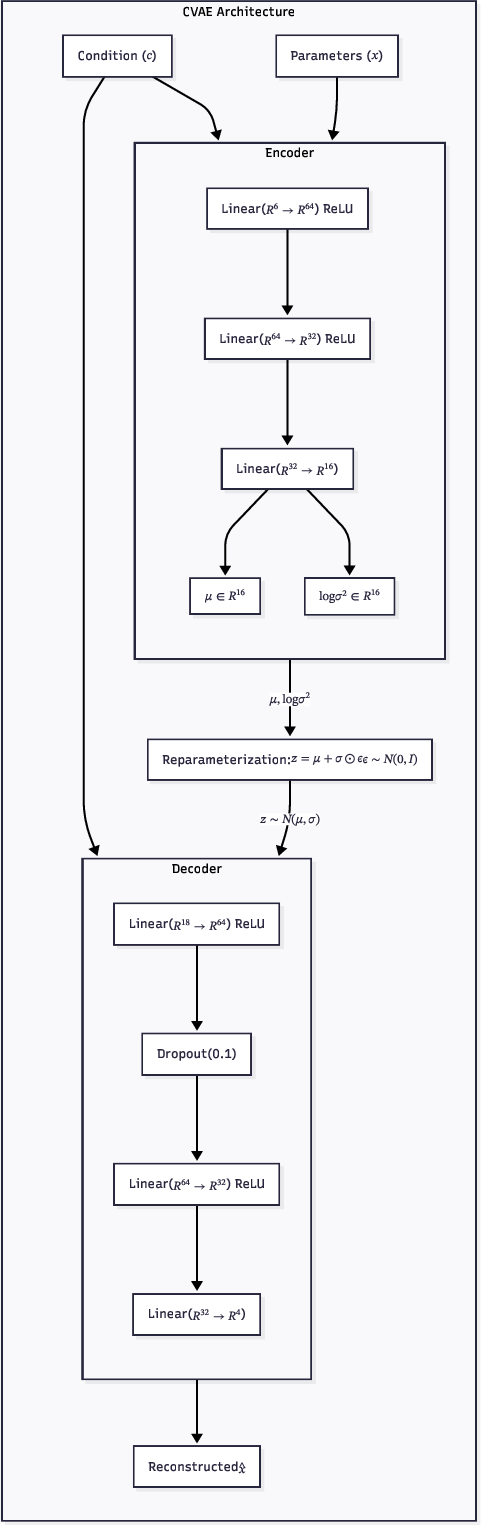

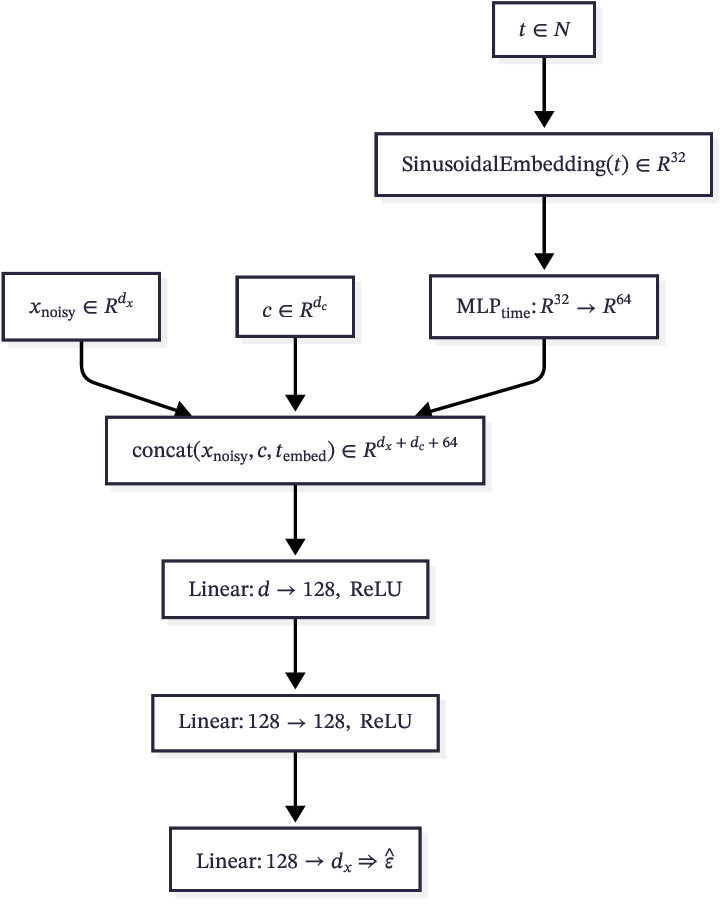

Generative models have shown promise in various design domains. Conditional Variational Autoencoders (CVAEs) have been successfully applied to circuit design [15]. Diffusion models, although newer, have demonstrated superior generation quality in image synthesis [16] and have begun to find applications in engineering design. However, no prior work has systematically compared these generative approaches for NoC parameter optimization, a significant gap that the proposed work addresses.

The contributions of the proposed work are summarized as follows:

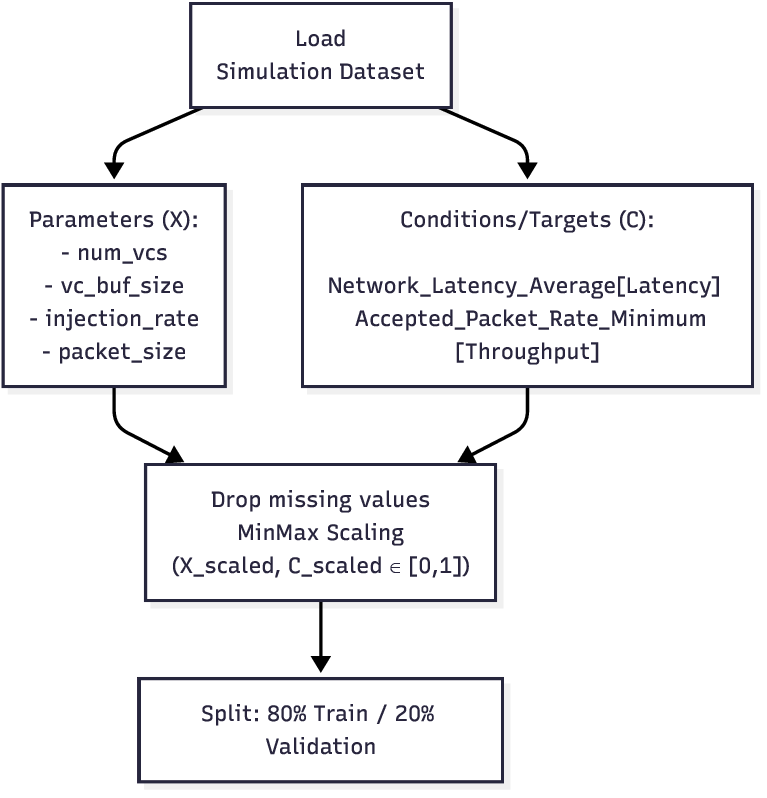

Automated Simulation Framework: This paper presents a Python-based framework for large-scale BookSim simulations with efficient configuration generation, parallel execution, and automated parsing of performance results.
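The three stages of such a framework (configuration generation, parallel execution, and output parsing) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code: the fixed base options (an 8x8 mesh with dimension-order routing and uniform traffic), the `./booksim` binary path, the exact wording of the BookSim summary lines matched by the regular expressions, and the helper names `make_config`, `parse_output`, `run_one`, and `sweep` are all hypothetical and should be checked against the BookSim build in use. BookSim takes the configuration file as its command-line argument, and runs are independent, so a thread pool that simply waits on subprocesses is enough to parallelize a sweep.

```python
import re
import subprocess
import tempfile
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path

# Assumed fixed options (8x8 mesh, dimension-order routing, uniform traffic);
# the paper's actual base settings are not specified in this section.
BASE_OPTIONS = {
    "topology": "mesh",
    "k": 8,
    "n": 2,
    "routing_function": "dor",
    "traffic": "uniform",
    "sim_type": "latency",
}


def make_config(params):
    """Render a BookSim-style `key = value;` config: base options plus swept parameters."""
    options = {**BASE_OPTIONS, **params}
    return "\n".join(f"{key} = {value};" for key, value in options.items()) + "\n"


# Assumed wording of BookSim's summary lines; verify against the build in use.
LATENCY_RE = re.compile(r"Packet latency average = ([-+0-9.eE]+)")
THROUGHPUT_RE = re.compile(r"Accepted packet rate average = ([-+0-9.eE]+)")


def parse_output(stdout):
    """Return (latency, throughput) from the last matching lines, or None if absent."""
    latencies = LATENCY_RE.findall(stdout)
    throughputs = THROUGHPUT_RE.findall(stdout)
    if not latencies or not throughputs:
        return None  # e.g. the run was unstable or aborted before printing totals
    return float(latencies[-1]), float(throughputs[-1])


def run_one(params, booksim_bin="./booksim"):
    """Write one config to a temporary file, run BookSim on it, and parse stdout."""
    with tempfile.TemporaryDirectory() as tmp:
        cfg_path = Path(tmp) / "noc.cfg"
        cfg_path.write_text(make_config(params))
        proc = subprocess.run([booksim_bin, str(cfg_path)],
                              capture_output=True, text=True)
    return params, parse_output(proc.stdout)


def sweep(param_list, workers=8, booksim_bin="./booksim"):
    """Run independent simulations concurrently; each thread just waits on a subprocess."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda p: run_one(p, booksim_bin), param_list))
```

A sweep is then just a list of parameter dictionaries, for example the Cartesian product of candidate `num_vcs`, `vc_buf_size`, `injection_rate`, and `packet_size` values built with `itertools.product`.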

Reverse Parameter Prediction: This paper reformulates NoC optimization as a supervised learning problem that predicts parameters from target latency and throughput specifications.
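Concretely, the reformulation swaps the roles of inputs and outputs in the simulation records: each run yields a pair $(X, P)$, and the reverse model is trained on $P \to X$. The sketch below assumes each record is a flat dictionary holding both the swept parameters and the parsed metrics. The min-max scaling step is a common preprocessing choice assumed here, not one stated in this section, and the function names are hypothetical.

```python
import numpy as np

PARAM_KEYS = ["num_vcs", "vc_buf_size", "injection_rate", "packet_size"]  # X
METRIC_KEYS = ["latency", "throughput"]                                   # P


def build_reverse_dataset(records):
    """Turn forward simulation records into reverse pairs: input P, target X."""
    P = np.array([[r[k] for k in METRIC_KEYS] for r in records], dtype=float)
    X = np.array([[r[k] for k in PARAM_KEYS] for r in records], dtype=float)
    return P, X


def min_max_scale(a):
    """Scale each column to [0, 1]; constant columns map to 0 to avoid dividing by zero."""
    lo = a.min(axis=0)
    span = a.max(axis=0) - lo
    span = np.where(span > 0, span, 1.0)
    return (a - lo) / span, lo, span


def unscale(a_scaled, lo, span):
    """Map model outputs back to physical parameter units."""
    return a_scaled * span + lo
```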

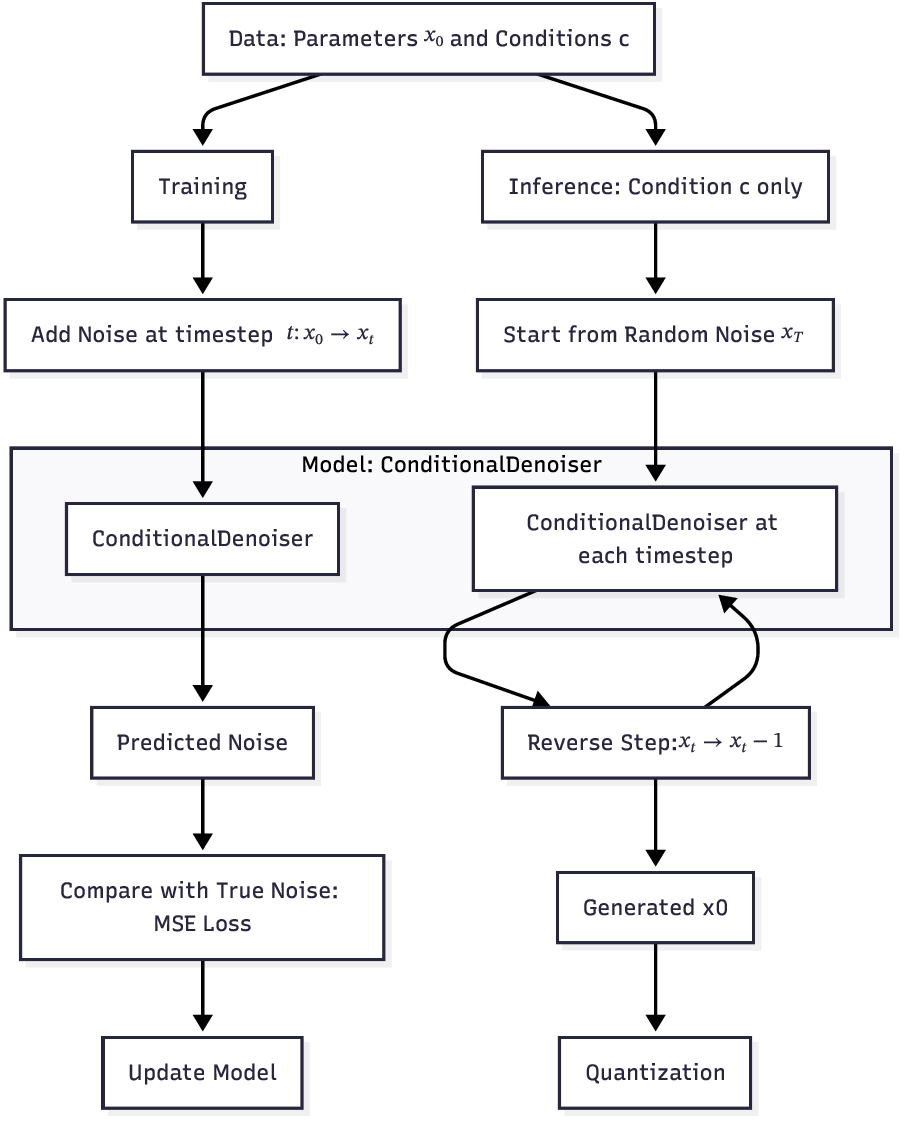

Architecture Comparison: This paper implements and compares three distinct neural network architectures (MLP, Conditional Diffusion Model, and CVAE), providing insights into their relative strengths for NoC design optimization.
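The comparison matters because the reverse mapping is many-to-one: several configurations can meet the same target. The toy example below uses a hypothetical forward model in which performance depends only on total buffering (`num_vcs * vc_buf_size`) to show what happens to a predictor trained with mean squared error. A linear least-squares fit stands in for the MLP here; any MSE-optimal predictor returns the conditional mean of the valid configurations, which need not itself be a valid configuration. Generative models such as the CVAE and the diffusion model instead learn a conditional distribution from which individual configurations can be sampled.

```python
import numpy as np


def toy_forward(num_vcs, vc_buf_size):
    # Hypothetical forward model: "latency" depends only on total buffering,
    # so distinct configurations can reach the same target.
    return 100.0 / (num_vcs * vc_buf_size)


# Two valid configurations (num_vcs, vc_buf_size) with identical performance.
configs = np.array([[2.0, 8.0], [4.0, 4.0]])
targets = np.array([toy_forward(*c) for c in configs])  # both 6.25

# Reverse regression P -> X with a bias term, fit by least squares (MSE).
design = np.column_stack([targets, np.ones_like(targets)])
weights, *_ = np.linalg.lstsq(design, configs, rcond=None)
prediction = design[0] @ weights  # conditional mean of the valid configs

print("predicted config:", prediction)
print("target latency:", targets[0], "achieved:", toy_forward(*prediction))
```

Both valid configurations provide 16 units of buffering, but their average, (3, 6), provides 18 and therefore misses the target.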

Comprehensive Evaluation: This paper demonstrates that the framework achieves fast and accurate reverse predictions, reducing manual exploration and paving the way for AI-guided NoC co-design.

The remainder of this paper is organized as follows. Section III describes the methodology and neural network architectures, Section IV presents the results and analysis, Section V discusses implications and limitations, and Section VI concludes with a roadmap for future work.

This paper formulates Network-on-Chip (NoC) design optimization as a reverse prediction problem. Given target performance specifications $P = [\text{latency}_{\text{target}}, \text{throughput}_{\text{target}}]$, we seek to predict the optimal configuration parameters $X = [\texttt{num\_vcs}, \texttt{vc\_buf\_size}, \texttt{injection\_rate}, \texttt{packet\_size}]$ that achieve the desired performance. Here, `num_vcs` is the number of virtual channels, `vc_buf_size` is the buffer size of each virtual channel, `injection_rate` is the packet injection rate, and `packet_size` is the packet length in flits. Formally, we learn a mapping $f: P \to X$ such that, when $X = f(P)$ is used in a NoC simulation, the resulting performance $P'$ minimizes the distance $\lVert P - P' \rVert$.
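The objective $\lVert P - P' \rVert$ also gives a simple way to check or rank candidate configurations, for example several samples drawn from a generative model for the same target. The sketch below assumes a Euclidean norm with optional per-metric scaling (latency and throughput differ in magnitude by orders), and uses `toy_evaluate`, a hypothetical closed-form stand-in for a BookSim run or a trained forward surrogate whose formula is invented purely for illustration. The helper names are likewise hypothetical.

```python
import math
from typing import Callable, Dict, Sequence, Tuple

Config = Dict[str, float]  # num_vcs, vc_buf_size, injection_rate, packet_size
Perf = Tuple[float, float]  # (latency, throughput)


def perf_distance(target: Perf, achieved: Perf, scale: Perf = (1.0, 1.0)) -> float:
    """Scaled Euclidean distance ||P - P'||; the per-metric scaling is an assumption."""
    return math.sqrt(sum(((t - a) / s) ** 2 for t, a, s in zip(target, achieved, scale)))


def select_best(target: Perf, candidates: Sequence[Config],
                evaluate: Callable[[Config], Perf], scale: Perf = (1.0, 1.0)) -> Config:
    """Return the candidate X whose evaluated performance P' is closest to the target P."""
    return min(candidates, key=lambda x: perf_distance(target, evaluate(x), scale))


def toy_evaluate(x: Config) -> Perf:
    # Hypothetical stand-in for a BookSim run or a trained forward surrogate.
    latency = 20.0 + 40.0 * x["injection_rate"] / x["num_vcs"]
    throughput = min(x["injection_rate"], 0.4)
    return latency, throughput


candidates = [
    {"num_vcs": 2, "vc_buf_size": 4, "injection_rate": 0.1, "packet_size": 4},
    {"num_vcs": 4, "vc_buf_size": 8, "injection_rate": 0.3, "packet_size": 4},
    {"num_vcs": 2, "vc_buf_size": 8, "injection_rate": 0.5, "packet_size": 4},
]
best = select_best((23.0, 0.3), candidates, toy_evaluate)
print("selected configuration:", best)
```

Replacing `toy_evaluate` with an actual simulation closes the loop in the formulation above: the reverse model proposes $X$, and simulation measures the resulting $P'$.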

This paper provides a framework that
