While recent vision-language models (VLMs) demonstrate strong image understanding, their ability to "think with images", i.e., to reason through multi-step visual interactions, remains limited. We introduce VISTA-Gym, a scalable training environment for incentivizing tool-integrated visual reasoning capabilities in VLMs. VISTA-Gym unifies diverse real-world multimodal reasoning tasks (7 tasks from 13 datasets in total) with a standardized interface for visual tools (e.g., grounding, parsing), executable interaction loops, verifiable feedback signals, and efficient trajectory logging, enabling visual agentic reinforcement learning at scale. While recent VLMs exhibit strong text-only reasoning, both proprietary and open-source models still struggle with tool selection, invocation, and coordination. With VISTA-Gym, we train VISTA-R1 to interleave tool use with agentic reasoning via multi-turn trajectory sampling and end-to-end reinforcement learning. Extensive experiments across 11 public reasoning-intensive VQA benchmarks show that VISTA-R1-8B outperforms state-of-the-art baselines of similar size by 9.51%-18.72%, demonstrating that VISTA-Gym is an effective training ground for unlocking the tool-integrated reasoning capabilities of VLMs.

Recent progress in VLMs has demonstrated strong performance across tasks such as visual question answering, multimodal reasoning, and grounded mathematical problem solving [3,14,20,68]. Many of these advancements stem from incorporating text-based reasoning paradigms, particularly Chain-of-Thought (CoT) [73] and reinforcement learning (RL) [18], which decompose complex tasks into intermediate textual reasoning steps and leverage outcome-based rewards to refine reasoning quality [22,32,36,38,54,62,63,89].

However, most existing VLM reasoning processes still rely on static visual embeddings and shallow cross-modal alignment. As a result, their text-only reasoning struggles to capture the fine-grained visual structures, spatial relationships, and quantitative dependencies present in real-world scenes [16,90]. These limitations underscore the need for thinking-with-image paradigms [67], where reasoning is tightly coupled with visual perception, enabling richer cross-modal interaction and step-by-step visual reasoning.

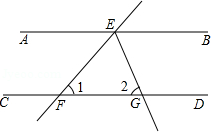

To enhance visual-centric reasoning and encourage thinking-with-image behaviors in VLMs, tool-integrated reasoning (TIR) [19,79,90] has recently been introduced. TIR equips models with external tools, such as grounding, zoom-in, and search, to facilitate fine-grained perception and reasoning over object interactions. Despite its promise, current open-source VLMs remain inadequate at leveraging these tools for effective reasoning, as shown in Figure 1. This limitation highlights the urgent need for an effective training environment and for strategies that teach models to select, invoke, and coordinate visual tools dynamically. While several studies are dedicated to improving the tool-usage capabilities of VLMs [16,66,74,90], these works are narrow in scope and often confined to specific tasks (e.g., using image search for knowledge or zoom-in for object grounding). In parallel, recent efforts in gaming and robotics [9,70] have introduced unified environments for training VLM agents. These platforms provide controllable dynamics, grounded feedback, and structured task spaces, offering clear advantages for robot learning and conceptually aligning with TIR. However, the simulation of tool-integrated thinking in VLMs, especially for open-domain visual reasoning, remains largely underexplored.

Motivated by these challenges, we introduce VISTA-Gym (Visual-centric Tool-integrated Agentic training environment), a scalable, agentic training environment designed to systematically enhance the reasoning and decision-

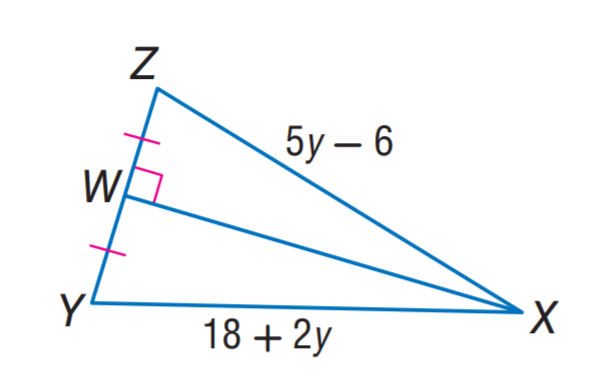

Internvl3 w/ T Internvl3 w/ R Internvl3 w/ T&R (b) Open-source VLM (Internvl3-8B).

Figure 1. Directly augmenting VLMs with tools significantly degrades accuracy (w/ T), yet intrinsic reasoning offers limited gains on complex VQA (w/ R). Supplying tool-selection prior knowledge and interleaving reasoning with tool execution improve performance (w/ T&R); gains are task-dependent for commercial VLMs, while small open-source VLMs remain particularly struggling.

making capabilities of VLM agents across complex, realworld scenarios. Specifically, VISTA-Gym wraps up visual tool operations and provides textual feedback on receiving tool call commands by VLMs, and features:

• Diverse multi-modal reasoning tasks. VISTA-Gym provides a comprehensive VQA suite spanning 7 reasoning tasks across 13 public datasets, supporting training and evaluation of agents with strong generalization and tool-integration skills.
• Unified, extensible tool interface. VISTA-Gym exposes a standardized API over 26 pre-defined tools that support perception, symbolic manipulation, chart and document interpretation, grounding high-level reasoning in structured intermediate results.
• Scalable interactive infrastructure. VISTA-Gym accelerates agent training via multithreading, parallel execution, and sequential sampling, enabling efficient trajectory collection and large-scale automated evaluation compatible with diverse agent scaffolds.

Building on VISTA-Gym, we further develop VISTA-R1, a VLM-based agent trained for robust, tool-augmented reasoning. Across five in-domain and six out-of-domain reasoning-intensive VQA benchmarks, VISTA-R1-8B surpasses state-of-the-art open-source baselines of comparable size by 9.51%-18.72%. VISTA-Gym proves to be an effective and scalable solution for developing VLM agents that can robustly interleave reasoning and tool use to solve complex, multi-step visual problems.
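To make the ingredients listed above concrete (a standardized tool interface, an executable interaction loop, a verifiable feedback signal, trajectory logging, and parallel collection), the following is a minimal toy sketch. It is not the VISTA-Gym API: the class `ToyToolEnv`, the action dictionaries, the `count_objects` tool, and the scripted policy that stands in for a VLM are all hypothetical names chosen for illustration, and exact-match reward is only one possible verifiable signal.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# Hypothetical tool signature: a dict of arguments in, textual feedback out.
Tool = Callable[[dict], str]


@dataclass
class ToyToolEnv:
    """Toy stand-in for a tool-integrated VQA environment (illustrative only)."""
    question: str
    answer: str
    tools: Dict[str, Tool]
    max_turns: int = 4
    log: List[Tuple[str, str]] = field(default_factory=list)  # (action, observation)
    turns: int = 0

    def reset(self) -> str:
        self.log.clear()
        self.turns = 0
        return self.question

    def step(self, action: dict) -> Tuple[str, float, bool]:
        """Execute one action and return (observation, reward, done)."""
        self.turns += 1
        if action["type"] == "answer":
            # Verifiable outcome reward: exact match against the reference answer.
            reward = 1.0 if action["text"].strip() == self.answer else 0.0
            obs, done = "episode finished", True
        else:
            tool = self.tools.get(action["name"])
            # Unknown tools return an error string so the agent can recover.
            if tool is None:
                obs = f"error: unknown tool {action['name']!r}"
            else:
                obs = tool(action.get("args", {}))
            reward, done = 0.0, self.turns >= self.max_turns
        self.log.append((str(action), obs))
        return obs, reward, done


def scripted_policy(observation: str, turn: int) -> dict:
    """Placeholder for a VLM: call a tool once, then answer with its output."""
    if turn == 0:
        return {"type": "tool", "name": "count_objects", "args": {"label": "cat"}}
    return {"type": "answer", "text": observation}


def rollout(env: ToyToolEnv) -> float:
    """Run one multi-turn episode and return its total reward."""
    obs, total, done, turn = env.reset(), 0.0, False, 0
    while not done:
        obs, reward, done = env.step(scripted_policy(obs, turn))
        total += reward
        turn += 1
    return total


def make_env(answer: str) -> ToyToolEnv:
    tools = {"count_objects": lambda args: "2"}  # dummy tool with a fixed output
    return ToyToolEnv("How many cats are in the image?", answer, tools)


def collect(envs: List[ToyToolEnv], workers: int = 4) -> List[float]:
    """Collect episode rewards in parallel, one thread per environment."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(rollout, envs))
```

Calling `collect([make_env("2"), make_env("3")])` runs two episodes in parallel; each environment keeps its own `log` of action and observation pairs, which is the kind of trajectory record an RL trainer would consume. Returning tool errors as observations, rather than raising exceptions, is a design choice made here so that a failed call stays inside the interaction loop.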

RL for VLM Reasoning. Improving the reasoning capabilities of VLMs has been an active research front. [78] synthesize reasoning chains by distilling reasoning knowledge from teacher models. Inspired by the success of R1-style training [18], several works leverage RL [22,31,35,38,61] to improve VLM reasoning capabilities on visual tasks, including general visual understanding and mathematical reasoning.

RL for Tool-Integrated Reasoning in VLMs. To better characterize the visual information, recent works exp
