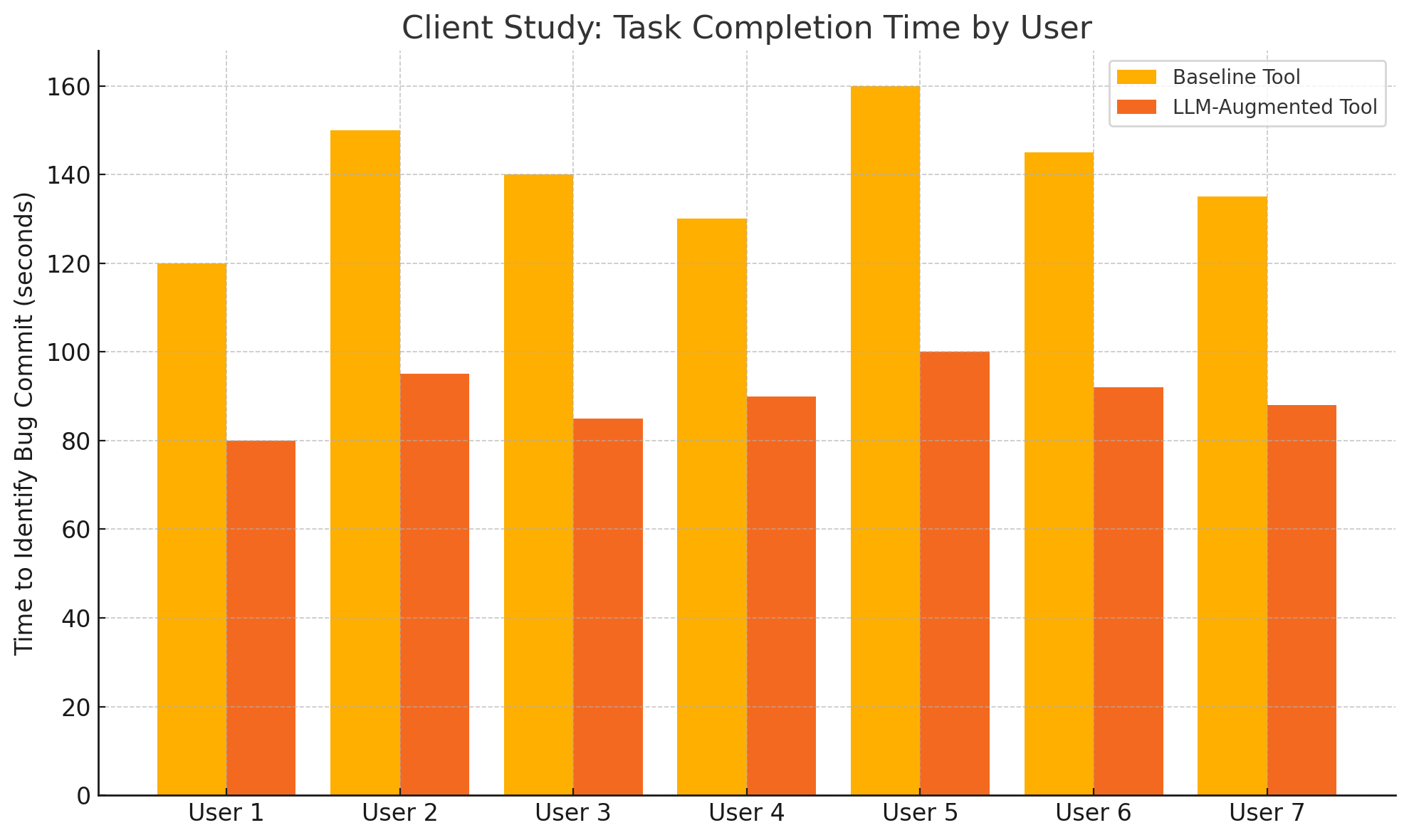

We present a novel framework that integrates Large Language Models (LLMs) into the Git bisect process for semantic fault localization. Traditional bisect assumes deterministic predicates and binary failure states assumptions often violated in modern software development due to flaky tests, nonmonotonic regressions, and semantic divergence from upstream repositories. Our system augments bisect traversal with structured chain of thought reasoning, enabling commit by commit analysis under noisy conditions. We evaluate multiple open source and proprietary LLMs for their suitability and fine tune DeepSeekCoderV2 using QLoRA on a curated dataset of semantically labeled diffs. We adopt a weak supervision workflow to reduce annotation overhead, incorporating human in the loop corrections and self consistency filtering. Experiments across multiple open source projects show a 6.4 point absolute gain in success rate from 74.2 to 80.6 percent, leading to significantly fewer failed traversals and by experiment up to 2x reduction in average bisect time. We conclude with discussions on temporal reasoning, prompt design, and finetuning strategies tailored for commit level behavior analysis.

As artificial intelligence (AI) continues to transform software engineering, developers are spending less time writing new code and more time verifying, debugging, and tracing bug regressions [4]. Tools like Git's bisect provide a principled way to localize faults by binary search over commit history [8]; yet their effectiveness hinges on idealized assumptions: that tests behave deterministically, failures persist monotonically, and behavior can be captured in a simple binary true-or-false predicate [2].

In practice, there are more scenarios where these assumptions do not hold. Flaky tests, partial regressions, and non-monotonic error propagation are common in large code repositories, particularly those involving parallel development, upstream dependencies, and downstream forks [1]. Developers are frequently left to manually examine semantic changes across commits to understand where behavior diverged.

We propose a Large Language Model (LLM) augmented bisect framework that integrates an LLM into the git bisect commit traversal process. At each step, the model inspects code diffs, reasons about potential behavioral impact, and classifies commits as “good” or “bad” using structured chain-of-thought prompts.

Unlike prior fault localization tools such as AutoFL and LLMAO-which analyze static snapshots of code [10]-our system dynamically traverses version history, enabling temporal localization of regressions. We fine-tune open-source LLMs on a curated dataset of semantically tagged code changes using a two-stage pipeline. Our results show that this approach reduces bisect steps by up to 2× and improves robustness under flaky or heuristic predicates.

Git’s bisect command enables automated fault localization by performing a binary search over a project’s commit history [8]. Let C = {c 0 , c 1 , . . . , c n } be a totally ordered sequence of commits, where c 0 is the oldest and c n is the most recent. The goal of bisect is to identify a commit c k that first introduces a behavioral regression.

A user-defined predicate function

determines whether each commit exhibits correct behavior:

Under the monotonicity assumption-that all commits prior to c k are good and all commits from c k onward are bad-the bisect algorithm localizes the offending commit in O(log n) steps [2].

In practice, the monotonicity assumption rarely holds [2]. Regressions may exhibit flaky behavior, tests may be nondeterministic, and the predicate function P may not provide consistent outputs across runs. These issues introduce a flaky region between the last known-good and first known-bad commits, where the predicate behaves inconsistently.

Moreover, real-world predicates are often heuristic or behavior-based rather than binary pass/fail. Examples include performance degradations, UI glitches, or semantic regressions that are difficult to capture in traditional test frameworks. Consequently, P may act as a noisy oracle:

thereby violating the monotonicity requirement and breaking the correctness guarantees of binary search.

Modern software development frequently involves parallel evolution of forked repositories. A canonical example is Microsoft Edge and Google Chromium, which share a common codebase but diverge in platform-specific adaptations, telemetry systems, and feature sets [1].

In such cases, a downstream fork may introduce changes to meet its unique requirements. When updates from the upstream are later merged, interactions between upstream and downstream changes can introduce regressions that are specific to the fork. Because the upstream remains functional, the developer has no straightforward way to determine which of their prior changes caused the conflict-making it difficult to define a consistent predicate over time.

Recent advances have explored the use of LLMs for fault localization. Tools such as AutoFL and LLMAO leverage LLMs to identify potentially faulty code regions by analyzing a static snapshot of the codebase. While effective in controlled settings, these methods fail to exploit the temporal nature of version histories.

Moreover, they assume the existence of a clean labeling function or test oracle. In environments with flaky tests or partial regressions, such assumptions break down [6]. As a result, these methods cannot precisely localize the commit responsible for the regression when behavior evolves across time.

Our work builds on these insights by integrating LLMs directly into the bisect process, enabling dynamic commit-bycommit analysis under real-world noise and inconsistency.

To select the best base model for our use case, we evaluate candidates based on their ability to write correct high-level code using the Pass@1 metric-where the model is given a single chance to solve each of two benchmark tests [5]. Benchmark results are shown in Table I • MBPP: A set of 1,000 basic programming tasks that test a model’s ability to generate correct code for common problems. High scores reflect strong general-pur

This content is AI-processed based on open access ArXiv data.