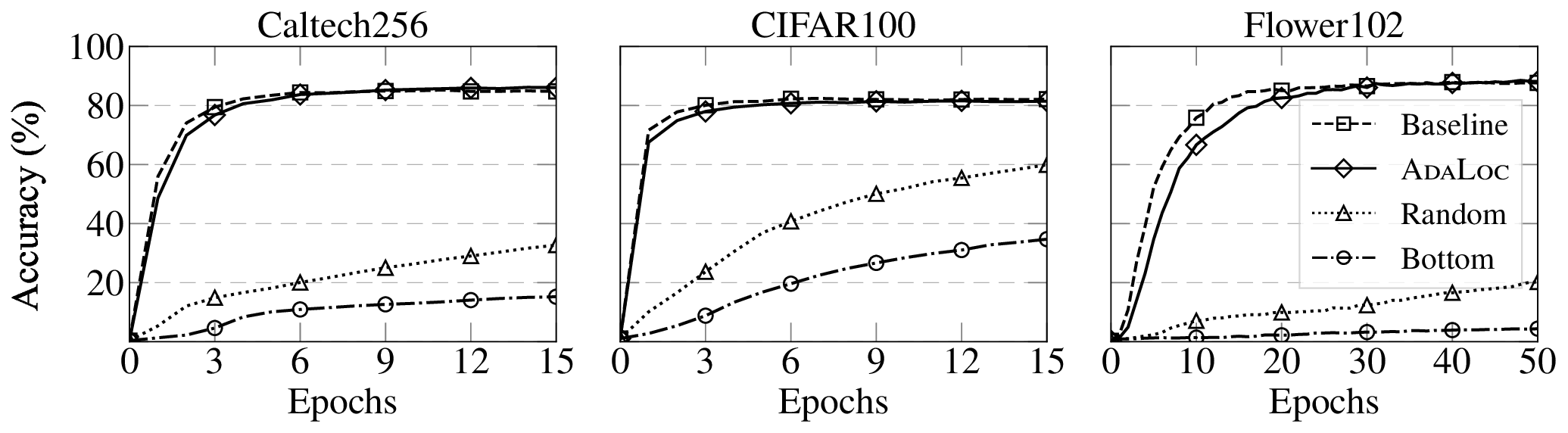

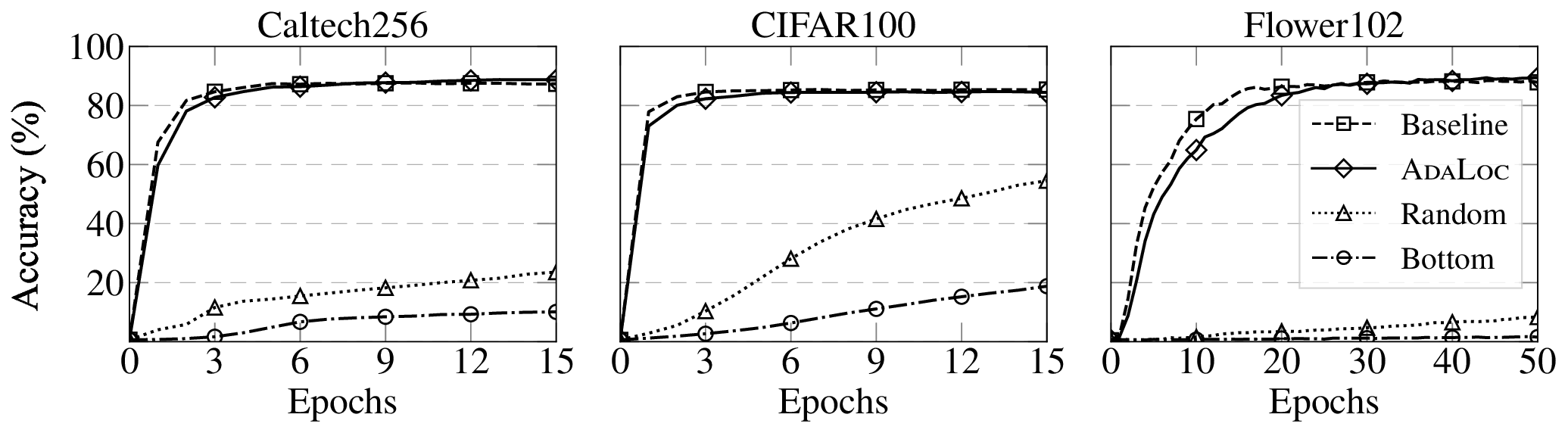

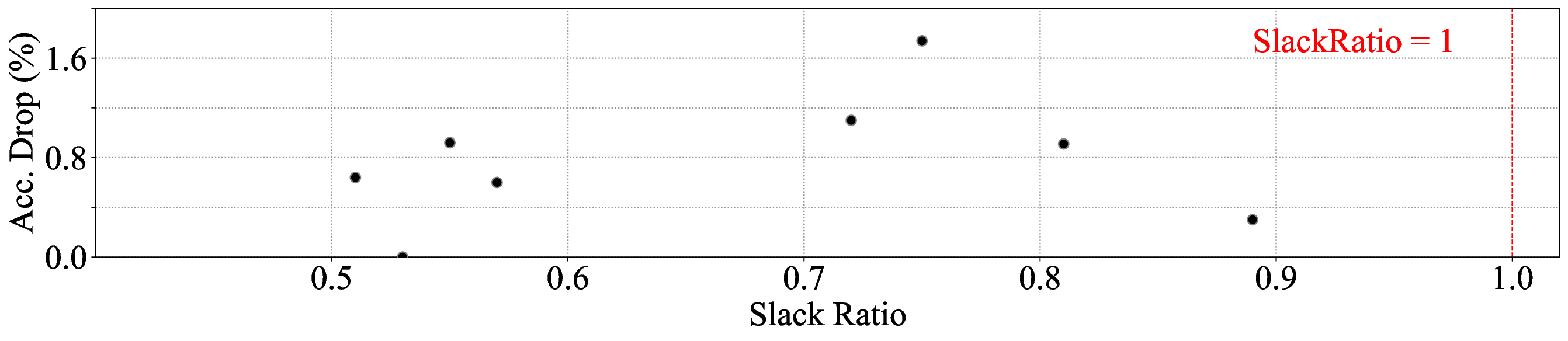

Deep neural networks (DNNs) have become valuable intellectual property of model owners, due to the substantial resources required for their development. To protect these assets in the deployed environment, recent research has proposed model usage control mechanisms to ensure models cannot be used without proper authorization. These methods typically lock the utility of the model by embedding an access key into its parameters. However, they often assume static deployment, and largely fail to withstand continual post-deployment model updates, such as fine-tuning or task-specific adaptation. In this paper, we propose ADALOC, to endow key-based model usage control with adaptability during model evolution. It strategically selects a subset of weights as an intrinsic access key, which enables all model updates to be confined to this key throughout the evolution lifecycle. ADALOC enables using the access key to restore the keyed model to the latest authorized states without redistributing the entire network (i.e., adaptation), and frees the model owner from full re-keying after each model update (i.e., lock preservation). We establish a formal foundation to underpin ADALOC, providing crucial bounds such as the errors introduced by updates restricted to the access key. Experiments on standard benchmarks, such as CIFAR-100, Caltech-256, and Flowers-102, and modern architectures, including ResNet, DenseNet, and ConvNeXt, demonstrate that ADALOC achieves high accuracy under significant updates while retaining robust protections. Specifically, authorized usages consistently achieve strong task-specific performance, while unauthorized usage accuracy drops to near-random guessing levels (e.g., 1.01% on CIFAR-100), compared to up to 87.01% without ADALOC. This shows that ADALOC can offer a practical solution for adaptive and protected DNN deployment in evolving real-world scenarios.

Major AI and cloud providers such as Google, Microsoft, and Amazon have increasingly emphasized hardware-backed execution as a foundational component of secure AI deployment. For example, Google's recent "Private AI Compute" places models such as Gemini within TPU-based enclaves, which harden the computational environment against external compromise [1]. Although these trusted execution infrastructures substantially improve platform security, they secure only the runtime environment and not the model artifact itself. Once a high-value model is distributed, whether through licensing, integration into downstream pipelines, or deployment on customer-managed infrastructure, a fully functional copy can leave the enclave boundary. At that point, intellectual-property control becomes structurally fragile because unrestricted copies may propagate across organizations or jurisdictions, and dishonest parties can continue operating, reselling, or embedding the model at full fidelity [2]-[5]. Such leakage compromises the model owner's competitive advantage, devalues the substantial computational and data investment embodied in the model, and exposes an inherent limitation in relying solely on hardware isolation or access control. In summary, hardware-centric protections do not mitigate misuse once the model is no longer confined to the trusted platform.

♢ Zihan Wang is supported by the Google PhD Fellowship.

This gap indicates a fundamental requirement that goes beyond enclave-style infrastructure, namely a model-intrinsic mechanism for usage control that can restrict a model’s utility even when the hardware boundary is no longer present. Our work adopts this perspective by regulating the functional capacity of the model itself rather than relying solely on control over the execution environment. We demonstrate that hardware enclaves and model-level usage control are complementary layers. Enclaves provide secure storage and execution of secret material, while usage-control mechanisms ensure that the model remains nonfunctional without proper authorization. This layered view forms the central motivation of our work and establishes ADALOC as a missing component in the contemporary AI model-protection stack.

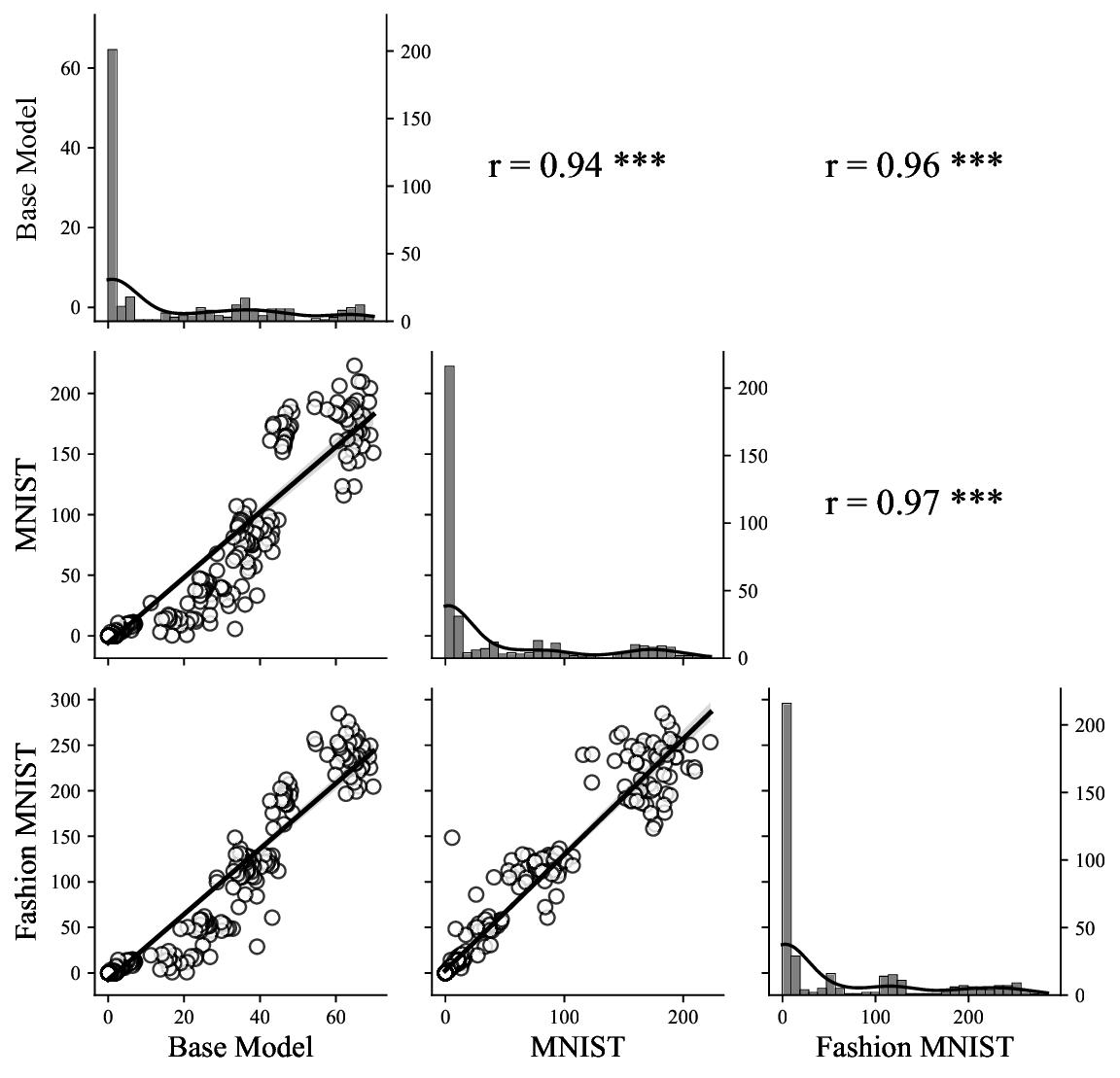

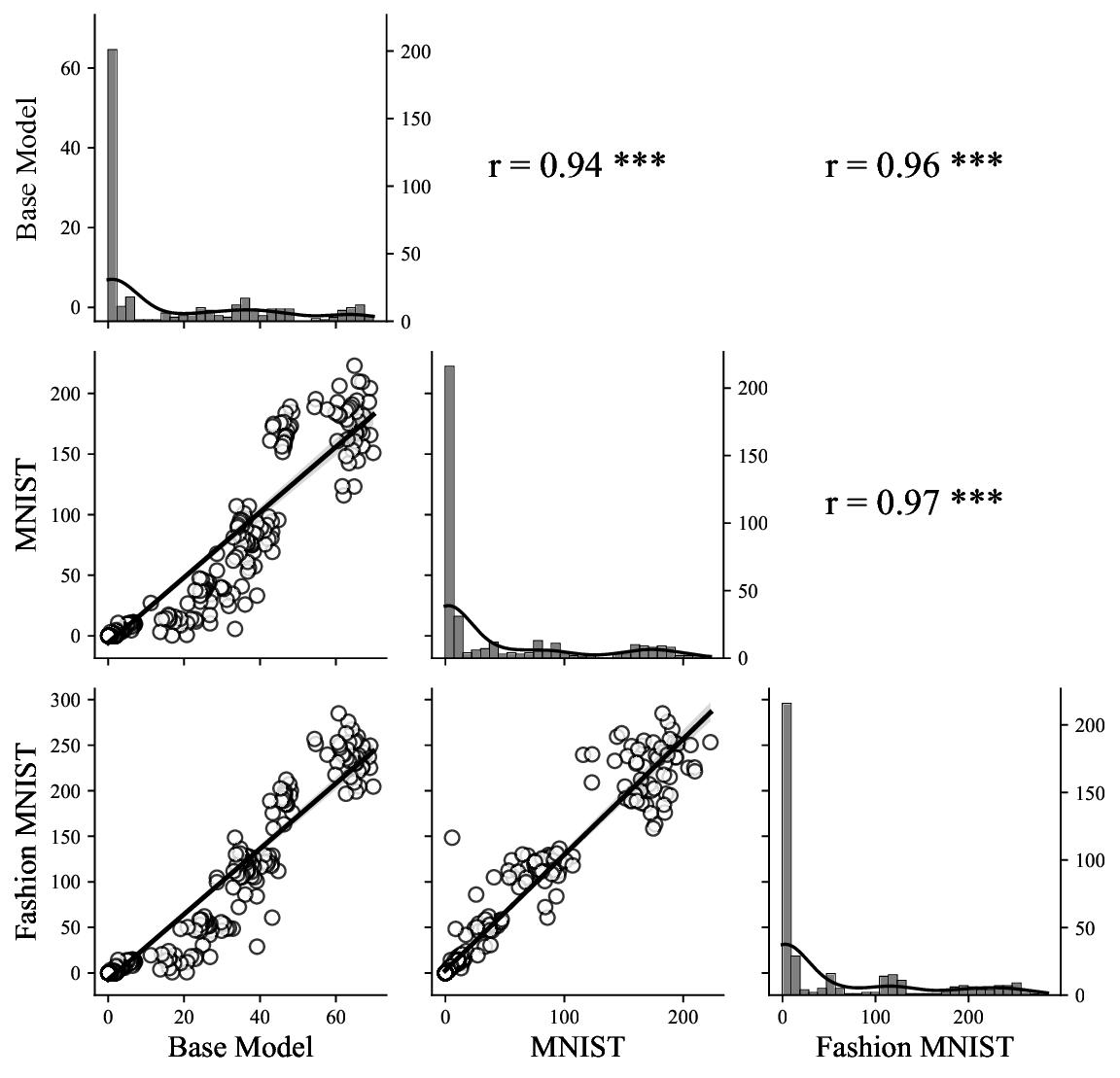

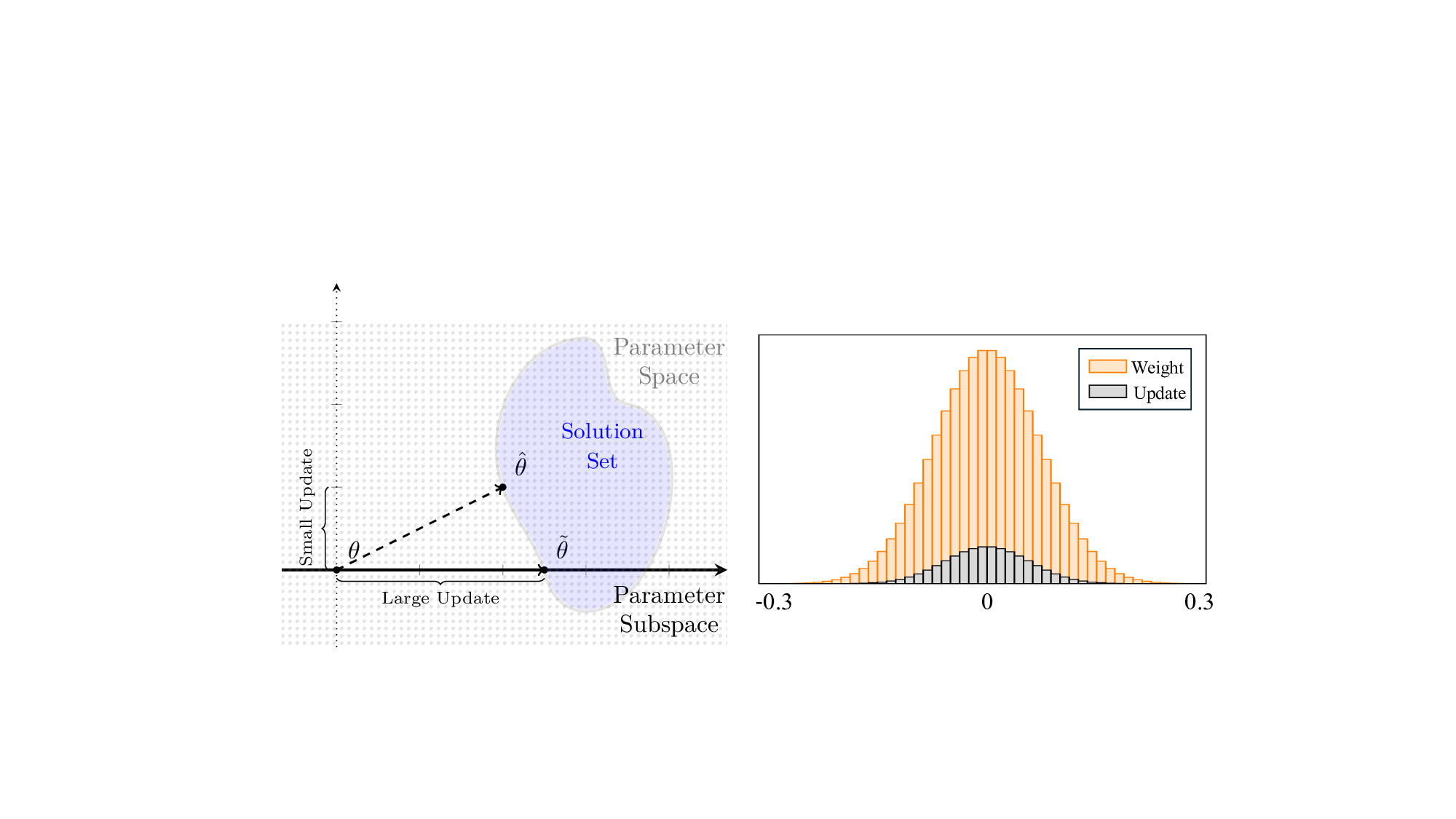

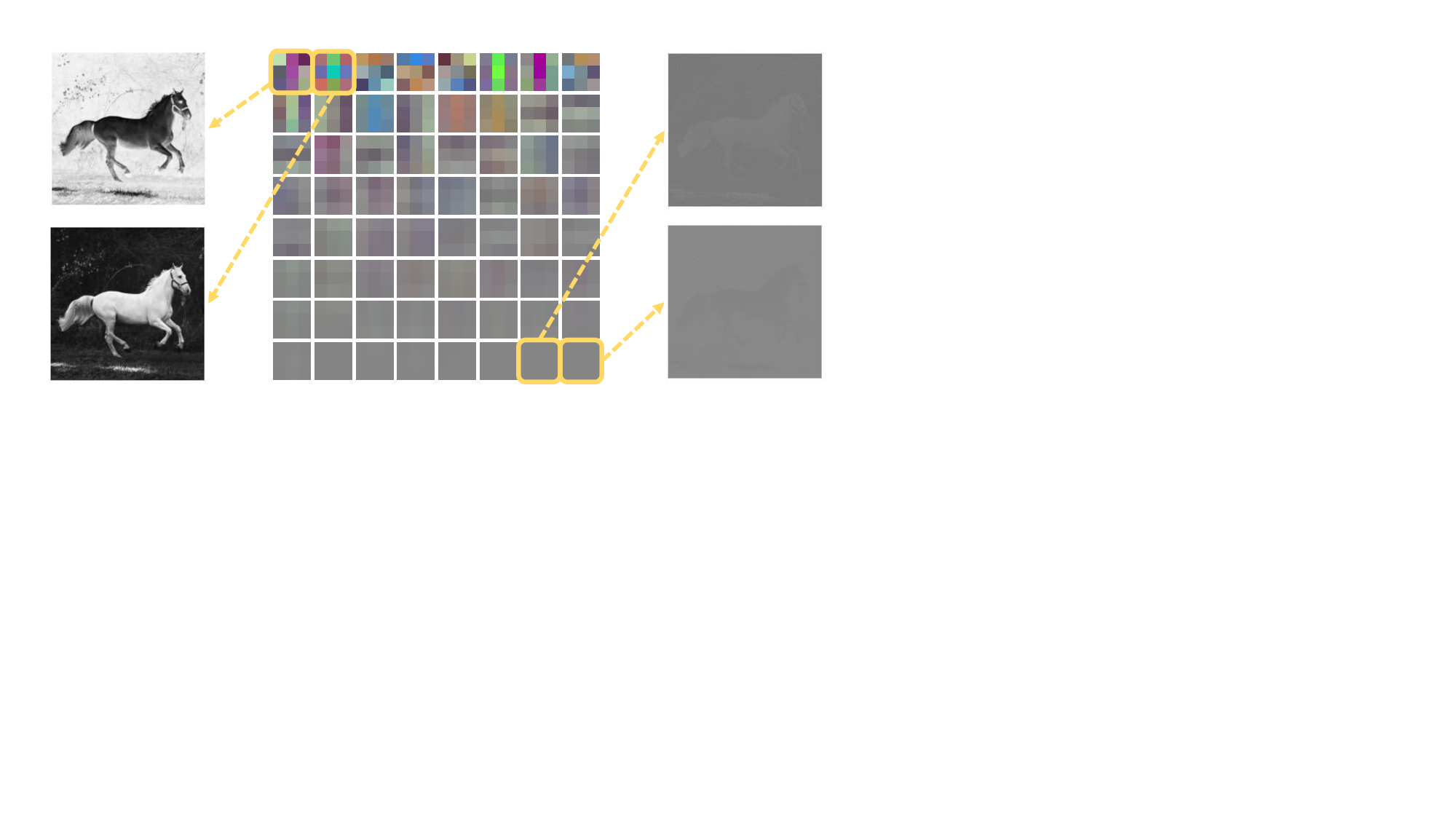

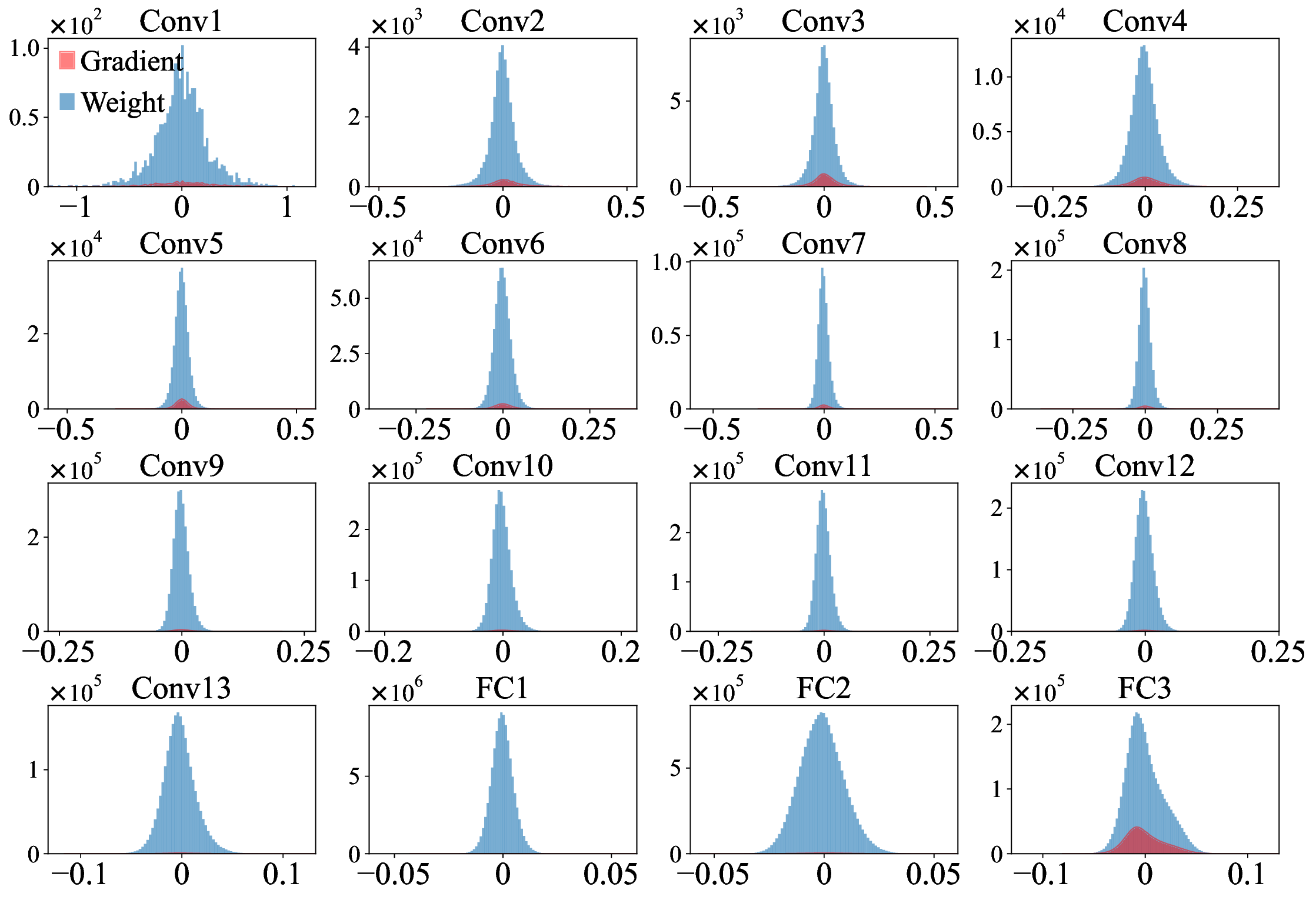

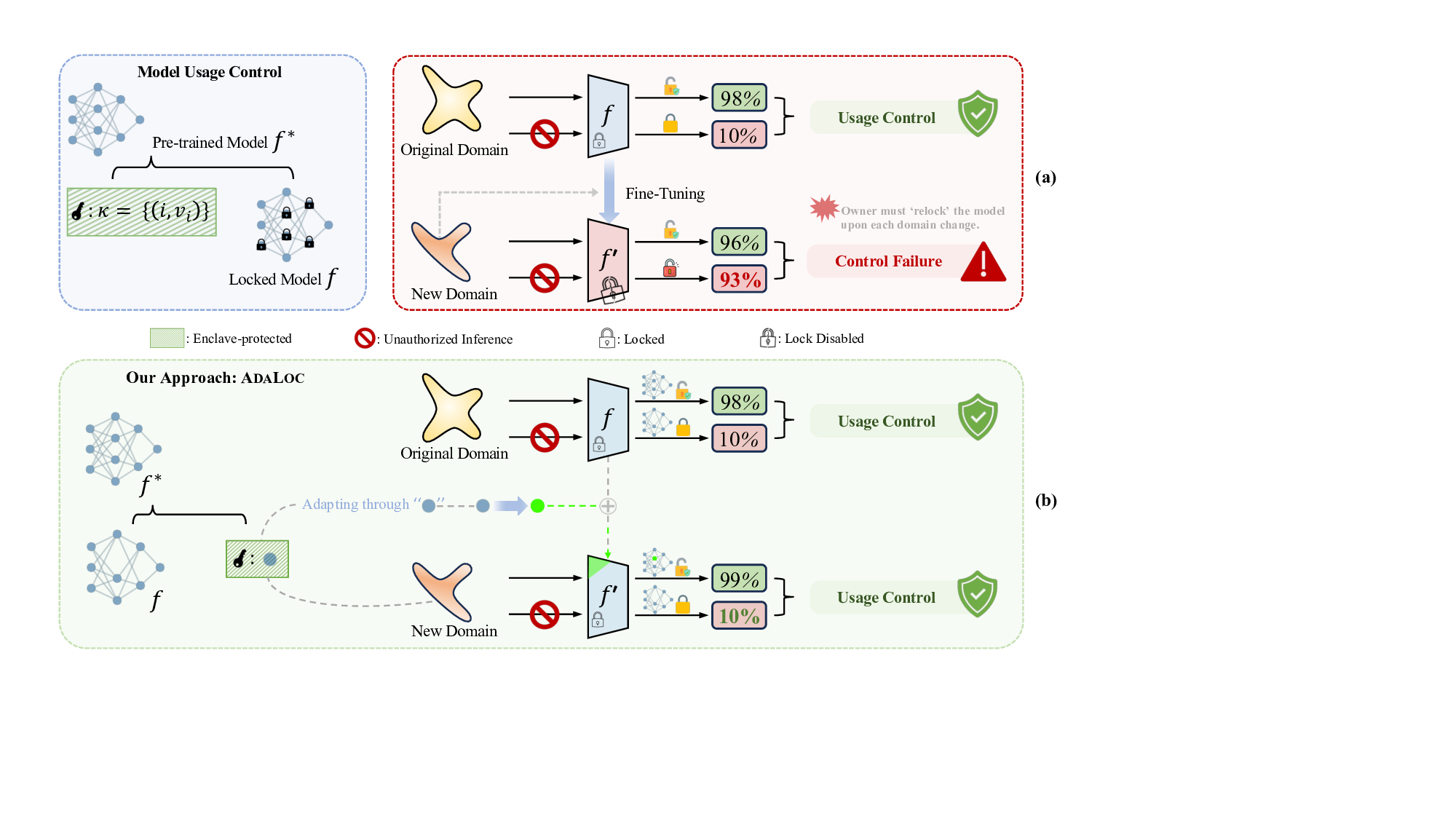

Recent research has approached model usage control through model keying [2], [6]-[9]. Keying ensures that, without the correct access key, the accuracy of the model collapses to near-random guessing, making unauthorized use effectively worthless. Data-based keying mechanisms, such as Pyone et al. [6] and Chen et al. [7], embed a secret key into the training data during preprocessing and train the model to operate only on key-preprocessed inputs. Model-based mechanisms, such as Xue et al. [8] and Zhou et al. [2], embed the key directly into internal model parameters, undermining the utility of the entire model unless the key is known by the model controller for neutralization. These approaches mainly target the accessibility aspect of model usage control and have demonstrated their effectiveness in locking model utility in static deployment. However, they largely assume that the model parameters remain unchanged after deployment.

Figure 1(b): Our approach. Instead of relying on any “external” locking mechanism, we designate a compact block of neurons themselves as κ and restrict all updates to that block. Because the rest of the network never changes, the lock remains intact and f′ is still unusable without the key.

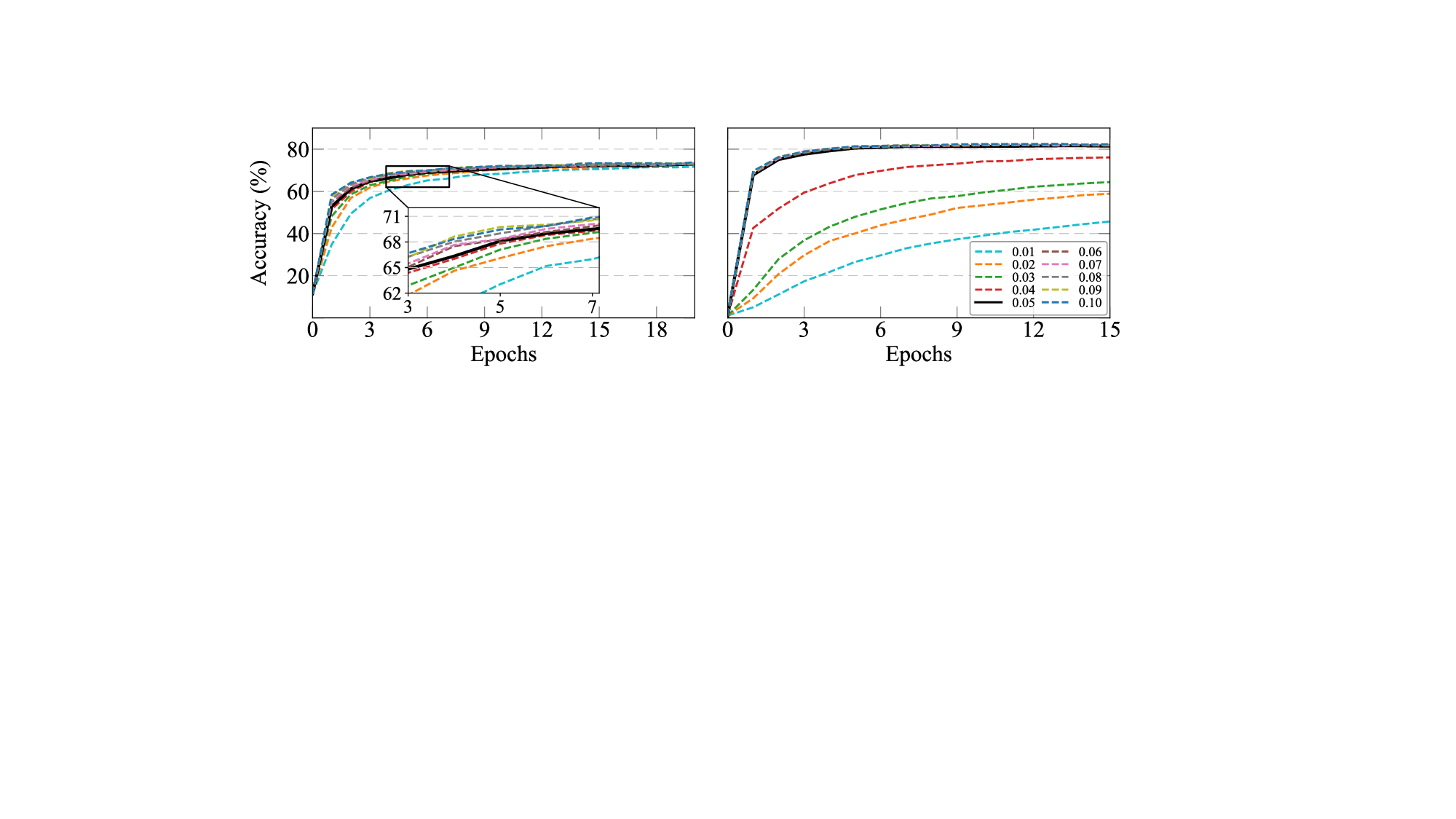

This assumption fails to hold in practical machine learning workflows, where models are frequently fine-tuned or incrementally updated to adapt to new tasks, data distributions, or environments. Indeed, a recent study [10] reports that 44% of organizations piloting AI models in 2024 relied on frequent updates, often weekly or monthly, to fine-tune established backbones (e.g., Vision Transformers [11]). Since even modest amounts of fine-tuning can significantly alter the parameter space, continual model refinement can easily overwrite the protection provided by existing keying schemes (see Figure 1(a)), making costly “re-keying” necessary. This reveals a fundamental limitation in existing model keying mechanisms: accessibility is not complemented by adaptability. An important research problem remains largely unexplored: how can model usage control be maintained under rapid and continual adaptation cycles?

Our work. We develop an adaptable model usage control framework, named ADALOC (adaptable lock), that unifies accessibility and adaptability. As illustrated in Figure 1(b), it ensures (i) that model usability is strictly conditioned on possessing the access key, thereby blocking unauthorized usage, and (ii) that authorized users can efficiently update the keyed model, eliminating the costly need to redistribute the entire network after each update. During model updates, ADALOC confines the updates exclusively to the access key (hereafter, the key), avoiding retraining the model or altering other parts of the model.
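The idea of confining updates to the key can be illustrated with a small sketch. This is not the ADALOC implementation: the function names are hypothetical, the key positions are chosen at random (the text says only that the subset is selected "strategically"), and zeroing the key positions is a stand-in for whatever locking the method actually applies. The sketch shows only the bookkeeping: the locked weights never change, and an update is expressed entirely as new key values.

```python
import random


def select_key_indices(num_weights, key_size, seed=0):
    """Choose which weight positions form the key.

    Assumption: random selection, used here only as a placeholder.
    """
    rng = random.Random(seed)
    return sorted(rng.sample(range(num_weights), key_size))


def lock(weights, key_indices):
    """Split weights into a locked copy and the secret key values.

    Assumption: the locked copy simply has its key positions zeroed.
    """
    key_values = [weights[i] for i in key_indices]
    locked = list(weights)
    for i in key_indices:
        locked[i] = 0.0
    return locked, key_values


def unlock(locked, key_indices, key_values):
    """Restore a usable model by writing the key values back in place."""
    restored = list(locked)
    for i, value in zip(key_indices, key_values):
        restored[i] = value
    return restored


def update_key_only(weights, grads, key_indices, lr=0.1):
    """Take one gradient step on the key positions only.

    Returns the new key values; all other weights are left untouched.
    """
    return [weights[i] - lr * grads[i] for i in key_indices]


if __name__ == "__main__":
    weights = [0.5, -1.0, 2.0, 0.25, 1.5, -0.75]
    key_idx = select_key_indices(len(weights), key_size=2)
    locked, key = lock(weights, key_idx)

    # An authorized party unlocks, computes gradients, and updates the key.
    grads = [1.0] * len(weights)  # placeholder gradients
    new_key = update_key_only(unlock(locked, key_idx, key), grads, key_idx)

    # Only the new key values need to be shared; `locked` is unchanged.
    updated = unlock(locked, key_idx, new_key)
    print("key positions:", key_idx)
    print("updated weights:", updated)
```

In this toy setting, distributing an update means sending `new_key` (two numbers) rather than all six weights, which mirrors the motivation for avoiding redistribution of the entire network.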
