The capacity for highly complex, evidence-based, and strategically adaptive persuasion remains a formidable grand challenge for artificial intelligence. Previous work, such as IBM Project Debater, focused on generating persuasive speeches in simplified and shortened debate formats intended for relatively lay audiences. We introduce DeepDebater, a novel autonomous system capable of participating in and winning a full, unmodified, two-team competitive policy debate. Our system employs a hierarchical architecture of specialized multi-agent workflows, in which teams of LLM-powered agents collaborate and critique one another to perform discrete argumentative tasks. Each workflow performs iterative retrieval, synthesis, and self-correction over a massive corpus of policy debate evidence (OpenDebateEvidence) and produces complete speech transcripts, cross-examinations, and rebuttals. We also introduce a live, interactive, end-to-end presentation pipeline that renders debates with AI speech and animation: transcripts are surface-realized and synthesized to audio with OpenAI TTS, then displayed as talking-head portrait videos with EchoMimic V1. Beyond fully autonomous matches (AI vs. AI), DeepDebater supports hybrid human-AI operation: human debaters can intervene at any stage, and humans can optionally serve as opponents against the AI in any speech, allowing both AI-human and AI-AI rounds. In preliminary evaluations against human-authored cases, DeepDebater produces qualitatively superior argumentative components and consistently wins simulated rounds as adjudicated by an independent autonomous judge. Expert human debate coaches also prefer the arguments, evidence, and cases constructed by DeepDebater. We open-source all code, generated speech transcripts, audio, and talking-head video here: https://github.com/Hellisotherpeople/DeepDebater/tree/main

Generating persuasive and strategically coherent argumentation is a long-standing goal in AI (Bench-Capon and Dunne 2007; Dung 1995). Existing research has largely studied competitive persuasive argumentation through evidence-light, highly simplified variants of debate aimed at a lay audience (Slonim et al. 2021). We contend that this approach sidesteps the strategic, game-theoretic, and iterative nature of real-world competitive debate.

To address this, we turn to the uniquely suitable domain of American-style after-school competitive policy debate. This format serves as an idealized crucible for AI research for many reasons: it is a popular extracurricular activity (McGrath 2020), lengthy but strictly time-constrained, grounded in a vast body of high-quality evidence, and possesses a complex, regimented, and formal structure that demands both long-term strategic planning and second-by-second tactical decision making (NSDA 2025). Human competitors have evolved sophisticated techniques and tools to manage the immense cognitive load, which makes the format a premier environment for benchmarking and developing advanced autonomous reasoning agents.

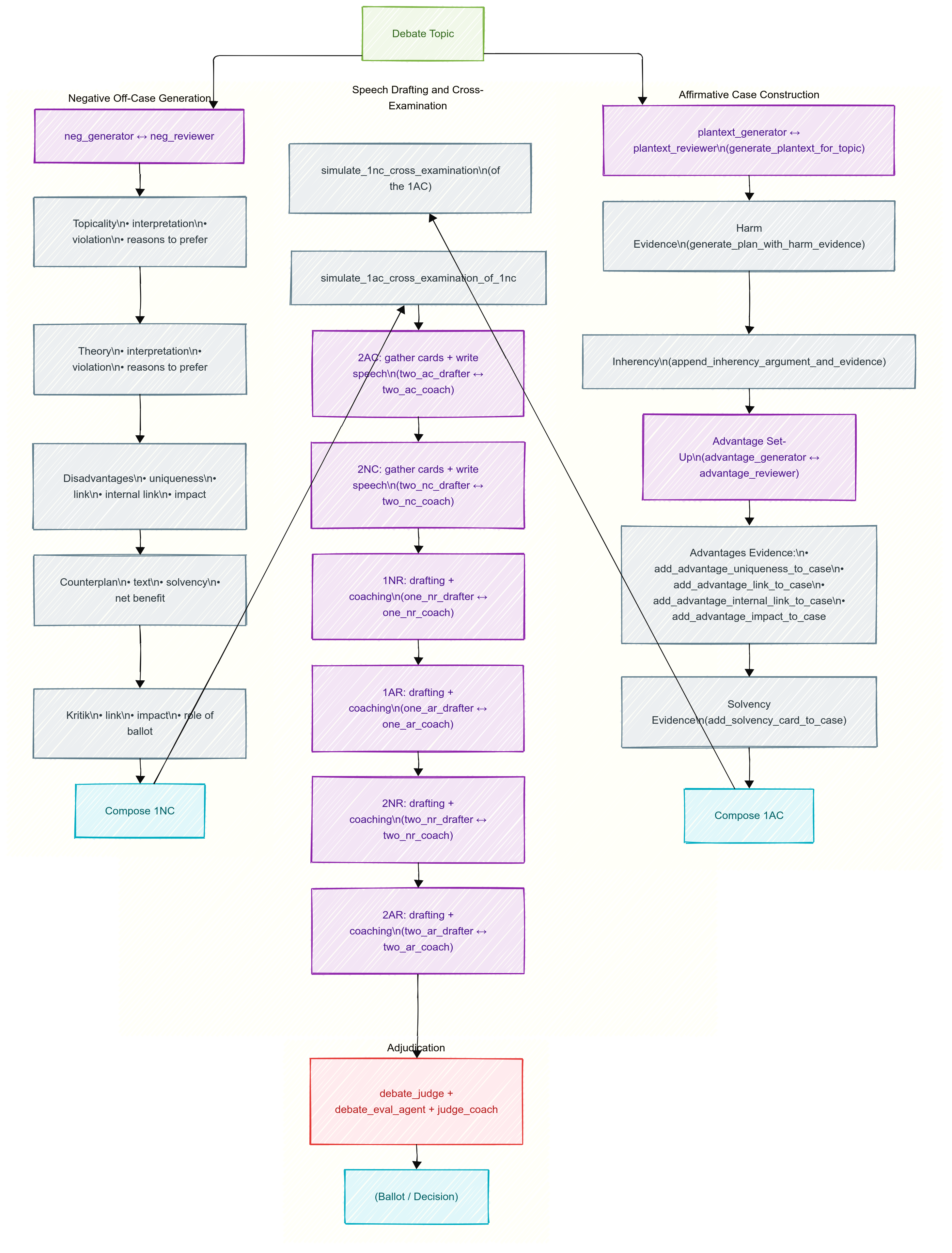

We present DeepDebater: an autonomous and human-collaborative system that can create a complete affirmative case from a topic, research and construct a multi-pronged negative strategy, and then execute a full, eight-speech debate, including simulated cross-examinations. Our core contribution is a novel multi-agent architecture in which complex creative and strategic tasks are decomposed into a pipeline of specialized workflows. Within each workflow, a team of Large Language Model agents collaborates, with each agent critiquing the others' outputs.
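To make the decomposition concrete, the following is a minimal sketch of one way a draft-and-critique workflow and a pipeline of such workflows could be organized. It is an illustration under stated assumptions, not the DeepDebater implementation: the names `run_workflow`, `run_pipeline`, `stub_drafter`, and `evidence_critic` are hypothetical, the agents are plain Python callables standing in for LLM calls, and the approval rule (stop when no critic objects) is assumed. The stage names are taken from the affirmative specialist agents listed in Figure 1.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

# Hypothetical signatures: in a real system each callable would wrap an LLM agent.
Drafter = Callable[[str, List[str]], str]     # (task, prior critiques) -> draft
Critic = Callable[[str, str], Optional[str]]  # (task, draft) -> critique, or None to approve


@dataclass
class WorkflowResult:
    draft: str
    rounds: int
    approved: bool


def run_workflow(task: str, drafter: Drafter, critics: List[Critic],
                 max_rounds: int = 3) -> WorkflowResult:
    """Draft, collect critiques, and revise until no critic objects (assumed rule)."""
    critiques: List[str] = []
    draft = ""
    for round_number in range(1, max_rounds + 1):
        draft = drafter(task, critiques)
        notes = (critic(task, draft) for critic in critics)
        critiques = [note for note in notes if note is not None]
        if not critiques:
            return WorkflowResult(draft, round_number, True)
    # Round budget exhausted with objections still outstanding.
    return WorkflowResult(draft, max_rounds, False)


def run_pipeline(stages: List[str], drafter: Drafter,
                 critics: List[Critic]) -> Dict[str, WorkflowResult]:
    """Run one workflow per stage, in order."""
    return {stage: run_workflow(stage, drafter, critics) for stage in stages}


# Stub agents for demonstration only; they do not call any model.
def stub_drafter(task: str, critiques: List[str]) -> str:
    base = f"Draft for {task}"
    return base + " (cites evidence)" if critiques else base


def evidence_critic(task: str, draft: str) -> Optional[str]:
    return None if "cites evidence" in draft else "Add supporting evidence."


AFFIRMATIVE_STAGES = ["Plan-text", "Harms", "Inherency", "Advantages", "Solvency"]
results = run_pipeline(AFFIRMATIVE_STAGES, stub_drafter, [evidence_critic])
```

With these stubs, each stage is rejected once for lacking evidence and approved on the second round. Bounding the loop with `max_rounds` is a design choice of the sketch: it keeps a workflow from cycling indefinitely when a critic can never be satisfied.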

Our contributions are threefold:

We introduce a hierarchical multi-agent framework for end-to-end generation of complex, evidence-grounded argumentation, modeling the entire lifecycle of a competitive policy debate. We show that by decomposing the creative process into discrete, role-based agent workflows, our system can master the intricate structure and esoteric strategies of an expert argumentative domain.

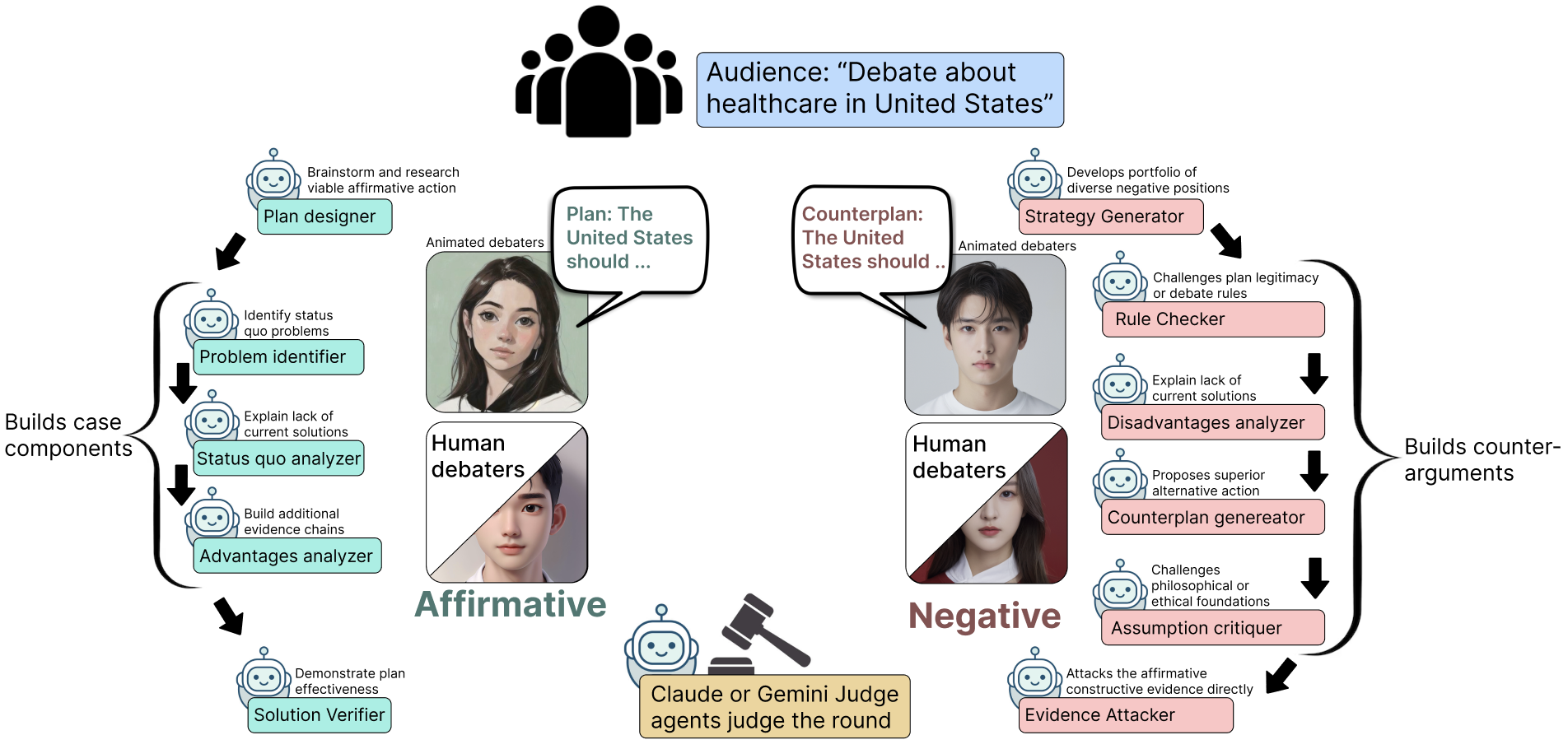

Besides simply generating debate speech transcripts, DeepDebater also uses AI text to speech systems to audibly deliver these speeches out-loud. We create animated Figure 1: Creative System Demonstration: After the audience picks a resolution, two onscreen systems-Team Affirmative (red) and Team Negative (blue)-launch specialist agents (Affirmative: Plan-text, Harms, Inherency, Advantages, Solvency; Negative: Topicality/Theory, Disadvantage, Counterplan, Kritik, On-case Rebuttal). Each team uses gpt-4-mini + OpenDebateEvidence indexed in DuckDB, with a live UI streaming the AG2 agent chats, searches, and evidence as arguments are drafted. Completed speeches are rendered on screen and are voiced through GPT-4o mini TTS text-to-speech and animated with EchoMimic V1 while the other side prepares its reply, cycling through the full debate round. Independent Judge agents (green) powered by Claude or Gemini will judge the round at the end of the speeches. Brave audience volunteers may participate and fill in as one full team, or as a teammate alongside an AI for any speech. They may also propose a new topic (triggering a new debate)

avatars to represent our AI debate agents, which are lipsynced to the AI generated audio. 3. Through empirical comparisons and human expert evaluations, we show that DeepDebater produces argumentative artifacts of superior quality, faithfulness, and strategic coherence compared to strong human baselines, and can consistently win simulated debates.

A complete description of our Creative System Demonstration of DeepDebater is given in Figure 1.

Background: The Crucible of Policy Debate

American competitive policy debate is a popular after-school extracurricular team-based activity in which two teams, the Affirmative and the Negative, argue over a resolution. The Affirmative presents a specific plan to enact the resolution and argues that it will result in desirable outcomes (Advantages). The Negative's objective is to refute the Affirmative case and argue that the plan (and sometimes the whole resolution) is a bad idea.

The structure of policy debate is rigidly formalized, comprising constructive speeches, cross-examinations, and rebuttal speeches. The foundation of modern policy debate is evidence, colloquially known as “cards.” A card consists of a direct quotation (often several pages long) with span-level extractive highlighting from a published source (e.g., academic journals, government reports, news articles), a full citation, and an abstractive “tag” (a short, synthesized claim the evidence is meant to support). Cases are built by chaining these cards together alongside natural language arguments to establish a logical sequence from problem to solution, or from a proposed “solution” to another problem.
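The anatomy of a card described above maps naturally onto a small record type. The sketch below is illustrative only and assumes a representation that the paper does not specify: the class name `Card`, its field names, the use of character offsets for highlights, and the helpers `highlight` and `outline` are all hypothetical, and the example text and citation are placeholders rather than real evidence.

```python
from dataclasses import dataclass
from typing import List, Tuple

Span = Tuple[int, int]  # (start, end) character offsets into the quotation


@dataclass(frozen=True)
class Card:
    tag: str                     # short abstractive claim the evidence supports
    citation: str                # full citation of the published source
    text: str                    # direct quotation from the source
    highlights: Tuple[Span, ...] # extractive spans selected for delivery

    def highlighted_text(self) -> str:
        """Join only the highlighted spans, in order."""
        return " ".join(self.text[start:end] for start, end in self.highlights)


def highlight(text: str, phrase: str) -> Span:
    """Locate a phrase in the quotation and return its span (raises if absent)."""
    start = text.index(phrase)
    return (start, start + len(phrase))


def outline(cards: List[Card]) -> str:
    """Chain cards into a numbered outline of tags and citations."""
    return "\n".join(
        f"{i}. {card.tag} [{card.citation}]" for i, card in enumerate(cards, start=1)
    )


# Placeholder content, not a real source.
quote = "Placeholder quotation: the plan lowers costs and improves access."
card = Card(
    tag="The plan lowers costs",
    citation="Placeholder Author, Placeholder Year",
    text=quote,
    highlights=(highlight(quote, "the plan lowers costs"),),
)
case_outline = outline([card])
```

Storing highlights as offsets into the unmodified quotation, rather than as copied strings, is one way to keep the highlighted reading verifiably extractive, since every delivered word can be traced back to a position in the source text.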

The pursuit of computational argumentation has a rich history. Early work focused on argument mining and logical formali
