Harnessing Bounded-Support Evolution Strategies for Policy Refinement


📝 Original Info

- Title: Harnessing Bounded-Support Evolution Strategies for Policy Refinement

- ArXiv ID: 2511.09923

- Date: 2025-11-13

- Authors: Author information was not provided in the paper.

📝 Abstract

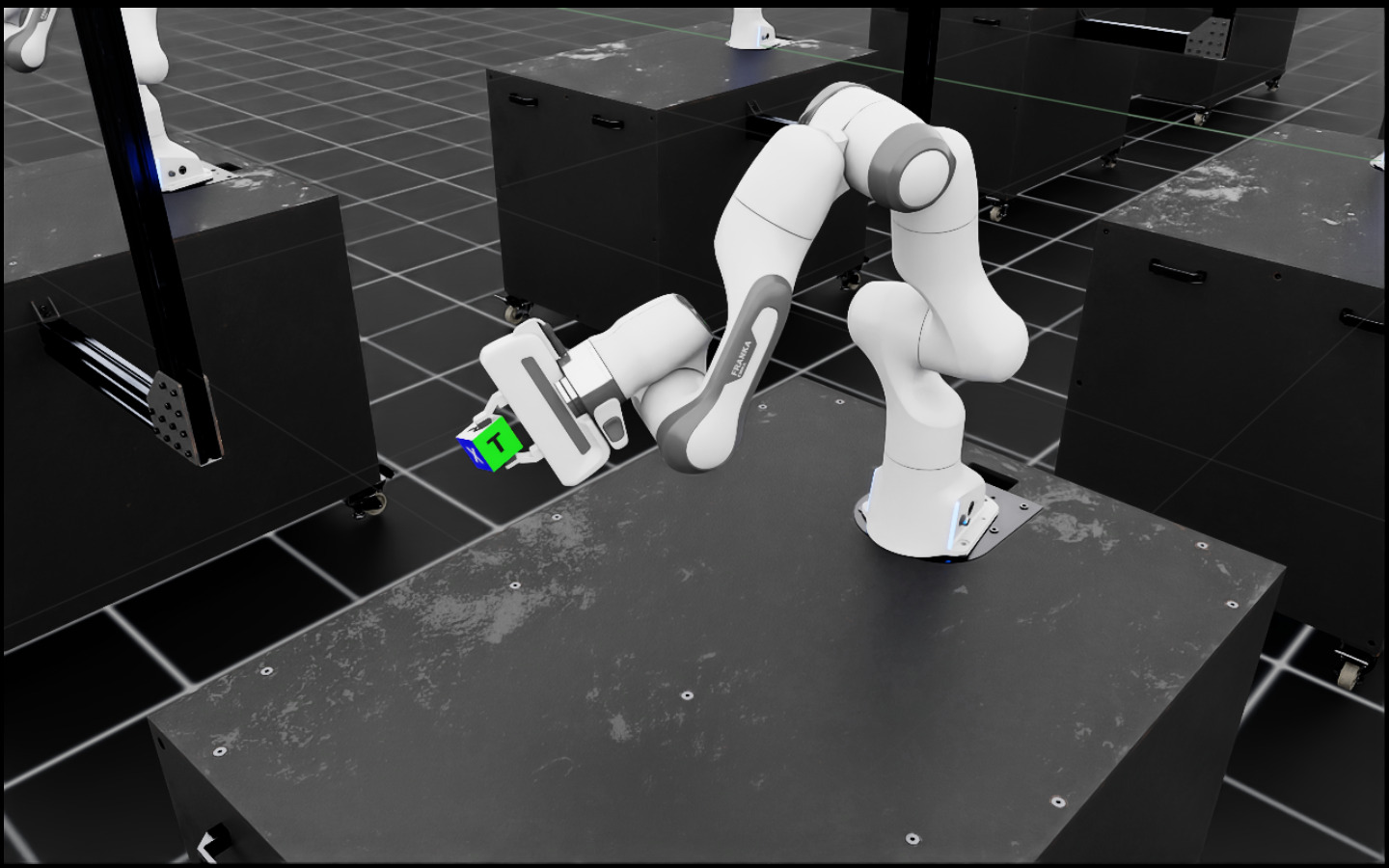

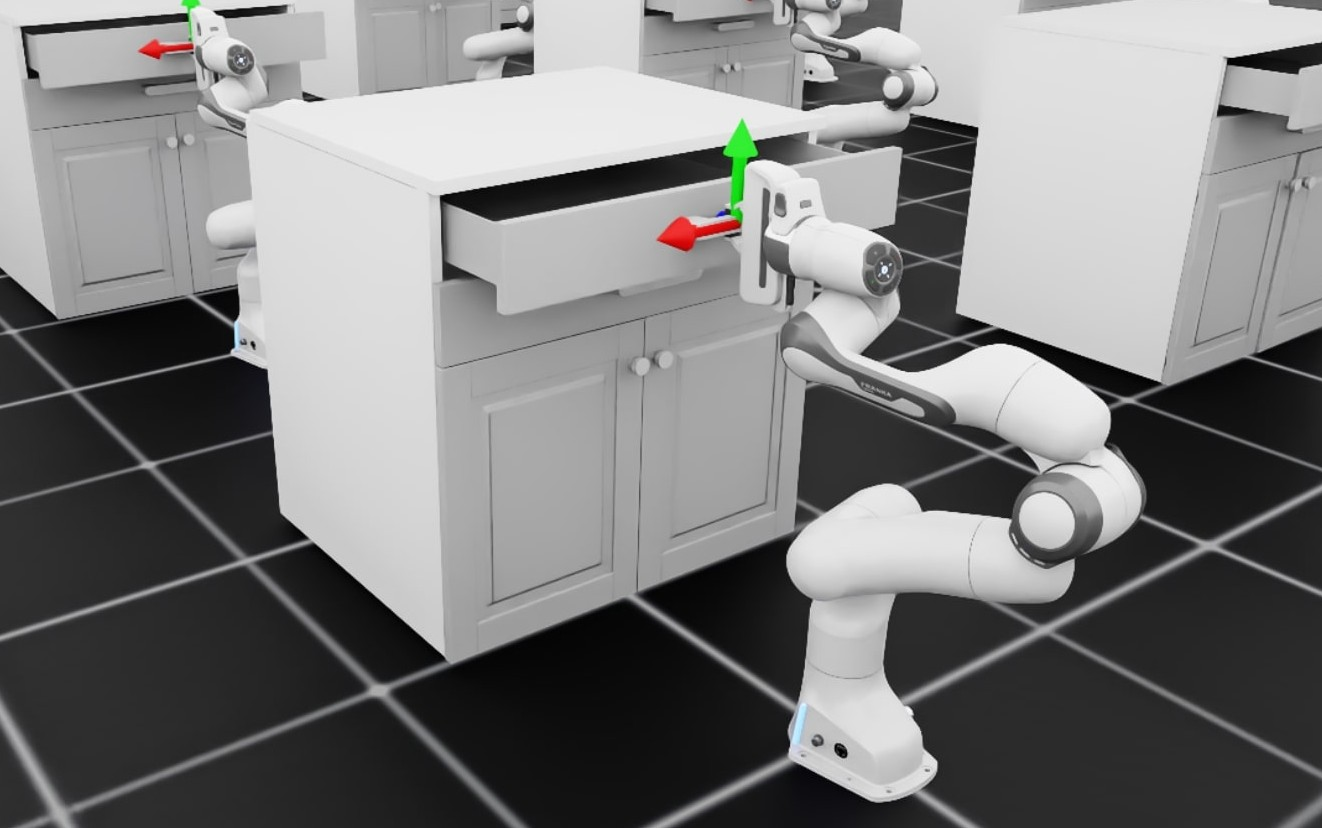

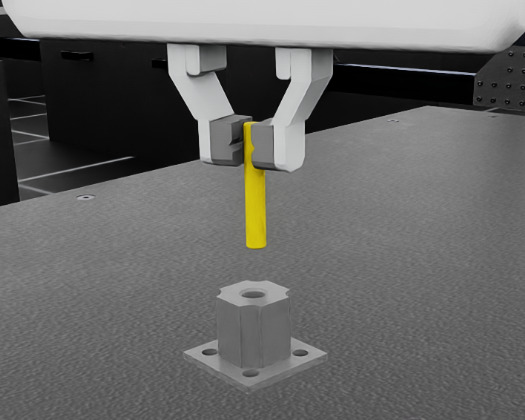

Improving competent robot policies with on-policy RL is often hampered by noisy, low-signal gradients. We revisit Evolution Strategies (ES) as a policy-gradient proxy and localize exploration with bounded, antithetic triangular perturbations, which are suitable for policy refinement. We propose Triangular-Distribution ES (TD-ES), which pairs bounded triangular noise with a centered-rank finite-difference estimator to deliver stable, parallelizable, gradient-free updates. In a two-stage pipeline (PPO pretraining followed by TD-ES refinement), this preserves early sample efficiency while enabling robust late-stage gains. Across a suite of robotic manipulation tasks, TD-ES raises success rates by 26.5% relative to PPO and greatly reduces variance, offering a simple, compute-light path to reliable refinement.

💡 Deep Analysis
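The update described in the abstract (bounded triangular noise, antithetic pairs, centered-rank shaping, finite-difference step) can be illustrated with a minimal sketch. This is not the authors' implementation: the function names, the `radius` and `lr` parameters, the rank normalization to [-0.5, 0.5], the step scaling, and the toy quadratic return are all assumptions chosen for illustration, since this summary does not give the paper's exact formulation.

```python
import random


def sample_triangular_noise(rng, dim):
    """Draw `dim` samples from a symmetric triangular distribution on [-1, 1].

    The support is bounded, so a perturbation can never move a parameter
    by more than `radius` once it is scaled (assumed parameterization).
    """
    return [rng.triangular(-1.0, 1.0, 0.0) for _ in range(dim)]


def centered_ranks(values):
    """Replace raw returns with ranks rescaled to [-0.5, 0.5].

    The lowest return maps to -0.5 and the highest to 0.5, which removes
    sensitivity to the scale and outliers of the raw returns.
    """
    n = len(values)
    if n == 1:
        return [0.0]
    order = sorted(range(n), key=lambda i: values[i])
    ranks = [0.0] * n
    for rank, idx in enumerate(order):
        ranks[idx] = rank / (n - 1) - 0.5
    return ranks


def td_es_step(theta, objective, rng, num_pairs=16, radius=0.1, lr=0.05):
    """One gradient-free ascent step (hypothetical sketch of a TD-ES-style update)."""
    dim = len(theta)
    noises = [sample_triangular_noise(rng, dim) for _ in range(num_pairs)]

    # Evaluate each antithetic pair: theta + radius*eps and theta - radius*eps.
    returns = []
    for eps in noises:
        returns.append(objective([t + radius * e for t, e in zip(theta, eps)]))
        returns.append(objective([t - radius * e for t, e in zip(theta, eps)]))

    shaped = centered_ranks(returns)

    # Finite-difference estimate: weight each direction by the difference
    # between the shaped returns of its positive and negative perturbation.
    grad = [0.0] * dim
    for k, eps in enumerate(noises):
        weight = shaped[2 * k] - shaped[2 * k + 1]
        for j in range(dim):
            grad[j] += weight * eps[j]

    # Assumed scaling; ranks make the estimate's magnitude a design choice.
    scale = lr / (2 * num_pairs * radius)
    return [t + scale * g for t, g in zip(theta, grad)]


if __name__ == "__main__":
    rng = random.Random(0)
    target = [0.3, -0.2, 0.8]  # toy optimum standing in for a good policy

    def toy_return(params):
        return -sum((p - g) ** 2 for p, g in zip(params, target))

    theta = [1.0, -1.0, 0.5]  # stands in for PPO-pretrained parameters
    for _ in range(200):
        theta = td_es_step(theta, toy_return, rng)
    print(theta, toy_return(theta))
```

In a real refinement stage, `objective` would be an episode return or success measure from policy rollouts, and the rollouts for all perturbations could be evaluated in parallel because none depends on another.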
