Environment Scaling for Interactive Agentic Experience Collection: A Survey


📝 Original Info

- Title: Environment Scaling for Interactive Agentic Experience Collection: A Survey

- ArXiv ID: 2511.09586

- Date: 2025-11-12

- Authors: Author information was not provided with the paper metadata. (Please check the original paper if the author list is needed.)

📝 Abstract

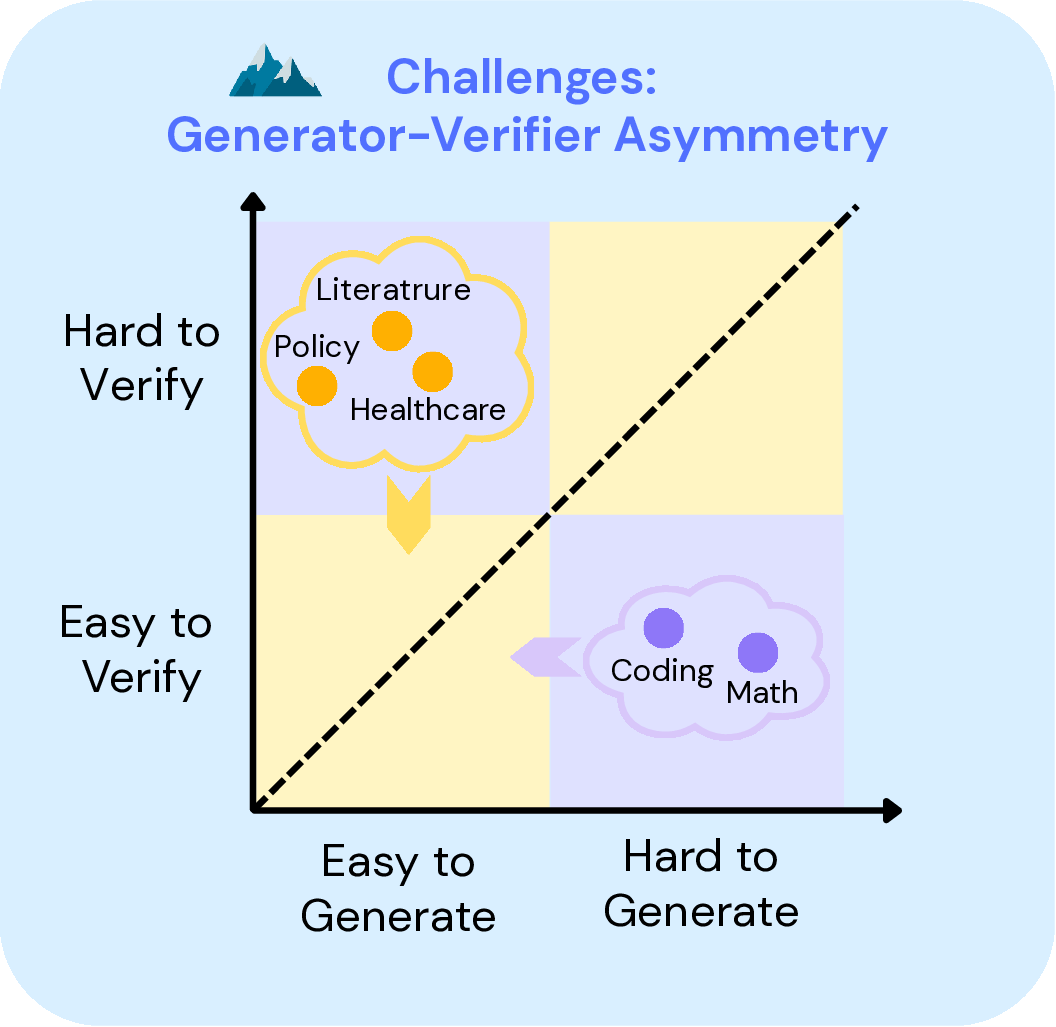

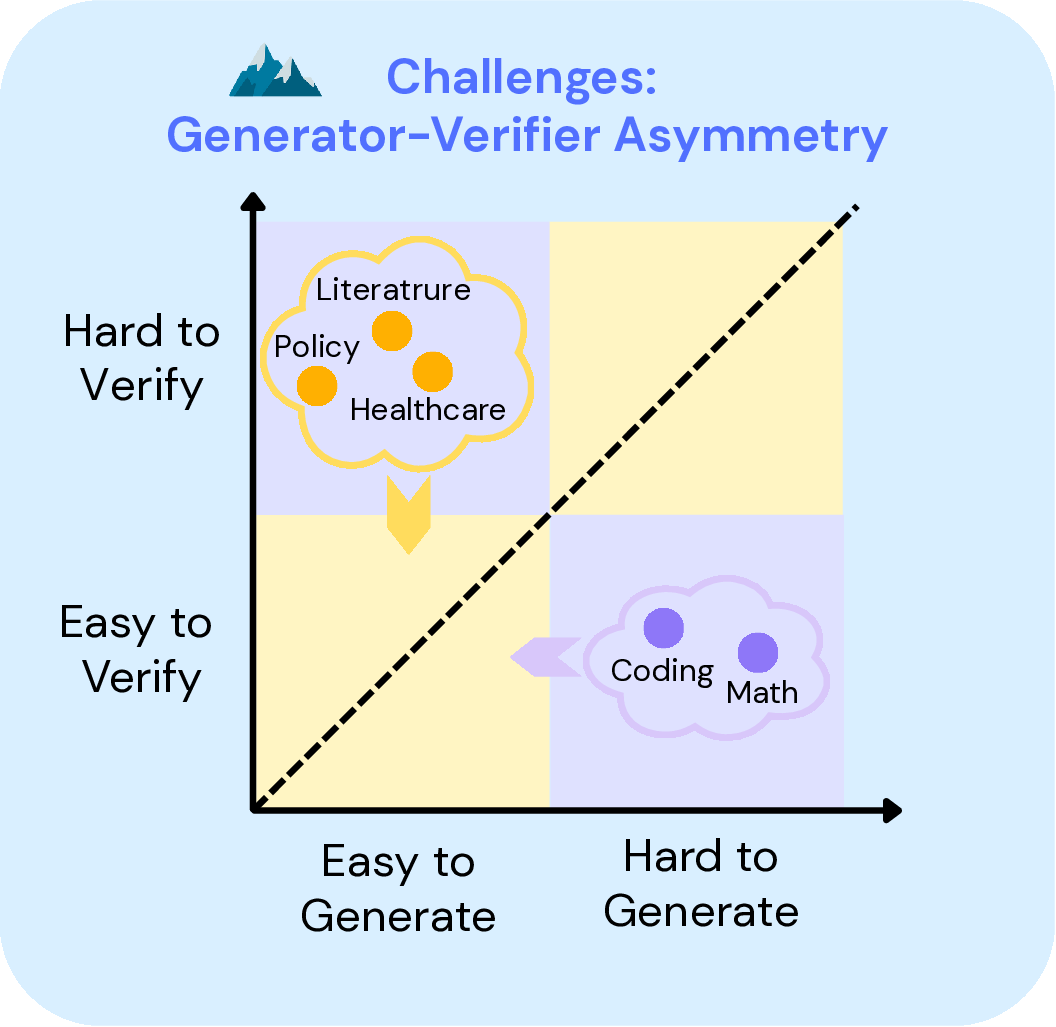

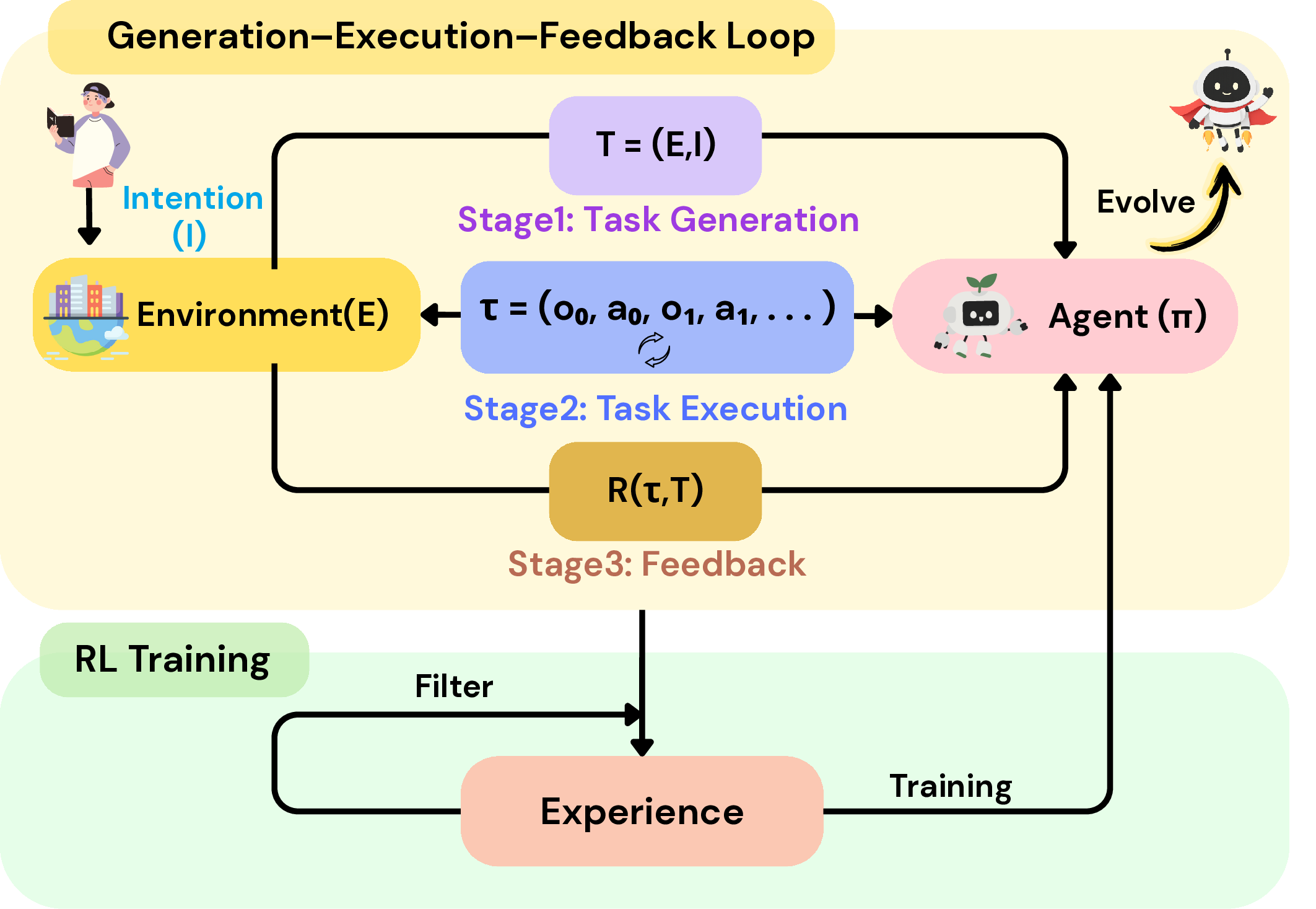

LLM-based agents can autonomously accomplish complex tasks across various domains. However, to further cultivate capabilities such as adaptive behavior and long-term decision-making, training on static datasets built from human-level knowledge is insufficient. These datasets are costly to construct and lack both dynamism and realism. A growing consensus is that agents should instead interact directly with environments and learn from experience through reinforcement learning. We formalize this iterative process as the Generation-Execution-Feedback (GEF) loop, where environments generate tasks to challenge agents, return observations in response to agents' actions during task execution, and provide evaluative feedback on rollouts for subsequent learning. Under this paradigm, environments function as indispensable producers of experiential data, highlighting the need to scale them toward greater complexity, realism, and interactivity. In this survey, we systematically review representative methods for environment scaling from a pioneering environment-centric perspective and organize them along the stages of the GEF loop, namely task generation, task execution, and feedback. We further analyze implementation frameworks, challenges, and applications, consolidating fragmented advances and outlining future research directions for agent intelligence.

💡 Deep Analysis
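
The three stages of the GEF loop named in the abstract (task generation, task execution, feedback) can be illustrated with a toy sketch. Everything here is a hypothetical illustration rather than anything from the survey: the number-guessing task, the names `ToyEnvironment`, `BisectionAgent`, and `run_gef_episode`, and the binary reward are all assumptions chosen to keep the example small.

```python
from dataclasses import dataclass


@dataclass
class Task:
    low: int
    high: int
    target: int  # hidden from the agent; only the environment reads it


class ToyEnvironment:
    """Hypothetical environment exposing the three GEF stages."""

    def generate_task(self, difficulty):
        # Generation: a larger difficulty widens the search range.
        high = 10 * difficulty
        return Task(low=1, high=high, target=(3 * high) // 4)

    def step(self, task, action):
        # Execution: return an observation in response to the agent's action.
        if action == task.target:
            return "correct"
        return "higher" if action < task.target else "lower"

    def feedback(self, rollout):
        # Feedback: score the whole rollout (assumed binary success reward).
        solved = bool(rollout) and rollout[-1][1] == "correct"
        return 1.0 if solved else 0.0


class BisectionAgent:
    """Stand-in for an LLM agent: a fixed bisection policy, no learning."""

    def reset(self, low, high):
        self.low, self.high = low, high

    def act(self):
        return (self.low + self.high) // 2

    def observe(self, action, observation):
        if observation == "higher":
            self.low = action + 1
        elif observation == "lower":
            self.high = action - 1


def run_gef_episode(env, agent, difficulty, max_steps=20):
    task = env.generate_task(difficulty)          # Generation
    agent.reset(task.low, task.high)
    rollout = []
    for _ in range(max_steps):                    # Execution
        action = agent.act()
        observation = env.step(task, action)
        rollout.append((action, observation))
        agent.observe(action, observation)
        if observation == "correct":
            break
    reward = env.feedback(rollout)                # Feedback
    return rollout, reward
```

In a real training setup the `(rollout, reward)` pair would be handed to a learning algorithm such as a reinforcement learning update; this sketch stops at collecting that experience, which is the part the environment is responsible for.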


This content is AI-processed based on open access ArXiv data.