Text-based Aerial-Ground Person Retrieval

📝 Original Info

- Title: Text-based Aerial-Ground Person Retrieval

- ArXiv ID: 2511.08369

- Date: 2025-11-11

- Authors: Not available (the paper does not provide author information)

📝 Abstract

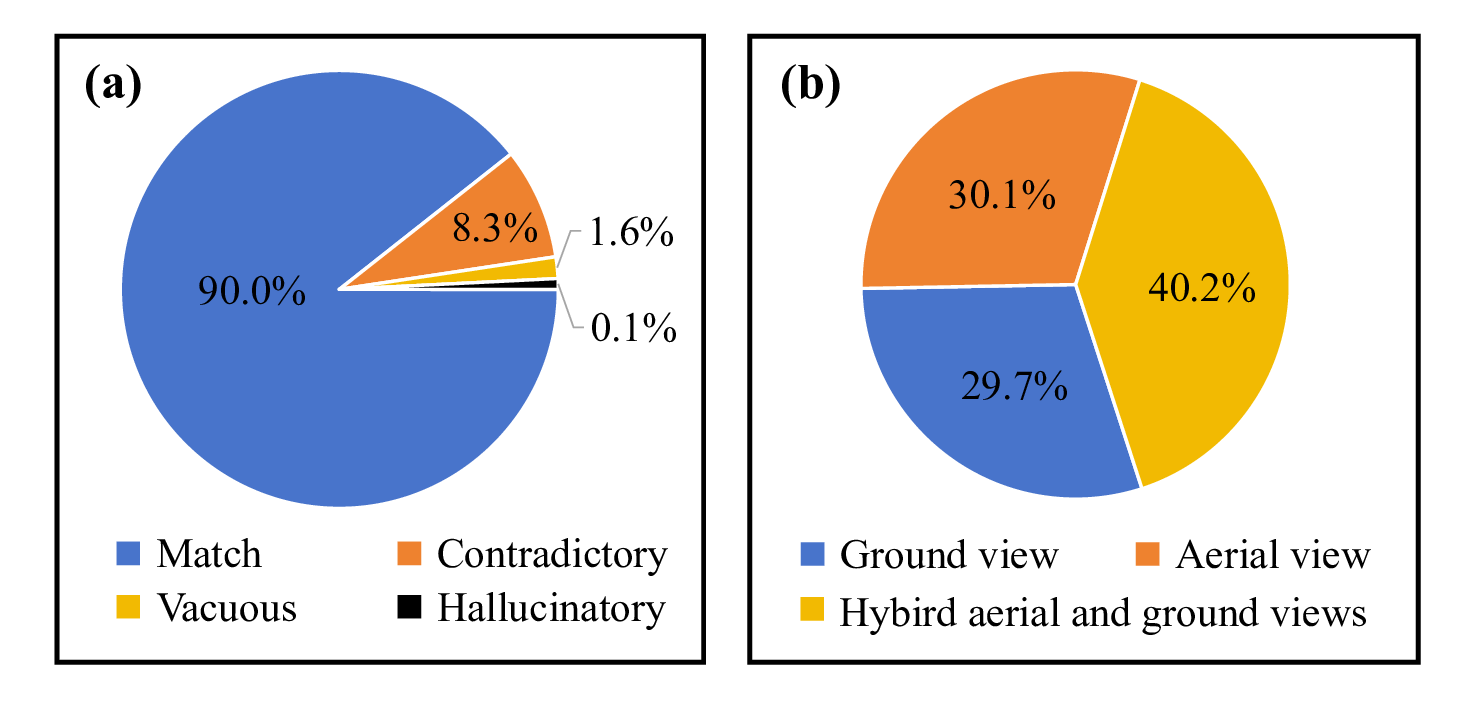

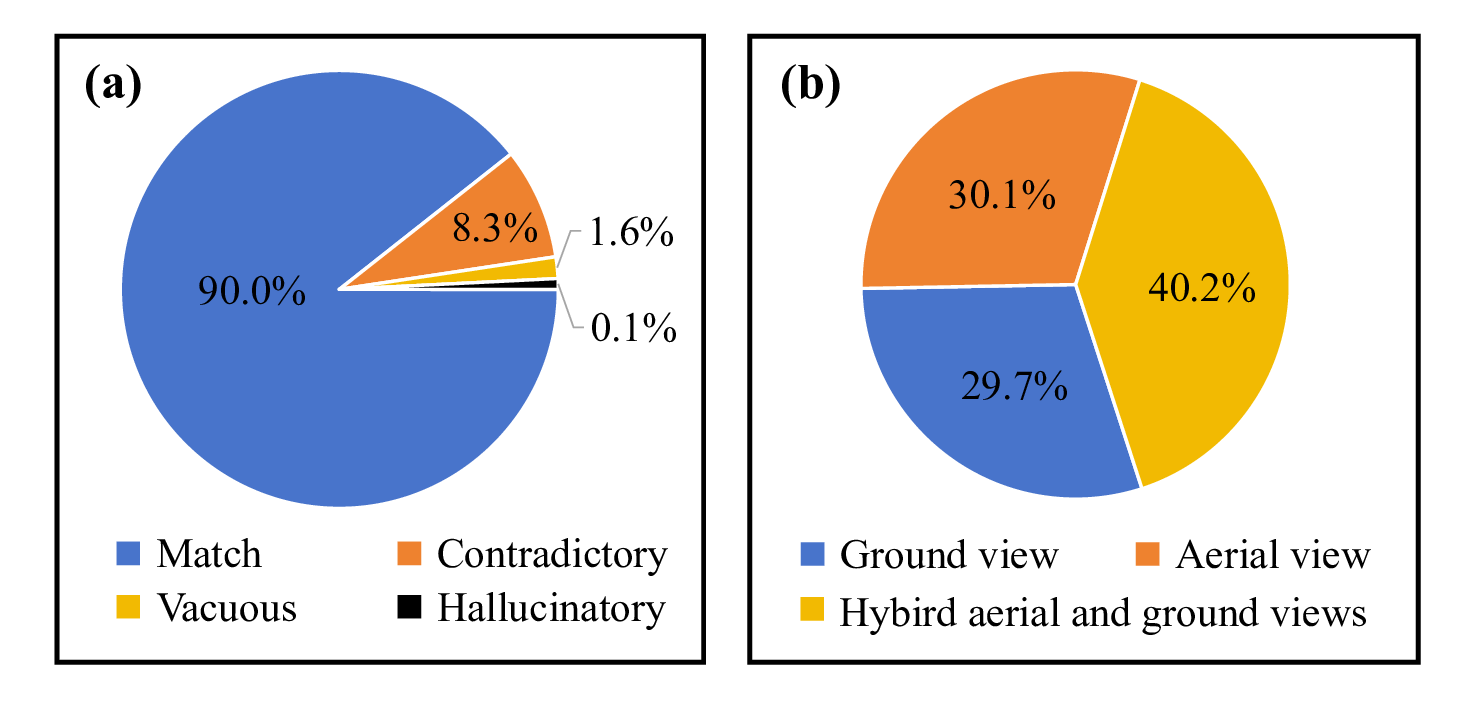

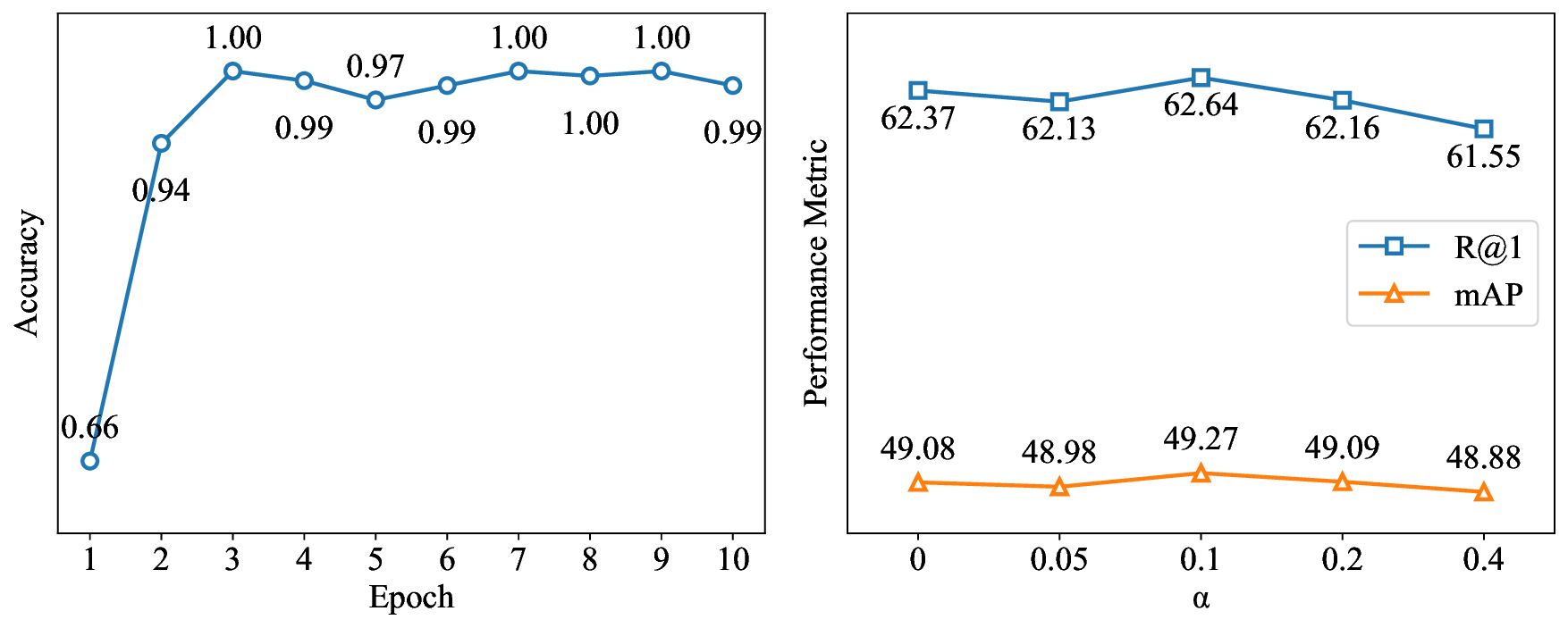

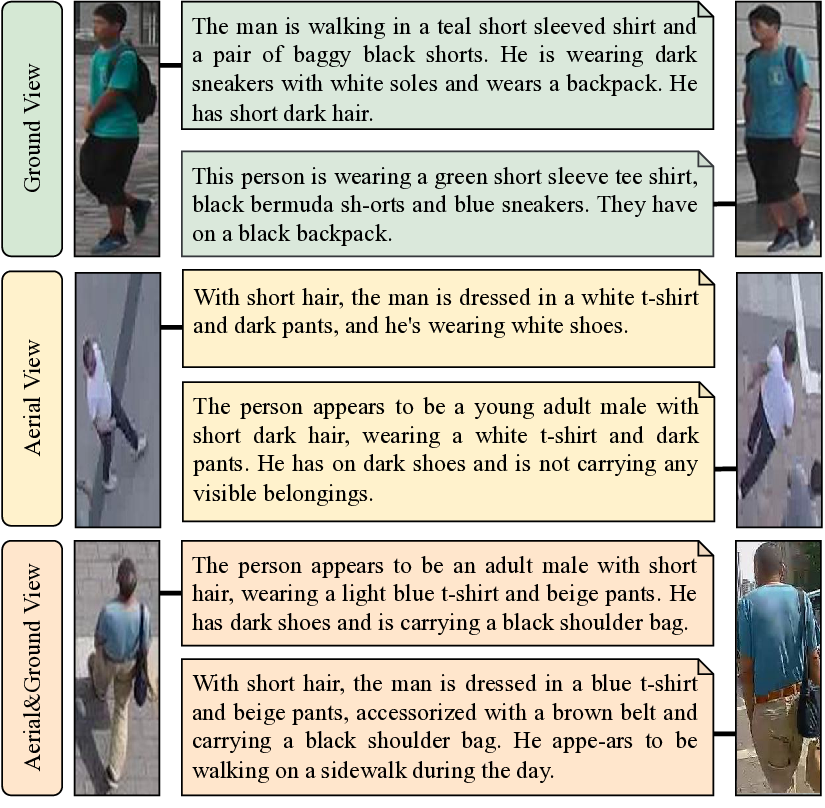

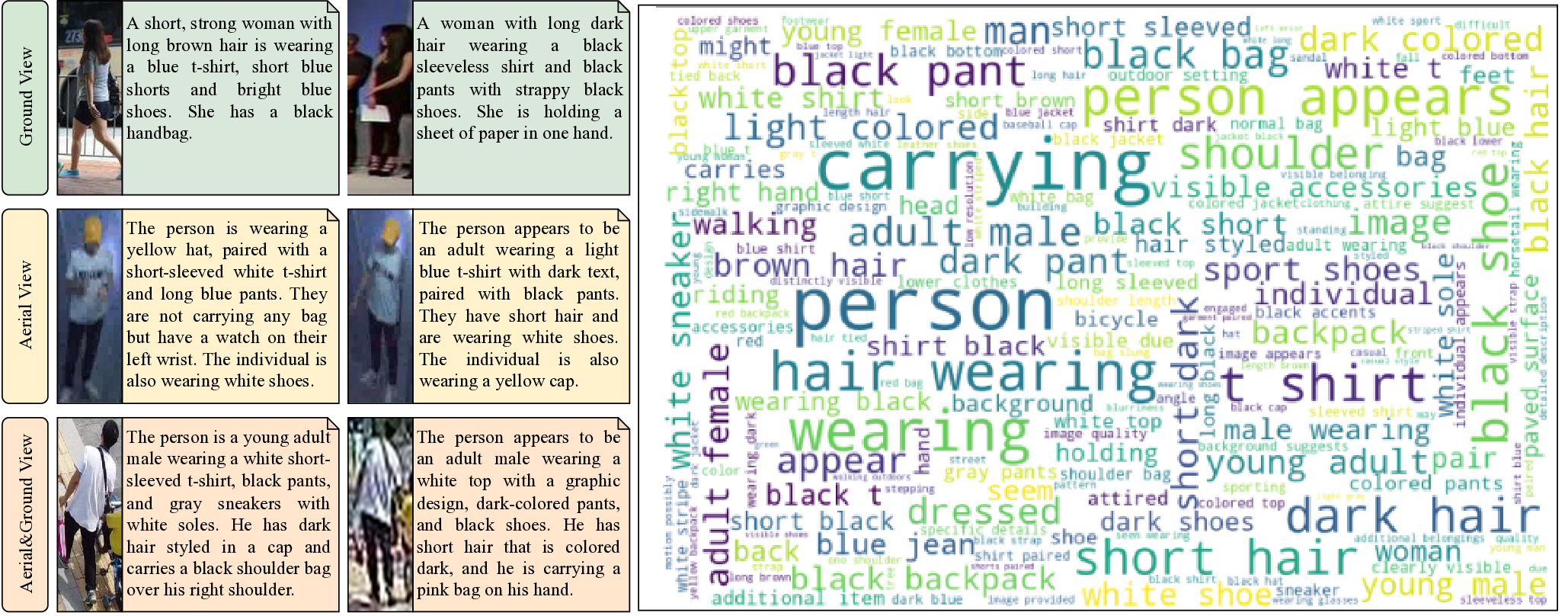

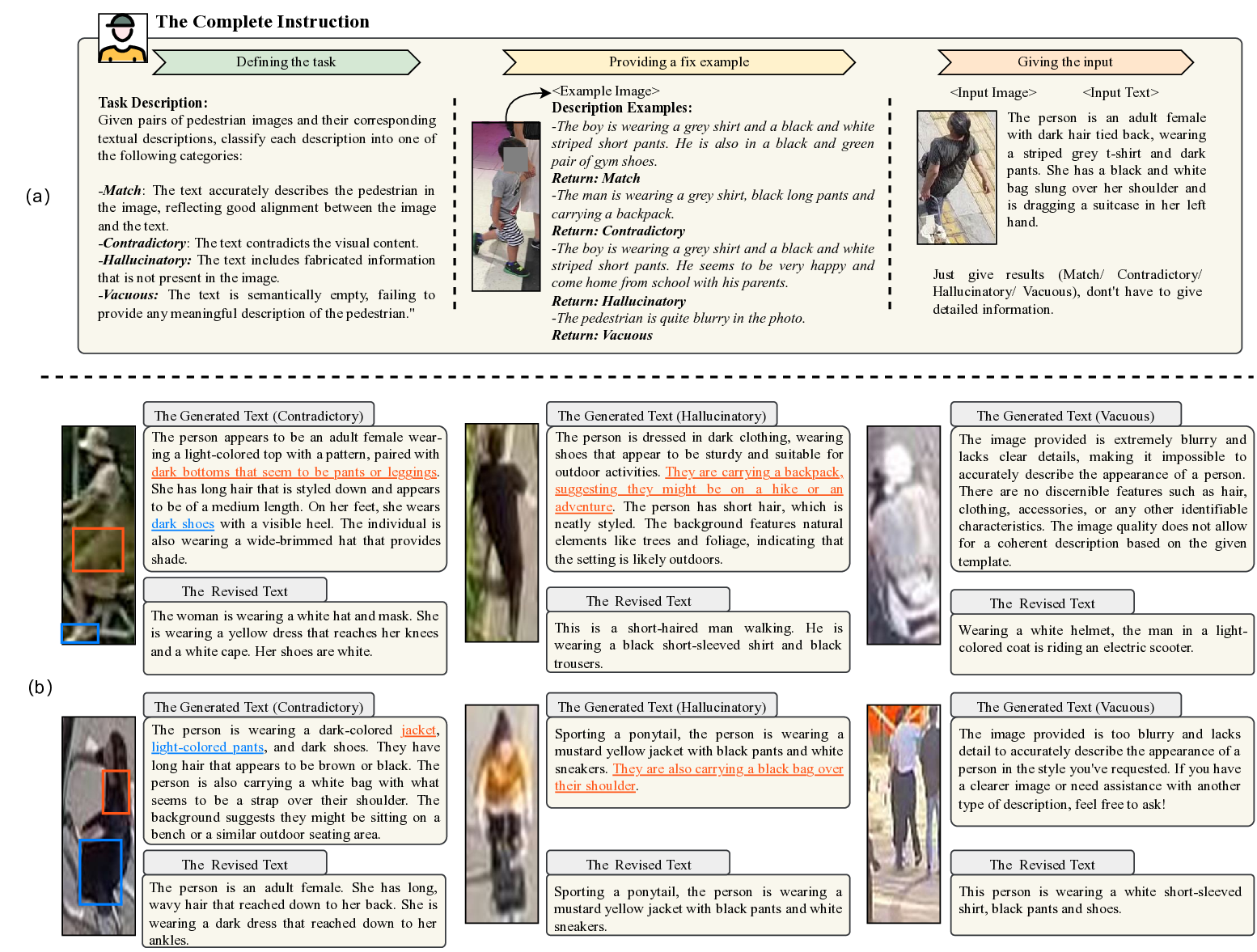

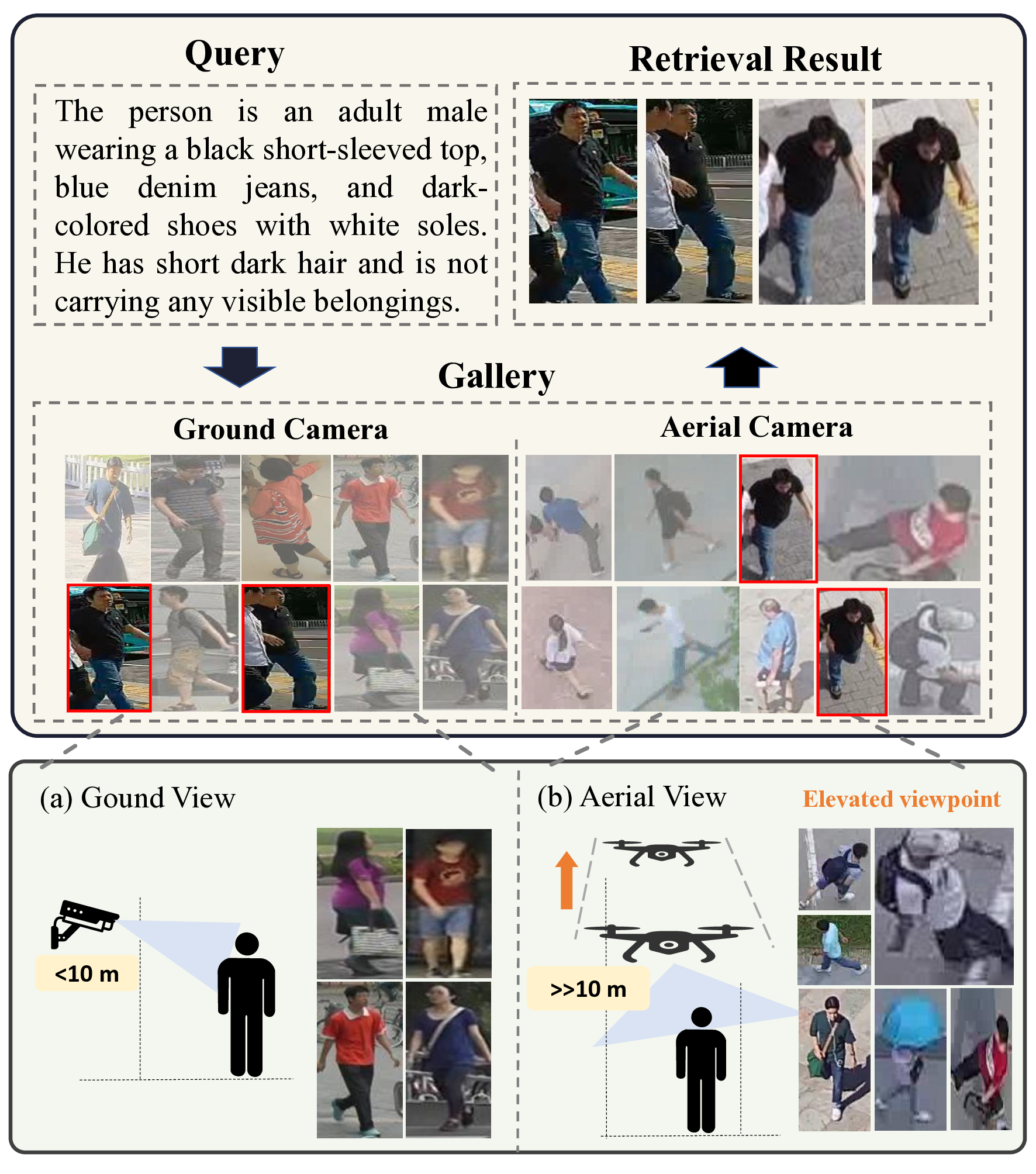

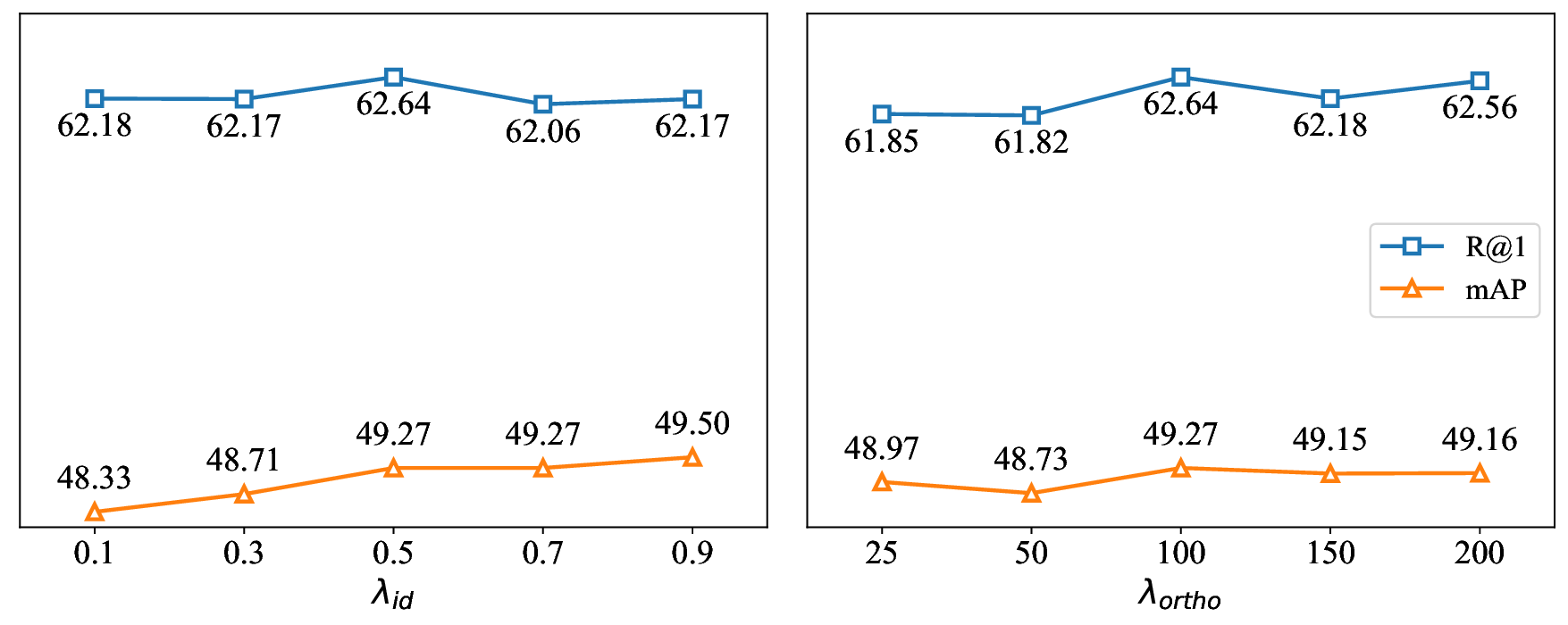

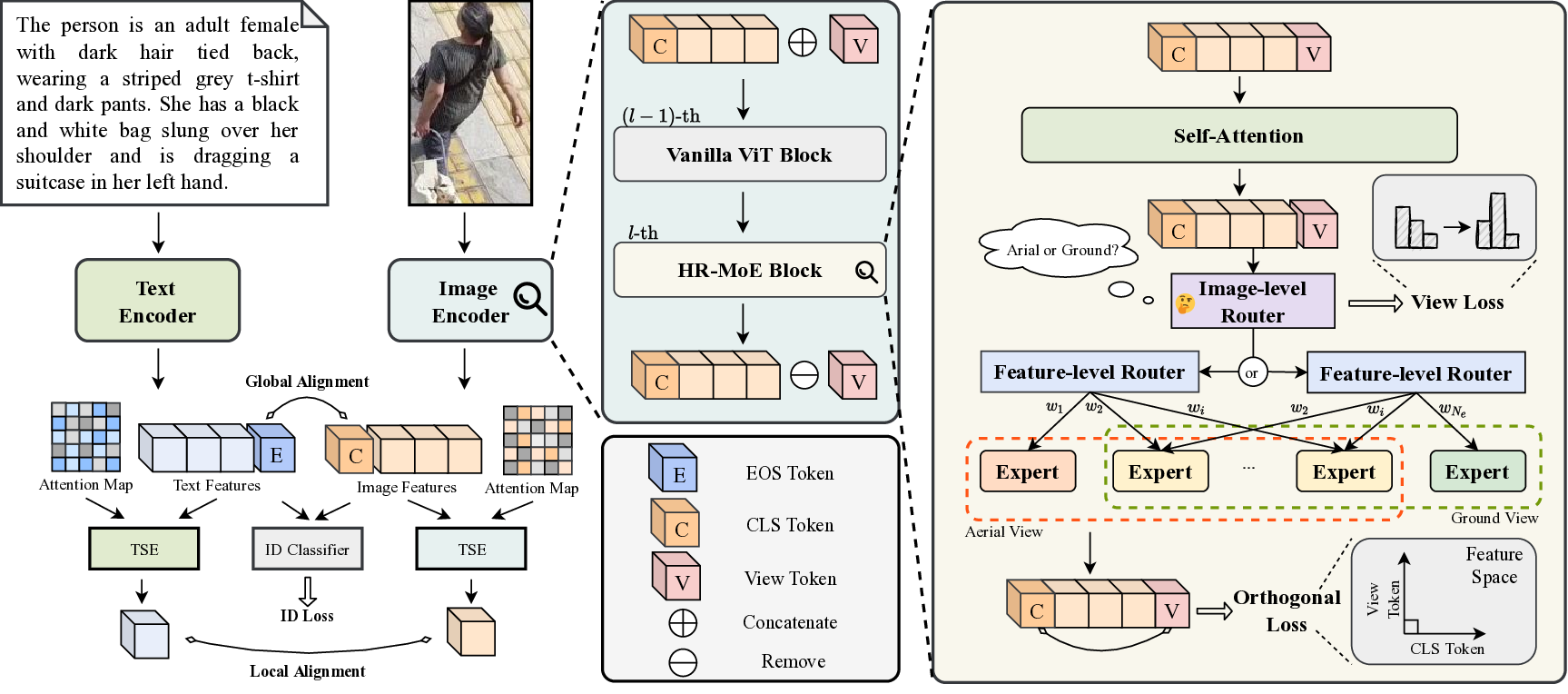

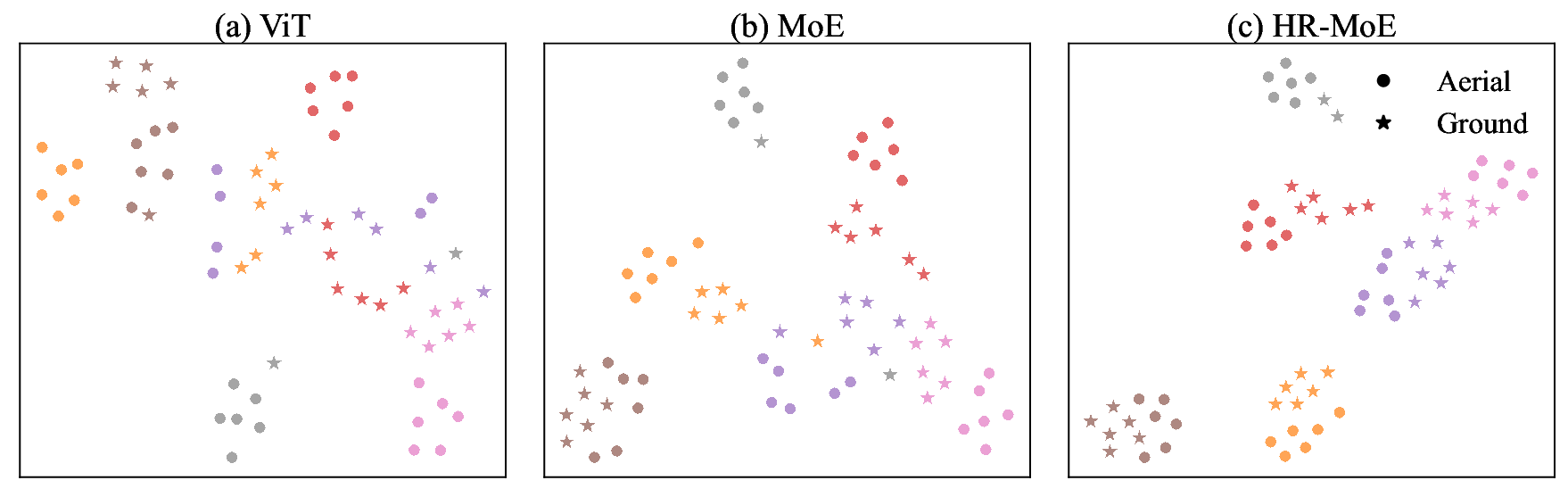

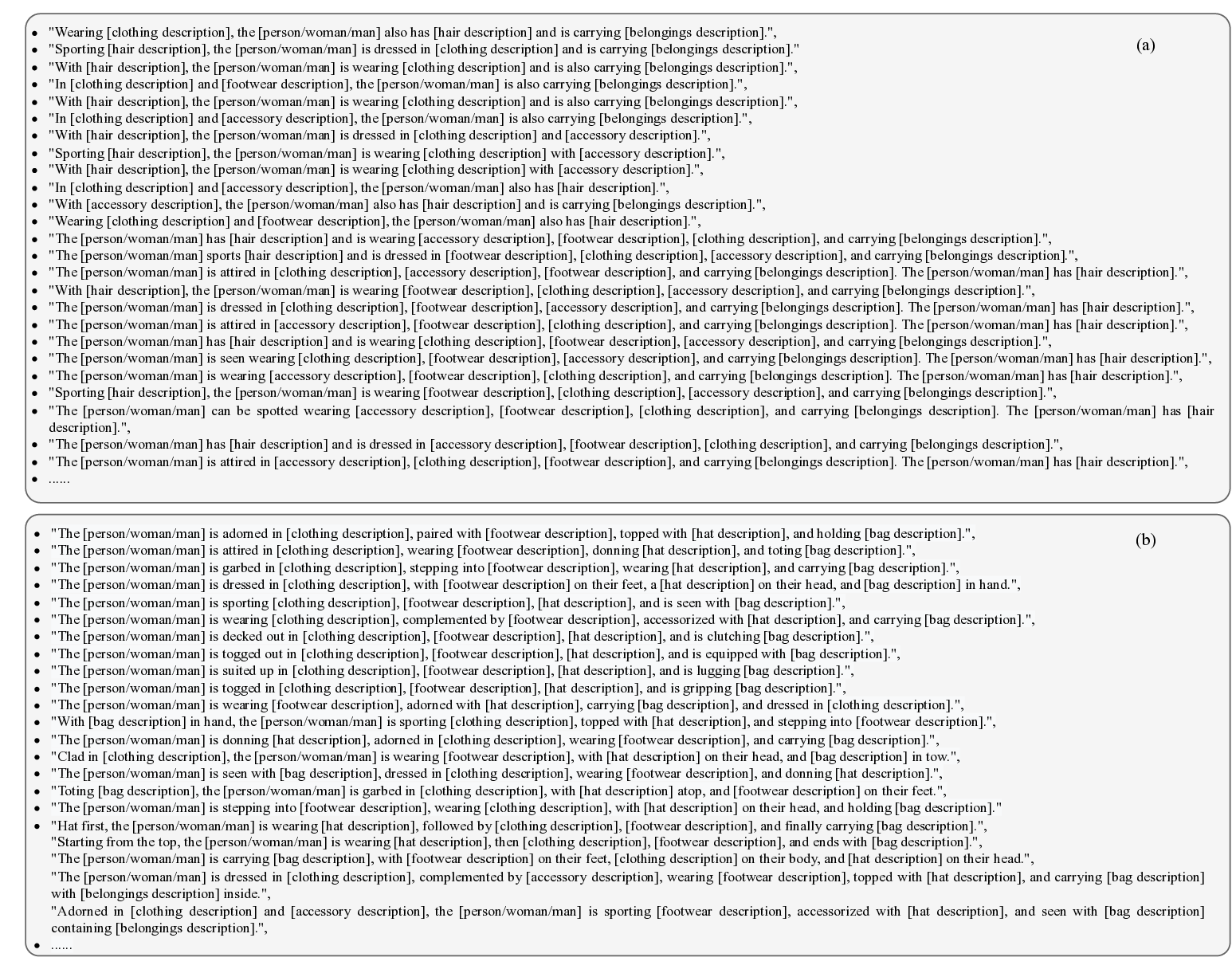

This work introduces Text-based Aerial-Ground Person Retrieval (TAG-PR), which aims to retrieve person images from heterogeneous aerial and ground views with textual descriptions. Unlike traditional Text-based Person Retrieval (T-PR), which focuses solely on ground-view images, TAG-PR introduces greater practical significance and presents unique challenges due to the large viewpoint discrepancy across images. To support this task, we contribute: (1) TAG-PEDES dataset, constructed from public benchmarks with automatically generated textual descriptions, enhanced by a diversified text generation paradigm to ensure robustness under view heterogeneity; and (2) TAG-CLIP, a novel retrieval framework that addresses view heterogeneity through a hierarchically-routed mixture of experts module to learn view-specific and view-agnostic features and a viewpoint decoupling strategy to decouple view-specific features for better cross-modal alignment. We evaluate the effectiveness of TAG-CLIP on both the proposed TAG-PEDES dataset and existing T-PR benchmarks. The dataset and code are available at https://github.com/Flame-Chasers/TAG-PR.

💡 Deep Analysis
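
To make the task setting in the abstract concrete, the following is a minimal sketch of text-to-image retrieval over a gallery that mixes aerial and ground views. It is not the TAG-CLIP method: the embeddings are hand-picked toy vectors, the gallery entries and the `retrieve` helper are hypothetical, and plain cosine-similarity ranking stands in for whatever scoring the authors actually use. In a real system the vectors would come from trained text and image encoders.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def retrieve(text_emb, gallery, top_k=3):
    """Rank gallery entries by similarity to the text embedding (hypothetical helper)."""
    scored = [(cosine(text_emb, item["emb"]), item) for item in gallery]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [item for _, item in scored[:top_k]]


# Toy gallery (assumption): two identities, each seen from a ground and an aerial camera.
# The aerial embeddings are deliberately shifted to mimic the viewpoint discrepancy.
gallery = [
    {"id": "p1_ground", "person": 1, "view": "ground", "emb": [0.9, 0.1, 0.0]},
    {"id": "p1_aerial", "person": 1, "view": "aerial", "emb": [0.7, 0.3, 0.1]},
    {"id": "p2_ground", "person": 2, "view": "ground", "emb": [0.1, 0.9, 0.2]},
    {"id": "p2_aerial", "person": 2, "view": "aerial", "emb": [0.2, 0.7, 0.4]},
]

# Toy text embedding (assumption) for a description of person 1.
query = [1.0, 0.2, 0.0]

ranked = retrieve(query, gallery, top_k=2)
for item in ranked:
    print(item["id"], item["view"])
```

In this toy setup both views of person 1 rank above both views of person 2, which is the behavior a TAG-PR system aims for: a single textual description should retrieve the same identity regardless of whether the image was captured from the air or from the ground.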

📄 Full Content

📸 Image Gallery

Reference

This content is AI-processed based on open access ArXiv data.