Toward Robust EEG-based Intention Decoding during Misarticulated Speech in Dysarthria

Reading time: 2 minutes

...

📝 Original Info

- Title: Toward Robust EEG-based Intention Decoding during Misarticulated Speech in Dysarthria

- ArXiv ID: 2511.07895

- Date: 2025-11-11

- Authors: Not provided in the source data; use the author names as listed in the original paper.

📝 Abstract

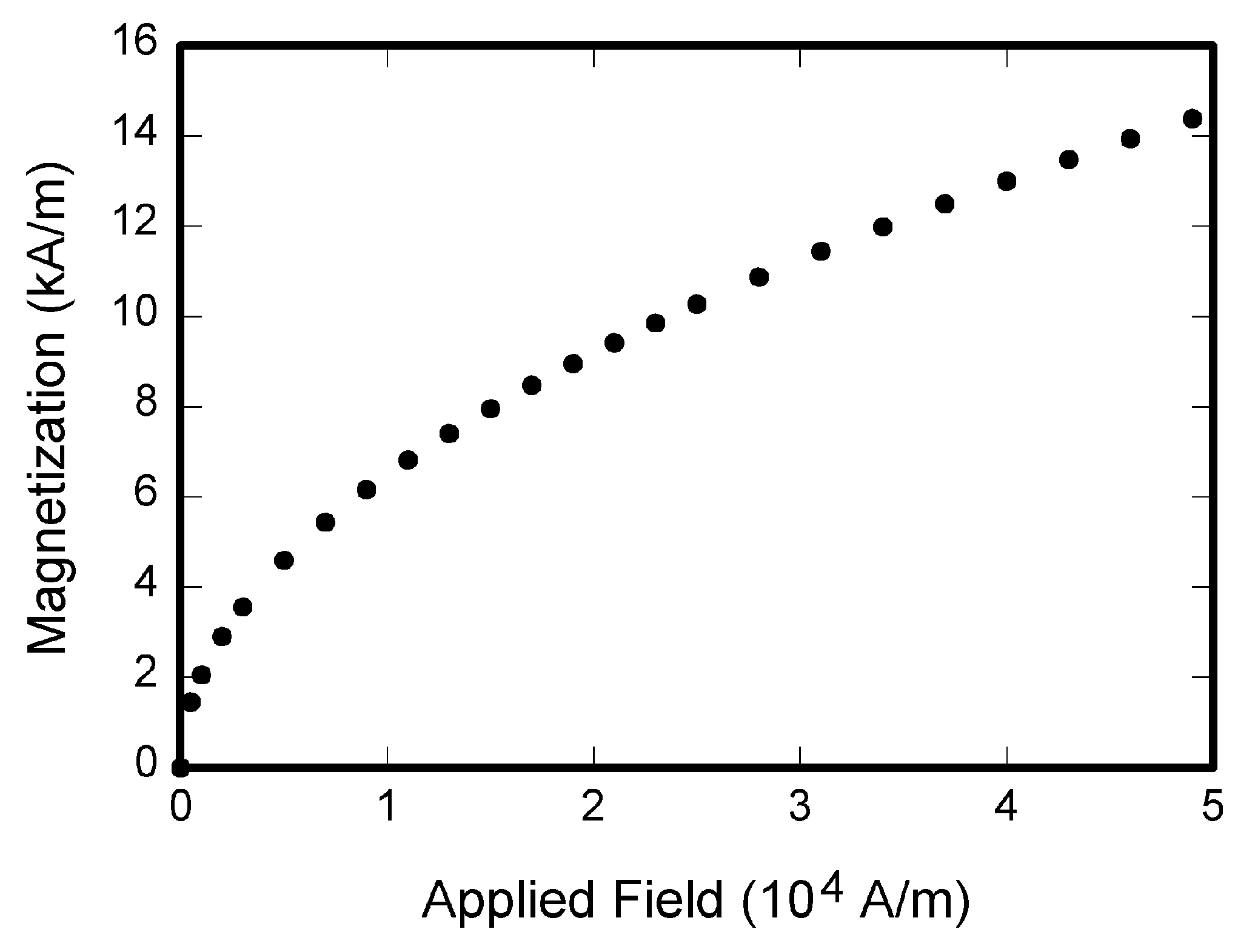

Dysarthria impairs motor control of speech, often resulting in reduced intelligibility and frequent misarticulations. Although interest in brain-computer interface technologies is growing, electroencephalogram (EEG)-based communication support for individuals with dysarthria remains limited. To address this gap, we recorded EEG data from one participant with dysarthria during a Korean automatic speech task and labeled each trial as correct or misarticulated. Spectral analysis revealed that misarticulated trials exhibited elevated frontal-central delta and alpha power, along with reduced temporal gamma activity. Building on these observations, we developed a soft multitask learning framework designed to suppress these nonspecific spectral responses and incorporated a maximum mean discrepancy-based alignment module to enhance class discrimination while minimizing domain-related variability. The proposed model achieved F1-scores of 52.7% for correct and 41.4% for misarticulated trials, an improvement of 2% and 11% over the baseline, demonstrating more stable intention decoding even under articulation errors. These results highlight the potential of EEG-based assistive systems for communication in language-impaired individuals.

💡 Deep Analysis
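
The spectral finding in the abstract compares band power between correct and misarticulated trials. Below is a minimal numpy sketch of one common way to estimate such band power, assuming a Hann-windowed periodogram per trial and the band edges in `BANDS`. The band limits, sampling rate, and the helper names `BANDS`, `band_power`, and `mean_band_powers` are hypothetical and not taken from the paper, which may use a different estimator (for example, Welch's method) and its own channel grouping.

```python
import numpy as np

# Hypothetical band edges in Hz (assumed for illustration; not from the paper).
BANDS = {"delta": (1.0, 4.0), "alpha": (8.0, 13.0), "gamma": (30.0, 45.0)}


def band_power(signal: np.ndarray, fs: float, band: tuple) -> float:
    """Mean Hann-windowed periodogram power of a 1-D signal in [low, high) Hz."""
    x = signal - signal.mean()                  # remove the DC offset
    window = np.hanning(x.size)                 # taper to reduce spectral leakage
    spectrum = np.fft.rfft(x * window)
    # Power spectral density; one-sided doubling is omitted because only
    # relative comparisons between bands and trial groups are intended.
    psd = np.abs(spectrum) ** 2 / (fs * np.sum(window ** 2))
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    low, high = band
    mask = (freqs >= low) & (freqs < high)
    return float(psd[mask].mean())


def mean_band_powers(trials: np.ndarray, fs: float) -> dict:
    """Average each band's power over trials shaped (n_trials, n_samples)."""
    return {
        name: float(np.mean([band_power(trial, fs, band) for trial in trials]))
        for name, band in BANDS.items()
    }
```

In use, one would call `mean_band_powers` separately on the correct and the misarticulated trials of a channel group (for example, frontal-central electrodes for delta and alpha, temporal electrodes for gamma) and compare the resulting dictionaries.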
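
The alignment module is described only as being based on maximum mean discrepancy (MMD). As a hedged illustration of the underlying quantity, the sketch below computes the standard biased estimate of squared MMD between two feature sets with a Gaussian (RBF) kernel, MMD^2 = mean k(x, x') + mean k(y, y') - 2 mean k(x, y). The kernel choice, the bandwidth `sigma`, and the names `rbf_kernel` and `mmd2_biased` are assumptions; how the paper defines its domains and weights this term is not stated in the abstract.

```python
import numpy as np


def rbf_kernel(a: np.ndarray, b: np.ndarray, sigma: float) -> np.ndarray:
    """Gaussian kernel matrix k(a_i, b_j) = exp(-||a_i - b_j||^2 / (2 sigma^2))."""
    sq_dists = (
        np.sum(a ** 2, axis=1)[:, None]
        + np.sum(b ** 2, axis=1)[None, :]
        - 2.0 * a @ b.T
    )
    sq_dists = np.maximum(sq_dists, 0.0)  # clip tiny negative round-off values
    return np.exp(-sq_dists / (2.0 * sigma ** 2))


def mmd2_biased(x: np.ndarray, y: np.ndarray, sigma: float = 1.0) -> float:
    """Biased (V-statistic) estimate of squared MMD between samples x and y.

    x and y are feature matrices shaped (n_samples, n_features).
    """
    k_xx = rbf_kernel(x, x, sigma).mean()
    k_yy = rbf_kernel(y, y, sigma).mean()
    k_xy = rbf_kernel(x, y, sigma).mean()
    return float(k_xx + k_yy - 2.0 * k_xy)
```

In a training loop, a differentiable version of this term (for example, written in a deep learning framework) would typically be added to the classification loss with a tunable weight, pulling the feature distributions of the two domains toward each other.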
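
The abstract reports one F1-score for correct trials and one for misarticulated trials. Assuming these are macro-averaged F1-scores over intention classes, computed separately on each trial subset (an interpretation, not confirmed by the abstract), they could be obtained as in this pure-Python sketch. `macro_f1` and `f1_by_articulation` are hypothetical helper names; per-class F1 uses the identity F1 = 2TP / (2TP + FP + FN).

```python
from typing import Sequence


def macro_f1(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Unweighted mean of per-class F1 = 2TP / (2TP + FP + FN)."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = []
    for c in labels:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores) if scores else 0.0


def f1_by_articulation(
    y_true: Sequence[int], y_pred: Sequence[int], misarticulated: Sequence[bool]
) -> dict:
    """Macro-F1 computed separately on correct and misarticulated trials."""
    groups = {"correct": ([], []), "misarticulated": ([], [])}
    for t, p, m in zip(y_true, y_pred, misarticulated):
        key = "misarticulated" if m else "correct"
        groups[key][0].append(t)
        groups[key][1].append(p)
    return {key: macro_f1(ts, ps) for key, (ts, ps) in groups.items()}
```

Splitting the evaluation this way makes it visible whether a model degrades specifically on misarticulated trials, which is the comparison the reported 52.7% and 41.4% figures are meant to capture.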

This content was AI-processed from open-access arXiv data.