Adapting Large Language Models to Emerging Cybersecurity using Retrieval Augmented Generation

📝 Original Info

- Title: Adapting Large Language Models to Emerging Cybersecurity using Retrieval Augmented Generation

- ArXiv ID: 2510.27080

- Date: 2025-10-31

- Authors: Author information was not provided with the paper data. (Author names and affiliations need to be verified and added.)

📝 Abstract

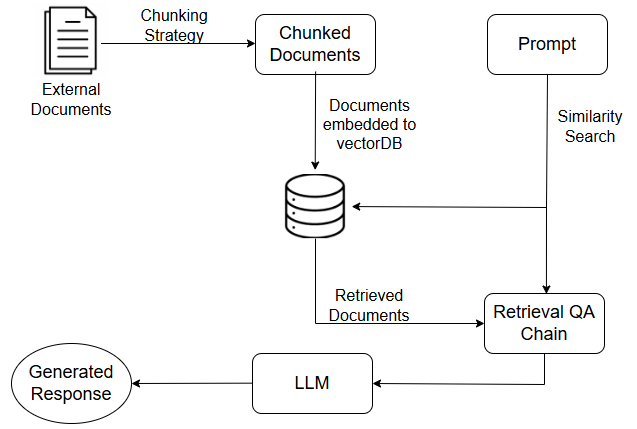

Security applications increasingly rely on large language models (LLMs) for cyber threat detection; however, their opaque reasoning often limits trust, particularly in decisions that require domain-specific cybersecurity knowledge. Because security threats evolve rapidly, LLMs must not only recall historical incidents but also adapt to emerging vulnerabilities and attack patterns. Retrieval-Augmented Generation (RAG) has demonstrated effectiveness in general LLM applications, but its potential for cybersecurity remains underexplored. In this work, we introduce a RAG-based framework designed to contextualize cybersecurity data and enhance LLM accuracy in knowledge retention and temporal reasoning. Using external datasets and the Llama-3-8B-Instruct model, we evaluate a baseline RAG pipeline and an optimized hybrid retrieval approach, and we compare them across multiple performance metrics. Our findings highlight the promise of hybrid retrieval in strengthening the adaptability and reliability of LLMs for cybersecurity tasks.

💡 Deep Analysis
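
The abstract contrasts a baseline RAG pipeline with a "hybrid retrieval" approach but does not say how the hybrid retriever is built. A common reading of the term is to combine a lexical ranker with a vector-similarity ranker and fuse their rankings. The sketch below illustrates that idea only, under loud assumptions: BM25 stands in for the lexical side, cosine similarity over character trigrams stands in for a learned embedding model, and reciprocal rank fusion (RRF) merges the two. The advisory texts, function names, and parameter values are all hypothetical and are not taken from the paper.

```python
import math
from collections import Counter

# Hypothetical mini-corpus; a real system would index security advisories.
DOCS = [
    "Advisory A: buffer overflow in an image parser allows remote code execution",
    "Advisory B: phishing campaign targets payroll portals with fake pages",
    "Advisory C: SQL injection in a web form exposes customer records",
]

def tokenize(text):
    """Lowercase whitespace tokenizer (deliberately simplistic)."""
    return text.lower().split()

def bm25_scores(query, docs, k1=1.5, b=0.75):
    """Score every document against the query with Okapi BM25."""
    toks = [tokenize(d) for d in docs]
    avg_len = sum(len(t) for t in toks) / len(toks)
    df = Counter(term for t in toks for term in set(t))  # document frequency
    n = len(docs)
    scores = []
    for t in toks:
        tf = Counter(t)
        score = 0.0
        for term in tokenize(query):
            if term not in tf:
                continue
            idf = math.log(1 + (n - df[term] + 0.5) / (df[term] + 0.5))
            norm = tf[term] + k1 * (1 - b + b * len(t) / avg_len)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores

def trigram_vector(text, n=3):
    """Character n-gram counts: an assumed stand-in for a dense embedding."""
    padded = f" {text.lower()} "
    return Counter(padded[i:i + n] for i in range(len(padded) - n + 1))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(v * b[k] for k, v in a.items() if k in b)
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0

def ranks(scores):
    """Map document index -> 1-based rank (best score gets rank 1)."""
    order = sorted(range(len(scores)), key=lambda i: -scores[i])
    return {doc: rank for rank, doc in enumerate(order, start=1)}

def hybrid_retrieve(query, docs, top_k=2, rrf_k=60):
    """Fuse lexical and vector rankings with reciprocal rank fusion."""
    lexical = ranks(bm25_scores(query, docs))
    qv = trigram_vector(query)
    vector = ranks([cosine(qv, trigram_vector(d)) for d in docs])
    fused = {i: 1 / (rrf_k + lexical[i]) + 1 / (rrf_k + vector[i])
             for i in range(len(docs))}
    best = sorted(fused, key=fused.get, reverse=True)[:top_k]
    return [docs[i] for i in best]

def build_prompt(query, docs):
    """Prepend retrieved context to the question before calling an LLM."""
    context = "\n".join(f"- {d}" for d in hybrid_retrieve(query, docs))
    return f"Context:\n{context}\n\nQuestion: {query}"
```

Calling `build_prompt("sql injection in web form", DOCS)` yields a prompt whose context section leads with the retrieved advisories; that string would then be passed to the generator model. Rank-based fusion is used here because it avoids having to normalize BM25 and cosine scores onto a shared scale; weighted score interpolation is another option the paper may or may not have used.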

Reference

This content is AI-processed based on open access ArXiv data.