SSCL-BW: Sample-Specific Clean-Label Backdoor Watermarking for Dataset Ownership Verification

📝 Original Info

- Title: SSCL-BW: Sample-Specific Clean-Label Backdoor Watermarking for Dataset Ownership Verification

- ArXiv ID: 2510.26420

- Date: 2025-10-30

- Authors: Author information stated in the paper was not provided.

📝 Abstract

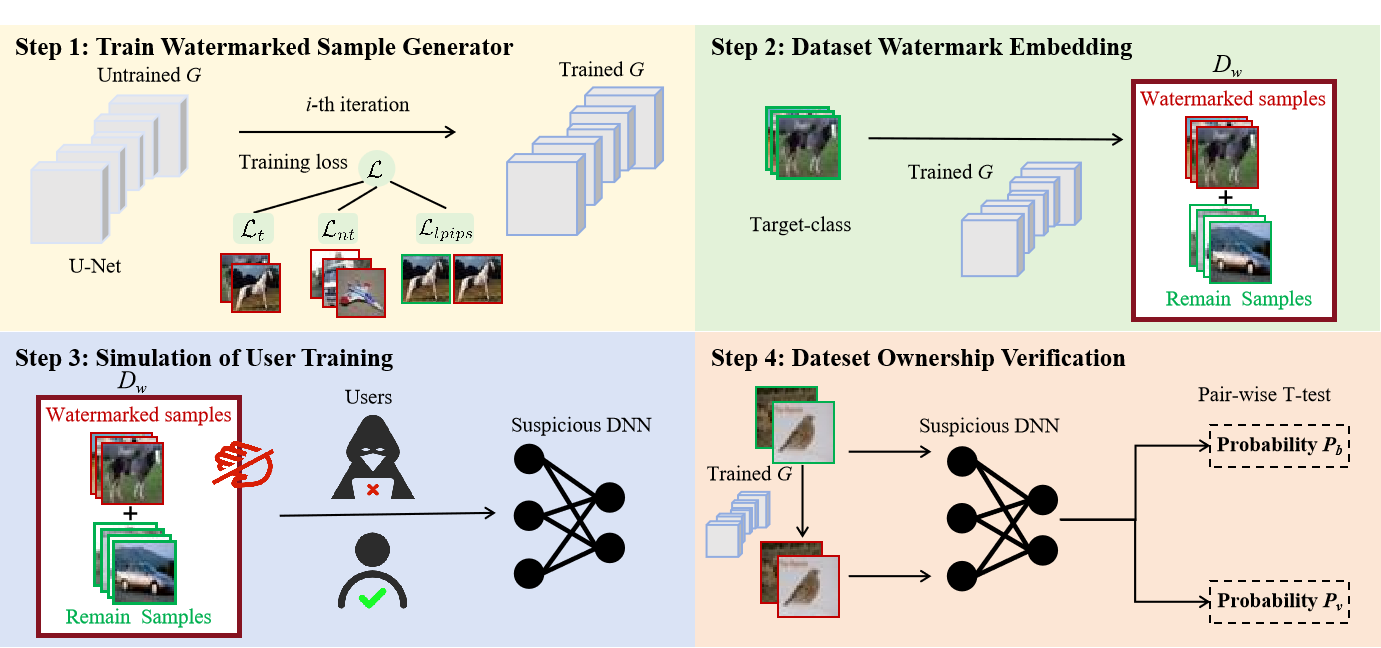

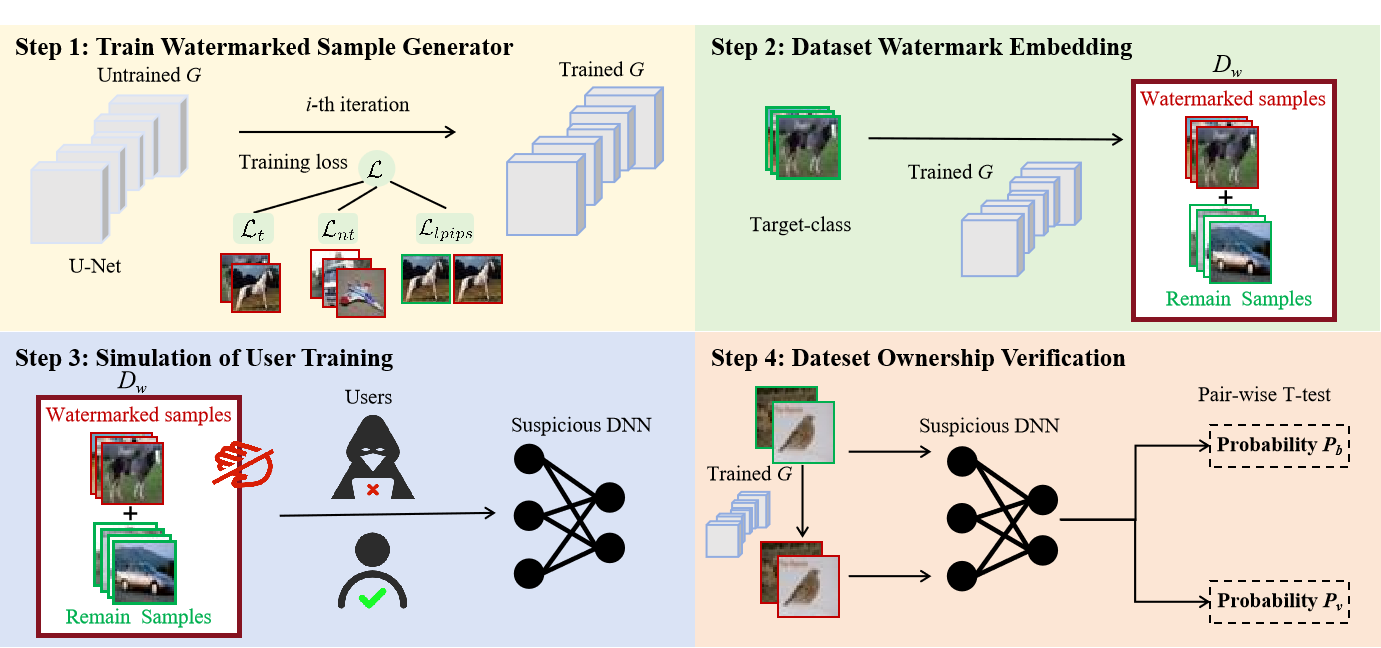

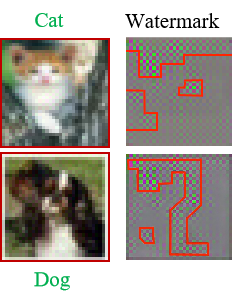

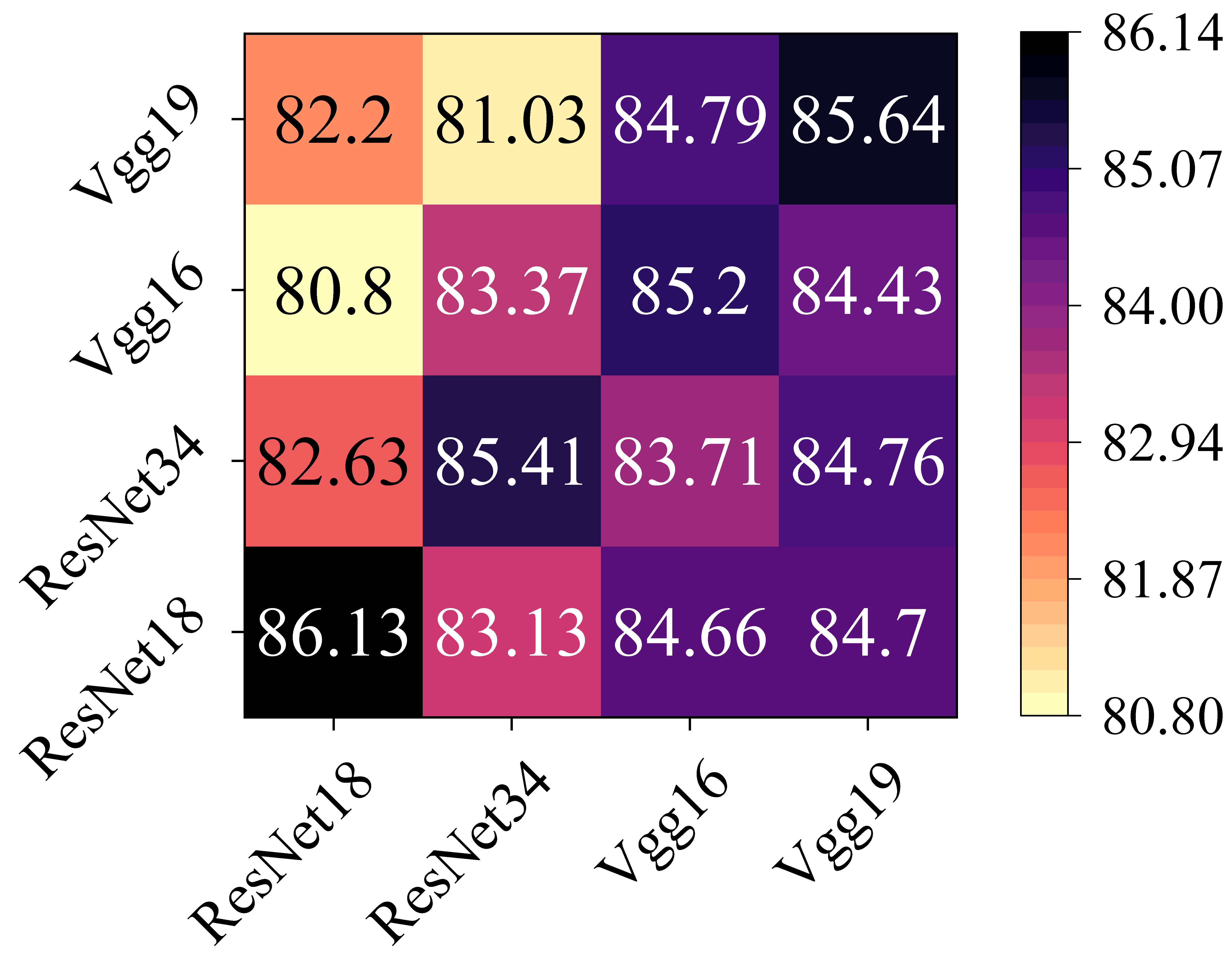

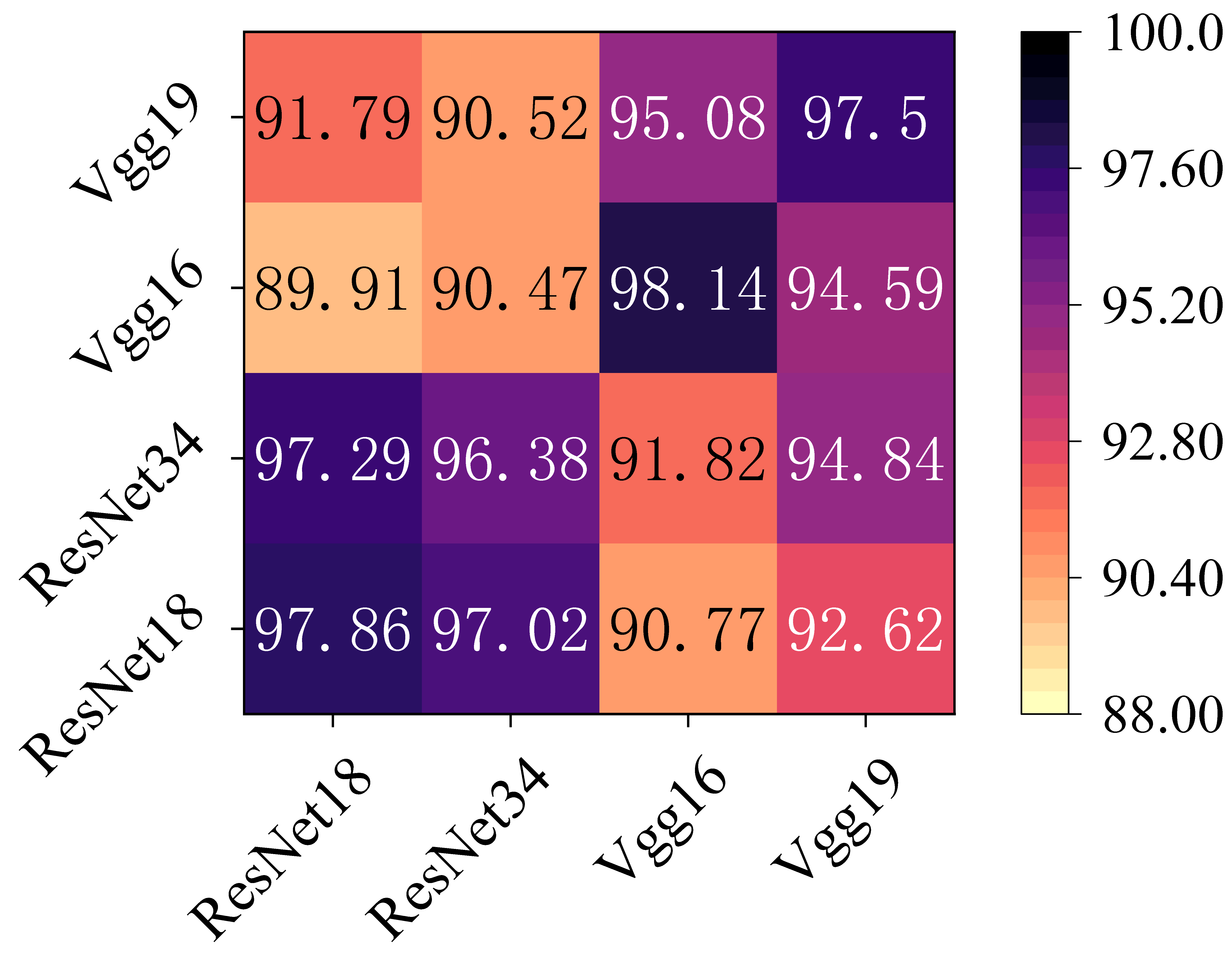

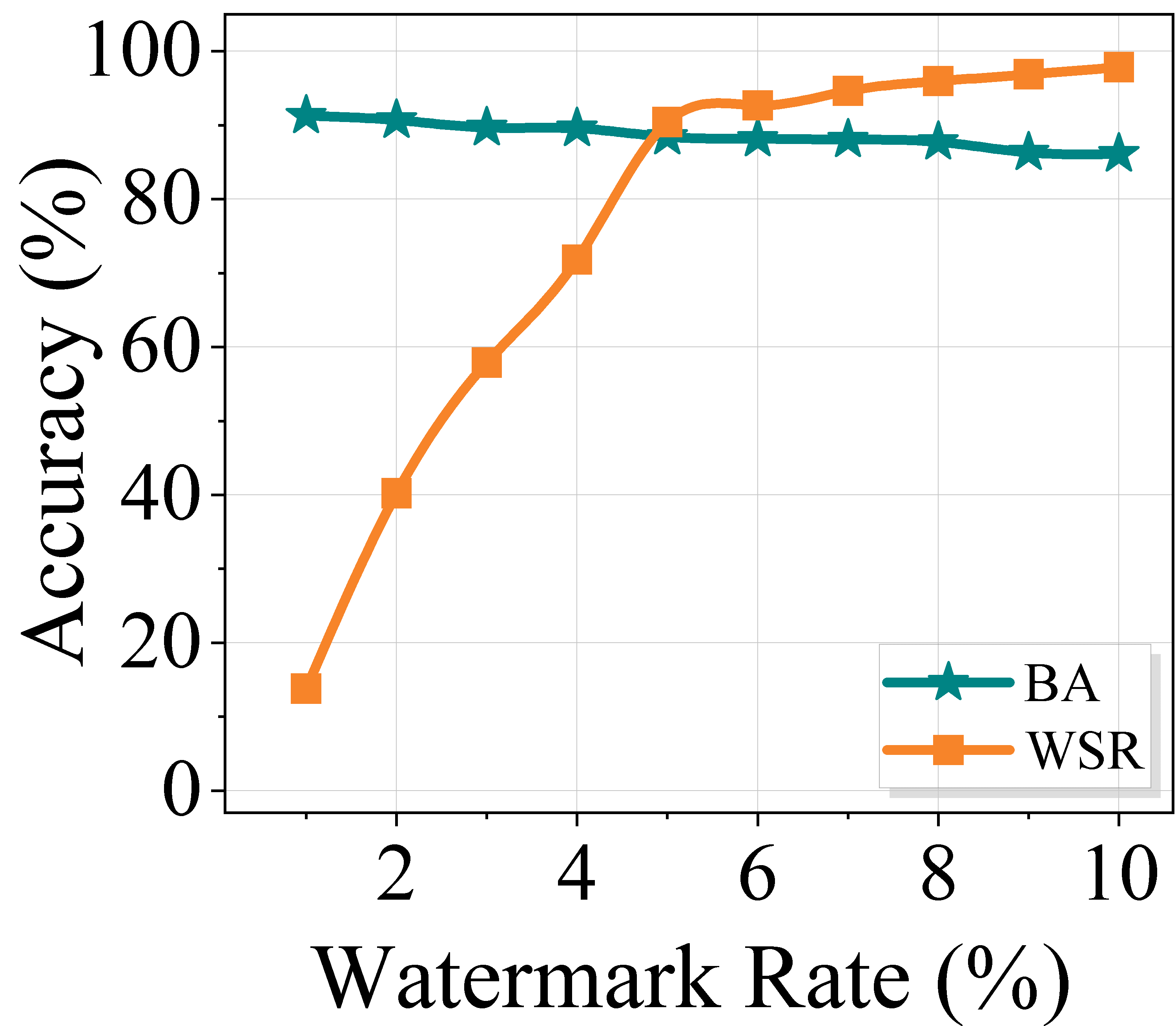

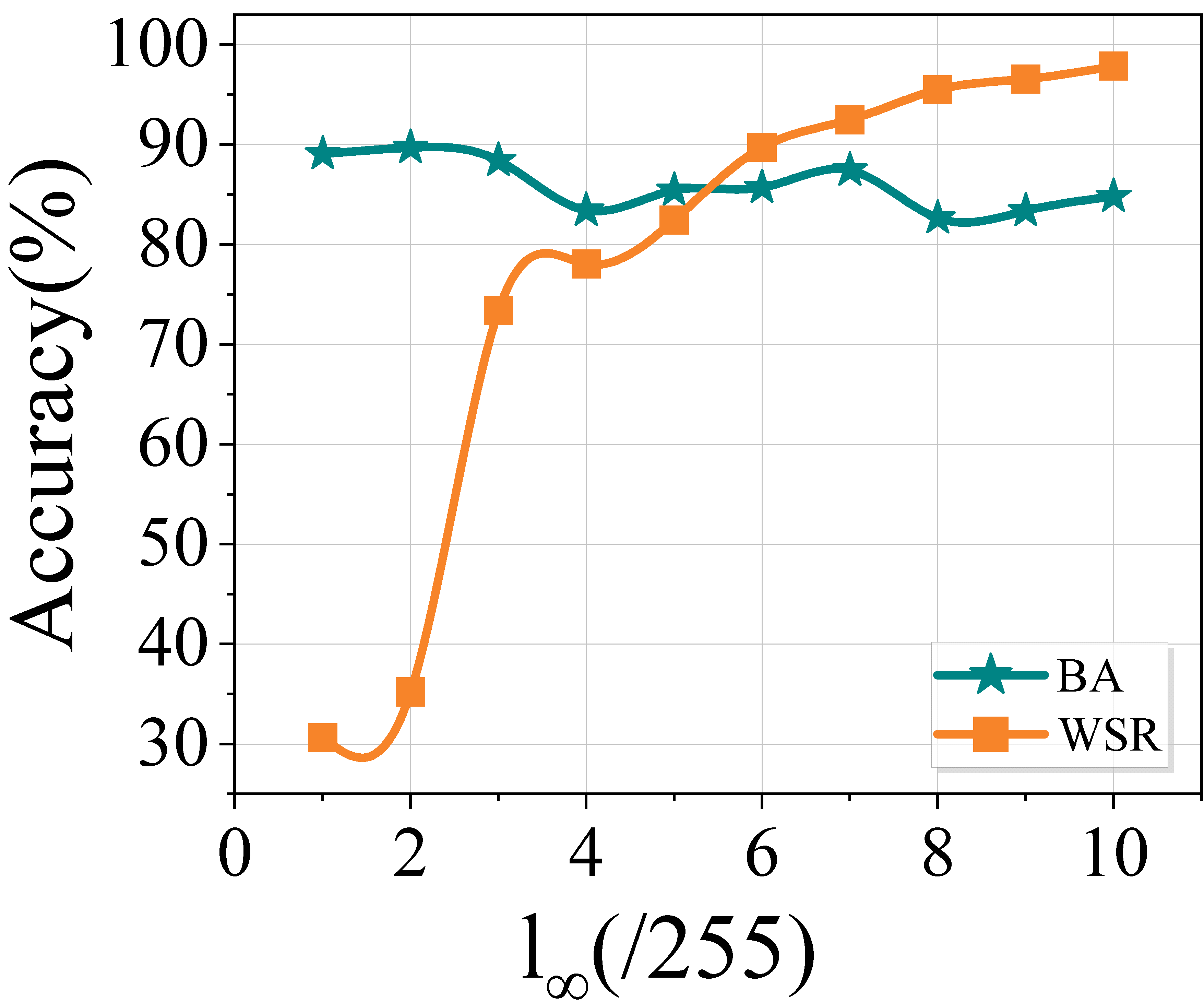

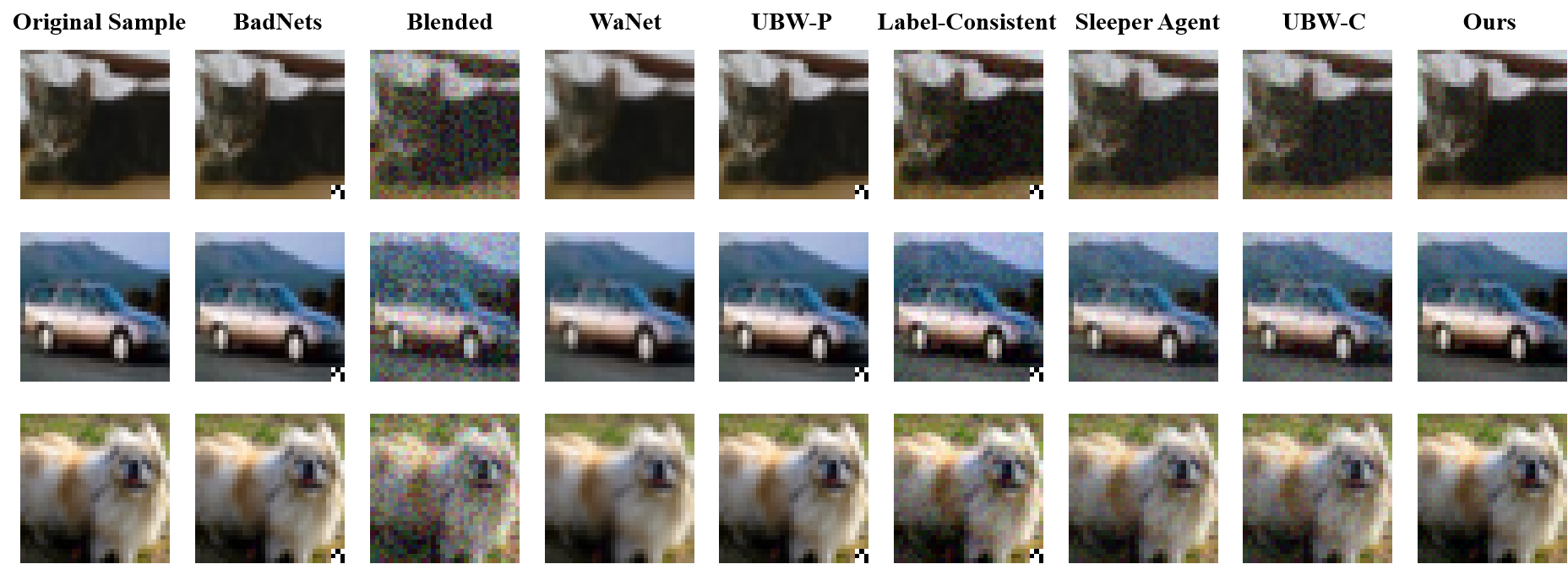

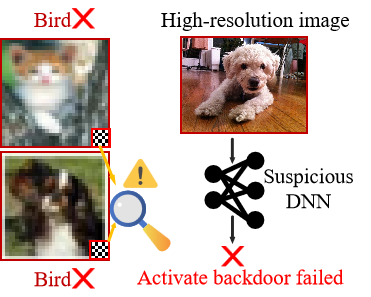

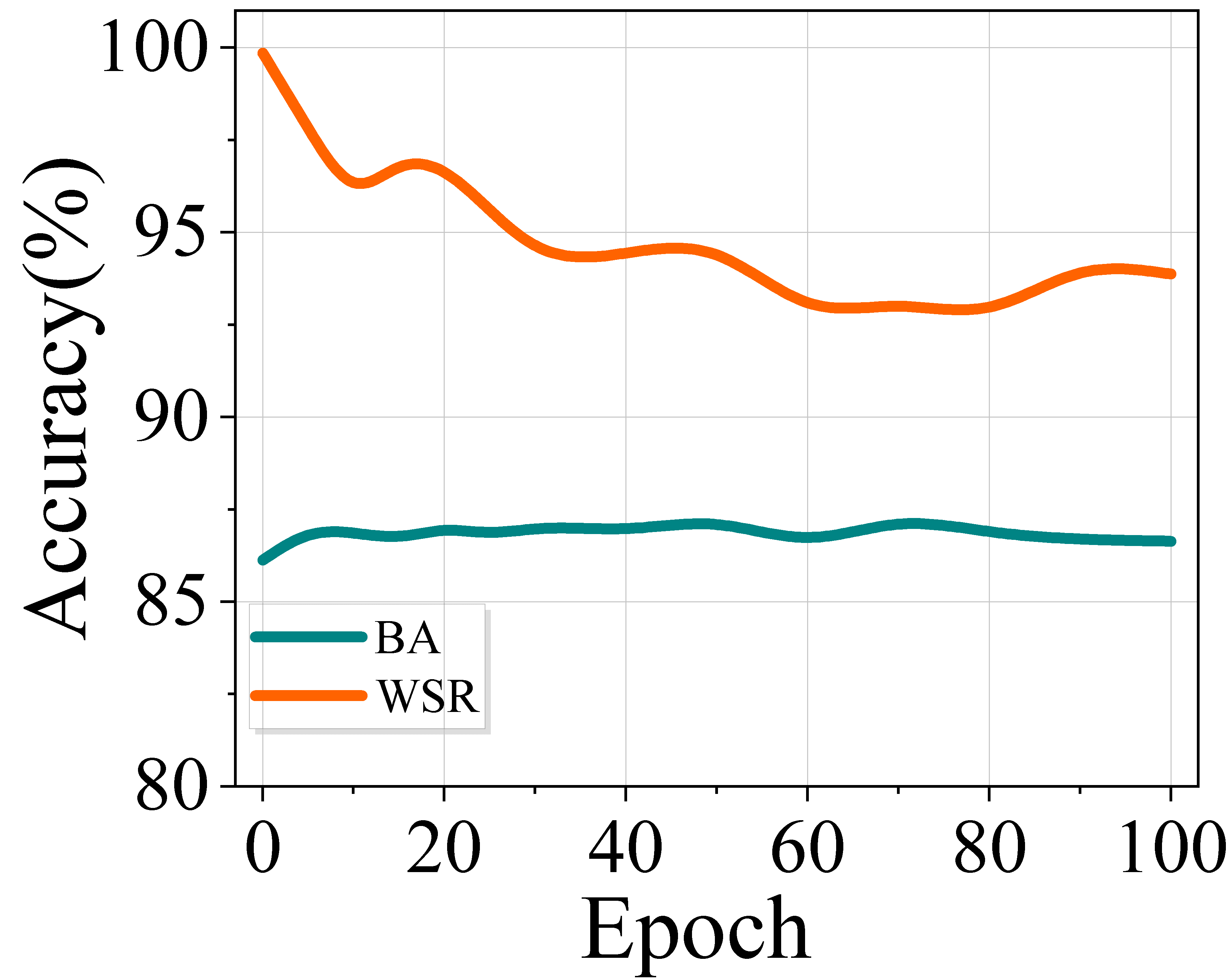

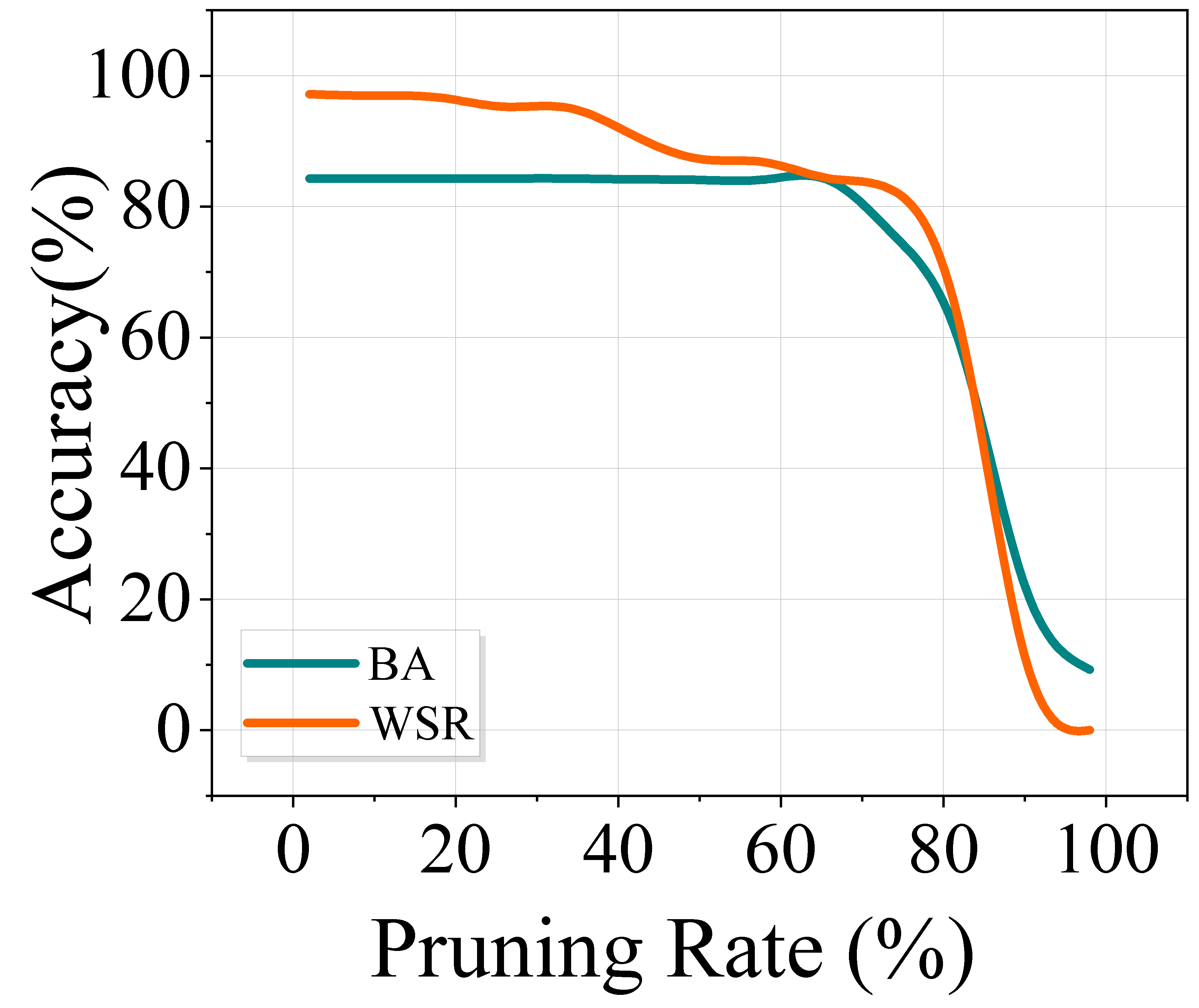

The rapid advancement of deep neural networks (DNNs) relies heavily on large-scale, high-quality datasets. However, unauthorized commercial use of these datasets severely violates the intellectual property rights of dataset owners. Existing backdoor-based dataset ownership verification methods suffer from inherent limitations: poison-label watermarks are easily detected because of label inconsistencies, while clean-label watermarks are technically complex and fail on high-resolution images. Moreover, both approaches employ static watermark patterns that are vulnerable to detection and removal. To address these issues, this paper proposes sample-specific clean-label backdoor watermarking (SSCL-BW). By training a U-Net-based watermarked sample generator, the method generates a unique watermark for each sample, fundamentally overcoming the vulnerability of static watermark patterns. The core innovation is a composite loss function with three components: a target sample loss that ensures watermark effectiveness, a non-target sample loss that guarantees trigger reliability, and a perceptual similarity loss that maintains visual imperceptibility. During ownership verification, black-box testing is used to check whether a suspicious model exhibits the predefined backdoor behavior. Extensive experiments on benchmark datasets demonstrate the effectiveness of the proposed method and its robustness against potential watermark removal attacks.

💡 Deep Analysis
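The black-box verification step described in the abstract can be illustrated with a minimal sketch. It only queries a model for predicted labels and compares how often benign and watermarked inputs land on the target label. Everything here is an assumption made for illustration and is not taken from the paper: the names `target_rate` and `exhibits_backdoor`, the `margin` threshold, the toy `(true_class, has_watermark)` samples, and the two toy models. The paper's actual decision rule may differ, for example by using a statistical hypothesis test.

```python
from typing import Callable, Sequence, Tuple

# Toy stand-in for an image: (true_class, has_watermark). Hypothetical.
Sample = Tuple[int, bool]
# A black-box model only exposes "input -> predicted label".
Model = Callable[[Sample], int]

TARGET_LABEL = 0  # hypothetical target class chosen by the dataset owner


def target_rate(model: Model, samples: Sequence[Sample], target_label: int) -> float:
    """Fraction of samples that the model assigns to the target label."""
    if not samples:
        raise ValueError("need at least one sample")
    hits = sum(1 for s in samples if model(s) == target_label)
    return hits / len(samples)


def exhibits_backdoor(
    model: Model,
    benign: Sequence[Sample],
    watermarked: Sequence[Sample],
    target_label: int,
    margin: float = 0.5,  # assumed threshold, not from the paper
) -> bool:
    """Flag the model if watermarking raises the target-label rate by more than `margin`."""
    base = target_rate(model, benign, target_label)
    triggered = target_rate(model, watermarked, target_label)
    return (triggered - base) > margin


def backdoored_model(sample: Sample) -> int:
    """Toy model trained on the watermarked dataset: the watermark flips the label."""
    true_class, has_watermark = sample
    return TARGET_LABEL if has_watermark else true_class


def clean_model(sample: Sample) -> int:
    """Toy model that never saw the watermark: it ignores it."""
    true_class, _ = sample
    return true_class


# Non-target-class samples, with and without the (toy) watermark.
benign = [(c, False) for c in (1, 2, 3, 1, 2)]
watermarked = [(c, True) for c in (1, 2, 3, 1, 2)]

print(exhibits_backdoor(backdoored_model, benign, watermarked, TARGET_LABEL))
print(exhibits_backdoor(clean_model, benign, watermarked, TARGET_LABEL))
```

Comparing against the benign rate, rather than looking at the watermarked rate alone, keeps a model that simply over-predicts the target class from being flagged.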

📄 Full Content

📸 Image Gallery

Reference

This content is AI-processed based on open access ArXiv data.