Subgraph Federated Learning via Spectral Methods

📝 Original Info

- Title: Subgraph Federated Learning via Spectral Methods

- ArXiv ID: 2510.25657

- Date: 2025-10-29

- Authors: Not provided

📝 Abstract

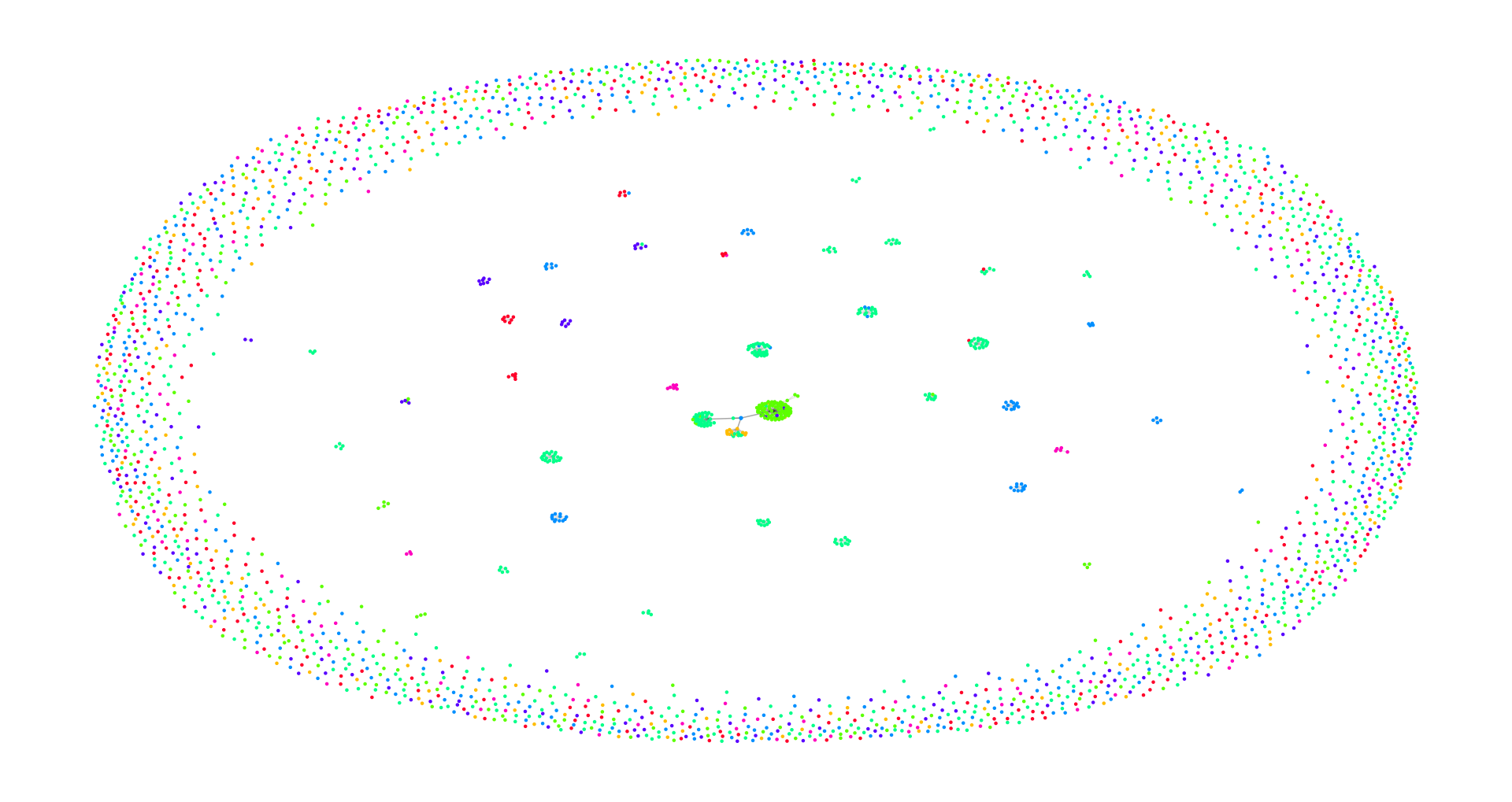

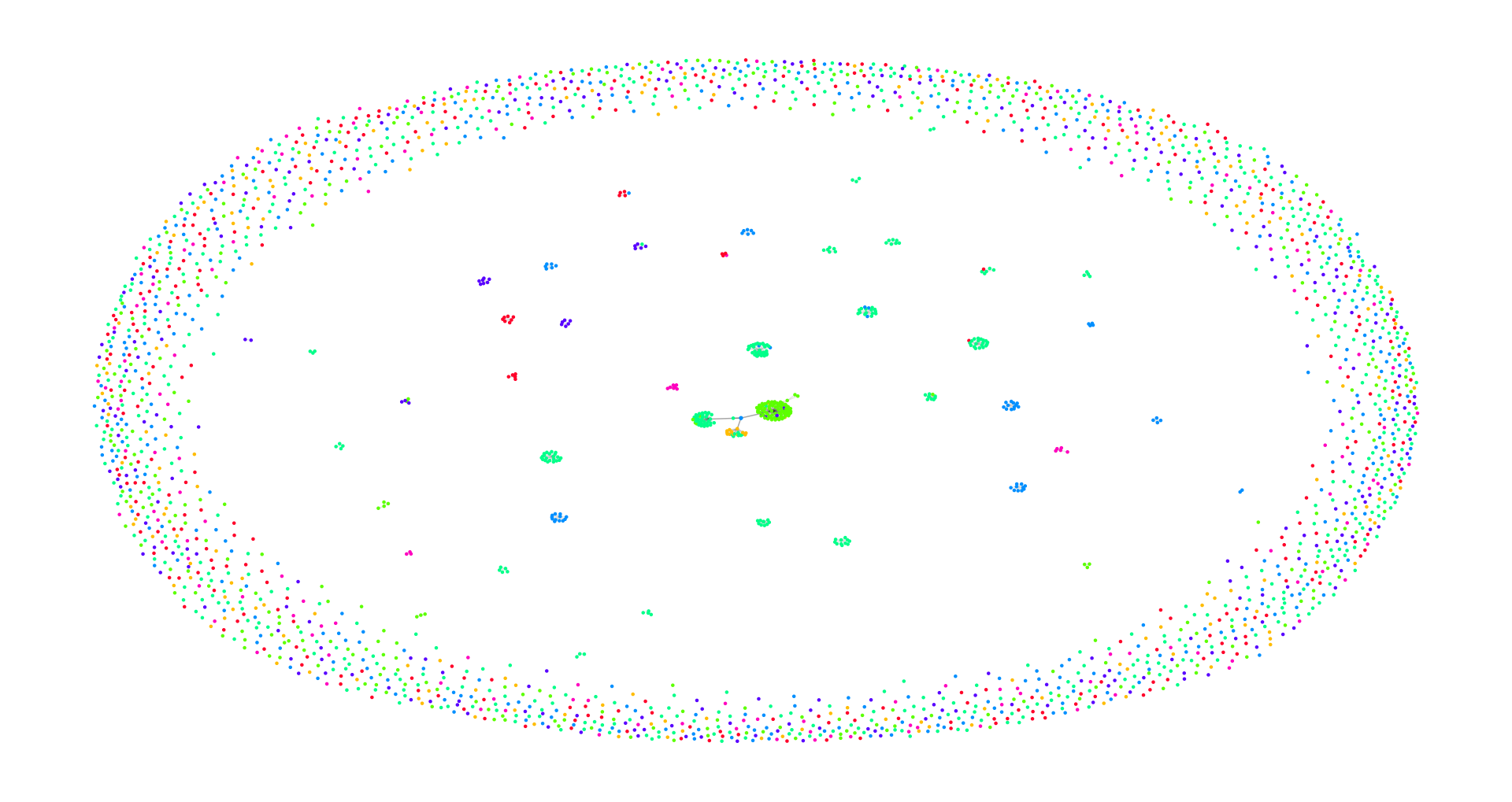

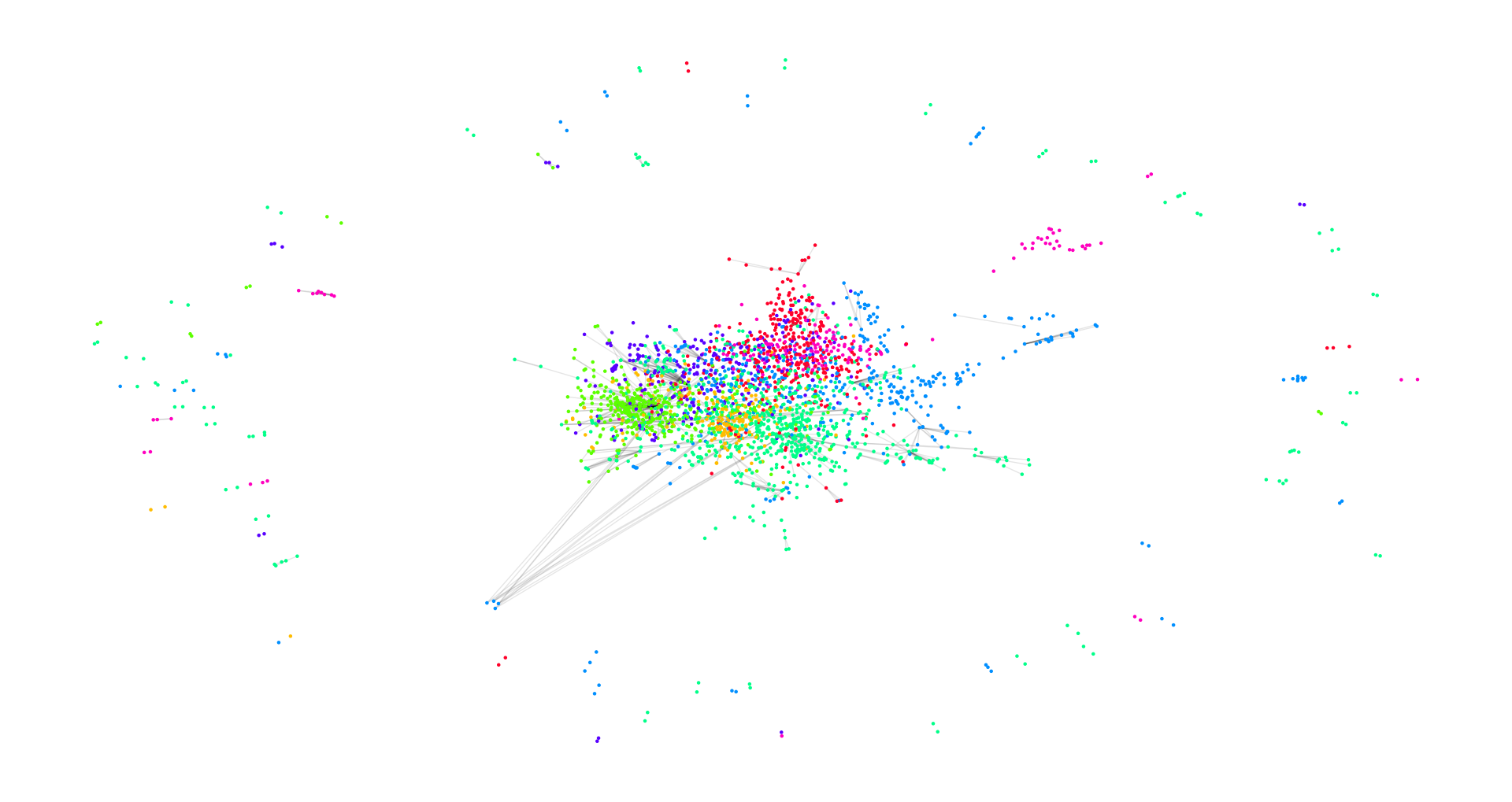

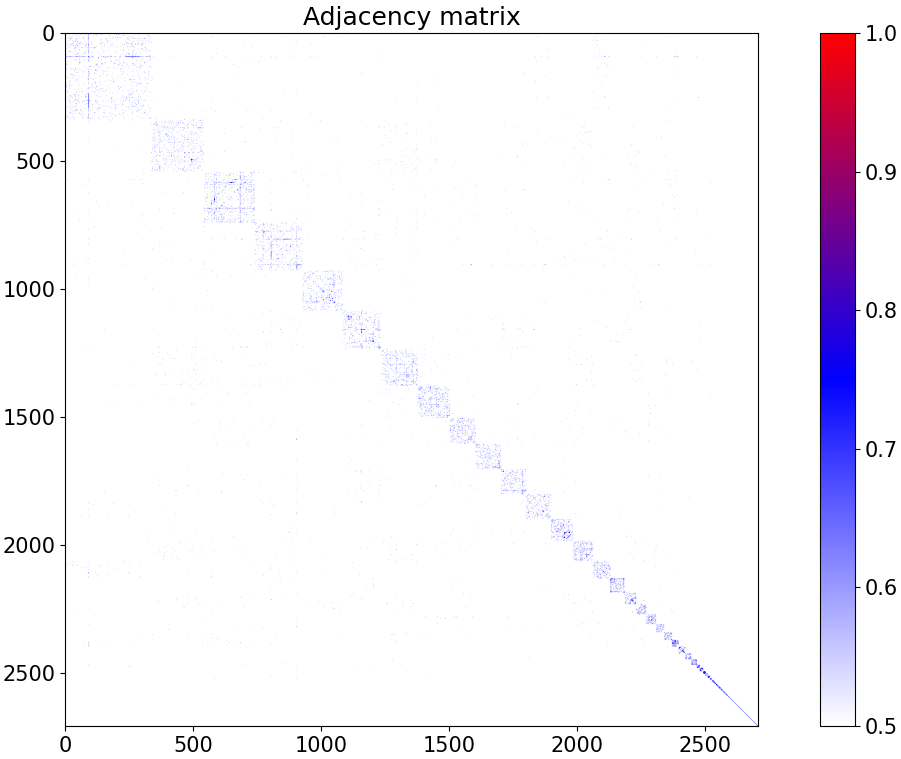

We consider the problem of federated learning (FL) with graph-structured data distributed across multiple clients. In particular, we address the prevalent scenario of interconnected subgraphs, where interconnections between clients significantly influence the learning process. Existing approaches suffer from critical limitations: they either require the exchange of sensitive node embeddings, which poses privacy risks, or rely on computationally intensive steps, which hinders scalability. To tackle these challenges, we propose FedLap, a novel framework that leverages global structure information via Laplacian smoothing in the spectral domain to effectively capture inter-node dependencies while ensuring privacy and scalability. We provide a formal privacy analysis of FedLap, showing that it preserves privacy. Notably, FedLap is the first subgraph FL scheme with strong privacy guarantees. Extensive experiments on benchmark datasets demonstrate that FedLap achieves competitive or superior utility compared to existing techniques.
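As a rough illustration of the Laplacian smoothing mentioned in the abstract, the sketch below smooths a node signal `y` on a small graph by minimizing `||x - y||^2 + lam * x^T L x`, which amounts to solving `(I + lam * L) x = y`. In the spectral domain this is equivalent to scaling each Laplacian eigen-component of `y` by `1 / (1 + lam * mu_k)`, where `mu_k` is the corresponding eigenvalue. This is generic Laplacian smoothing and not the FedLap algorithm itself; the toy graph, the signal, the value of `lam`, and all function names are assumptions made for illustration.

```python
def laplacian(n, edges):
    """Combinatorial Laplacian L = D - A of an undirected, unweighted graph."""
    L = [[0.0] * n for _ in range(n)]
    for u, v in edges:
        L[u][u] += 1.0
        L[v][v] += 1.0
        L[u][v] -= 1.0
        L[v][u] -= 1.0
    return L


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x


def laplacian_smooth(L, y, lam):
    """Return the minimizer of ||x - y||^2 + lam * x^T L x.

    Setting the gradient to zero gives the linear system (I + lam * L) x = y.
    """
    n = len(y)
    A = [[(1.0 if i == j else 0.0) + lam * L[i][j] for j in range(n)]
         for i in range(n)]
    return solve(A, y)


def roughness(L, x):
    """Quadratic form x^T L x, the sum over edges of (x_u - x_v)^2."""
    n = len(x)
    return sum(x[i] * L[i][j] * x[j] for i in range(n) for j in range(n))


# Hypothetical toy example: a 5-node path graph with an oscillating signal.
edges = [(0, 1), (1, 2), (2, 3), (3, 4)]
L = laplacian(5, edges)
y = [1.0, -1.0, 1.0, -1.0, 1.0]
x = laplacian_smooth(L, y, lam=2.0)
```

Two properties follow from the formulation and can serve as sanity checks: a constant signal passes through unchanged, because the all-ones vector lies in the null space of `L`, and the smoothed signal `x` has a smaller `roughness` than `y` while keeping the same sum over nodes.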


This content is AI-processed based on open access ArXiv data.