Access to expert knowledge often requires real-time human communication. Digital tools improve access to information but rarely create the sense of connection needed for deep understanding. This study addresses this issue using Social Presence Theory, which explains how a feeling of "being together" enhances communication. An "Embodied Information Hub" is proposed as a new way to share knowledge through physical and conversational interaction. The prototype, Suzume-chan, is a small, soft AI agent running locally with a language model and retrieval-augmented generation (RAG). It learns from spoken explanations and responds through dialogue, reducing psychological distance and making knowledge sharing warmer and more human-centered.

In the modern era, people seek not only data but also the stories that lie behind knowledge, such as the passion of experts or the background of artworks. However, access to such stories has often required direct and synchronous communication with experts. Smartphone applications provide efficient access to information, but they tend to isolate users from their surroundings. They can deliver answers but do not create relationships.

This study examines the absence of such relationships. The quality of human communication has been described by Social Presence Theory [8], which explains how the sense that “someone is there” enriches mediated interaction. We ask what happens when this human-centered theory is applied to an AI agent with a physical body. This is the main question of our research.

Authors’ Contact Information: Maya Grace Torii, toriparu@digitalnature.slis.tsukuba.ac.jp, Doctoral Program in Informatics, University of Tsukuba, Tsukuba, Ibaraki, Japan; Takahito Murakami, takahito@digitalnature.slis.tsukuba.ac.jp, Doctoral Program in Informatics, University of Tsukuba, Tsukuba, Ibaraki, Japan; Shuka Koseki, s2430442@u.tsukuba.ac.jp, Doctoral Program in Nursing Science, University of Tsukuba, Tsukuba, Ibaraki, Japan; Yoichi Ochiai, Institute of Library, Information and Media Science, University of Tsukuba, Tsukuba, Ibaraki, Japan, wizard@slis.tsukuba.ac.jp.

Previous studies of robots such as Paro [7], Kismet [3], and iCub [5] have shown that physical robots can form emotional and social bonds with humans. These studies suggest that an agent can become a social partner rather than a mere tool.

We extend this possibility from the emotional domain to the intellectual one. Suzume-chan was intentionally designed with a soft and friendly appearance to explore this new type of relationship. Its warm, hand-sized form helps reduce psychological barriers and turns intellectual explanation into a calm and trustworthy conversation. The “Embodied Information Hub” proposed in this paper aims to explore a new form of social presence between humans and AI agents, and Suzume-chan represents its first implementation.

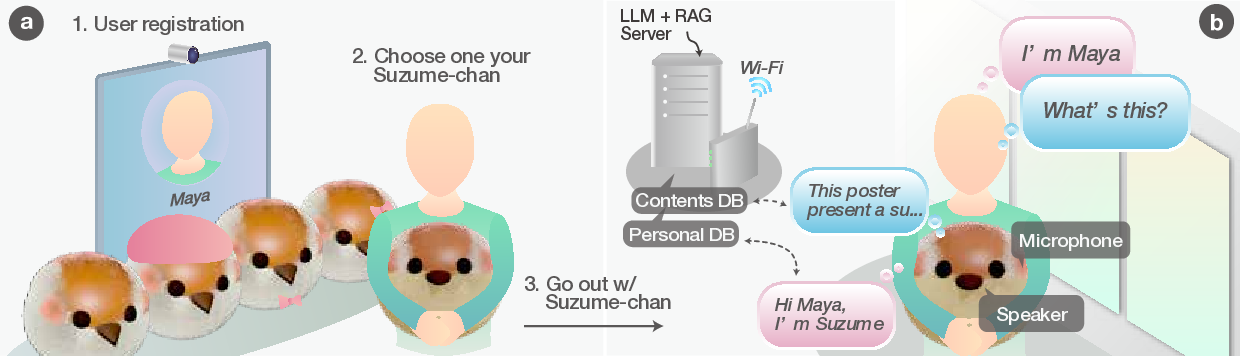

2 Method: Suzume-chan

2.1 System Overview

Suzume-chan consists of two components: a handheld agent used by visitors for conversation, and a host computer that performs high-load language processing. The two units are connected wirelessly via Wi-Fi, allowing the system to operate in a fully standalone environment.

2.1.1 Hardware. The agent body is covered with a soft plush exterior to provide a sense of psychological safety. Inside the body, a microphone captures the speaker’s voice and a speaker outputs Suzume-chan’s responses. The host computer is a Mac Studio equipped with 128 GB of unified memory. It runs open-source models locally, including a speech recognition model (e.g., Whisper [6]), large language models (LLM; e.g., Llama [10], gpt-oss-120b [2]), a vector database [9], and a speech synthesis engine. This configuration ensures both privacy protection and stable operation without dependence on an external network.

arXiv:2512.09932v1 [cs.AI] 29 Oct 2025

The dialogue engine is based on a Retrieval-Augmented Generation (RAG) framework [4]. During the input phase, the spoken explanations are transcribed and converted into vector representations, which are stored in the database. During the explanation phase, visitors’ questions are also vectorized, and the system searches for relevant information within the database. The retrieved results are included in the prompt for the language model, which generates natural and contextually accurate responses.
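The store-retrieve-prompt flow described above can be illustrated with a minimal sketch. It is not the authors' implementation: the bag-of-words `embed` function stands in for a neural embedding model, the in-memory `VectorStore` stands in for the vector database, and all names (`embed`, `cosine`, `VectorStore`, `build_prompt`) and the prompt wording are assumptions made for illustration. The final call to a language model is omitted.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding (assumption): a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class VectorStore:
    """Hypothetical in-memory stand-in for the vector database."""

    def __init__(self) -> None:
        self.items: list[tuple[Counter, str]] = []

    def add(self, text: str) -> None:
        # Input phase: vectorize a transcribed explanation and store it.
        self.items.append((embed(text), text))

    def search(self, query: str, k: int = 2) -> list[str]:
        # Explanation phase: vectorize the question and rank stored passages.
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[0]), reverse=True)
        return [text for _, text in ranked[:k]]


def build_prompt(question: str, passages: list[str]) -> str:
    """Place the retrieved passages in the prompt given to the language model."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


store = VectorStore()
store.add("Suzume-chan is a small plush agent that runs a language model locally.")
store.add("The host computer performs speech recognition and speech synthesis.")
store.add("Visitors start a conversation with a wake word.")

question = "How do visitors start a conversation?"
passages = store.search(question, k=1)
prompt = build_prompt(question, passages)
print(prompt)
```

In a real deployment the count vectors would be replaced by dense embeddings and the linear scan by an approximate nearest-neighbor index, but the control flow would stay the same.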

The interaction with Suzume-chan follows a simple two-phase process. In the input phase, the presenter explains their research content to Suzume-chan, which processes the spoken information, divides it into smaller chunks, and stores them as vector representations in the database. In the explanation phase, visitors can initiate a conversation by using a wake word (e.g., “Hey, Suzume-chan”) (Fig. 1(b)). When they ask questions such as “What is special about this research?”, Suzume-chan retrieves relevant information from the database, summarizes it, and provides a natural and accessible response.
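The two steps that frame this process, splitting a transcript into chunks and detecting the wake word, might look roughly as follows. This is a hedged sketch rather than the system's actual logic: the paper does not state the chunking rule or how the wake word is matched, so the sentence-based grouping, the `max_words` limit, the text-level prefix match, and the function names `chunk_transcript` and `extract_question` are all assumptions.

```python
import re
from typing import Optional

WAKE_WORD = "hey, suzume-chan"  # example wake word quoted in the text


def chunk_transcript(transcript: str, max_words: int = 30) -> list[str]:
    """Group whole sentences into chunks of at most max_words words.

    A single sentence longer than max_words becomes its own chunk.
    """
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", transcript.strip()) if s]
    chunks: list[str] = []
    current: list[str] = []
    count = 0
    for sentence in sentences:
        n = len(sentence.split())
        # Close the current chunk if adding this sentence would exceed the limit.
        if current and count + n > max_words:
            chunks.append(" ".join(current))
            current, count = [], 0
        current.append(sentence)
        count += n
    if current:
        chunks.append(" ".join(current))
    return chunks


def extract_question(utterance: str) -> Optional[str]:
    """Return the text after the wake word, or None if the wake word is absent."""
    text = utterance.strip()
    if not text.lower().startswith(WAKE_WORD):
        return None
    # Drop the wake word and any punctuation or spaces that follow it.
    return text[len(WAKE_WORD):].lstrip(" ,.!?")


chunks = chunk_transcript("One two three. Four five six. Seven eight.", max_words=6)
question = extract_question("Hey, Suzume-chan, what is special about this research?")
ignored = extract_question("What time is it?")
```

Keeping sentences intact within a chunk is one simple way to avoid cutting an explanation mid-thought; fixed-size or overlapping windows are common alternatives.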

The originality of this study lies in proposing the concept of an “Embodied Information Hub,” which expands the role of physical agents from providing emotional comfort to mediating expert knowledge. Conceptually, this work introduces a new type of agent that enables asynchronous mediation of expert knowledge. Technically, it implements a standalone dialogue system that combines a local LLM with a RAG framework to ensure both privacy and usability. Practically, it presents a working prototype that addresses real-world challenges in academic knowledge sharing.

We will conduct an empirical study with visitors at WISS 2025 in Japan [1]. Before the session, presenters will teach Suzume-chan about their own research topics and this project. Visitors will freely interact with the s
