A Penny for Your Thoughts: Decoding Speech from Inexpensive Brain Signals

📝 Original Info

- Title: A Penny for Your Thoughts: Decoding Speech from Inexpensive Brain Signals

- ArXiv ID: 2511.04691

- Date: 2025-10-28

- Authors: Not provided (the paper does not include author information)

📝 Abstract

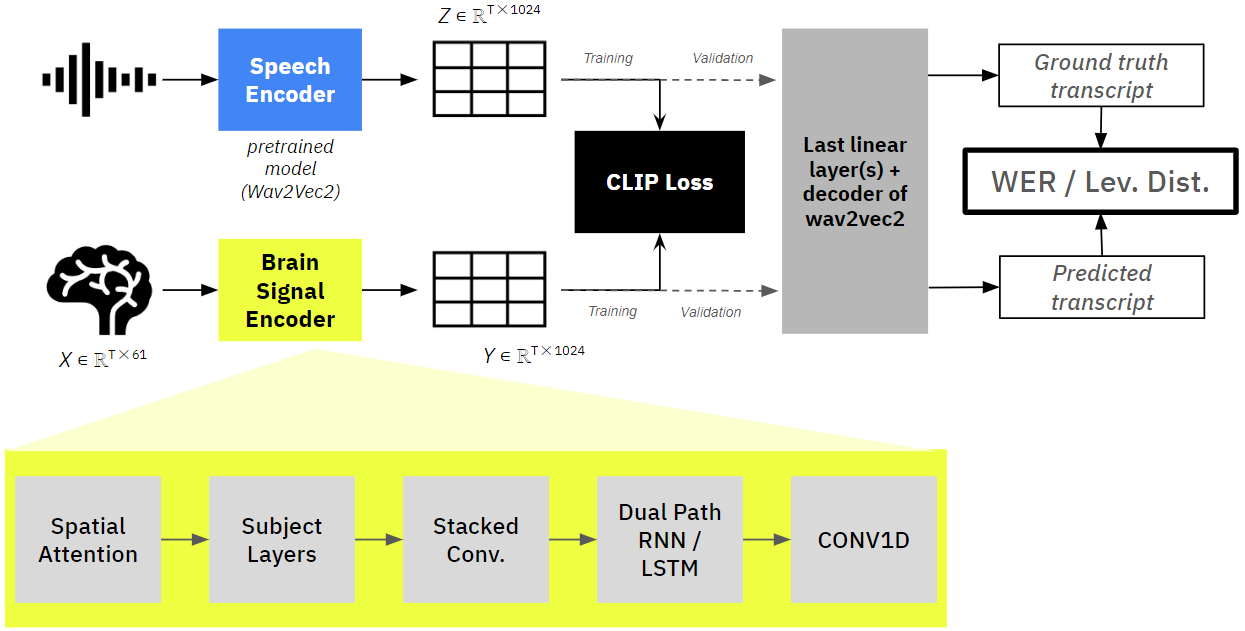

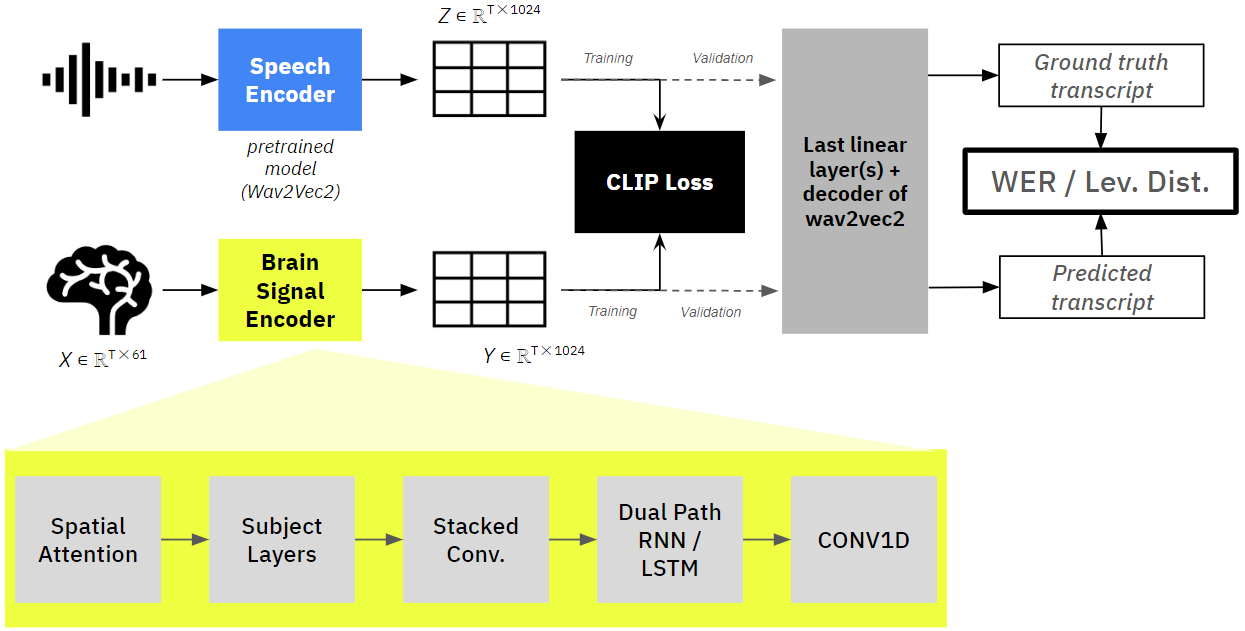

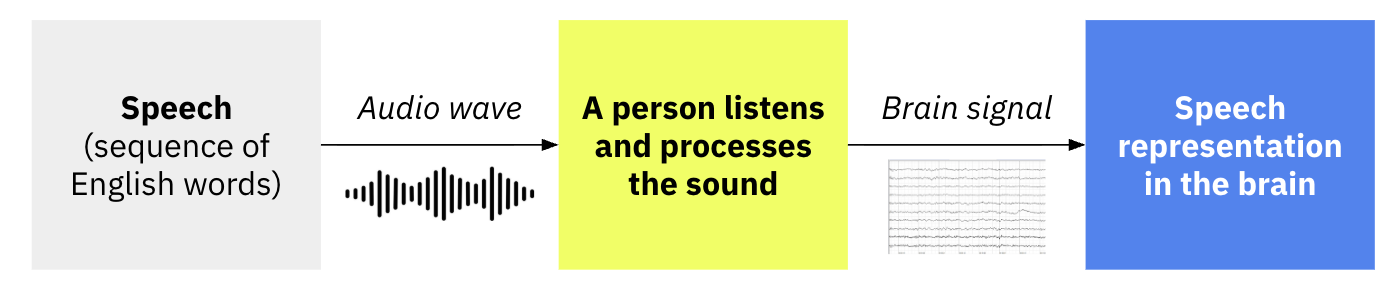

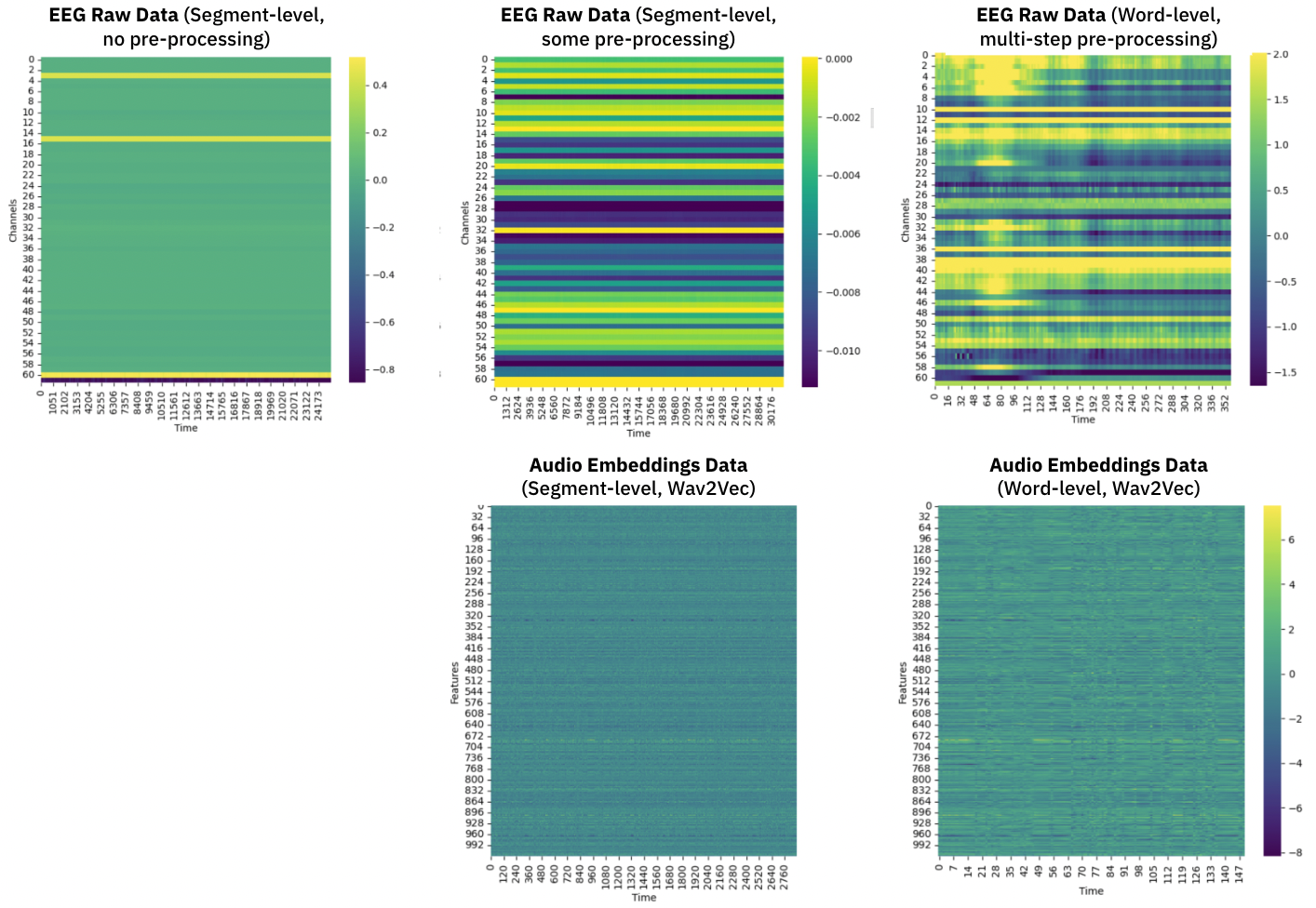

We explore whether neural networks can decode brain activity into speech by mapping EEG recordings to audio representations. Using EEG data recorded while subjects listened to natural speech, we train a model with a contrastive CLIP loss to align EEG-derived embeddings with embeddings from a pre-trained transformer-based speech model. Building on the state-of-the-art EEG decoder from Meta, we introduce three architectural modifications: (i) subject-specific attention layers (+0.15% WER improvement), (ii) personalized spatial attention (+0.45%), and (iii) a dual-path RNN with attention (-1.87%, i.e., a degradation). Two of the three modifications improved performance, highlighting the promise of personalized architectures for brain-to-speech decoding and for applications in brain-computer interfaces.
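To illustrate what "aligning EEG-derived embeddings with speech embeddings via a CLIP loss" means, here is a minimal NumPy sketch of a CLIP-style contrastive loss. It assumes each batch holds paired rows, where row i of the EEG embeddings and row i of the speech embeddings come from the same time segment. The symmetric (two-direction) form, the temperature of 0.07, and the function names are assumptions borrowed from the generic CLIP recipe, not details confirmed by this summary; the paper's exact formulation may differ.

```python
import numpy as np


def l2_normalize(x: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """Scale each row to unit L2 norm so dot products become cosine similarities."""
    return x / (np.linalg.norm(x, axis=1, keepdims=True) + eps)


def log_softmax(logits: np.ndarray, axis: int) -> np.ndarray:
    """Numerically stable log-softmax along the given axis."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))


def clip_loss(eeg_emb: np.ndarray, speech_emb: np.ndarray,
              temperature: float = 0.07) -> float:
    """Symmetric contrastive (InfoNCE) loss over a batch of paired embeddings.

    Assumption: row i of eeg_emb and row i of speech_emb are a matching pair;
    every other row in the batch acts as a negative example.
    """
    z_eeg = l2_normalize(eeg_emb)
    z_speech = l2_normalize(speech_emb)
    # (batch, batch) matrix of scaled cosine similarities
    logits = z_eeg @ z_speech.T / temperature
    idx = np.arange(logits.shape[0])
    # Cross-entropy with the matching pair (the diagonal) as the target class,
    # computed once over rows (EEG -> speech) and once over columns (speech -> EEG)
    loss_eeg_to_speech = -log_softmax(logits, axis=1)[idx, idx].mean()
    loss_speech_to_eeg = -log_softmax(logits, axis=0)[idx, idx].mean()
    return float(0.5 * (loss_eeg_to_speech + loss_speech_to_eeg))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    speech = rng.normal(size=(8, 16))
    aligned_eeg = speech + 0.01 * rng.normal(size=(8, 16))
    random_eeg = rng.normal(size=(8, 16))
    # Well-aligned embeddings should yield a lower loss than unrelated ones
    print(clip_loss(aligned_eeg, speech), clip_loss(random_eeg, speech))
```

Normalizing before the dot product keeps the loss sensitive to direction rather than magnitude, and using the other items in the batch as negatives means no explicit negative sampling is needed. In a real training loop the same computation would be written in an autodiff framework so gradients can flow back into the EEG encoder.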

This content is AI-processed based on open access ArXiv data.