MGA: Memory-Driven GUI Agent for Observation-Centric Interaction

Reading time: 2 minutes

📝 Original Info

- Title: MGA: Memory-Driven GUI Agent for Observation-Centric Interaction

- ArXiv ID: 2510.24168

- Date: 2025-10-28

- Authors: Anonymous (the paper appears to have been submitted anonymously)

📝 Abstract

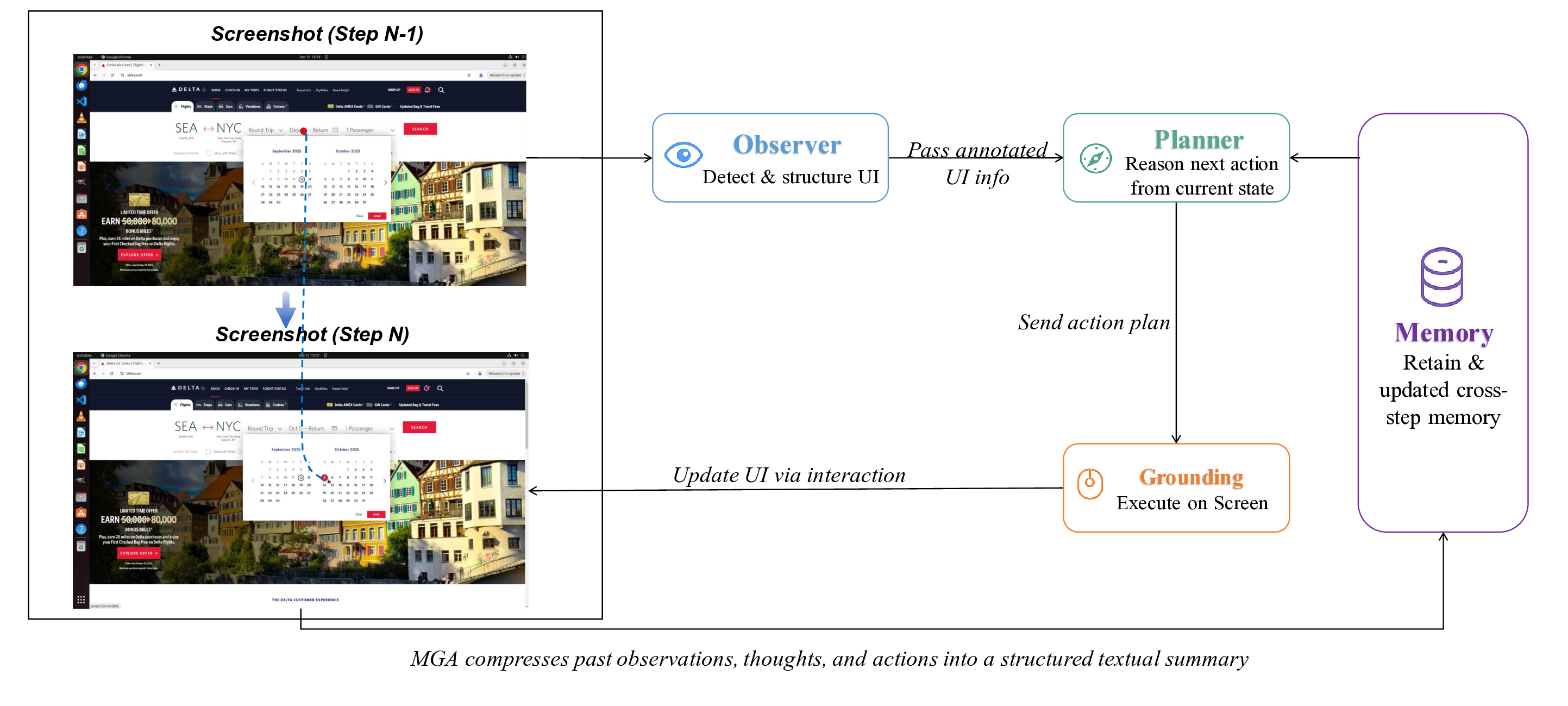

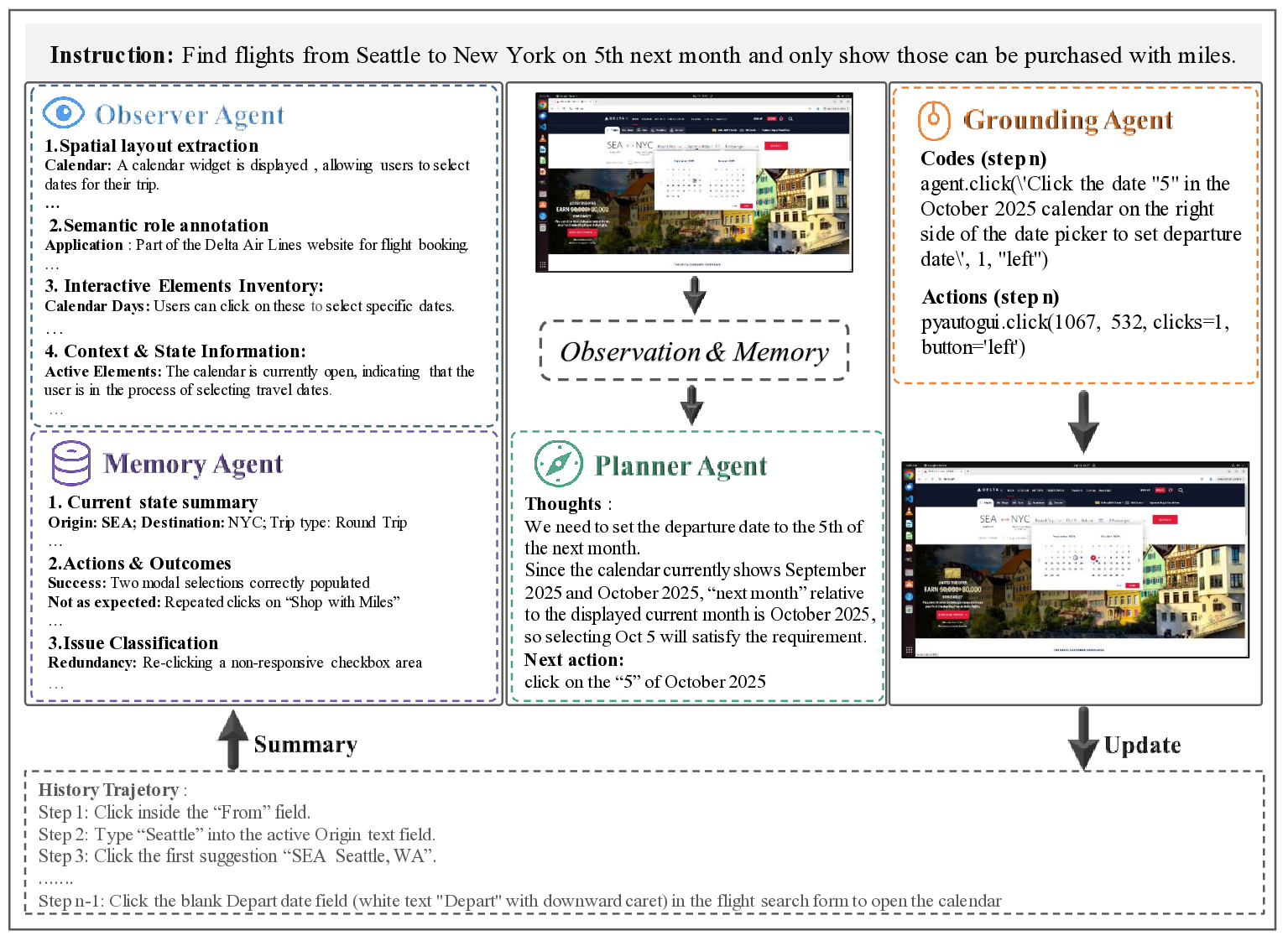

The rapid progress of Large Language Models (LLMs) and their multimodal extensions (MLLMs) has enabled agentic systems capable of perceiving and acting across diverse environments. A challenging yet impactful frontier is the development of GUI agents, which must navigate complex desktop and web interfaces while maintaining robustness and generalization. Existing paradigms typically model tasks as long-chain executions, concatenating historical trajectories into the context. While approaches such as Mirage and GTA1 refine planning or introduce multi-branch action selection, they remain constrained by two persistent issues: (1) dependence on historical trajectories, which amplifies error propagation; and (2) local exploration bias, where "decision-first, observation-later" mechanisms overlook critical interface cues. We introduce the Memory-Driven GUI Agent (MGA), which reframes GUI interaction around the principle of "observe first, then decide." MGA models each step as an independent, context-rich environment state represented by a triad: the current screenshot, task-agnostic spatial information, and a dynamically updated structured memory. Experiments on OSWorld benchmarks, real desktop applications (Chrome, VSCode, VLC), and cross-task transfer demonstrate that MGA achieves substantial gains in robustness, generalization, and efficiency compared to state-of-the-art baselines. The code is publicly available at: https://anonymous.4open.science/r/MGA-3571.

💡 Deep Analysis
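
To make the "observe first, then decide" idea from the abstract concrete, here is a minimal Python sketch of a step loop built around the state triad (screenshot, spatial information, structured memory). It is an illustration under stated assumptions, not the authors' implementation: the names `StructuredMemory`, `EnvState`, `run_episode`, and the `observe`/`decide`/`act` callables are all hypothetical, and the memory layout is a guess at what a "structured memory" might hold.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Sequence, Tuple


@dataclass
class StructuredMemory:
    """Compact summary carried between steps (hypothetical layout)."""
    completed: List[str] = field(default_factory=list)
    notes: List[str] = field(default_factory=list)

    def update(self, action: str, note: str) -> None:
        # Record what was done and what was learned, not the raw trajectory.
        self.completed.append(action)
        self.notes.append(note)


@dataclass
class EnvState:
    """The triad described in the abstract (field names are assumptions)."""
    screenshot: bytes
    spatial_info: Tuple[str, ...]
    memory: StructuredMemory


def run_episode(
    observe: Callable[[], Tuple[bytes, Sequence[str]]],
    decide: Callable[[EnvState], str],
    act: Callable[[str], str],
    max_steps: int = 5,
) -> StructuredMemory:
    memory = StructuredMemory()
    for _ in range(max_steps):
        # Observe first: build a fresh state from the current screen only.
        screenshot, spatial_info = observe()
        state = EnvState(screenshot, tuple(spatial_info), memory)
        # Then decide: the policy sees the triad, not the full history.
        action = decide(state)
        if action == "DONE":
            break
        memory.update(action, act(action))
    return memory
```

The design point the sketch tries to capture is that each step's input is rebuilt from the current observation plus the memory, rather than from a concatenated action history, so an early mistake is not replayed verbatim into every later prompt.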

📄 Full Content

📸 Image Gallery

This content is AI-processed based on open access ArXiv data.