MoEMeta: Mixture-of-Experts Meta Learning for Few-Shot Relational Learning

📝 Original Info

- Title: MoEMeta: Mixture-of-Experts Meta Learning for Few-Shot Relational Learning

- ArXiv ID: 2510.23013

- Date: 2025-10-27

- Authors: Not available (the source did not provide author information).

📝 Abstract

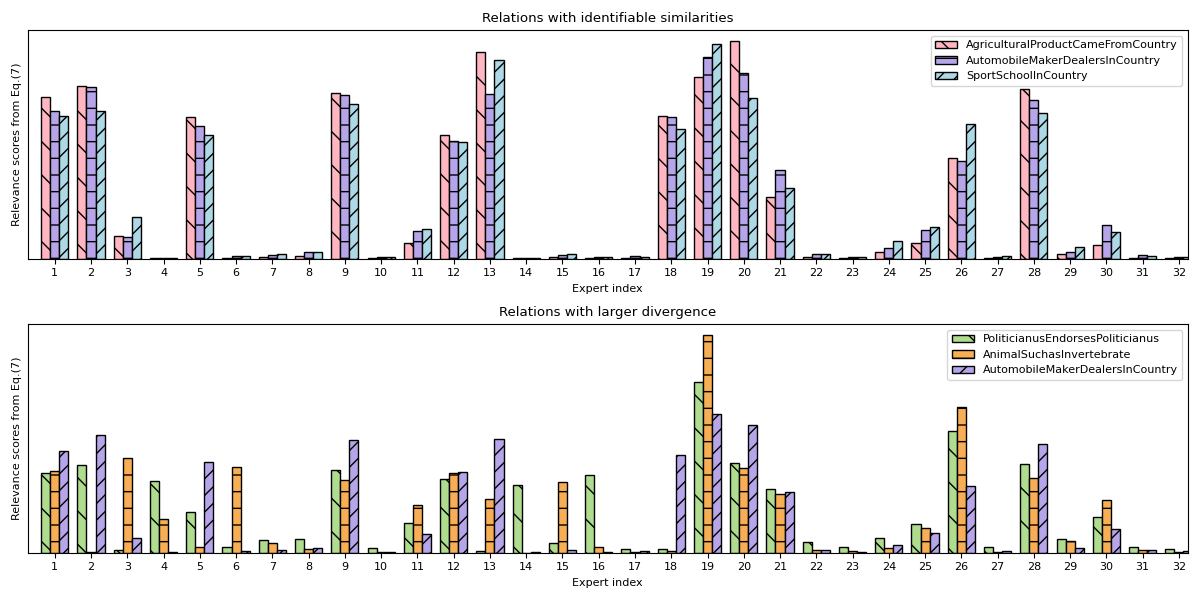

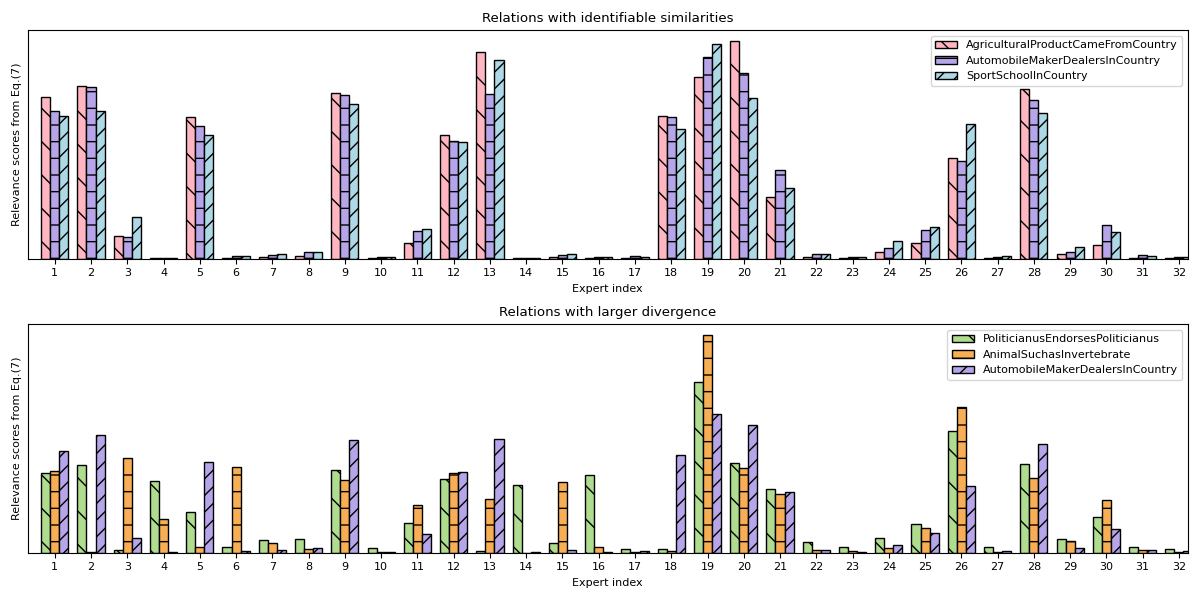

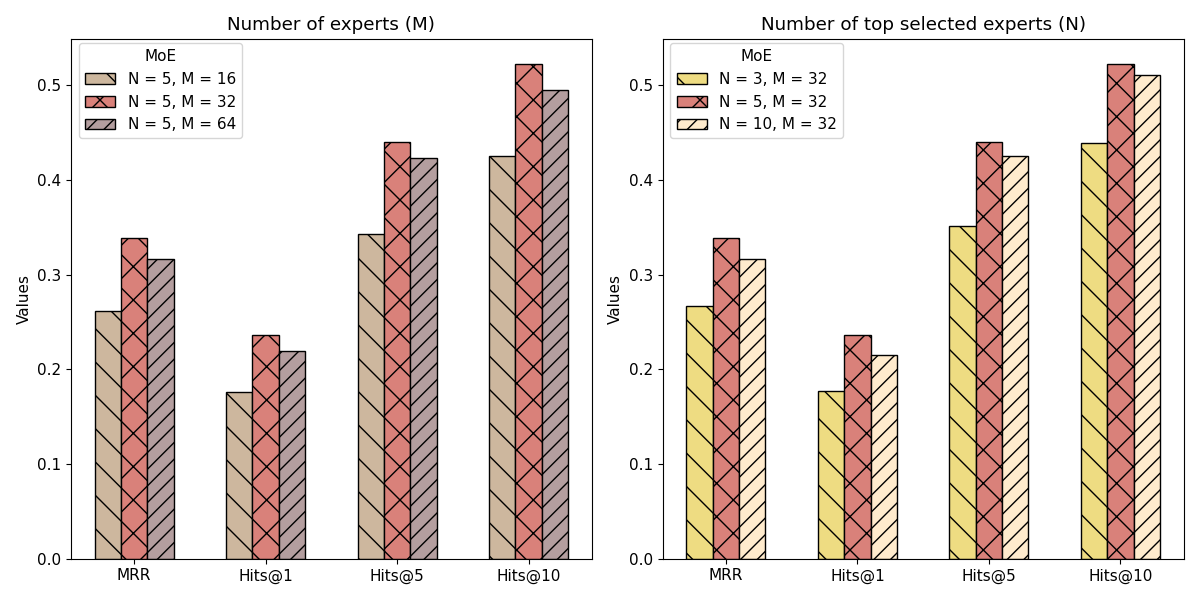

Few-shot knowledge graph relational learning seeks to perform reasoning over relations given only a limited number of training examples. While existing approaches largely adopt a meta-learning framework for enabling fast adaptation to new relations, they suffer from two key pitfalls. First, they learn relation meta-knowledge in isolation, failing to capture common relational patterns shared across tasks. Second, they struggle to effectively incorporate local, task-specific contexts crucial for rapid adaptation. To address these limitations, we propose MoEMeta, a novel meta-learning framework that disentangles globally shared knowledge from task-specific contexts to enable both effective model generalization and rapid adaptation. MoEMeta introduces two key innovations: (i) a mixture-of-experts (MoE) model that learns globally shared relational prototypes to enhance generalization, and (ii) a task-tailored adaptation mechanism that captures local contexts for fast task-specific adaptation. By balancing global generalization with local adaptability, MoEMeta significantly advances few-shot relational learning. Extensive experiments and analyses on three KG benchmarks show that MoEMeta consistently outperforms existing baselines, achieving state-of-the-art performance.

💡 Deep Analysis
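
The two ideas named in the abstract, a mixture of shared experts and a task-local adaptation step, can be illustrated with a toy sketch. This is not the paper's implementation. Everything below is an assumption made for illustration: the function names, the softmax gate, the use of the mean tail-minus-head vector as a task summary, and the TransE-style squared loss used for the local update. The abstract specifies none of these details.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def task_summary(support_pairs):
    """Mean of (tail - head) over the support set (a TransE-style assumption)."""
    dim = len(support_pairs[0][0])
    n = len(support_pairs)
    return [sum(t[i] - h[i] for h, t in support_pairs) / n for i in range(dim)]


def mix_prototypes(summary, prototypes, gate_vectors):
    """Global part: weight shared prototype vectors with a softmax gate."""
    gate = softmax([dot(w, summary) for w in gate_vectors])
    dim = len(summary)
    mixed = [sum(g * p[i] for g, p in zip(gate, prototypes)) for i in range(dim)]
    return gate, mixed


def support_loss(relation, support_pairs):
    """Mean squared error of h + r - t over the support pairs (hypothetical loss)."""
    total = 0.0
    for h, t in support_pairs:
        total += sum((hi + ri - ti) ** 2 for hi, ri, ti in zip(h, relation, t))
    return total / len(support_pairs)


def local_adapt(relation, support_pairs, lr=0.1):
    """Local part: one gradient step on support_loss with respect to the relation.

    The gradient of mean ||h + r - t||^2 with respect to r is 2 * (r - mean(t - h)).
    """
    summary = task_summary(support_pairs)
    return [r - lr * 2.0 * (r - s) for r, s in zip(relation, summary)]


# Toy data: 3 hypothetical experts, 4-dimensional embeddings, 3 support pairs.
random.seed(0)
dim, num_experts = 4, 3
prototypes = [[random.gauss(0, 1) for _ in range(dim)] for _ in range(num_experts)]
gate_vectors = [[random.gauss(0, 1) for _ in range(dim)] for _ in range(num_experts)]
support_pairs = [
    ([random.gauss(0, 1) for _ in range(dim)], [random.gauss(0, 1) for _ in range(dim)])
    for _ in range(3)
]

summary = task_summary(support_pairs)
gate, relation = mix_prototypes(summary, prototypes, gate_vectors)
adapted = local_adapt(relation, support_pairs)
```

In this sketch the prototypes and gate vectors stand in for parameters shared across all tasks, while `local_adapt` touches only the relation vector of the current task. That split mirrors the separation of global knowledge from task-specific context described in the abstract, under the assumptions stated above.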

Reference

This content is AI-processed based on open access ArXiv data.