Mutual Information guided Visual Contrastive Learning

📝 Original Info

- Title: Mutual Information guided Visual Contrastive Learning

- ArXiv ID: 2511.00028

- Date: 2025-10-26

- Authors: Not listed in the provided paper information and could not be confirmed. (※ If needed, check the original PDF or the conference page.)

📝 Abstract

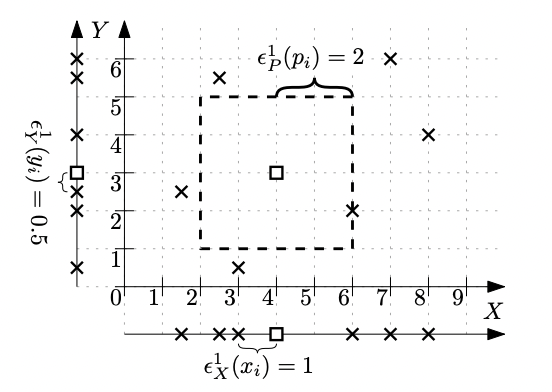

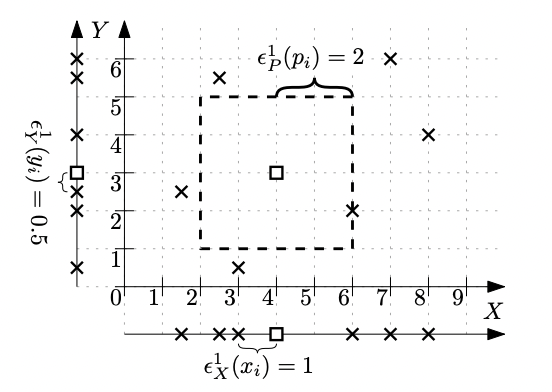

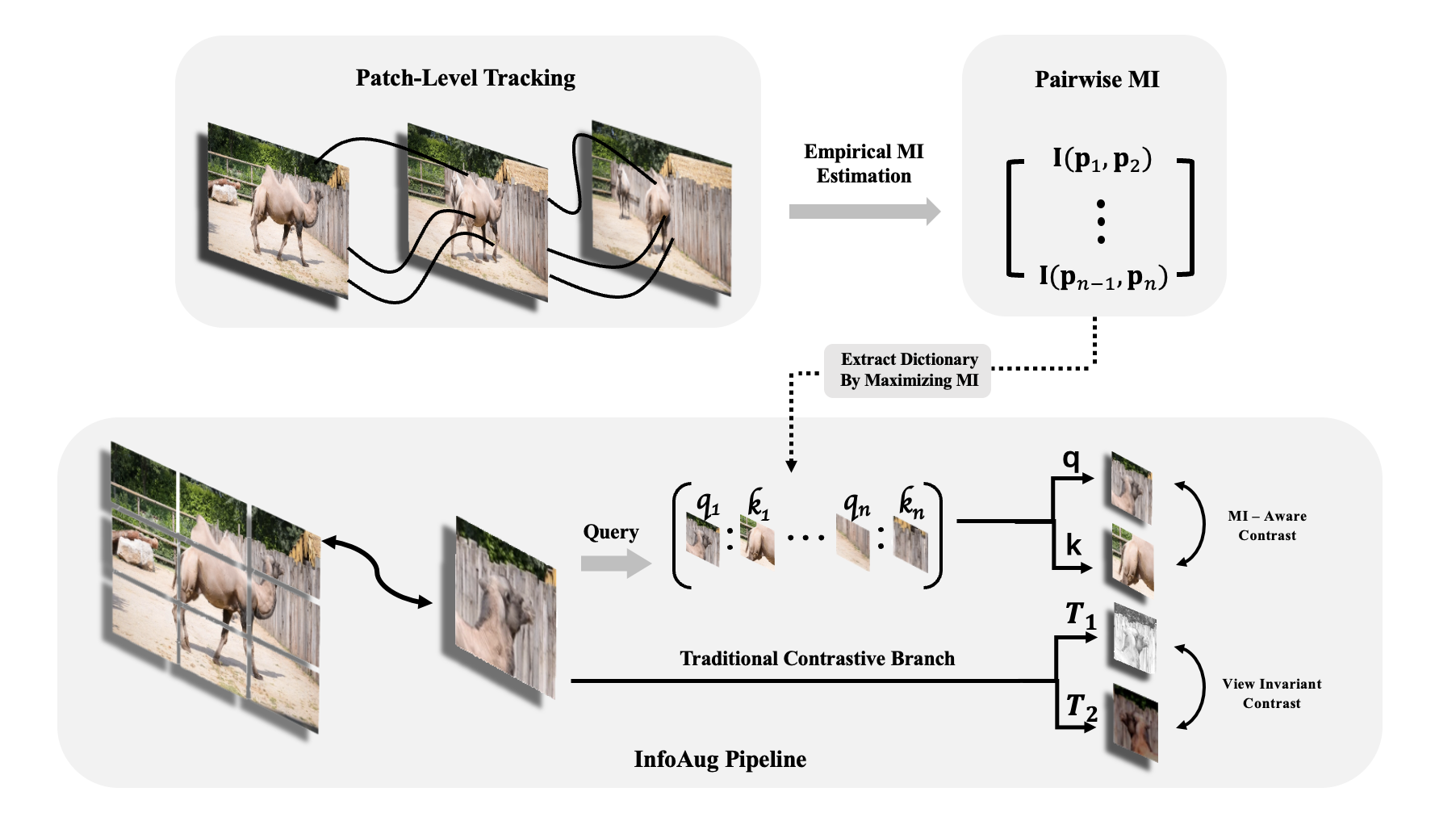

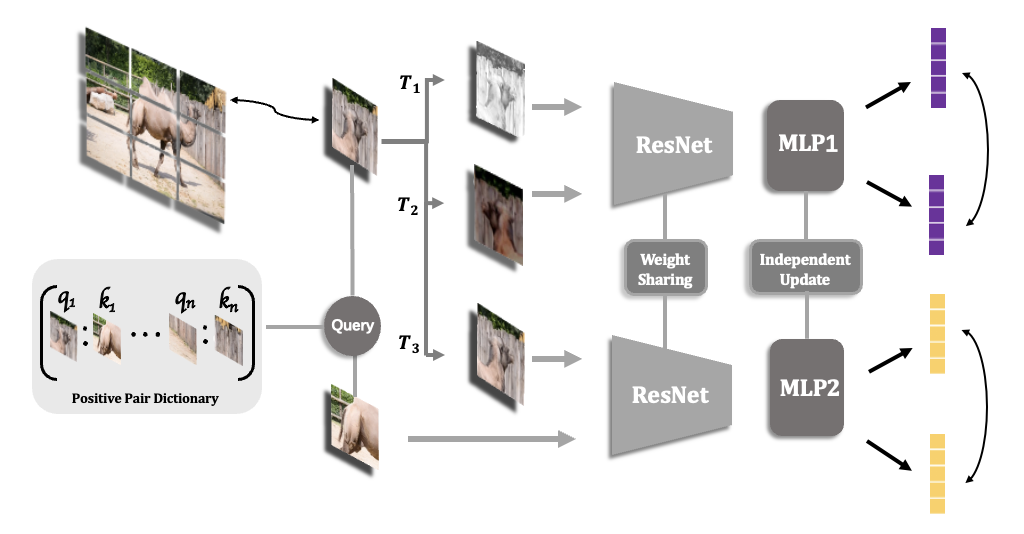

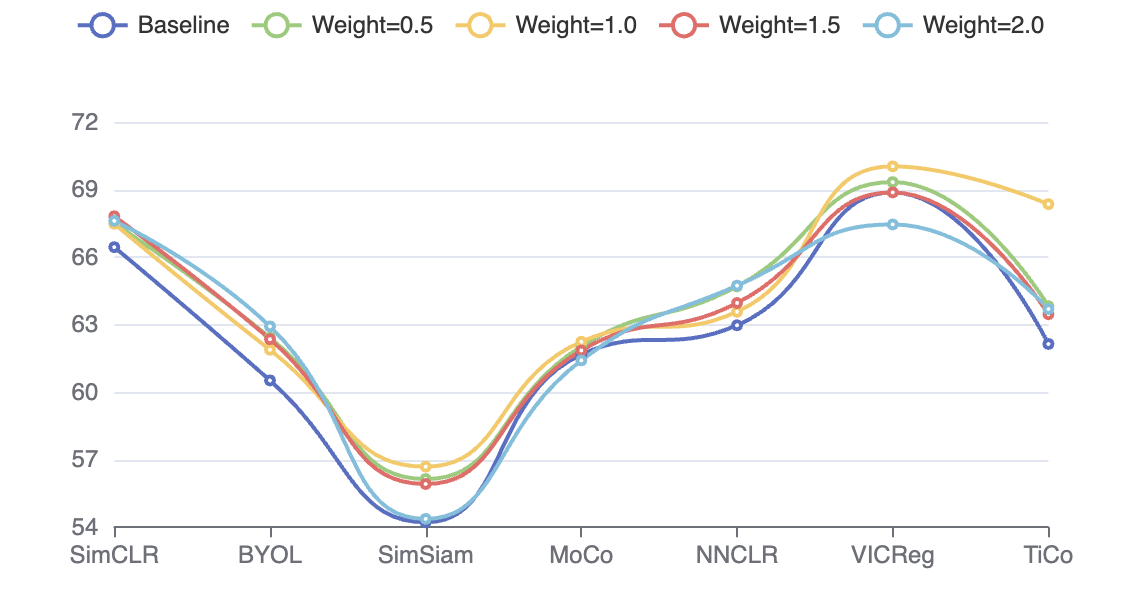

Representation learning methods that use the InfoNCE loss have shown considerable capacity to reduce human annotation effort by training invariant neural feature extractors. Although the different variants of this training objective all follow the principle of maximizing information between the data and the learned features, data selection and augmentation still rely on human hypotheses or engineering, which may be suboptimal. For instance, data augmentation in contrastive learning focuses primarily on color jittering, which aims to emulate real-world illumination changes. In this work, we investigate selecting training data based on mutual information computed from real-world distributions, which, in principle, should give the learned features better generalization when they are applied in open environments. Specifically, we treat patches from scenes that exhibit high mutual information under natural perturbations, such as color changes and motion, as positive samples for contrastive learning. We evaluate the proposed mutual-information-informed data augmentation method on several benchmarks across multiple state-of-the-art representation learning frameworks, demonstrating its effectiveness and establishing it as a promising direction for future research.

💡 Deep Analysis
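The abstract builds on the InfoNCE loss without stating its form. As a minimal sketch, assuming the common batch formulation (one positive per anchor, every other positive in the batch serving as a negative, cosine similarity scaled by a temperature `tau`), the loss can be written as below. The function names and the default `tau = 0.1` are hypothetical choices for illustration, not taken from the paper.

```python
import math
from typing import List

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two non-zero feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def info_nce(anchors: List[Vector], positives: List[Vector], tau: float = 0.1) -> float:
    """Mean InfoNCE loss over a batch (hypothetical minimal setup).

    anchors[i] should match positives[i]; every positives[j] with j != i
    acts as a negative for anchor i. tau is the temperature.
    """
    n = len(anchors)
    total = 0.0
    for i in range(n):
        # Similarity logits of anchor i against every candidate in the batch.
        logits = [cosine(anchors[i], positives[j]) / tau for j in range(n)]
        # Log-sum-exp with the max subtracted for numerical stability.
        m = max(logits)
        log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
        # Negative log-softmax probability assigned to the true pair.
        total += log_sum_exp - logits[i]
    return total / n
```

Correctly aligned pairs drive the loss toward zero, while swapping the positives makes it large. The paper's point is about *which* pairs are fed into this objective, not about changing the objective itself.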

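This summary does not say how the mutual information between a patch and its naturally perturbed counterpart is estimated. As a hedged illustration only, the sketch below uses a simple plug-in estimator over a joint histogram of paired pixel intensities and keeps pairs whose estimate exceeds a threshold as positives. `mutual_information`, `select_positive_pairs`, the bin count, and the threshold are all hypothetical, and the histogram estimator is a swapped-in technique, not the authors' method.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple


def quantize(values: Sequence[float], bins: int) -> List[int]:
    """Map intensities in [0, 1] to integer bin indices 0..bins-1."""
    return [min(int(v * bins), bins - 1) for v in values]


def mutual_information(x: Sequence[float], y: Sequence[float], bins: int = 8) -> float:
    """Plug-in MI estimate (in nats) from a joint histogram of paired pixels.

    x and y are flattened intensities of a patch and its perturbed version,
    aligned pixel by pixel (hypothetical input format).
    """
    qx, qy = quantize(x, bins), quantize(y, bins)
    n = len(qx)
    joint = Counter(zip(qx, qy))
    count_x, count_y = Counter(qx), Counter(qy)
    mi = 0.0
    for (a, b), c in joint.items():
        # p(a,b) * log(p(a,b) / (p(a) p(b))), written with raw counts.
        mi += (c / n) * math.log(c * n / (count_x[a] * count_y[b]))
    return mi


def select_positive_pairs(
    pairs: List[Tuple[Sequence[float], Sequence[float]]],
    threshold: float,
    bins: int = 8,
) -> List[int]:
    """Indices of (patch, perturbed patch) pairs whose MI exceeds threshold."""
    return [i for i, (a, b) in enumerate(pairs) if mutual_information(a, b, bins) > threshold]
```

For example, a patch paired with a contrast-reduced copy of itself (a crude stand-in for a natural color change) retains high mutual information and would be kept as a positive, while a patch paired with a flat, uninformative patch scores zero and would be discarded. A real pipeline would likely need a more robust estimator for high-dimensional patches.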
📄 Full Content

📸 Image Gallery


This content is AI-processed based on open access ArXiv data.