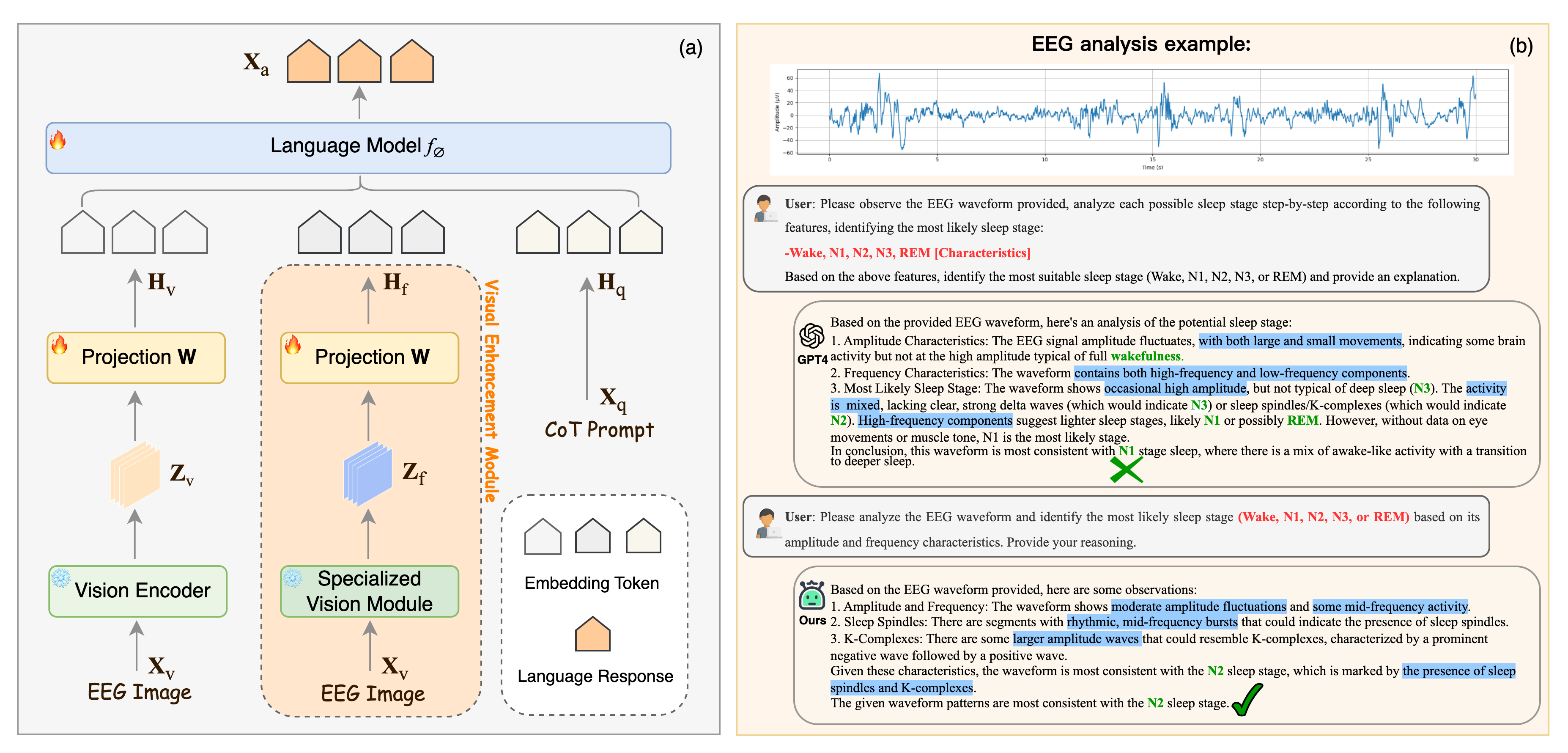

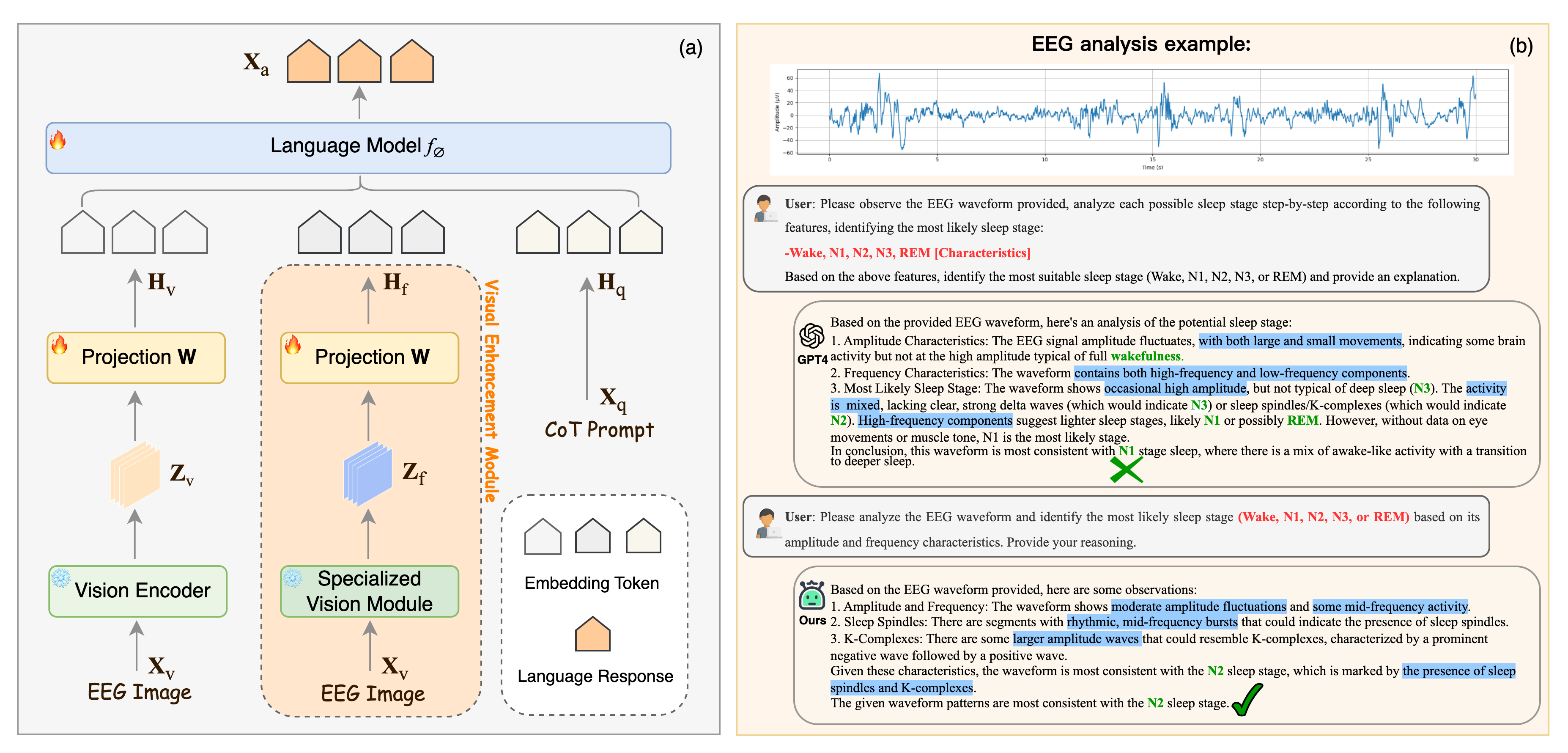

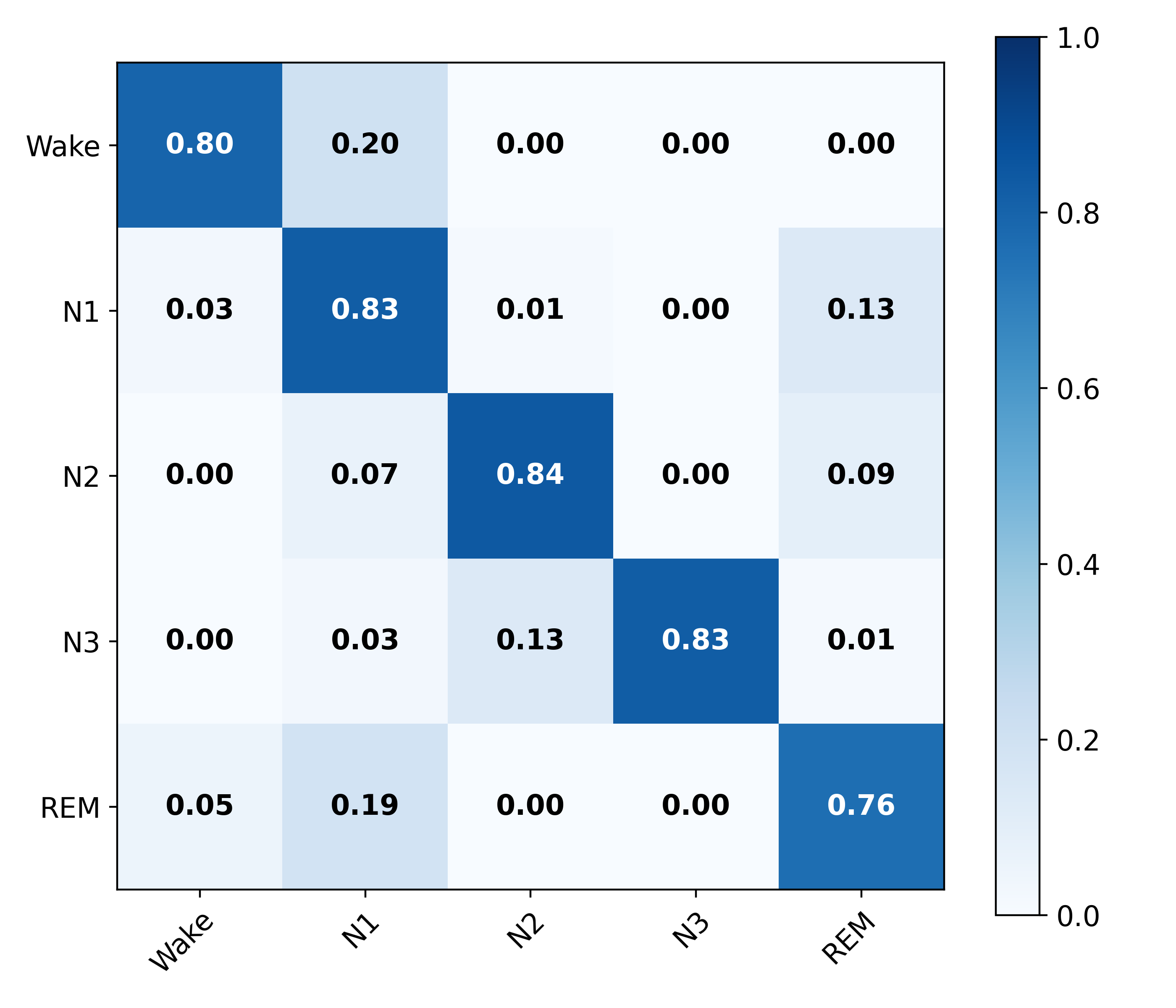

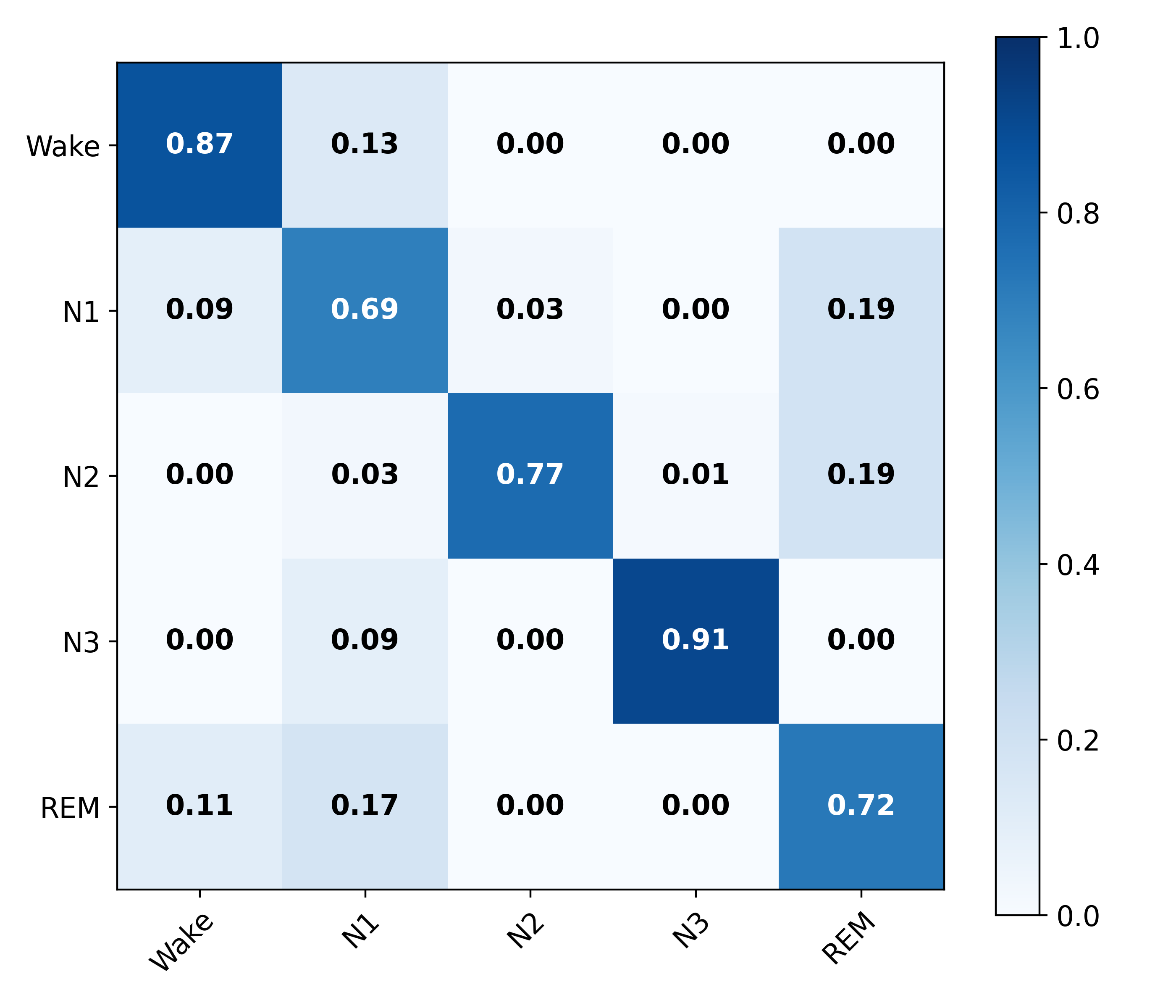

Sleep stage classification based on electroencephalography (EEG) is fundamental for assessing sleep quality and diagnosing sleep-related disorders. However, most traditional machine learning methods rely heavily on prior knowledge and handcrafted features, while existing deep learning models still struggle to jointly capture fine-grained time-frequency patterns and achieve clinical interpretability. Recently, vision-language models (VLMs) have made significant progress in the medical domain, yet their performance remains constrained when applied to physiological waveform data, especially EEG signals, due to their limited visual understanding and insufficient reasoning capability. To address these challenges, we propose EEG-VLM, a hierarchical vision-language framework that integrates multi-level feature alignment with visually enhanced language-guided reasoning for interpretable EEG-based sleep stage classification. Specifically, a specialized visual enhancement module constructs high-level visual tokens from intermediate-layer features to extract rich semantic representations of EEG images. These tokens are further aligned with low-level CLIP features through a multi-level alignment mechanism, enhancing the VLM's image-processing capability. In addition, a Chain-of-Thought (CoT) reasoning strategy decomposes complex medical inference into interpretable logical steps, effectively simulating expert-like decision-making. Experimental results demonstrate that the proposed method significantly improves both the accuracy and interpretability of VLMs in EEG-based sleep stage classification, showing promising potential for automated and explainable EEG analysis in clinical settings.

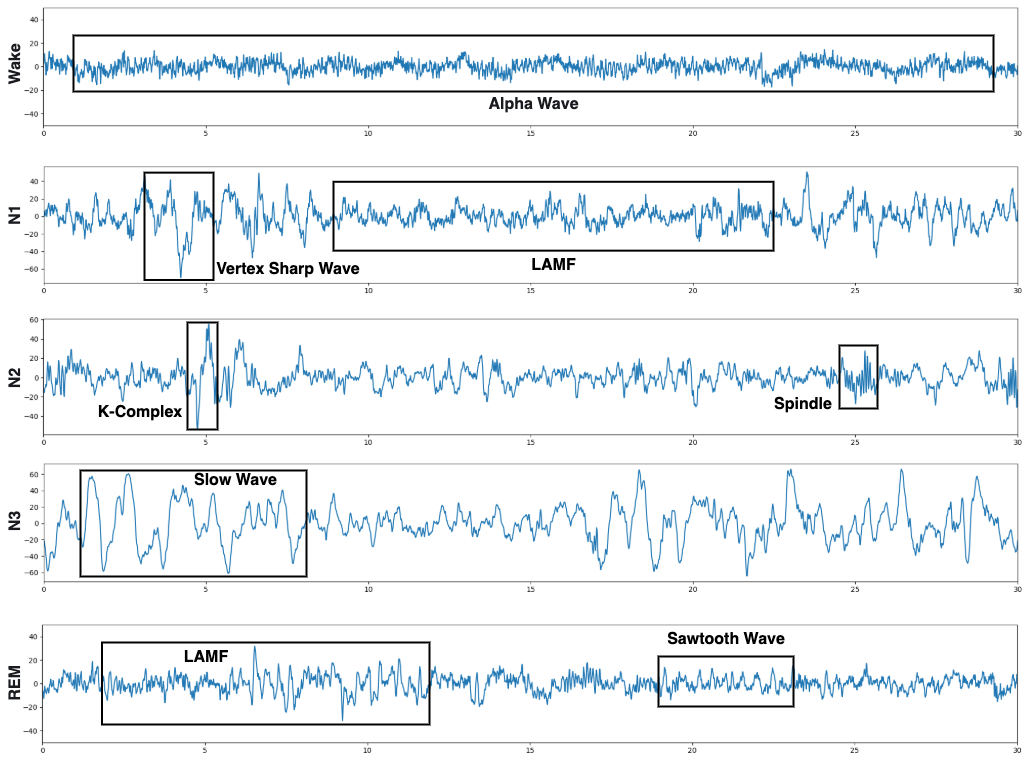

Sleep plays a vital role in maintaining brain function and overall physiological health [1]. Accurate assessment of sleep quality not only reflects an individual's health status but also provides an essential basis for diagnosing and treating sleep-related disorders [2]-[4]. Currently, the American Academy of Sleep Medicine (AASM) standards [5] are widely adopted for sleep stage scoring. Among various physiological signals, electroencephalography (EEG) is widely regarded as the most informative and commonly used modality for sleep stage classification [6]-[8], as it captures rich physiological and pathological information and clearly differentiates between different sleep stages [9], [10].

Waveform morphology and frequency composition are central to EEG-based sleep stage classification. Sleep experts rely on identifying characteristic waveforms, such as alpha, beta, and theta rhythms, within each 30-second epoch to determine sleep stages. However, sleep stage classification is guided by complex clinical criteria, making it a labor-intensive, time-consuming process that is prone to inter-rater variability.
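As a rough illustration of what "frequency composition" means for a single epoch, the sketch below estimates the relative spectral power of the classical EEG rhythms in one 30-second segment. The band edges, the function names `goertzel_power` and `band_powers`, and the 100 Hz sampling rate in the usage example are assumptions made for illustration and are not taken from this work. The Goertzel recurrence is used only to keep the example free of external dependencies.

```python
import math

# Conventional band edges in Hz (assumption: exact boundaries vary between sources).
BANDS = {
    "delta": (0.5, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
}


def goertzel_power(x, k):
    """Squared magnitude of DFT bin k of sequence x (Goertzel recurrence)."""
    n = len(x)
    coeff = 2.0 * math.cos(2.0 * math.pi * k / n)
    s1 = s2 = 0.0
    for v in x:
        s0 = v + coeff * s1 - s2
        s2, s1 = s1, s0
    return s1 * s1 + s2 * s2 - coeff * s1 * s2


def band_powers(epoch, fs, bands=BANDS):
    """Relative spectral power per band for one epoch sampled at fs Hz."""
    n = len(epoch)
    mean = sum(epoch) / n
    x = [v - mean for v in epoch]  # remove the DC offset
    powers = {}
    for name, (lo, hi) in bands.items():
        k_lo = math.ceil(lo * n / fs)               # first bin at or above lo
        k_hi = min(math.ceil(hi * n / fs), n // 2)  # exclusive, capped at Nyquist
        powers[name] = sum(goertzel_power(x, k) for k in range(k_lo, k_hi))
    total = sum(powers.values()) or 1.0
    return {name: p / total for name, p in powers.items()}


if __name__ == "__main__":
    # Synthetic 30-second epoch: a pure 10 Hz oscillation sampled at 100 Hz.
    fs = 100
    epoch = [math.sin(2.0 * math.pi * 10.0 * i / fs) for i in range(30 * fs)]
    rel = band_powers(epoch, fs)
    print(max(rel, key=rel.get), rel)
```

Band-power summaries of this kind capture only the frequency side of the picture; waveform morphology, which experts also inspect, is not represented by them.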

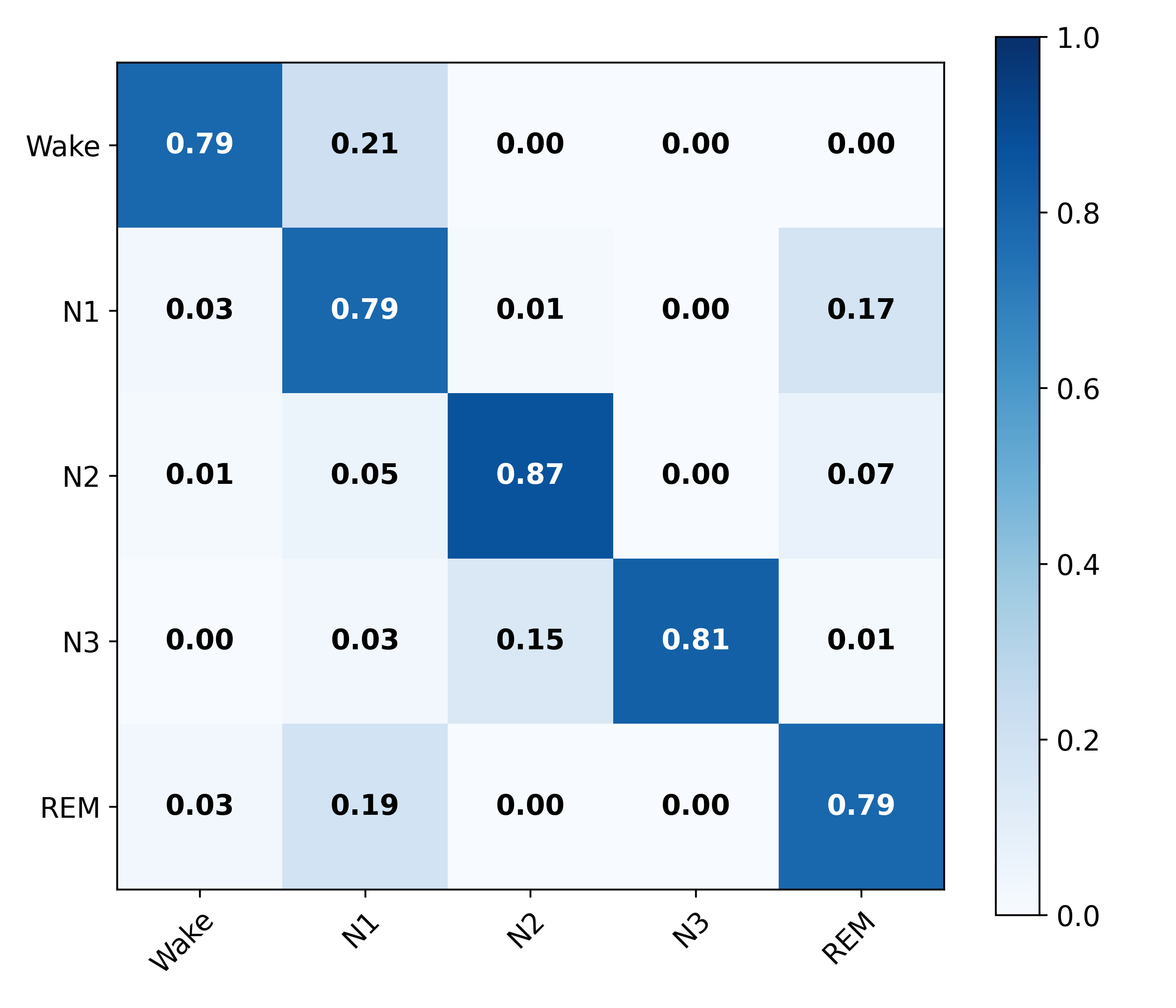

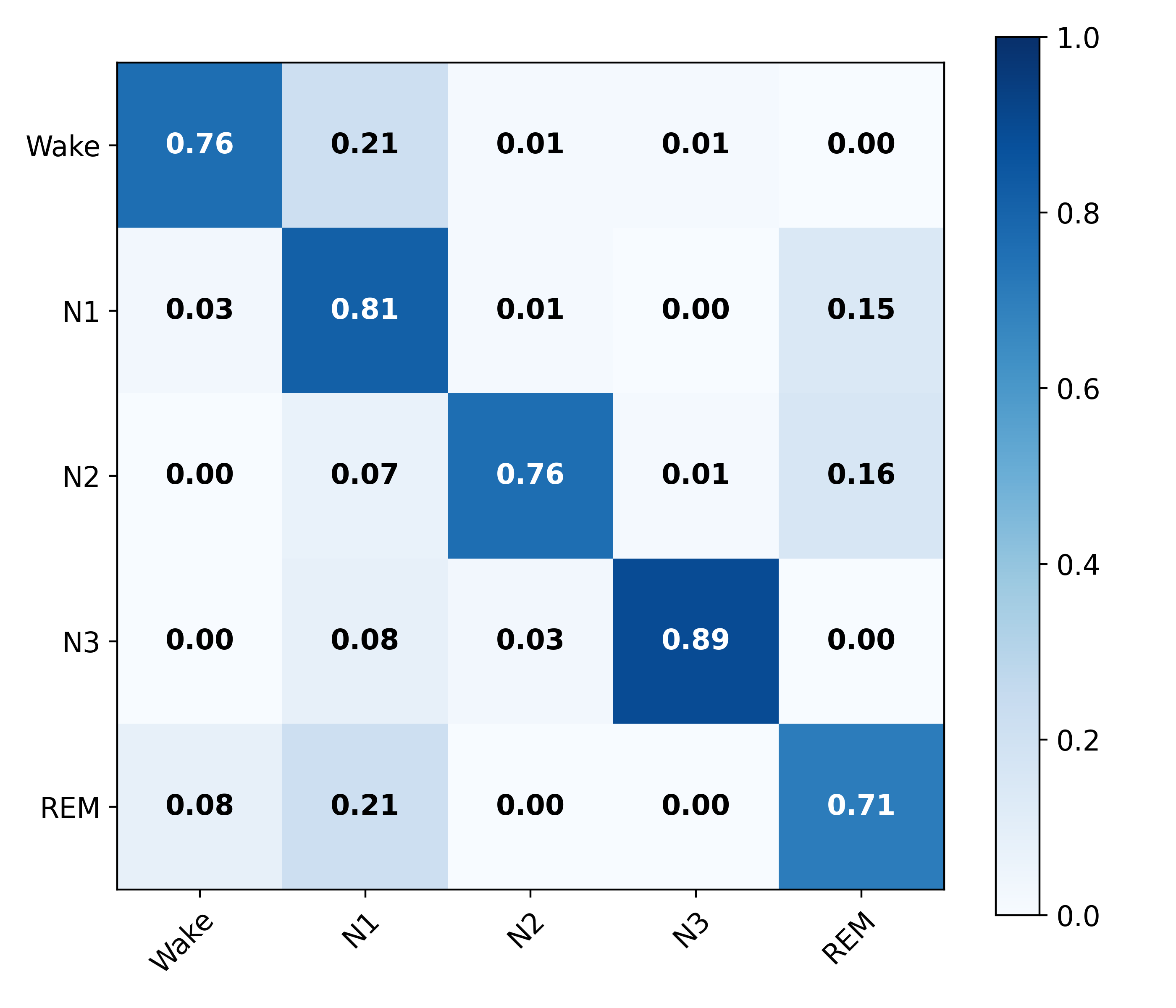

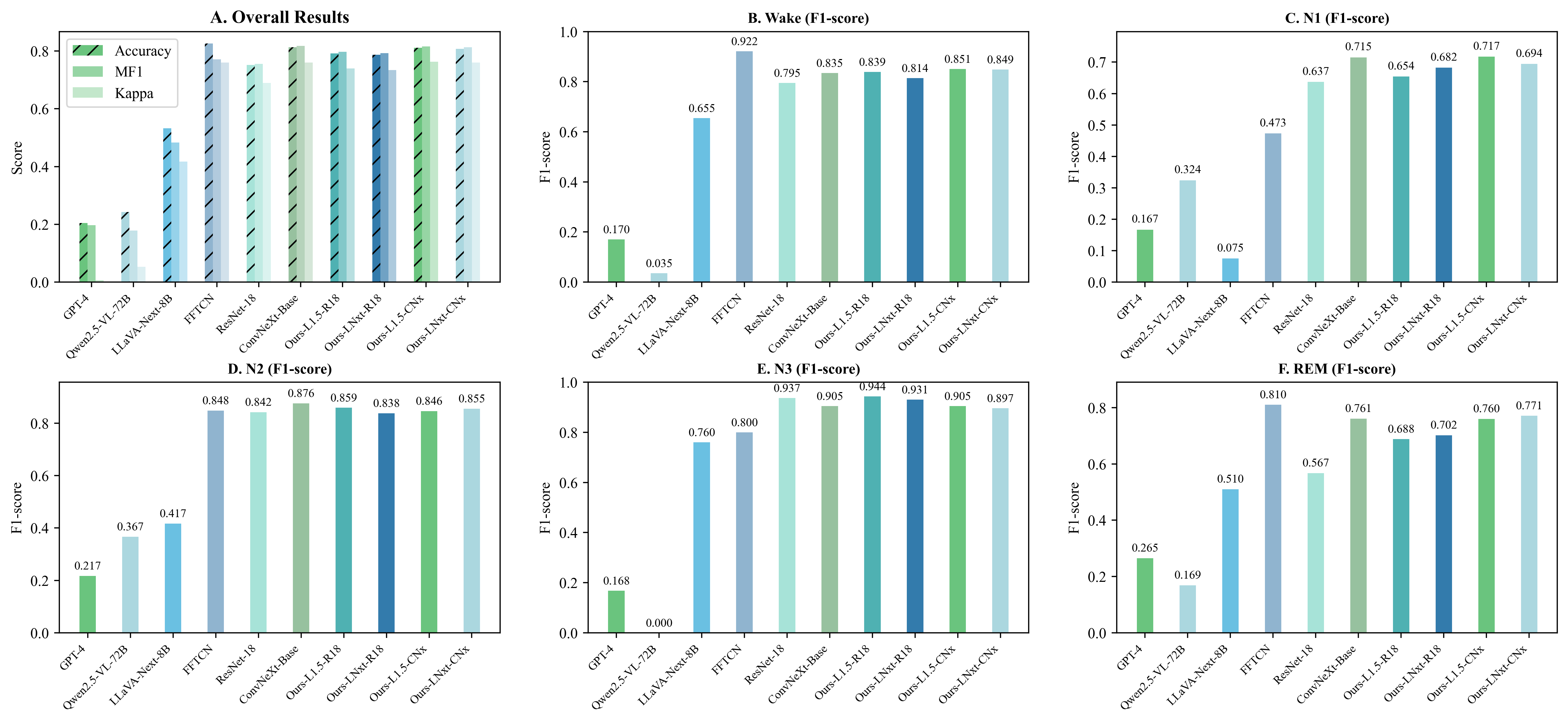

To overcome these limitations, numerous automatic sleep stage classification methods have been proposed. Traditional machine learning approaches [11]-[14] rely heavily on handcrafted features and prior domain knowledge, which limits their adaptability and generalization capability. In contrast, deep learning-based methods [15]-[18] can automatically extract discriminative representations from EEG signals. However, they often struggle to capture fine-grained distinctions, particularly between physiologically similar stages such as N1 and REM, resulting in suboptimal performance.

Recently, vision-language models (VLMs) [19]-[24] have demonstrated impressive performance across various general-purpose tasks by leveraging joint visual-textual representations. Although their application in the medical domain has gained increasing attention, their performance remains limited when applied to physiological waveform data, particularly EEG, due to insufficient capacity for fine-grained visual perception, effective image processing, and domain-specific reasoning [25]-[27]. These challenges restrict the applicability of VLMs in complex clinical contexts such as EEG-based sleep stage classification.

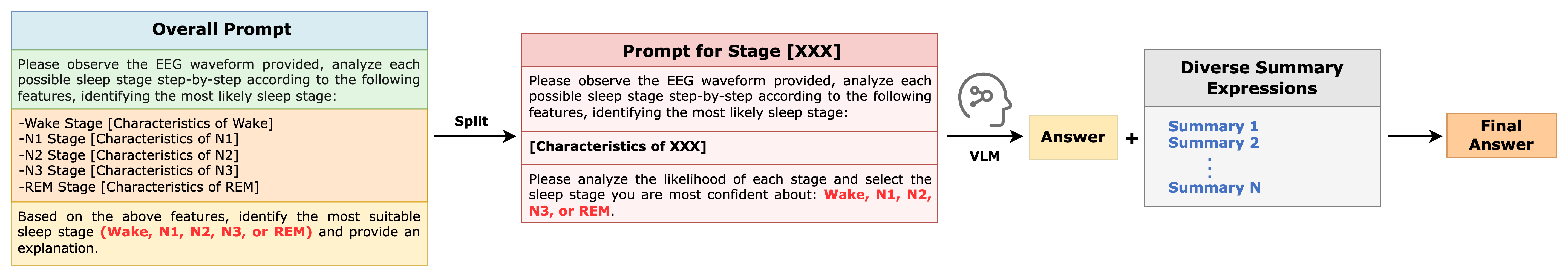

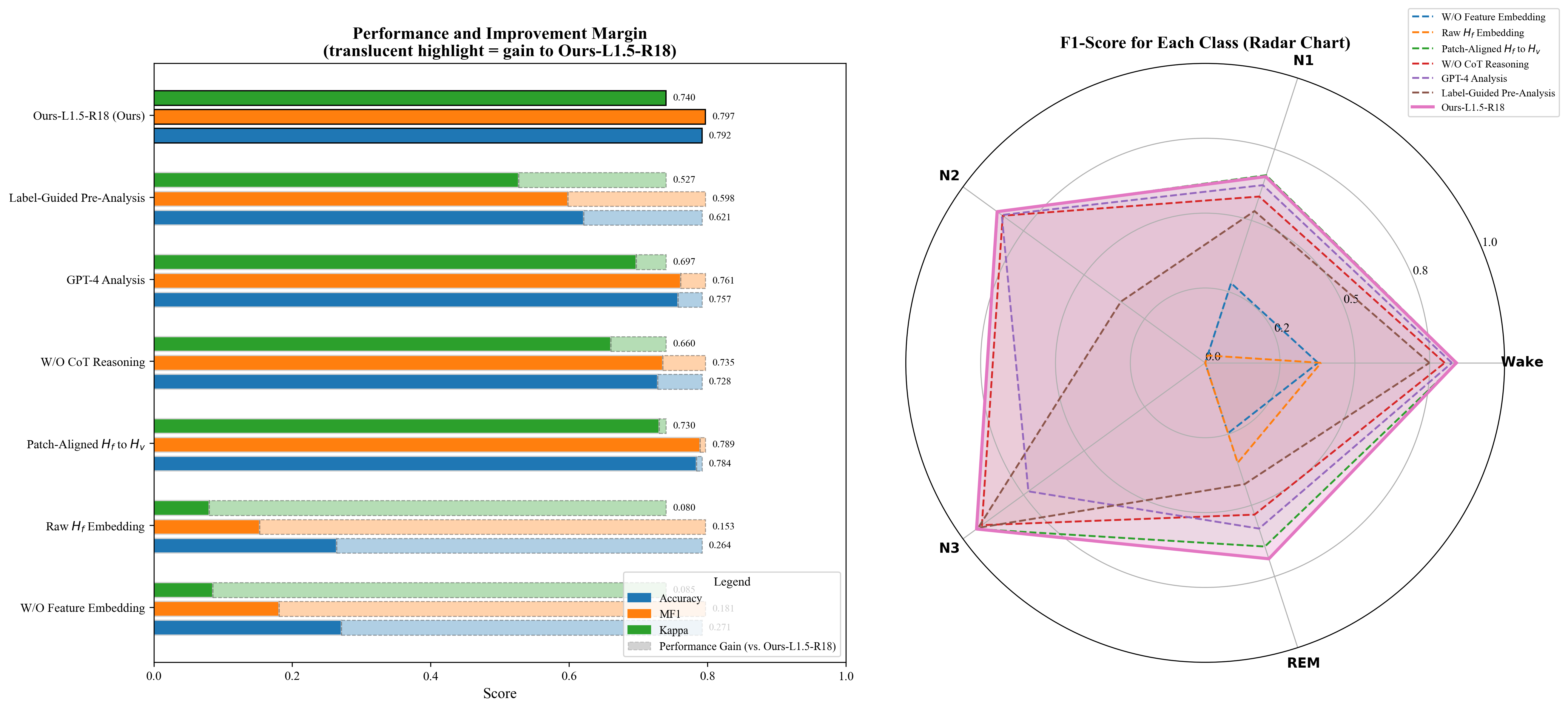

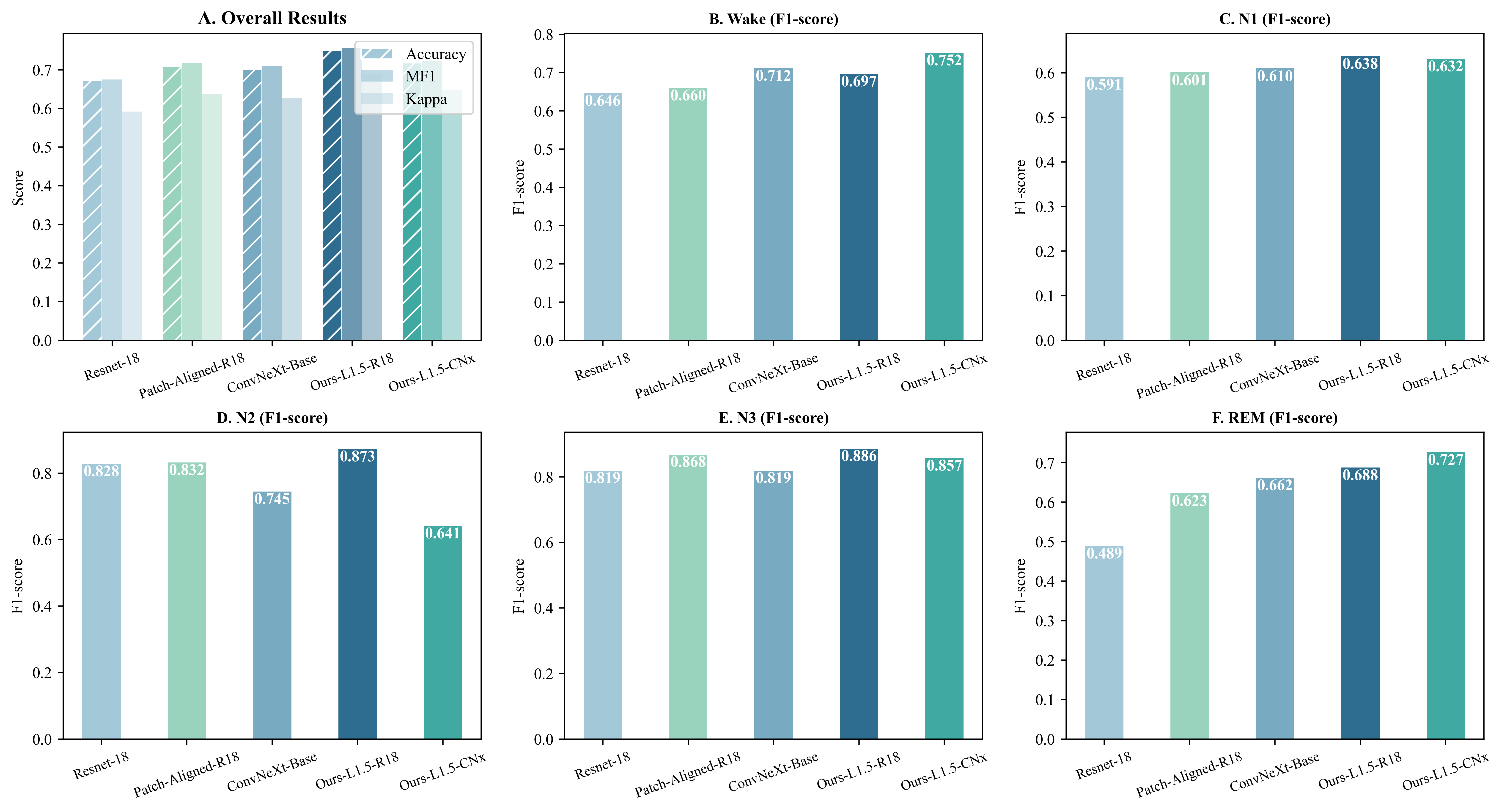

To address these challenges, we propose a hierarchical vision-language framework tailored for EEG image-based representation learning. Specifically, we augment the visual encoder with a visual enhancement module that extracts intermediate-level representations and transforms them into high-level visual tokens, enabling the model to capture both fine-grained visual details and abstract semantic information. These high-level semantic representations are then aligned and integrated with low-level visual features extracted by CLIP through a multi-level feature alignment mechanism, facilitating multi-scale perception and bridging semantic gaps across hierarchical representations. On the language side, we incorporate a CoT prompting strategy to guide the model through structured, step-wise reasoning that simulates expert decision-making. This integrated architecture allows the model to achieve accurate and interpretable predictions, particularly for ambiguous stages such as N1 and REM.
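To make the idea of combining tokens from two feature levels concrete, the following is a minimal sketch of one simple fusion scheme: each token set is linearly projected to a shared width, and the results are concatenated along the token axis. This is an illustrative assumption only. The alignment mechanism is not specified in this section and may differ, and the names `linear`, `random_matrix`, and `fuse_tokens`, as well as all dimensions, are hypothetical.

```python
import random


def linear(tokens, weight):
    """Project each token (length d_in) with a d_in x d_out weight matrix."""
    d_out = len(weight[0])
    return [
        [sum(t[i] * weight[i][j] for i in range(len(t))) for j in range(d_out)]
        for t in tokens
    ]


def random_matrix(d_in, d_out, rng):
    """Randomly initialised projection (stand-in for learned weights)."""
    scale = d_in ** -0.5
    return [[rng.gauss(0.0, scale) for _ in range(d_out)] for _ in range(d_in)]


def fuse_tokens(low_tokens, high_tokens, w_low, w_high):
    """Map both token sets to a shared width, then concatenate along the token axis."""
    return linear(low_tokens, w_low) + linear(high_tokens, w_high)


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical sizes: 4 low-level tokens of width 6, 2 high-level tokens of width 8.
    low = [[rng.random() for _ in range(6)] for _ in range(4)]
    high = [[rng.random() for _ in range(8)] for _ in range(2)]
    fused = fuse_tokens(low, high, random_matrix(6, 5, rng), random_matrix(8, 5, rng))
    print(len(fused), len(fused[0]))  # number of fused tokens, shared width
```

In a trained model the projection weights would be learned rather than random, and the fused sequence would be passed to the language model alongside the text prompt.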

The key contributions of our work are summarized as follows.

1) We propose EEG-VLM, a hierarchical VLM that combines multi-level feature alignment with visually enhanced language-guided reasoning, demonstrating the feasibility and potential of VLMs for EEG-based sleep stage classification.
2) We design a visual enhancement module that constructs high-level visual representations from intermediate-layer features, enabling the model to capture deep semantic information from EEG images.
3) We introduce a multi-level feature alignment mechanism to effectively fuse visual tokens from different levels, thereby enhancing the model’s image processing and feature representation capabilities.
4) By employing CoT reasoning, we simplify complex inference tasks, improving the transparency and accuracy of the model’s decision-making while effectively simulating the step-by-step judgment of human experts.
5) Experimental results show that our method demonstrates robustness to ambiguous sleep stage boundaries (e.g., N1 and REM) and enhanced interpretability, providing new insights and a promising direction for physiological waveform analysis.

Traditional approaches to automatic sleep stage classification primarily rely on handcrafted features extracted from the time, frequency, or time-frequency domains of EEG signals. These features are typically fed into classical machine learning algorithms such as Support Vector Machines (SVM), k-Nearest Neighbors (KNN), or Random Forests (RF) [7], [28]. For example, [29] combined tunable-Q factor wavelet transform (TQWT) and normal inverse Gaussian (NIG) parameters with AdaBoost, while [30] integrated multiple signal decomp
