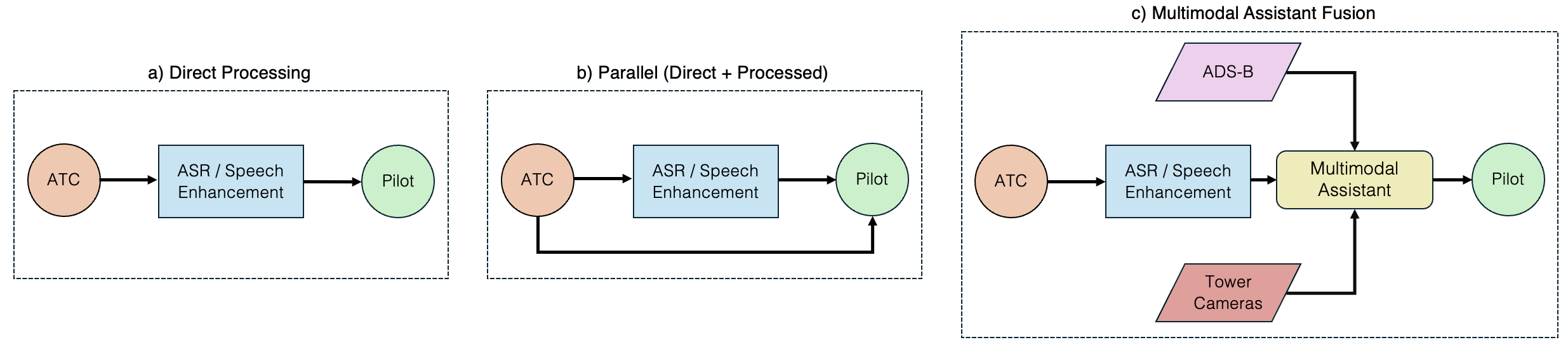

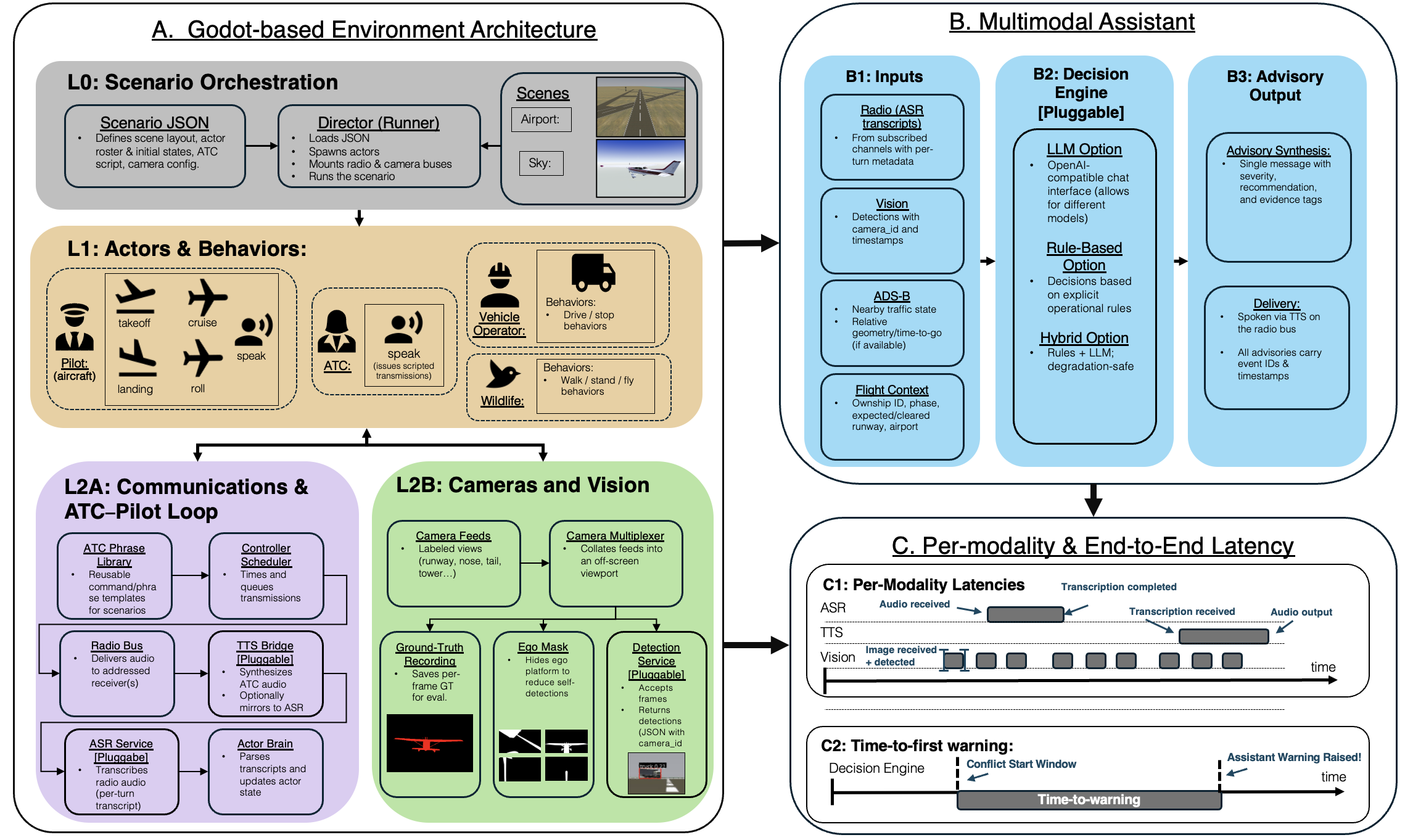

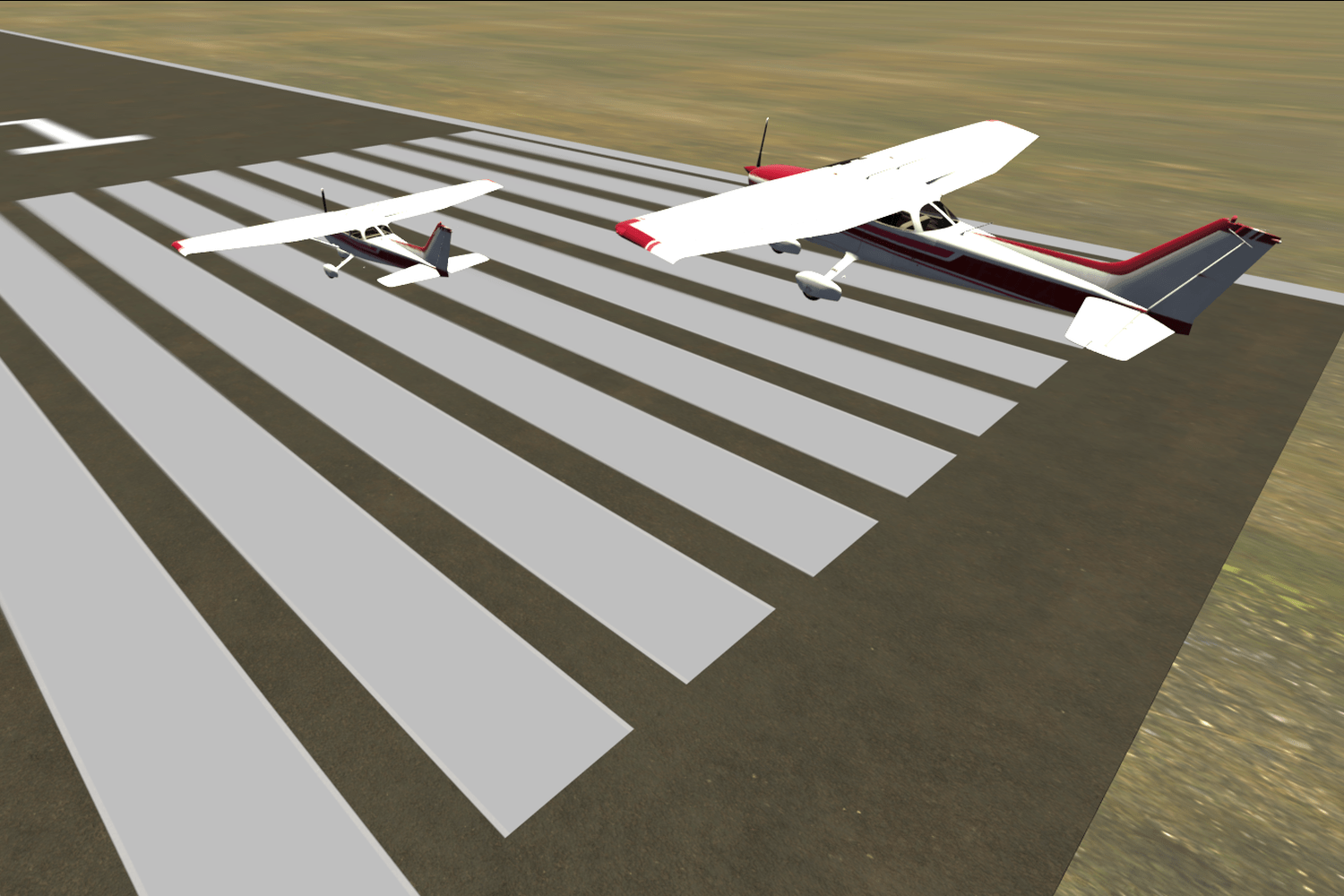

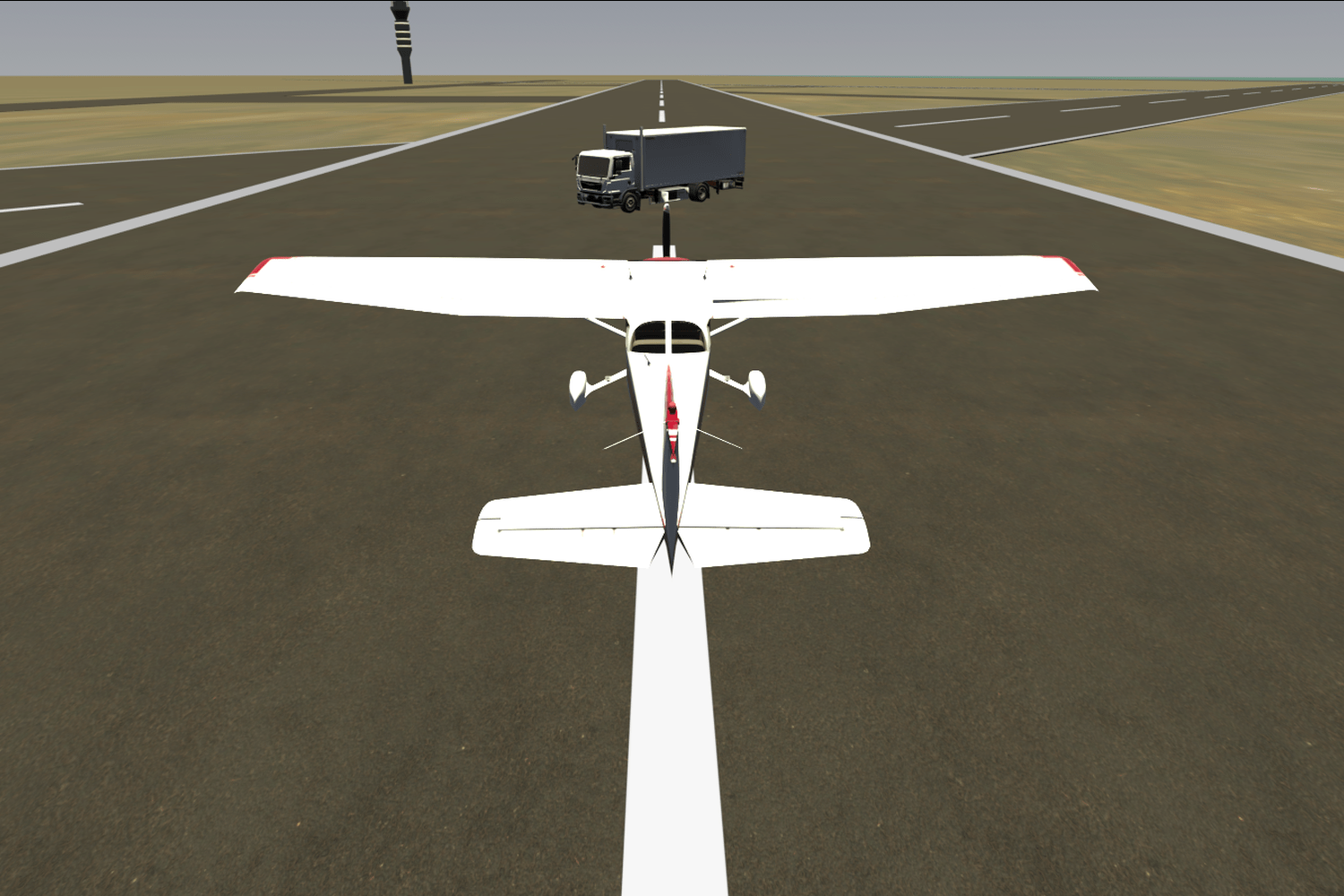

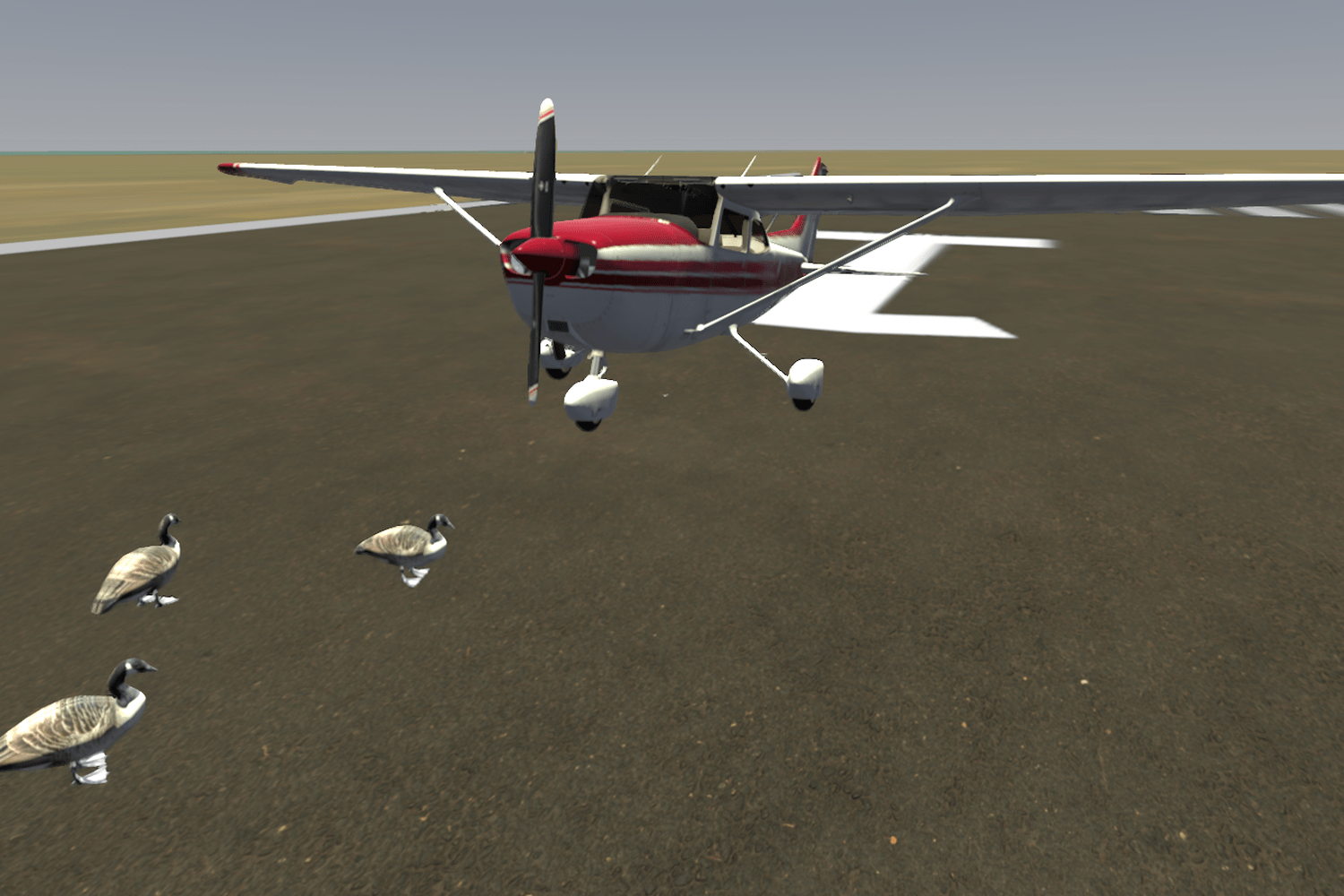

We introduce AIRHILT (Aviation Integrated Reasoning, Human-in-the-Loop Testbed), a modular and lightweight simulation environment designed to evaluate multimodal pilot and air traffic control (ATC) assistance systems for aviation conflict detection. Built on the open-source Godot engine, AIRHILT synchronizes pilot and ATC radio communications, visual scene understanding from camera streams, and ADS-B surveillance data within a unified, scalable platform. The environment supports pilot- and controller-in-the-loop interactions and provides a scenario suite covering both terminal area and en route operational conflicts, including communication errors and procedural mistakes. AIRHILT offers standardized JSON-based interfaces that enable researchers to integrate, swap, and evaluate automatic speech recognition (ASR), visual detection, decision-making, and text-to-speech (TTS) models. We demonstrate AIRHILT through a reference pipeline incorporating fine-tuned Whisper ASR, YOLO-based visual detection, ADS-B-based conflict logic, and GPT-OSS-20B structured reasoning. In preliminary results from representative runway-overlap scenarios, the assistant achieves an average time-to-first-warning of approximately 7.7 s, with average ASR and vision latencies of approximately 5.9 s and 0.4 s, respectively. The AIRHILT environment and scenario suite are openly available at https://github.com/ogarib3/airhilt, supporting reproducible research on multimodal situational awareness and conflict detection in aviation.

Aircraft situational awareness and conflict detection currently rely on accurate multi-aircraft surveillance and radio communications, with command and control of airspace operations managed centrally by human air traffic controllers (ATCs). However, human ATCs and pilots are vulnerable to overwork, fatigue, and loss of attention, increasing the risk of operational errors and conflict events [2], [3].

To alleviate workload pressures and reduce operational errors, there is a growing need for assistive aviation systems that combine recent advances in automatic speech recognition (ASR) and vision-based detection with existing surveillance data (e.g., ADS-B, radar). Such systems could proactively identify hazards such as traffic conflicts and runway incursions, enhancing situational awareness while minimizing additional workload for pilots and controllers.

However, the current testing landscape for such aviation assistive systems presents notable challenges. Physically collocated test environments are costly, time-consuming, and require careful scheduling of limited ATC and pilot availability. These sophisticated facilities typically include several pilot and controller workstations along with integrated displays and are commonly utilized for operational scenario studies [4], [5]. Yet, their availability is increasingly constrained by growing global aviation traffic and rising research demands associated with emerging aviation concepts such as unmanned aerial systems (UAS) and advanced air mobility (AAM) [6], [7]. Although rigorous testing at physical facilities remains essential for advanced development and certification phases, it is impractical for early-stage concept evaluations. Additionally, the rapidly expanding design space, driven by new machine learning models and varied computational, sensing, and communication architectures, further underscores the necessity of flexible, efficient, simulation-based environments suitable for rapid, systematic evaluations.

Such a simulation-based environment would significantly broaden access to aviation situational awareness research, allowing researchers worldwide to efficiently explore and identify promising candidate systems while conserving limited pilot and ATC resources. To address these needs, we introduce AIRHILT, a simulation environment explicitly designed to facilitate research into multimodal AI assistance systems through pilot- and controller-in-the-loop experimentation.

Contributions. Our contributions in this effort are as follows:

1) An open simulation environment that synchronizes pilot-to-ATC communications, ATC control tower camera views, aircraft-mounted camera streams, and ADS-B/radar data, enabling systematic evaluation of multimodal assistive systems with pilot- and controller-in-the-loop interactions.
2) A scalable scenario suite consisting of six conflict scenario families (three terminal and three en route) that model communication, procedural, and visually driven hazards, with parameterized variations in noise, visibility, geometry, and traffic configurations.
3) A reference multimodal pipeline that demonstrates environment capabilities through interchangeable components such as Whisper-based ASR, YOLO-based visual detection, ADS-B-based conflict logic, and a structured large language model (LLM) decision layer, with preliminary latency and time-to-first-warning metrics reported.
4) Reproducible artifacts including the simulation environment, scenario definitions, evaluation scripts, and documentation to support community use and extension.

The remainder of this paper is structured as follows: Section II provides background on air traffic management operations and the key components relevant to aviation situational awareness. Section III outlines related challenges to building such systems. Section IV details the environment and interfaces. Section V presents the designed scenarios. Section VI describes the reference pipeline and presents preliminary results from representative runway-overlap scenarios. Section VII provides concluding remarks, discusses current limitations, and introduces avenues for future work.
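As an illustration of how interchangeable components and a time-to-first-warning metric might fit together, the following is a minimal Python sketch. It is not AIRHILT's actual schema or evaluation code: the `Message` envelope, its field names (`source`, `sim_time_s`, `payload`), the `"warning"` payload key, and the definition of time-to-first-warning as the delay between an assumed conflict-onset time and the first warning from the decision layer are all hypothetical choices made for this example.

```python
import json
from dataclasses import dataclass, asdict
from typing import List, Optional


@dataclass
class Message:
    """Hypothetical JSON envelope; field names are illustrative only."""
    source: str        # assumed component label, e.g. "asr", "vision", "adsb", "decision"
    sim_time_s: float  # simulation timestamp in seconds
    payload: dict      # component-specific content


def encode(msg: Message) -> str:
    """Serialize a message to a JSON string."""
    return json.dumps(asdict(msg))


def decode(raw: str) -> Message:
    """Parse a JSON string back into a Message."""
    return Message(**json.loads(raw))


def time_to_first_warning(log: List[Message], conflict_onset_s: float) -> Optional[float]:
    """Seconds from the assumed conflict onset to the first decision-layer warning.

    Returns None if no warning is logged at or after the onset time.
    """
    warning_times = [
        m.sim_time_s
        for m in log
        if m.source == "decision"
        and m.payload.get("warning")
        and m.sim_time_s >= conflict_onset_s
    ]
    if not warning_times:
        return None
    return min(warning_times) - conflict_onset_s


# Example log with made-up values, round-tripped through JSON.
raw_log = [
    encode(Message("asr", 12.0, {"text": "cleared for takeoff"})),
    encode(Message("vision", 12.5, {"detections": ["aircraft"]})),
    encode(Message("decision", 17.5, {"warning": True})),
    encode(Message("decision", 20.0, {"warning": True})),
]
log = [decode(r) for r in raw_log]
ttfw = time_to_first_warning(log, conflict_onset_s=10.0)
```

Because every component in this sketch exchanges the same envelope, replacing one ASR or detection model with another would only require the replacement to emit messages of the same shape; the metric function does not depend on which model produced them.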

Aviation operations encompass both air traffic management and conflict detection, including the determination, sequencing, and issuance of clearances from departure through en route and approach phases [8], [9], as well as the identification and mitigation of hazards such as wildlife encounters, mechanical issues, and other unexpected conflicts [10]. Effective traffic management maintains prescribed separation between aircraft while preserving operational efficiency under current operational conditions. Controllers integrate surveillance data (e.g., ADS-B and radar) provided via tower infrastructure, direct visual observations from the control tower, and standardized voice communications with pilots to formulate and issue clearances and instructions [11]. In parallel, pilots routinely manage
