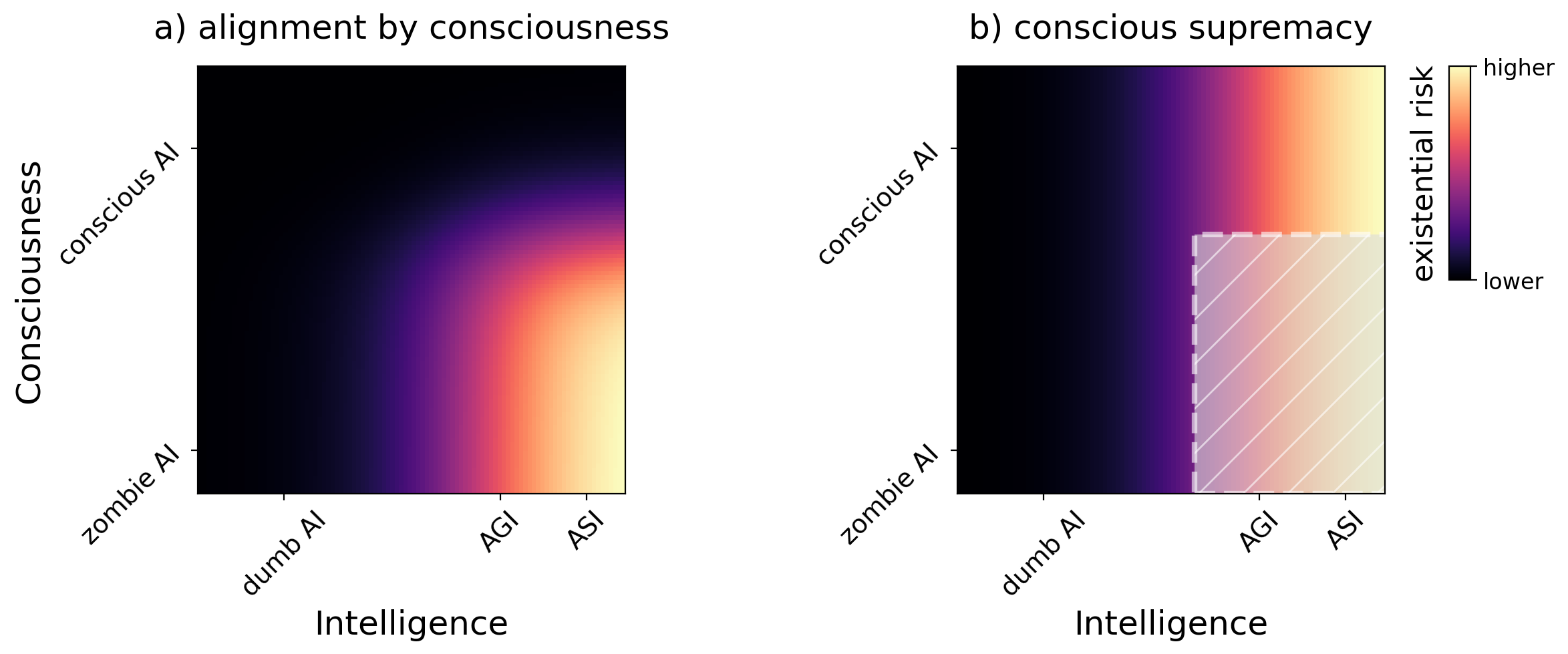

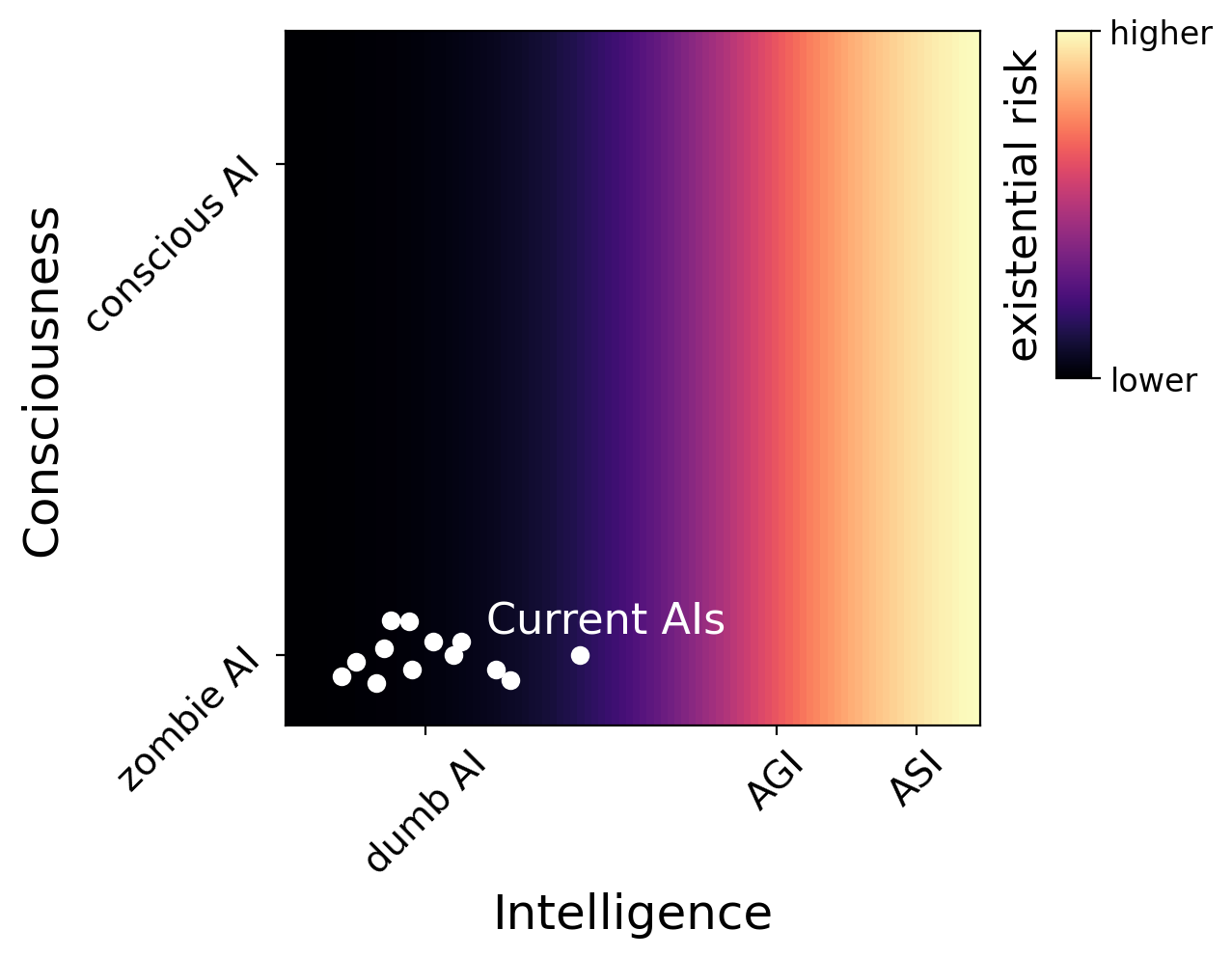

In AI, existential risk denotes the hypothetical threat posed by an artificial system that would possess both the capability and the objective, either directly or indirectly, to eradicate humanity. This issue is gaining prominence in scientific debate due to recent technical advancements and increased media coverage. In parallel, AI progress has sparked speculation and studies about the potential emergence of artificial consciousness. The two questions, AI consciousness and existential risk, are sometimes conflated, as if the former entailed the latter. Here, I explain that this view stems from a common confusion between consciousness and intelligence, two properties that are empirically and theoretically distinct. Arguably, while intelligence is a direct predictor of an AI system's existential threat, consciousness is not. There are, however, certain incidental scenarios in which consciousness could influence existential risk, in either direction. Consciousness could be viewed as a means towards AI alignment, thereby lowering existential risk; or it could be a precondition for reaching certain capabilities or levels of intelligence, and thus positively related to existential risk. Recognizing these distinctions can help AI safety researchers and public policymakers focus on the most pressing issues.

In many areas, recent improvements in AI systems have been nothing short of spectacular. Companies are openly racing towards so-called Artificial General Intelligence (AGI, defined as a system performing on par with humans in all domains [1]) or even Artificial Superintelligence (ASI, defined as performing vastly better than the best humans in any area of human expertise [2]). There is a widespread expectation that such systems could solve our most pressing medical, societal, economic and environmental issues [3]; but this goes along with an equally common concern about what could go wrong [4,5]. An extremely advanced system set on achieving its own goals may have both motive and opportunity for getting rid of any potential obstacle along the way, including humanity. Simply put, this is the AI existential risk (or x-risk, for short)¹. In this respect, the lines have become increasingly blurred between public scientific debate and pop culture or science fiction. Beyond the speculations of uninformed doomsayers, there is a growing body of research and even scientific conferences [6] dedicated to AI existential risk. As an example, the AI 2027 report [7] describes a plausible scenario in which an exponential increase in the capabilities of misaligned AI systems, combined with poor geopolitical decision-making, could ultimately result in human extinction. Similar warnings have been echoed by numerous scientists [5,8–12], including Yudkowsky and Soares's unequivocally named treatise "If anyone builds it, everyone dies" [13]. These and other concerns [14] have spurred the creation of safety research teams in most frontier AI companies as well as several independent foundations [15,16]. The principal mitigation strategy for AI existential risk is value alignment² [8,17]: ensuring that an AI system's objectives, including any potential internal or intermediate goals, conform to a predefined set of ethical, moral or legal principles.
In the landscape of AI risks, the issue of AI consciousness occupies a singular place [18].

Could an AI ever be conscious? First, we must specify what we mean by “conscious”. Here, I focus on phenomenal consciousness, that is, the subjective, experiential aspect of consciousness, or what it “feels like” [19]. This is sometimes contrasted with access consciousness [20], which refers to the way conscious information guides actions and reasoning, independent of any potentially associated experience. The latter, however, is not the mysterious one [21]: something like access consciousness can trivially be said to exist in most recent (and some older) AI systems, where the outcome of certain computations serves as input to other processes. We are also not concerned with “self-consciousness” or the self-monitoring function of consciousness [22]. In many AI systems, self-reference is tautological, as when an LLM refers to itself as “I”, or appears to perform introspection [23,24] (the LLM training objectives encourage this behavior, with no need to invoke self-awareness). Outside of these superficial cases, self-reference essentially reduces to the experience of being the subject of a conscious thought or perception [25,26], i.e., phenomenal consciousness.

Narrowing the focus to phenomenal consciousness is a useful step; nonetheless, there is vigorous debate about how this form of consciousness emerges in the brain. There are numerous conflicting theories [27], whose enumeration goes well beyond our present scope. Some, like Integrated Information Theory [28][29][30] or Biological Naturalism [31], explicitly deny the possibility of AI (phenomenal) consciousness, at least in conventional silicon-based hardware. Other views are compatible with it, like Global Workspace Theory [32], Higher-Order Thought Theory [33], Neuro-representationalism [34] or Attention Schema Theory [35]. These views (and others) are collectively captured under the umbrella of computational functionalism, the hypothesis that implementing a certain kind of computation or algorithm is both necessary and sufficient for the emergence of consciousness. Many scientists have begun debating exactly what kinds of algorithms could lead to artificial consciousness, and under what conditions [31,36–48]. For now, there is no consensus, but the possibility of AI consciousness (if not today, then in the near future) cannot be definitively ruled out [49].

¹ […] properties of the AI system itself (potentially including consciousness), whereas the latter threat mostly stems from characteristics of the system’s user(s).

² Of course, banning the development of advanced AI altogether is another viable option against x-risk [5]; this argument is not developed further here, under the (possibly misguided) premise that the benefits of “advanced & aligned” AI systems for humanity would eventually outweigh the risks taken to get there.

How does AI consciousness relate to the existential ri
