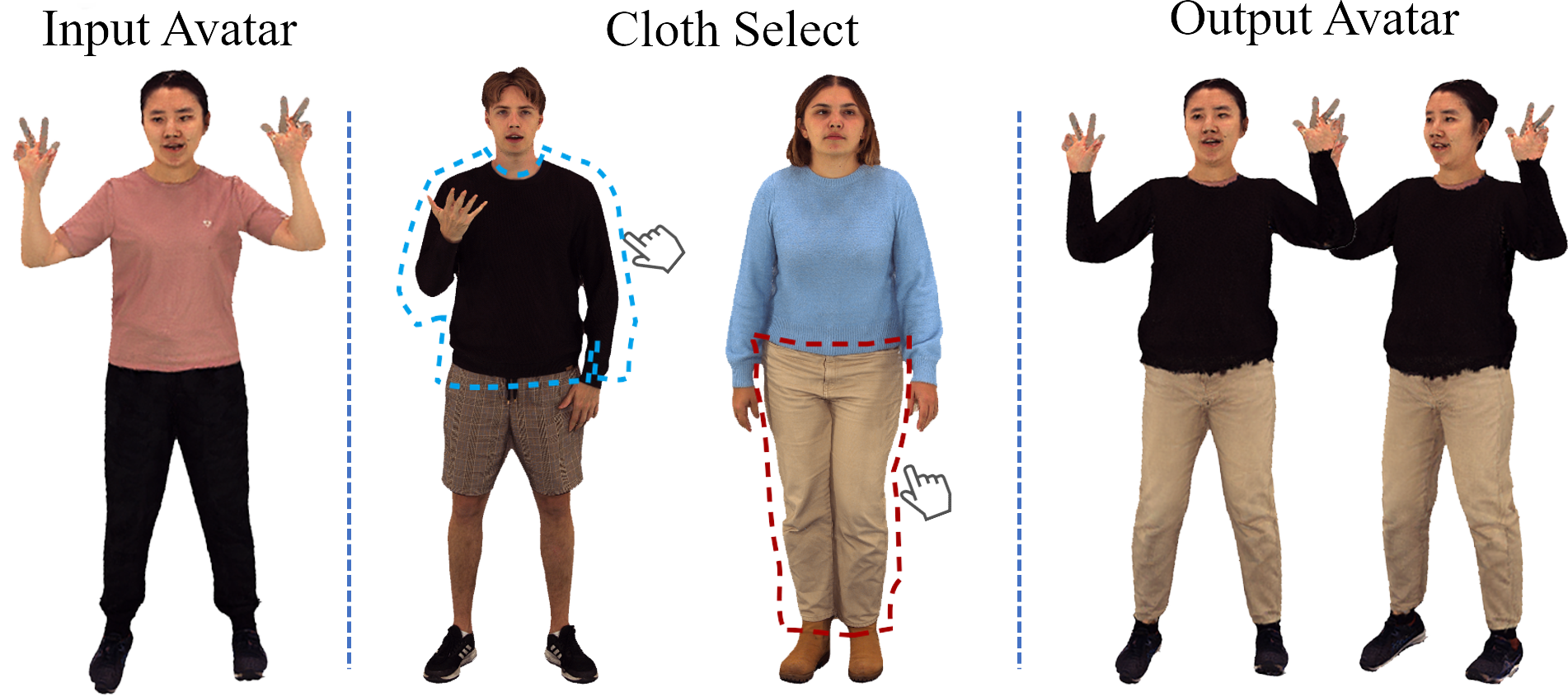

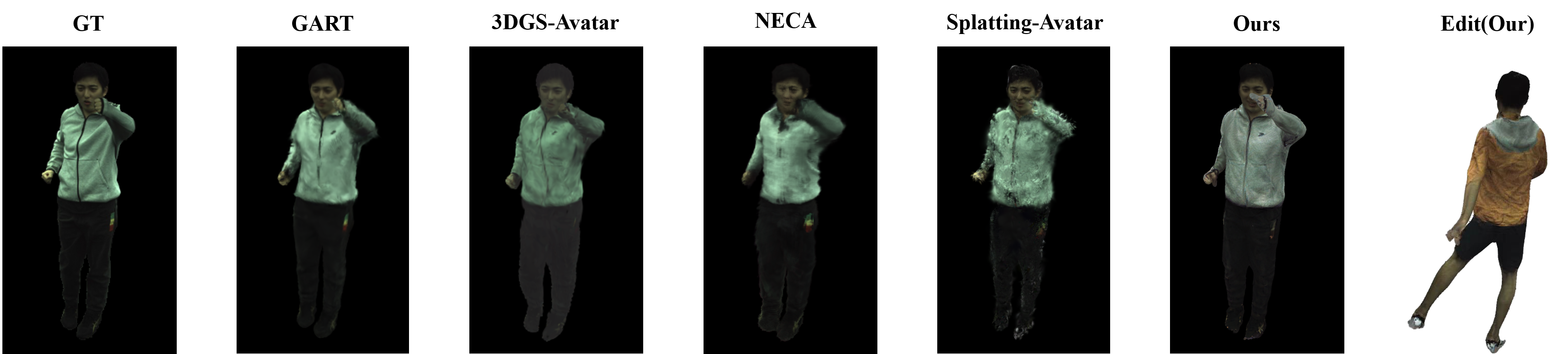

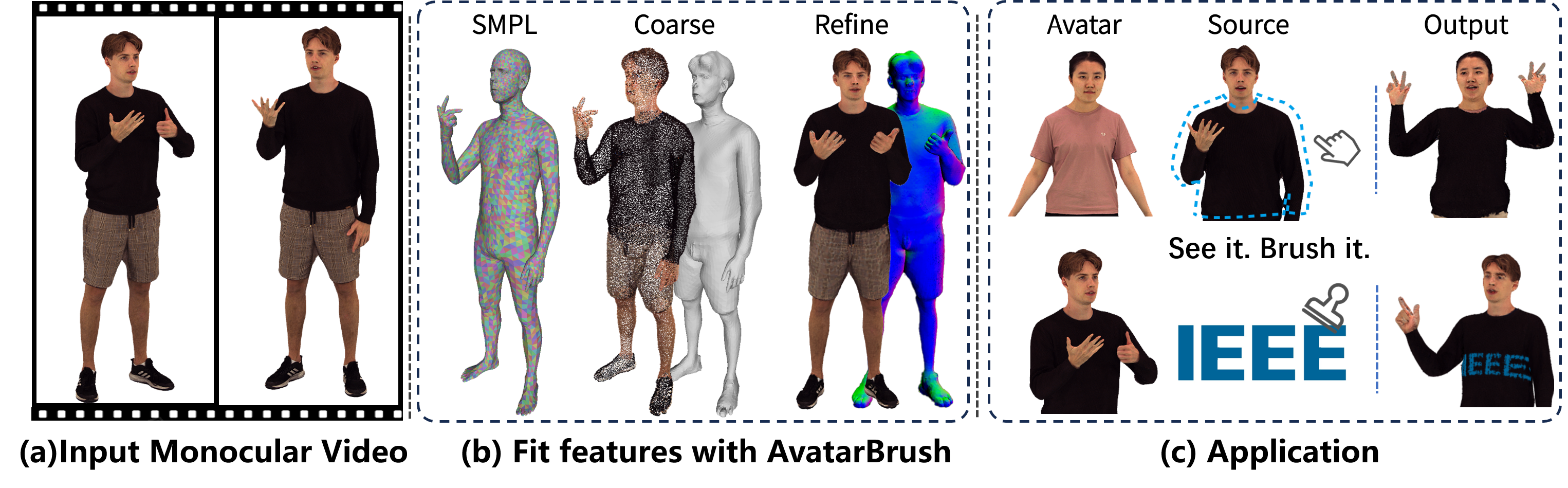

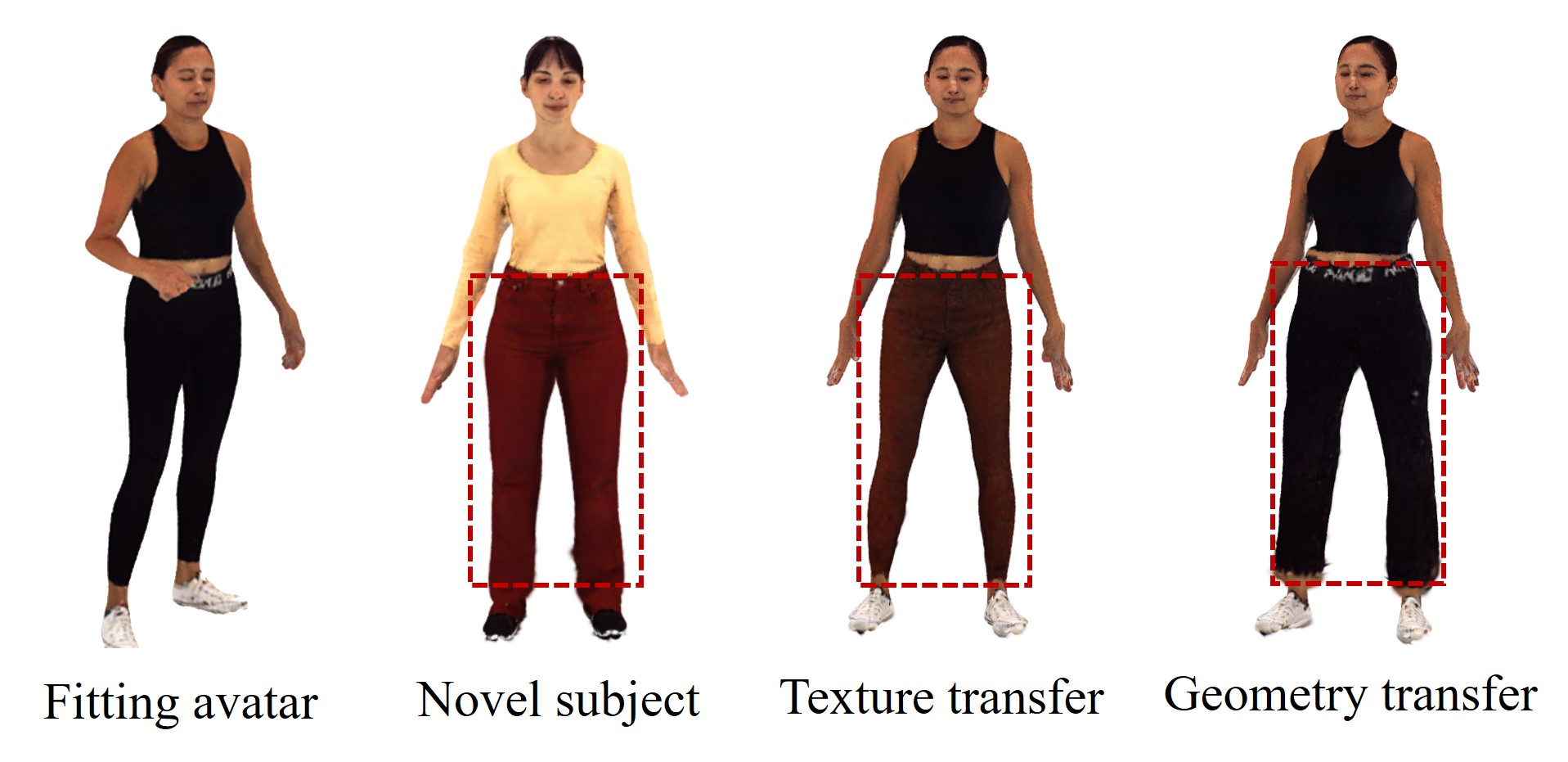

The efficient reconstruction of high-quality, intuitively editable human avatars is a pressing challenge in computer vision. Recent advances such as 3D Gaussian Splatting (3DGS) have demonstrated impressive reconstruction efficiency and rapid rendering speeds. However, intuitive local editing of these representations remains difficult. In this work, we propose AvatarBrush, a framework that reconstructs fully animatable and locally editable avatars from only a monocular video. We represent the avatar with a three-layer model and, inspired by mesh morphing techniques, design a framework that generates the Gaussian model from local information of the parametric body model. Compared with previous methods that require scanned meshes or multi-view captures as input, our approach reduces acquisition costs and enhances editing capabilities such as body shape adjustment, local texture modification, and geometry transfer. Experimental results on two datasets demonstrate superior reconstruction quality and highlight the user-friendly, localized editing capabilities of our method.

Creating high-fidelity clothed human models has significant applications in virtual reality, telepresence, and movie production. Explicit methods [2], [30] are generally easier to edit, allowing straightforward adjustments to the model's features; however, they often struggle to reconstruct high-frequency details directly. In contrast, implicit methods such as occupancy fields [42], [43], signed distance fields (SDFs) [51], and neural radiance fields (NeRFs) [15], [24], [36], [49] have been developed to learn the clothed human body, often through volume rendering. These implicit methods can achieve high-precision reconstructions, but they are typically difficult to edit. Many approaches therefore combine implicit and explicit methods to leverage the strengths of both.

CustomHuman [10] combines the SMPL-X model with an SDF to generate the human avatar. It defines geometry and texture features on the vertices of a deformable body model and stores them in a codebook, exploiting the body model's consistent topology during movement and deformation. This method enables feature transfer for model editing, but it requires high-accuracy scan data for fitting, which makes constructing a new digital human very costly.

M. Li, S. Yao, Y. Pan, and Z. Xie are with Shanghai University, Shanghai, 200444, China. (e-mail: mtli, yaosx033, zhifengxie@shu.edu.cn; pany7066@gmail.com) (*Zhifeng Xie is the corresponding author.) M. Li and Z. Xie are also with the Shanghai Film Visual Effects Engineering and Technology Research Center, Shanghai, 200444, China.

H. Xiao is with Tavus Inc., San Francisco, CA 94105, USA. (e-mail: haiyao@tavus.dev) Z. Li is with the East China University of Science and Technology (ECUST), Shanghai, 200237, China. (e-mail: zhongmeili@ecust.edu.cn) K. Chen is with Pinch Inc., San Francisco, CA 94105, USA. (e-mail: chern9511@gmail.com)

Figure panel labels (method comparison): [35], 3DGS-Avatar [22], SplattingAvatar [44], CustomHuman [10], NECA [50], AvatarBrush (ours).
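The feature transfer described for CustomHuman works because every avatar shares the same template topology, so a feature stored at vertex i always refers to the same body location. The Python sketch below illustrates only that indexing idea, under our own assumptions: features are plain per-vertex vectors, an editing region is a set of vertex indices, and `VertexFeatureCodebook` and `transfer_region` are hypothetical names, not CustomHuman's code (which additionally decodes features through an SDF network).

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class VertexFeatureCodebook:
    """Hypothetical per-vertex feature store for one avatar identity.

    Assumes all identities share one template mesh (same vertex count and
    ordering), so index i denotes the same body location for every avatar.
    """
    num_vertices: int
    dim: int
    features: List[List[float]] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Default to zero features when none are supplied.
        if not self.features:
            self.features = [[0.0] * self.dim for _ in range(self.num_vertices)]
        if len(self.features) != self.num_vertices:
            raise ValueError("need exactly one feature vector per vertex")
        if any(len(f) != self.dim for f in self.features):
            raise ValueError("every feature vector must have length dim")


def transfer_region(source: VertexFeatureCodebook,
                    target: VertexFeatureCodebook,
                    region: Set[int]) -> VertexFeatureCodebook:
    """Return a copy of `target` whose features on `region` come from `source`.

    Both codebooks index the same template vertices, so copying by index
    moves, e.g., a garment region's features onto another identity.
    """
    if (source.num_vertices, source.dim) != (target.num_vertices, target.dim):
        raise ValueError("codebooks must share topology and feature size")
    merged = [list(f) for f in target.features]  # leave `target` untouched
    for i in region:
        merged[i] = list(source.features[i])
    return VertexFeatureCodebook(target.num_vertices, target.dim, merged)


if __name__ == "__main__":
    src = VertexFeatureCodebook(3, 2, [[1.0, 1.0], [2.0, 2.0], [3.0, 3.0]])
    dst = VertexFeatureCodebook(3, 2)
    print(transfer_region(src, dst, {0, 2}).features)
    # [[1.0, 1.0], [0.0, 0.0], [3.0, 3.0]]
```

Because the topology is fixed, the transfer is a pure index copy; the hard part in practice is learning features that remain meaningful after being moved, which this sketch does not address.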

NECA [50] binds local features to vertices and uses each vertex's tangent space to propagate these features to sampled points. This method allows the neural avatar to be modified by adjusting SMPL shape parameters and supports transferring clothing features between avatars through network transfer. However, NeRF-based methods are limited in fine-grained local editing and tend to render slowly. IntrinsicNGP [55] converts the coordinates of sampled points from world space into local coordinates expressed in a UVD grid, so that points can be represented with a hash grid; this accelerates rendering but makes it difficult to alter the model's shape.
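Both IntrinsicNGP's UVD coordinates and NECA's tangent-space features re-express a query point relative to the body surface. A minimal sketch of that idea for a single triangle follows, assuming (our simplification, not either paper's code) that u and v are barycentric weights of the point's projection onto the triangle's plane and d is its signed distance along the unit face normal. Nearest-triangle search and clamping to the triangle are omitted, and `world_to_uvd` and `uvd_to_world` are hypothetical names.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _sub(p: Vec3, q: Vec3) -> Vec3:
    return (p[0] - q[0], p[1] - q[1], p[2] - q[2])


def _dot(p: Vec3, q: Vec3) -> float:
    return p[0] * q[0] + p[1] * q[1] + p[2] * q[2]


def _cross(p: Vec3, q: Vec3) -> Vec3:
    return (p[1] * q[2] - p[2] * q[1],
            p[2] * q[0] - p[0] * q[2],
            p[0] * q[1] - p[1] * q[0])


def _unit_normal(a: Vec3, b: Vec3, c: Vec3) -> Vec3:
    n = _cross(_sub(b, a), _sub(c, a))
    length = math.sqrt(_dot(n, n))
    if length == 0.0:
        raise ValueError("degenerate triangle")
    return (n[0] / length, n[1] / length, n[2] / length)


def world_to_uvd(p: Vec3, a: Vec3, b: Vec3, c: Vec3) -> Vec3:
    """Express world point p as (u, v, d) relative to triangle (a, b, c).

    u, v: barycentric weights of b and c for p's projection onto the
          triangle's plane (the weight of a is 1 - u - v).
    d:    signed distance from that plane along the unit face normal.
    """
    e1, e2 = _sub(b, a), _sub(c, a)
    n = _unit_normal(a, b, c)  # raises on degenerate input before we divide
    w = _sub(p, a)
    d = _dot(w, n)
    # Remove the normal component, leaving the in-plane offset q = u*e1 + v*e2.
    q = (w[0] - d * n[0], w[1] - d * n[1], w[2] - d * n[2])
    # Solve the 2x2 normal equations for (u, v).
    g11, g12, g22 = _dot(e1, e1), _dot(e1, e2), _dot(e2, e2)
    r1, r2 = _dot(q, e1), _dot(q, e2)
    det = g11 * g22 - g12 * g12
    u = (g22 * r1 - g12 * r2) / det
    v = (g11 * r2 - g12 * r1) / det
    return (u, v, d)


def uvd_to_world(uvd: Vec3, a: Vec3, b: Vec3, c: Vec3) -> Vec3:
    """Inverse mapping: rebuild a world point from (u, v, d) on a triangle."""
    u, v, d = uvd
    e1, e2 = _sub(b, a), _sub(c, a)
    n = _unit_normal(a, b, c)
    return tuple(a[i] + u * e1[i] + v * e2[i] + d * n[i] for i in range(3))


if __name__ == "__main__":
    tri = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
    uvd = world_to_uvd((0.25, 0.5, 2.0), *tri)        # (0.25, 0.5, 2.0)
    lifted = tuple((x, y, z + 1.0) for x, y, z in tri)
    print(uvd_to_world(uvd, *lifted))                 # (0.25, 0.5, 3.0)
```

The usage lines show why surface-relative coordinates are attractive for avatars: when the triangle moves, decoding the same (u, v, d) moves the point with it.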

Our objective is to go beyond current approaches by creating realistic avatars from monocular videos that support efficient local editing of texture and geometry with greater computational efficiency. We observe that Gaussian-based methods [4], [8], [32] generate models efficiently by representing the entire model as a point cloud in which each point is rendered as a disk. To organize the point cloud, methods such as GaussianAvatars [40] and SplattingAvatar [44] use a mesh as the underlying model and define Gaussians in the local coordinate systems of its faces, building a radiance field over the mesh. This yields a high-fidelity avatar at a low deformation cost. Zhan et al. [53] leverage both the pose vector and local features derived from anchor points on the SMPL model to generate subtle wrinkles. However, none of these methods support editing the avatar directly by manipulating Gaussians or adjusting SMPL parameters.
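The mesh-anchored idea used by GaussianAvatars and SplattingAvatar can be made concrete with a small sketch: each Gaussian stores its mean and scale in a frame attached to one triangle, so when the mesh deforms, the Gaussian follows at the cost of recomputing one frame per face. This is a simplified illustration under our own assumptions (frame built from one edge and the face normal, a scale factor of sqrt(2 × area), rotations omitted); it is not either paper's exact parameterization, and `triangle_frame` and `gaussian_to_world` are hypothetical names.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _sub(p: Vec3, q: Vec3) -> Vec3:
    return (p[0] - q[0], p[1] - q[1], p[2] - q[2])


def _dot(p: Vec3, q: Vec3) -> float:
    return p[0] * q[0] + p[1] * q[1] + p[2] * q[2]


def _cross(p: Vec3, q: Vec3) -> Vec3:
    return (p[1] * q[2] - p[2] * q[1],
            p[2] * q[0] - p[0] * q[2],
            p[0] * q[1] - p[1] * q[0])


def _scale(p: Vec3, s: float) -> Vec3:
    return (p[0] * s, p[1] * s, p[2] * s)


def triangle_frame(a: Vec3, b: Vec3, c: Vec3):
    """Return (origin, (x, y, z), k) for a local frame attached to a triangle.

    origin: triangle centroid.
    x, y, z: orthonormal axes, x along edge a->b and z the unit face normal.
    k: sqrt(|(b - a) x (c - a)|) = sqrt(2 * area); it grows linearly with the
       triangle, so uniformly enlarging the mesh enlarges its Gaussians.
    """
    e1, e2 = _sub(b, a), _sub(c, a)
    n = _cross(e1, e2)
    twice_area = math.sqrt(_dot(n, n))
    if twice_area == 0.0:
        raise ValueError("degenerate triangle")
    z = _scale(n, 1.0 / twice_area)
    x = _scale(e1, 1.0 / math.sqrt(_dot(e1, e1)))
    y = _cross(z, x)  # unit length because z and x are orthonormal
    origin = tuple((a[i] + b[i] + c[i]) / 3.0 for i in range(3))
    return origin, (x, y, z), math.sqrt(twice_area)


def gaussian_to_world(local_mean: Vec3, local_scale: Vec3,
                      a: Vec3, b: Vec3, c: Vec3):
    """Map a Gaussian stored in a triangle's local frame to world space.

    Mean:  origin + k * R @ local_mean, where R's columns are the frame axes.
    Scale: k * local_scale, still measured along the frame axes.
    """
    origin, (x, y, z), k = triangle_frame(a, b, c)
    lx, ly, lz = local_mean
    mean = tuple(origin[i] + k * (lx * x[i] + ly * y[i] + lz * z[i])
                 for i in range(3))
    scale = tuple(k * s for s in local_scale)
    return mean, scale
```

Only the per-face frames depend on the pose; the local Gaussian parameters stay fixed, which is what keeps deformation cheap in this style of representation.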

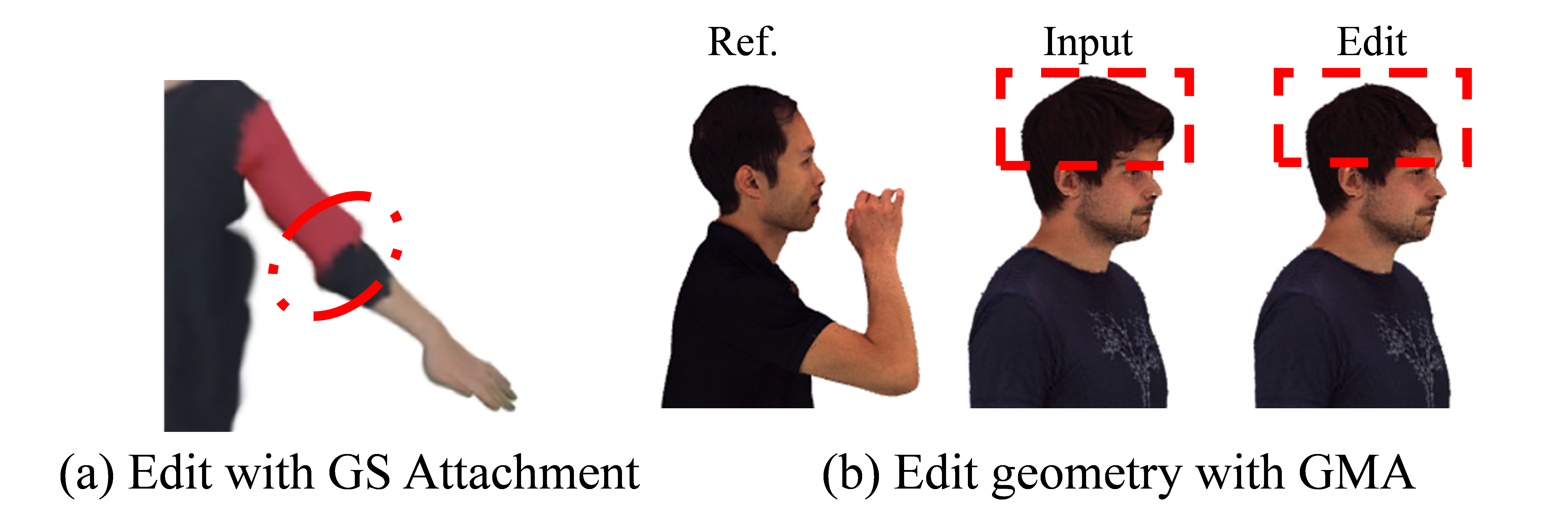

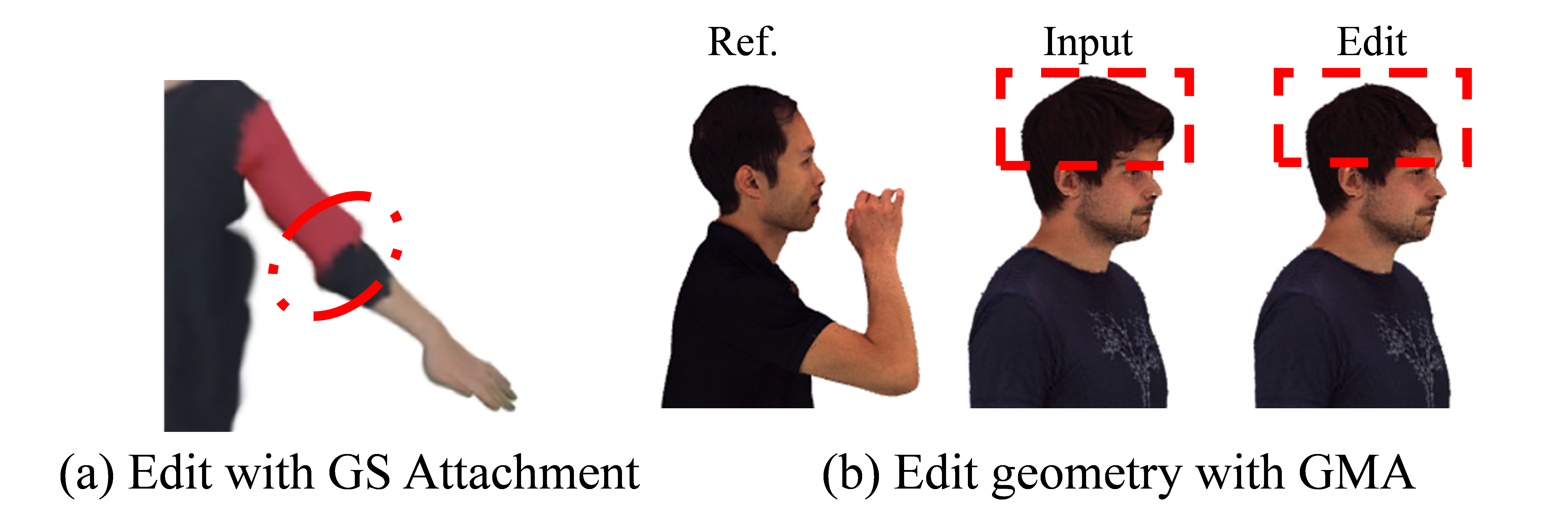

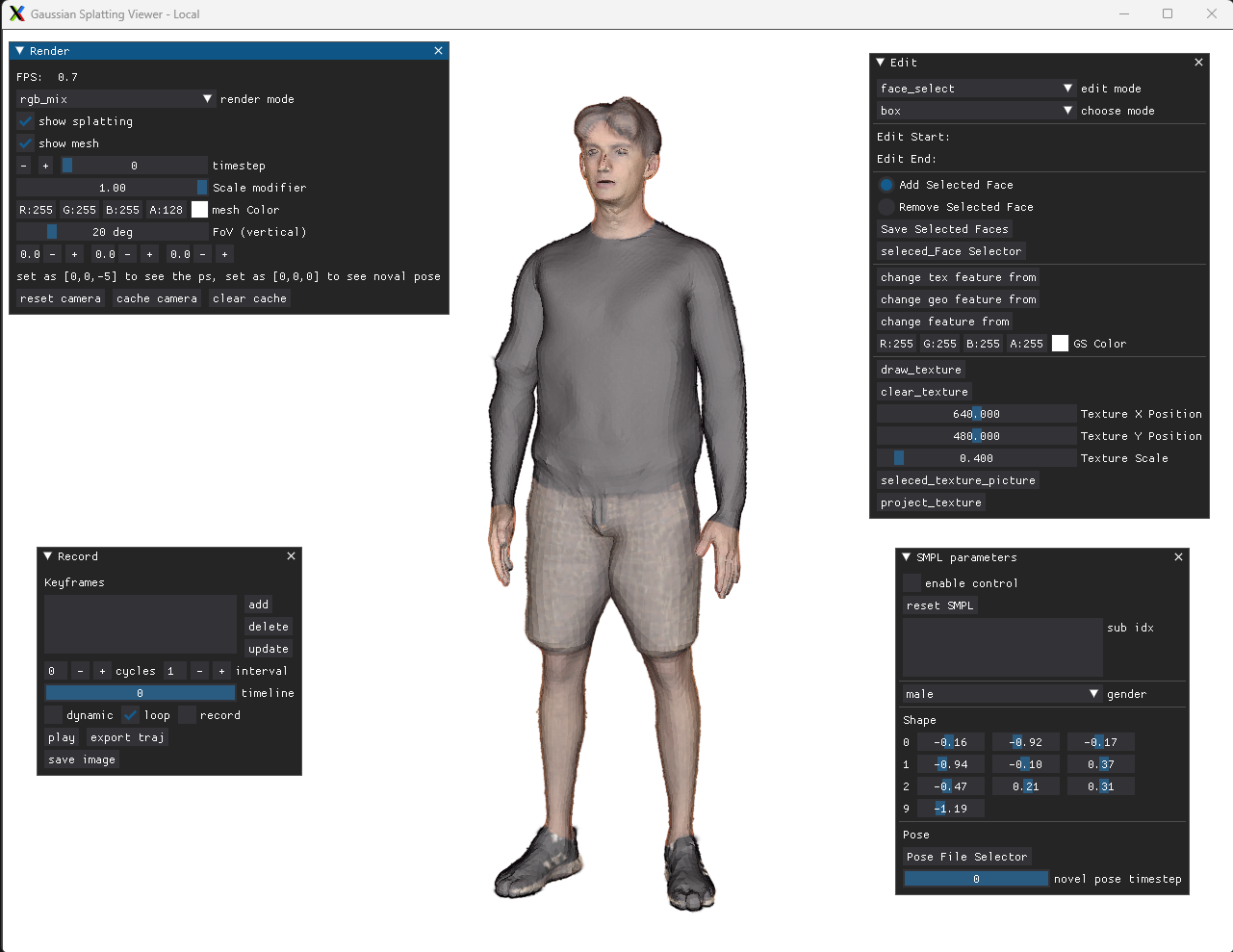

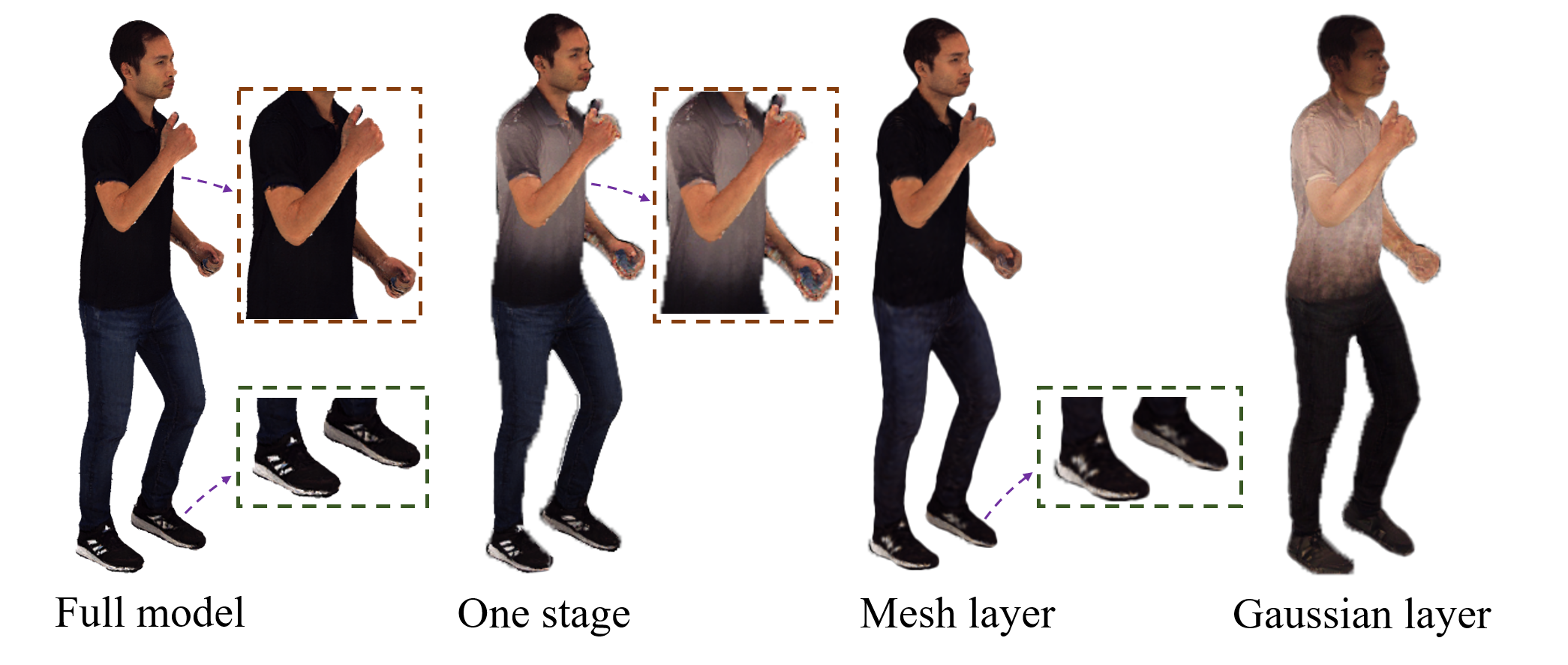

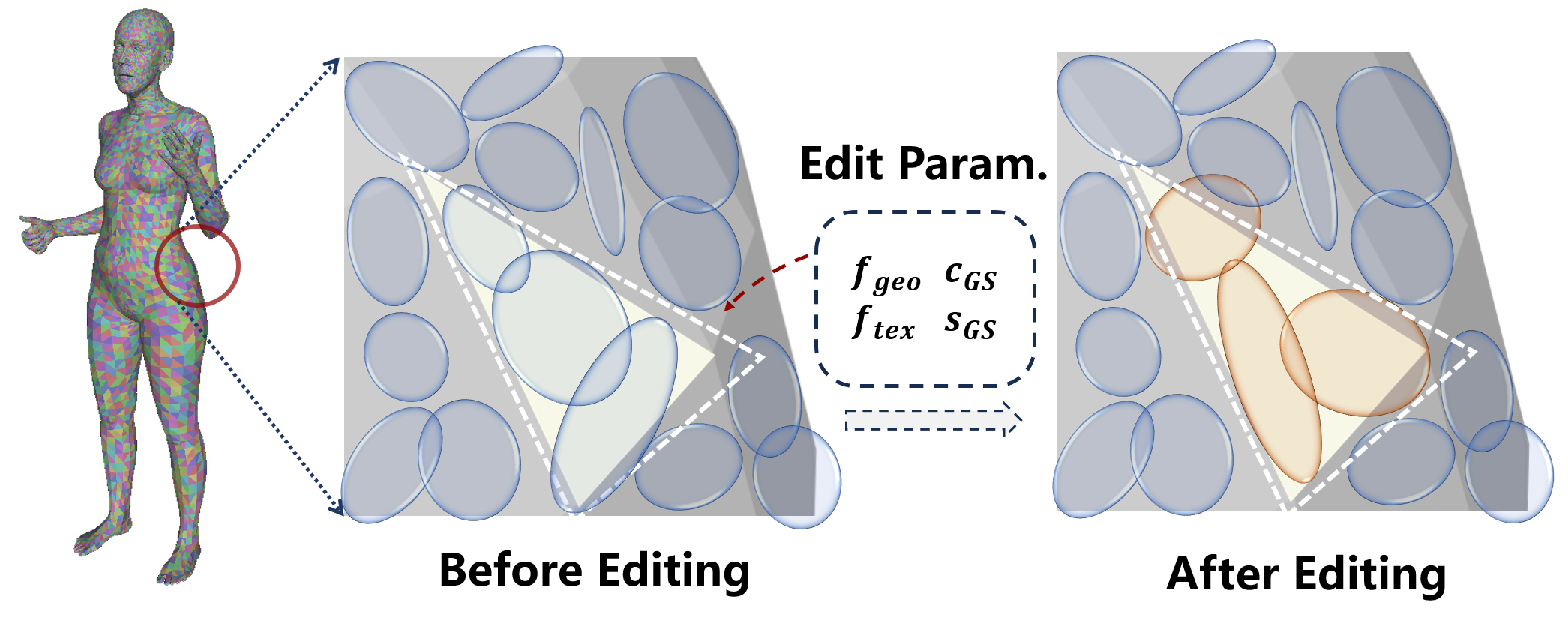

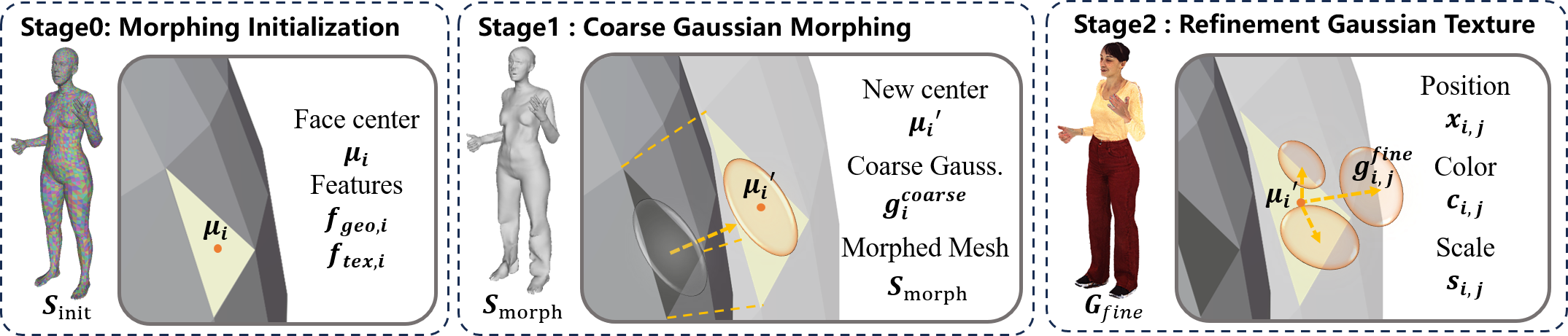

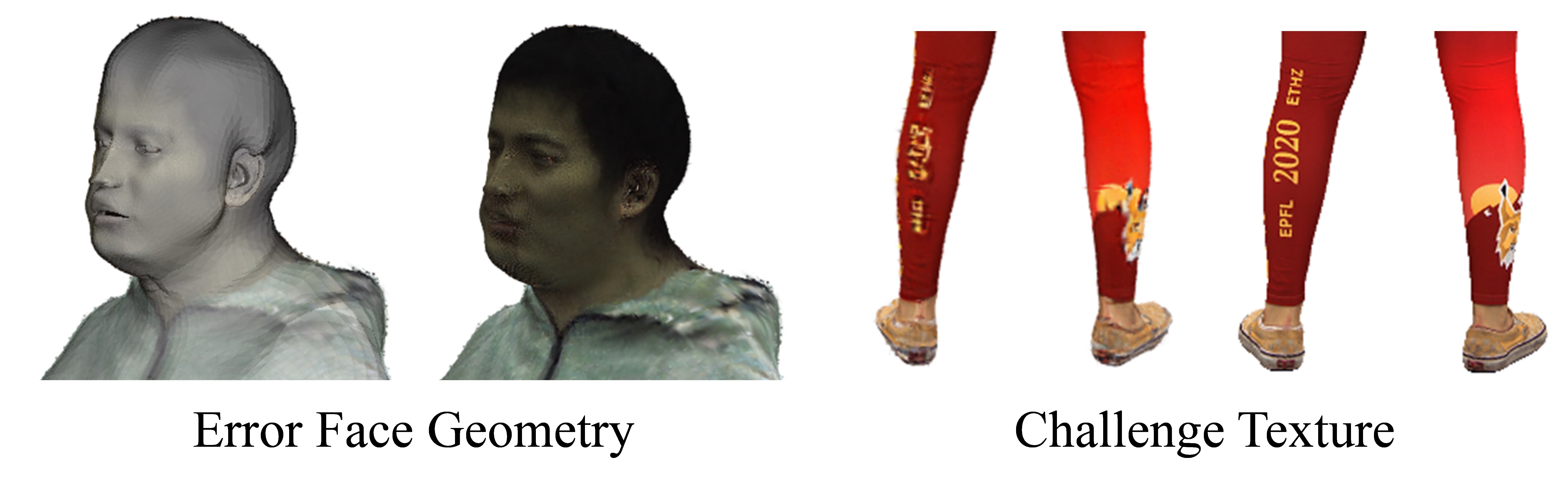

Fig. 1. Using a monocular video as input, we fit a set of features to generate an editable 3D avatar. Leveraging our specialized representation, GMA, this avatar can be easily edited in both texture and geometry by transferring features, while also supporting animation with hand poses and expressions. Our avatar model facilitates the transfer of garments across different identities and allows logos and other customizable elements to be stamped onto the avatar in a user-friendly, interactive, real-time editing interface.

Modeling an editable avatar with Gaussian Splatting presents several critical challenges. The first is how to bind Gaussians to the explicit mesh while preserving editing capabilities. Simply binding Gaussians to each face and then altering the SMPL surface can introduce artifacts, because the properties of the Gaussian representation differ inherently from those of the mesh. To address this issue, we propose a novel representation called Gaussian Morphing Avatar (GMA), which combines three layers (a feature layer, a mesh layer, and a Gaussian layer) to create an editable avatar, as shown in Fig. 2. We apply face offsets to generate a Gaussian-embedded mesh that preserves the original topology of the explicit parametric model. To improve the quality of this representation, we further subdivide coarse Gaussians, enhancing texture detail and capturing finer surface geometry.
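The face offsets and the subdivision of coarse Gaussians can be pictured with a short sketch. It assumes, purely for illustration, that each coarse Gaussian belongs to one mesh face, that the face offset is a single scalar displacement along the face's unit normal, and that refinement is standard 1-to-4 midpoint subdivision with one child Gaussian at each sub-triangle centroid. GMA's actual offset and subdivision rules are not specified in this section, and `subdivide` and `child_gaussian_centers` are hypothetical names.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Tri = Tuple[Vec3, Vec3, Vec3]


def _mid(p: Vec3, q: Vec3) -> Vec3:
    return ((p[0] + q[0]) / 2, (p[1] + q[1]) / 2, (p[2] + q[2]) / 2)


def _unit_normal(t: Tri) -> Vec3:
    a, b, c = t
    e1 = (b[0] - a[0], b[1] - a[1], b[2] - a[2])
    e2 = (c[0] - a[0], c[1] - a[1], c[2] - a[2])
    n = (e1[1] * e2[2] - e1[2] * e2[1],
         e1[2] * e2[0] - e1[0] * e2[2],
         e1[0] * e2[1] - e1[1] * e2[0])
    length = math.sqrt(n[0] ** 2 + n[1] ** 2 + n[2] ** 2)
    if length == 0.0:
        raise ValueError("degenerate face")
    return (n[0] / length, n[1] / length, n[2] / length)


def subdivide(t: Tri) -> List[Tri]:
    """Split one triangle into four by connecting its edge midpoints."""
    a, b, c = t
    ab, bc, ca = _mid(a, b), _mid(b, c), _mid(c, a)
    return [(a, ab, ca), (ab, b, bc), (ca, bc, c), (ab, bc, ca)]


def child_gaussian_centers(face: Tri, offset: float,
                           levels: int = 1) -> List[Vec3]:
    """Centers of 4**levels child Gaussians for one coarse face.

    Each child sits at a sub-triangle centroid, pushed along the parent
    face's unit normal by `offset` (a hypothetical per-face scalar offset).
    """
    tris = [face]
    for _ in range(levels):
        tris = [child for parent in tris for child in subdivide(parent)]
    n = _unit_normal(face)
    centers = []
    for a, b, c in tris:
        centers.append(tuple((a[i] + b[i] + c[i]) / 3 + offset * n[i]
                             for i in range(3)))
    return centers


if __name__ == "__main__":
    face = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
    print(len(child_gaussian_centers(face, 0.1, levels=2)))  # 16
```

In this sketch, every child is derived from its parent face, so it can inherit that face's index in the template topology; per-face features and their edits would therefore still apply after refinement.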

Another challenge is how to map the features to the final Gaussian. I
