In this treatment a text is considered to be a series of word impulses which are read at a constant rate. The brain then assembles these units of information into higher units of meaning. A classical systems approach is used to model an initial part of this assembly process. The concepts of linguistic system response, information energy, and ordering energy are defined and analyzed. Finally, as a demonstration, information energy is used to estimate the publication dates of a series of texts and the similarity of a set of texts.

This paper represents an effort to apply a classic systems approach to the modeling of linguistic processes. Specifically a model is posited from which statistics about the effort required by the reader of a given text can be drawn. This effort is termed the information energy.

The motivational linguistic model is developed first. This is followed by several computations exploring typical behavior of the statistic.

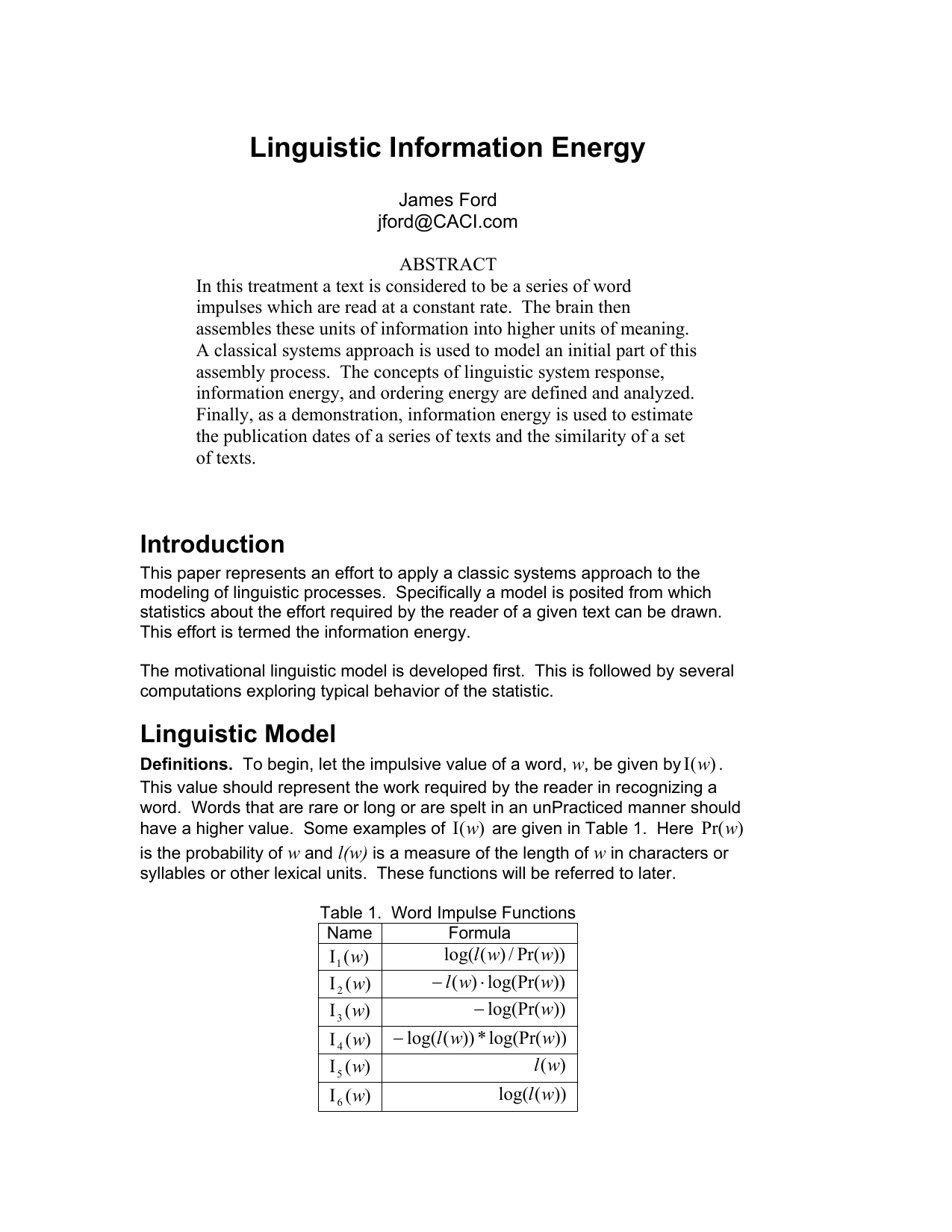

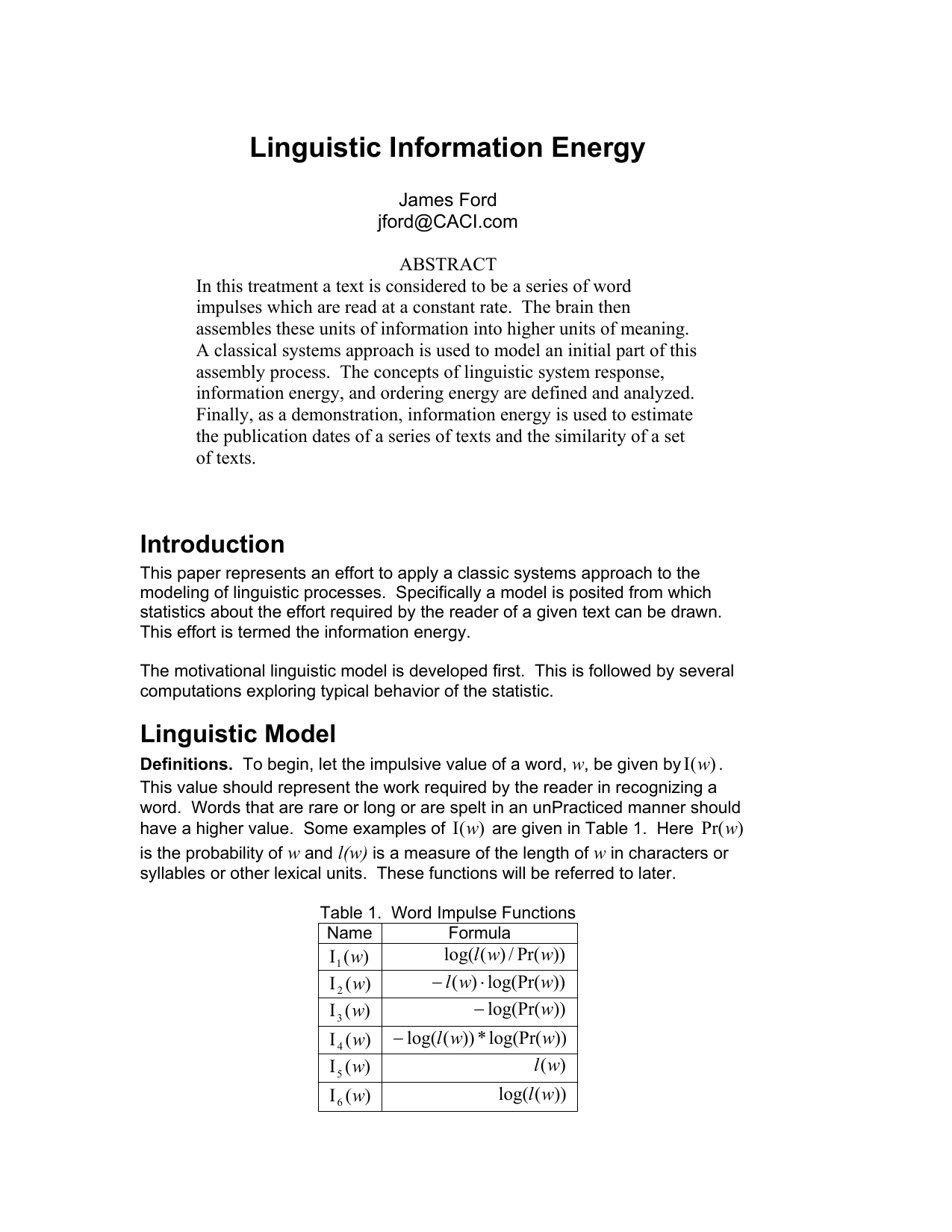

Definitions. To begin, let the impulsive value of a word, w, be given by ) (w Ι . This value should represent the work required by the reader in recognizing a word. Words that are rare or long or are spelt in an unPracticed manner should have a higher value. Some examples of ) (w Ι are given in Table 1. Here ) Pr(w is the probability of w and l(w) is a measure of the length of w in characters or syllables or other lexical units. These functions will be referred to later. Table 1. Word Impulse Functions Name Formula

is classically the amount of information resolved by the occurrence of word w. ) (t τ Μ is the linguistic system response (LSR) of a single word with an impulse value of 1 although in reality a reader would not continue to read after reading the only word in a single word text. The LSR for a text is then

where * is the standard convolution operator [1].

If the LSR is the minimum attention level required to read a text at time t and the energy required to maintain that attention level is equal to it’s square, then the average information energy required by a finite text is

where T is the time extent of the text. Note that a more general term for this might be decoding energy since the exact choice of word impulse function ) (w Ι hasn’t been specified. However, the term information energy will be used throughout this paper.

The information energy defined here is not related to the information energy of Onicescu [2]. With the exception of the first word, evaluation of the LSR will be the evaluation of the sum of two random variables

and this sum will have the density shown in Figure 1. The expected energy for this sum of variables will be

Thus the expected information energy will be

where the first term in the brackets represents the evaluation of the first word. Note that the termination symbol for the text is not evaluated as a separate word, the reader merely stops reading.

Let O be an ordering applied to a text T such that if

. The ordering energy of the text is defined as

Ordering energy is identically zero for 1 = τ and can be zero for 1 > τ since the ordering O may not change the word order. But it can also be negative. As an example consider the text In practice the ordering energy will be zero 3%-4% of the time. The largest such sentence found thus far, without repeating words, had 5 words (example: “How can they do it”). The author will NOT be offering a prize for the largest non-trivial sentence found with an ordering energy of zero. However, it would be interesting to know.

Typically ordering energy isn’t used by itself. Instead, the two statistics unordered information energy IE(T) and ordered information energy IE(O(T)) are handed over in raw form for further processing.

T be a text with two alternating words 2 1 , w w and cardinality N which is even. As in the previous example, using an MSF of the form ) ( , 2 t M ∞ given by (1), the ordering energy is

Datasets. Two datasets, the American National Corpus 2 nd edition (ANC) [3] and a so called plain text corpus (PTC) constructed by the author, were used to examine the information energy of English.

The PTC is a collection of texts written in a “conversational” style. There are no government reports or scientific papers. There are no spoken transcripts, although some of the texts have quoted dialog uttered by their characters. Currently there are 58 texts 8 of which are shared with the ANC where they were labeled as either fiction or non-fiction/OUP. All were written by a single author. About 75% were written after 1900. The earliest text dates from 1529. Most came from Project Gutenberg [4].

The word probabilities for the word impulse function were calculated from the non-shared parts of both the ANC and PTC.

No pre-processing occurred other than the replacement of all numbers with a number symbol. Indeed it is the alternation of what are commonly called stop words with words of high information value that give statistics such as ordering energy a grip on the text. Stemming could reduce the average length of words in a text and cause confusion between different writing styles depending upon which word impulse function was chosen.

Computation 1. The first computation is a calculation of the average persentence information energy on the first 400 sentences of each text in the PTC making a total of 23,600 sentences and 536,583 words. For this calculation, a sentence terminator was considered the last word or symbol read by the reader with a length of 1 and a probability equal to that of a sentence. The impul

This content is AI-processed based on open access ArXiv data.